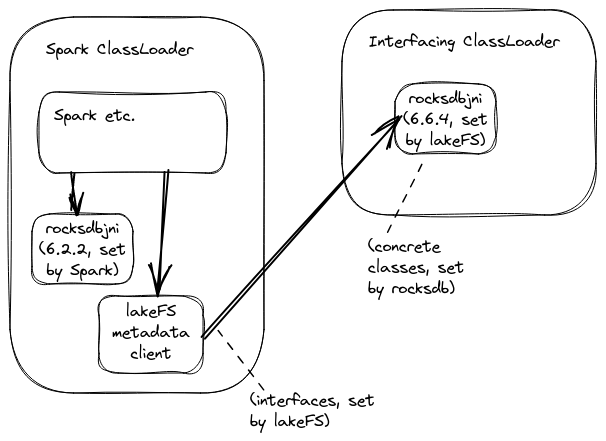

# Loading `rocksdbjni` twice

## Introduction

The lakeFS Spark metadata client uses the `rocksdbjni` library to read and
parse RocksDB-format SSTables. This fails on Spark-as-a-service providers
(such as Databricks) whose Spark comes with an old version of this library
-- older versions do not support reading a single SSTable file without the
full DB. Spark appears to have no plug-in structure that helps here. All
JARs loaded by Spark or any package included in Spark are shared, and only
the first version accessed is loaded.

The normal resolution in the Spark universe for these issues is to provide
assembled JARs. During assembly one uses a shader to rename ("shade") the
desired version. When Spark loads the assembled JAR, the included version
has a different name so it is loaded separately, and everything can mostly
work.

There are multiple issues with shading, notably that shading does not work
with JNI-based libraries. This happens for two reasons:

1. The binaries ("`.so`s") are included in the JAR, and the Java code must
   be able to load them. Typically they get stored in the root directory,
   and shading has no effect on them -- the wrong binary is loaded.
1. Java types are encoded inside the symbols inside the binary; shading is
   not able to rename those (it cannot understand native binary formats).

As its name implies, `rocksdbjni` is a JNI-based library, so this applies.
[The RocksDB maintainers say](https://github.com/facebook/rocksdb/issues/7222):

> You would be best not to relocate the package names for RocksDB when you
> create your Uber jar.

## Shading the unshadeable

This is a design for a solution based on _dynamic loading_. Specifically,
we utilize the unique role of ClassLoaders in the JVM.
The Spark metadata client will use a special-purpose ClassLoader to load
`rocksdbjni`. Every ClassLoader can isolate its loaded classes and their
associated resources, allowing _both_ versions in the same JVM.

The client is loaded by the _Spark ClassLoader_ and loads the `rocksdbjni`
JAR from its _Interfacing ClassLoader_ (the name will be explained).

This creates a new difficulty. The Interfacing ClassLoader can expose any
class that it loads to the client code. But it is not possible for that
code to access that class directly without using reflection: it has no
(static) types!

Instead, we require the client code to define _interfaces_ that match each
`rocksdbjni` class used. The Interfacing ClassLoader needs to replace all
occurrences of a type with a matching interface.

## Rules (and limitations)

The Interfacing ClassLoader is constructed with a Map that tells it how to
translate _class names_ in the JAR to _interface_ classes the client knows
about. When it fetches a class object, it edits that class to expose only
the interfaces.

The JAR _does not implement_ these interfaces at the bytecode level. This
changes when the class is loaded, so the returned class does implement the
interface.

### Constructors still need reflection

The Interfacing ClassLoader returns a `java.lang.Class` at runtime, so
client code cannot even write a constructor call. It has to construct
objects by using reflection.

Instead of creating an object directly:

```scala
val options = new org.rocksdb.Options
```

client code fetches a class and calls its constructor dynamically:

```scala
val optionsClass: Class[_] = interfacingClassLoader.loadClass("org.rocksdb.Options")
// Class.newInstance is deprecated since Java 9; go through the Constructor object.
val options = optionsClass.getDeclaredConstructor().newInstance()
```

`getDeclaredConstructor` takes the constructor's parameter types and
`newInstance` takes its arguments, but obviously typechecking these will
only occur at runtime.
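
For a constructor that takes arguments, the reflective call might be sketched as follows. This is only an illustration under stated assumptions: `ReflectiveConstruction` and `construct` are hypothetical names, the object's own ClassLoader stands in for the Interfacing ClassLoader, and `java.lang.StringBuilder` stands in for a `rocksdbjni` class so that the sketch runs without the library.

```scala
object ReflectiveConstruction {
  // Assumption: stand-in for the Interfacing ClassLoader described above.
  val loader: ClassLoader = getClass.getClassLoader

  // Looks up a public constructor by its parameter types and invokes it.
  // Neither the parameter types nor the arguments are checked at compile time.
  def construct(className: String,
                paramTypes: Seq[Class[_]],
                args: Seq[AnyRef]): Any = {
    val cls: Class[_] = loader.loadClass(className)
    val ctor = cls.getConstructor(paramTypes: _*)
    ctor.newInstance(args: _*)
  }
}

// Usage with the stand-in class: equivalent to `new java.lang.StringBuilder("abc")`.
val sb = ReflectiveConstruction.construct(
  "java.lang.StringBuilder", Seq(classOf[String]), Seq("abc"))
```

Passing an argument of the wrong type (say, an `Integer` where the constructor expects a `String`) compiles without complaint and is reported only at runtime, as an `IllegalArgumentException` thrown by `Constructor.newInstance`.
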

Similarly for static functions, of course: the client must fetch them using
reflection.

### Classes are declared to implement their interfaces

If a class is to be translated to an interface, the ClassLoader marks it as
implementing that interface when it loads it.

This declaration is true _if_ the loaded class does indeed implement those
methods needed by the interface. This will be checked by the JVM but only
at run-time.

### Return types are safely covariant

The ClassLoader adds no code to translate return types: covariance is safe
and automatic. However it does change the method to return the interface:
the caller will not be aware of the real type.

Because Java has no declaration-site variance annotations on its types, in
general it is not possible to perform such type translation on complex
types (without performing slow two-way copies and other undesired and
unsafe edits). For instance, the ClassLoader does nothing when returning
container types such as arrays, functions, or generic containers, that
involve types which need translation. Fortunately the `rocksdbjni` methods
which we need do not have such "composite" return types, so this is less
important in the first phase.

### Parameter types are (at best) unsafely covariant

When a method parameter has a translated type, the client code cannot pass
that type: it only sees the translated interface type. So the ClassLoader
translates the parameter type to the interface type. It also adds code at
the top of the method that casts the incoming value to the original method
parameter type.

This translation is unsafe: if the client passes some other implementation
of the interface (as allowed by the interface!), the downcast fails with a
`ClassCastException`.

For example, if the class `org.rocksdb.Options` is mapped to the interface
`shading.rocksdb.Options`, and the `org.rocksdb.SstFileReader` constructor
has this signature:

```scala
package org.rocksdb

class SstFileReader(options: Options) ...
```

the ClassLoader returns an edited version that looks as if it had been
compiled from:

```scala
package org.rocksdb

class SstFileReader(options: shading.rocksdb.Options) ... {
  (CHECKCAST options, "Lorg/rocksdb/Options;")
  // ... the actual constructor code ...
}
```

We do not handle composite parameter types, just like for return types.
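
The unsafe downcast on translated parameters can be reproduced in plain Scala, without any bytecode editing. The sketch below is illustrative only and every name in it is hypothetical: `ShadedOptions` plays the role of the client-side interface, `RealOptions` the library class that the ClassLoader has marked as implementing it, and `asInstanceOf` is the source-level counterpart of the inserted cast.

```scala
// Hypothetical stand-ins for shading.rocksdb.Options and org.rocksdb.Options.
trait ShadedOptions
class RealOptions extends ShadedOptions
// Another implementation of the interface, written by the client.
class OtherOptions extends ShadedOptions

class Reader(options: ShadedOptions) {
  // Source-level equivalent of the cast added at the top of the method:
  // throws ClassCastException unless `options` really is a RealOptions.
  val real: RealOptions = options.asInstanceOf[RealOptions]
}

object DowncastDemo {
  // Returns true if constructing a Reader with these options succeeds.
  def accepts(options: ShadedOptions): Boolean =
    try { new Reader(options); true }
    catch { case _: ClassCastException => false }
}
```

Both calls typecheck, because both classes implement the interface, but only `DowncastDemo.accepts(new RealOptions)` succeeds; `DowncastDemo.accepts(new OtherOptions)` hits the `ClassCastException` at runtime.
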