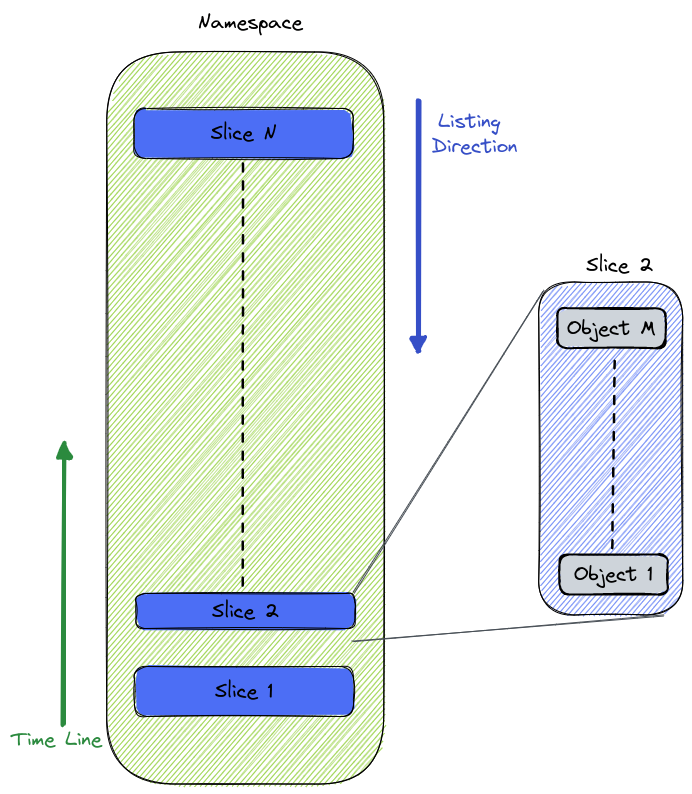

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/design/accepted/gc_plus/uncommitted-gc.md (about) 1 # Uncommitted Garbage Collector 2 3 ## Motivation 4 5 Uncommitted data which is no longer referenced (due to any staged data deletion or override, reset branch etc.) is not being deleted by lakeFS. 6 This may result in excessive storage usage and possible compliance issues. 7 To solve this problem two approaches were suggested: 8 1. A batch operation performed as part of an external process (GC) 9 2. An online solution inside lakeFS 10 11 Several attempts for an online solution have been made, most of which are documented [here](https://github.com/treeverse/lakeFS/blob/master/design/rejected/hard-delete.md). 12 This document will describe the **offline** GC process for uncommitted objects. 13 14 ## Design 15 16 Garbage collection of uncommitted data will be performed using the same principles of the current GC process. 17 The basis for this is a GC Client (i.e. _Spark_ job) consuming objects information from both lakeFS and directly from the underlying object storage, 18 and using this information to determine which objects be deleted from the namespace. 19 20 The GC process is composed of 3 main parts: 21 1. Listing namespace objects 22 2. Listing of lakeFS repository committed objects 23 3. Listing of lakeFS repository uncommitted objects 24 25 Objects that are found in 1 and are not in 2 or 3 can be safely deleted by the Garbage Collector. 26 27 ### 1. Listing namespace objects 28 29 For large repositories, object listing is a very time-consuming operation - therefore we need to find a way to optimize it. 30 The suggested method is to split the repository structure into fixed size (upper bounded) slices. 31 These slices can then be scanned independently using multiple workers. 32 In addition, taking advantage of the common property of the listing operation, which lists objects in a lexicographical order, we can create the slices in a manner which 33 enables additional optimizations on the GC process (read further for details). 34 To resolve indexing issues with existing repositories that have a flat filesystem, suggested to create these slices under a `data` prefix. 35 36  37 38 ### 2. Listing of lakeFS repository committed objects 39 40 Similar to the way GC works today, use repository meta-ranges and ranges to read all committed objects in the repository. 41 42 ### 3. Listing of lakeFS repository uncommitted objects 43 44 Expose a new API in lakeFS which writes repository uncommitted objects information into data formatted files and save them in a 45 dedicated path in the repository namespace 46 47 ### Required changes by lakeFS 48 49 The following are necessary changes in lakeFS in order to implement this proposal successfully. 50 51 #### Objects Path Conventions 52 53 Uncommitted GC must scan the bucket in order to find objects that are not referenced by lakeFS. 54 To optimize this process, suggest the following changes: 55 56 1. Store lakeFS data under `<namespace>/data/` path 57 2. Divide the repository data path into time-and-size based slices. 58 3. Slice name will be a time based, reverse sorted unique identifier. 59 4. LakeFS will create a new slice on a timely basis (for example: hourly) or when it has written < MAX_SLICE_SIZE > objects to the slice. 60 5. Each slice will be written by a single lakeFS instance in order to track slice size. 61 6. The sorted slices will enable partial scans of the bucket when running the optimized GC. 62 63 #### StageObject 64 65 The StageObject operation will only be allowed on addresses outside the repository's storage namespace. This way, 66 objects added using this operation are never collected by GC. 67 68 #### [Get/Link]PhysicalAddress 69 70 1. GetPhysicalAddress to return a validation token along with the address (or embedded as part of the address). 71 2. The token will be valid for a specified amount of time and for a single use. 72 3. lakeFS will need to track issued tokens/addresses, and delete them when tokens are expired/used 73 4. LinkPhysicalAddress to verify token valid before creating an entry. 74 1. Doing so will allow us to use this time interval to filter objects that might have been uploaded and waiting for 75 the link API and avoid them being deleted by the GC process. 76 2. Objects that were uploaded to a physical address issued by the API and were not linked before the token expired will 77 eventually be deleted by the GC job. 78 >**Note:** These changes will also solves the following [issue](https://github.com/treeverse/lakeFS/issues/4438) 79 80 #### Track copied objects in ref-store 81 82 lakeFS will track copy operations of uncommitted objects and store them in the ref-store for a limited duration. 83 GC will use this information as part of the uncommitted data to avoid a race between the GC job and rename operation. 84 lakeFS will periodically scan these entries and remove copy entries from the ref-store after such time that will 85 allow correct execution of the GC process. 86 87 #### S3 Gateway CopyObject 88 89 When performing a shallow copy - track copied objects in ref-store. 90 GC will read the copied objects information from the ref-store, and will add them to the list of uncommitted. 91 lakeFS will periodically clear the copied list according to timestamp. 92 93 1. Copy of staged object in the same branch will perform a shallow copy as described above 94 2. All other copy operations will use the underlying adapter copy operation. 95 96 #### CopyObject API 97 Clients working through the S3 Gateway can use the CopyObject + DeleteObject to perform a Rename or Move operation. 98 For clients using the OpenAPI this could have been done using StageObject + DeleteObject. 99 To continue support of this operation, introduce a new API to Copy an object similarly to the S3 Gateway functionality 100 101 #### PrepareUncommittedForGC 102 103 A new API which will create files from uncommitted object information (address + creation date). These files 104 will be saved to `_lakefs/retention/gc/uncommitted/run_id/uncommitted/` and used by the GC client to list repository's uncommitted objects. 105 At the end of this flow - read copied objects information from ref-store and add it to the uncommitted data. 106 For the purpose of this document we'll call this the `UncommittedData` 107 >**Note:** Copied object information must be read AFTER all uncommitted data was collected 108 109 ### GC Flows 110 111 The following describe the GC process run flows on a repository: 112 113 #### Flow 1: Clean Run 114 115 1. Listing namespace objects 116 1. List all objects directly from object store (can be done in parallel using the slices) -> `Store DF` 117 2. Skip slices that are newer than < TOKEN_EXPIRY_TIME > 118 2. Listing of lakeFS repository uncommitted objects 119 1. Mark uncommitted data 120 1. List branches 121 2. Run _PrepareUncommittedForGC_ on all branches 122 2. Get all uncommitted data addresses 123 1. Read all addresses from `UncommittedData` -> `Uncommitted DF` 124 >**Note:** To avoid possible bug, `Mark uncommitted data` step must complete before listing of committed data 125 3. Listing of lakeFS repository committed objects 126 1. Get all committed data addresses 127 1. Read all addresses from Repository commits -> `Committed DF` 128 4. Find candidates for deletion 129 1. Subtract committed data from all objects (`Store DF` - `Committed DF`) 130 2. Subtract uncommitted data from all objects (`Store DF` - `Uncommitted DF`) 131 3. Filter files in special paths 132 4. The remainder is a list of files which can be safely removed 133 5. Save run data (see: [GC Saved Information](#gc-saved-information)) 134 135 #### Flow 2: Optimized Run 136 137 Optimized run uses the previous GC run output, to perform a partial scan of the repository to remove uncommitted garbage. 138 139 ##### Step 1. Analyze Data and Perform Cleanup for old entries (GC client) 140 141 1. Read previous run's information 142 1. Previous `Uncommitted DF` 143 2. Last read slice 144 3. Last run's timestamp 145 2. Listing of lakeFS repository uncommitted objects 146 See previous for steps 147 3. Listing of lakeFS repository committed objects (optimized) 148 1. Read addresses from repository's new commits (all new commits down to the last GC run timestamp) -> `Committed DF` 149 4. Find candidates for deletion 150 1. Subtract `Committed DF` from previous run's `Uncommitted DF` 151 2. Subtract current `Uncommitted DF` from previous run's `Uncommitted DF` 152 3. The result is a list of files that can be safely removed 153 154 >**Note:** This step handles cases of objects that were uncommitted during previous GC run and are now deleted 155 156 ##### Step 2. Analyze Data and Perform Cleanup for new entries (GC client) 157 158 1. Listing namespace objects (optimized) 159 1. Read all objects directly from object store 160 2. Skip slices that are newer than < TOKEN_EXPIRY_TIME > 161 3. Using the slices, stop after reading the last slice read by previous GC run -> `Store DF` 162 2. Find candidates for deletion 163 1. Subtract `Committed DF` from `Store DF` 164 2. Subtract current `Uncommitted DF` from `Store DF` 165 3. Filter files in special paths 166 4. The remainder is a list of files which can be safely removed 167 3. Save run data (see: [GC Saved Information](#gc-saved-information)) 168 169 ### GC Saved Information 170 171 For each GC run, save the following information using the GC run id as detailed in this [proposal](https://github.com/treeverse/cloud-controlplane/blob/main/design/accepted/gc-with-run-id.md): 172 1. Save `Uncommitted DF` in `_lakefs/retention/gc/uncommitted/run_id/uncommitted/` (Done by _PrepareUncommittedForGC_) 173 2. Create and add the following to the GC report: 174 1. Run start time 175 2. Last read slice 176 3. Write report to `_lakefs/retention/gc/uncommitted/run_id/` 177 178 ## Limitations 179 180 * Since this solution relies on the new repository structure, it is not backwards compatible. Therefore, another solution will be required for existing 181 repositories 182 * Even with the given optimizations, the GC process is still very much dependent on the amount of changes that were made on the repository 183 since the last GC run. 184 185 ## Performance Requirements 186 187 TLDR; [Bottom Line](#minimal-performance-bottom-line) 188 189 The heaviest operation during the GC process, is the namespace listing. And while we added the above optimizations to mitigate 190 this process, the fact remains - we still need to scan the entire namespace (in the Clean Run mode). 191 As per this proposal, we've created an experiment, creating 20M objects in an AWS S3 bucket divided into 2K prefixes (slices), 10K objects in each prefix. 192 Performing a test against the bucket using a Databricks notebook on a c4.2xlarge cluster, with 16 workers we've managed to list the entire bucket in approximately 1 min. 193 194 Prepare Uncommitted for GC: 195 For 5M uncommitted objects on 1K branches, the object lists are divided into 3 files, as we are targeting the file size to be approximately 20MB. 196 It takes approximately 30 seconds to write the files, and uploading them to S3 will take ~1 min. using a 10 Mbps connection. 197 198 Reading committed and uncommitted data from lakeFS is very much dependent on the repository properties. Tests performed on very large repository with 199 ~120K range files (with ~50M distinct entries) and ~30K commits resulted in listing of committed data taking ~15 minutes. 200 201 Identifying the candidates for deletion is a minus operation between data frames, and should be done efficiently and its impact on the total runtime is negligible. 202 Deleting objects on S3 can be done in a bulk operation that allows passing up to 1K objects to the API. 203 Taking into account a HTTP timeout of 100 seconds per request, 10 workers and 1M objects to delete - the deletion process 204 should take at **most** around 2.5 hours 205 206 ### Minimal Performance Bottom Line 207 208 * On a repository with 20M objects 209 * 1K branches, 30K commits and 5M uncommitted objects 210 * 1M stale objects to be deleted 211 * Severe network latencies 212 213 **The entire process should take approximately 3 hours** 214 215