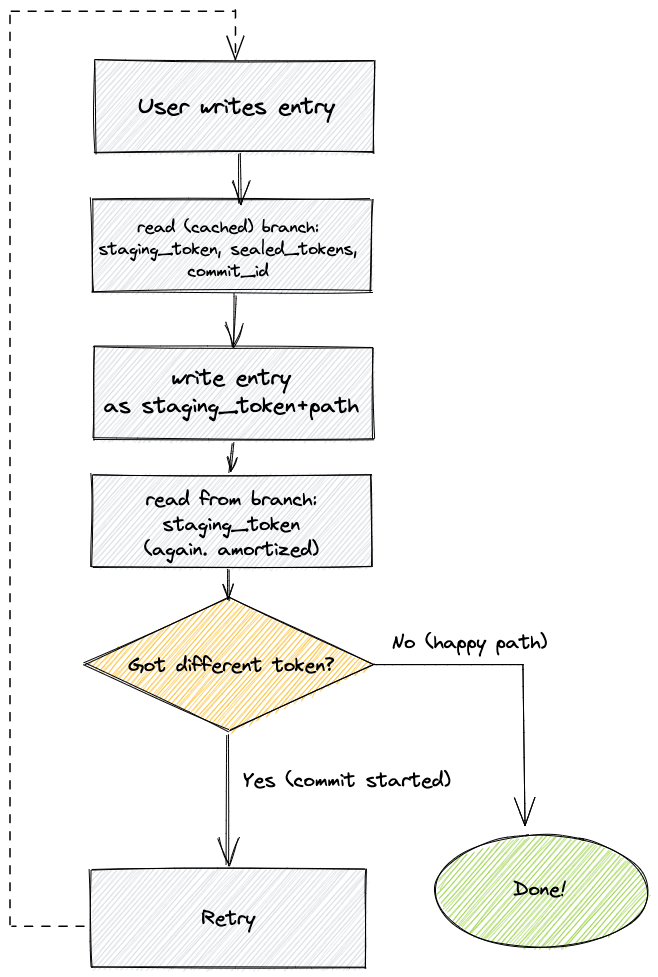

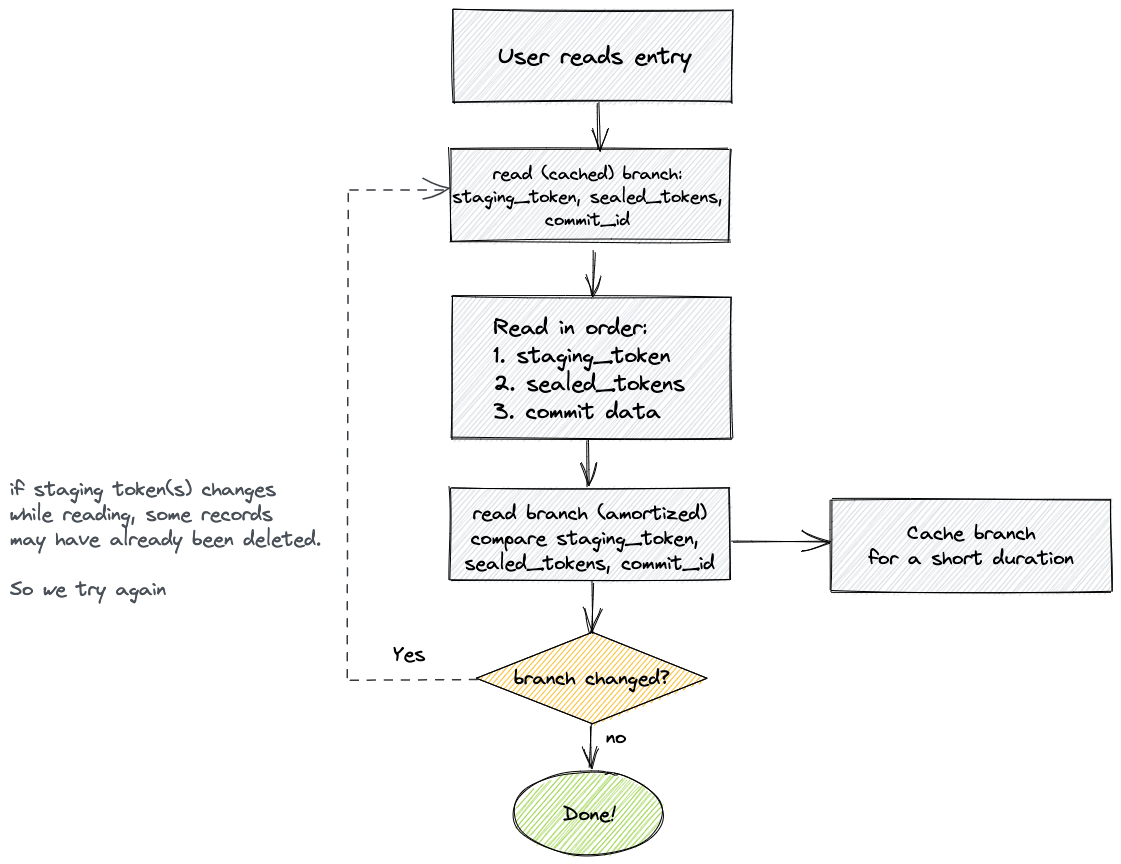

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/design/accepted/metadata_kv/index.md (about) 1 # Design proposal - lakeFS on KV 2 3 lakeFS stores 2 types of metadata: 4 5 1. Immutable metadata, namely committed entries - stored as ranges and metaranges in the underlying object store) 6 2. Mutable metadata, namely IAM entities, branch pointers and uncommitted entries 7 8 This proposal describes an alternative implementation for the **mutable** metadata. 9 Currently, this type of metadata is stored on (and relies on the guarantees provided by) a PostgreSQL database. 10 11 In this proposal, we detail a relatively narrow database abstraction - one that could be satisfied by a wide variety of database systems. 12 We also detail how lakeFS operates on top of this abstraction, instead of relying on PostgreSQL specific behavior 13 14 ## Goals 15 16 1. Make it easy to run lakeFS as a managed service in a multitenant environment 17 1. Allow running production lakeFS systems on top of a database that operations teams are capable of managing, as not all ops teams are versed in scaling PostgreSQL. 18 1. Increase users trust in lakeFS, in terms of positioning: PostgreSQL is apparently not the first DB that comes to mind in relation to scalability 19 1. Make lakeFS easier to experiment with: Allow running a local lakeFS server without any external dependencies (e.g. no more `docker-compose` in the quickstart) 20 21 ## Non-Goals 22 23 1. Improve performance, latency or throughput of the system 24 1. Provide new features, capabilities or guarantees that weren't previously possible in the lakeFS API/UI/CLI 25 26 ## Design 27 28 At the heart of the design, is a simple Key/Value interface, used for all mutable metadata management. 29 30 ### Semantics 31 32 Order operations by a "happens before" operation: operation A happened before operation B if A 33 finished before B started. If operation A happened before B then we also say that B happened 34 after A. (As usual, these are not a total ordering!) 35 36 #### Consistency guarantees 37 38 * If a write succeeds, successive reads and listings will return the contents of either that 39 write or of some other write that did not happen before it. 40 41 * A successful read returns the contents of some write that did not happen after it. 42 43 * A listing returns the contents of some writes that did not happen after it. 44 45 * A successful commit holds the contents of some listing of the entire keyspace. 46 47 * Mutating operations (commits and writes) may succeed or fail. When they fail, their contents 48 might still be visible. 49 50 #### Consistency **non**-guarantees 51 52 These guarantees do *not* give linearizability of any kind. In particular, these are some 53 possible behaviours. 54 55 1. **Impossible ordering by application logic (the "1-3-2 problem"):** I write an application 56 that reads a number from a file, increments it, and writes back the same file (this 57 application performs an unsafe increment). I start with the file containing "1", and run the 58 application twice concurrently. An observer (some process that repeatedly reads the file) 59 may observe the value sequence "1", "3", "2". If the observer commits each version, it can 60 create a **history** of these values in this order. 61 2. **Non-monotonicity (the "B-A-N-A-N-A-N-A-... problem :banana:"):** A file has contents "B". 62 I start a continuous committer (some process that repeatedly commits). Now I run two 63 concurrent updates: one updates the file contents to "N", the other updates the file contents 64 to "A". Different orderings can cause histories that look like "B", "A", "N", "A", "N", "A", 65 "N", ... to any length. 66 67 ### Key/Value Store interface 68 69 This is roughly the API: 70 71 ```go 72 type Store interface { 73 // Get returns a value for the given key, or ErrNotFound if key doesn't exist 74 Get(partitionKey, key []byte) (value []byte, err error) 75 // Scan returns an iterator that scans keys in byte order, starting at or after the `start` position 76 Scan(partitionKey, start []byte) (iter KeyValueIterator, err error) 77 // Set stores the given value, overwriting an existing value if one exists 78 Set(partitionKey, key, value []byte) error 79 // Delete will delete the key/value at key, if any 80 Delete(partitionKey, key []byte) error 81 // SetIf returns an ErrPredicateFailed error if the valuePredicate passed 82 // doesn't match the currently stored value. SetIf is a simple compare-and-swap operator: 83 // valuePredicate is either the existing value, or an opaque value representing it (hash, index, etc). 84 // this is intentianally simplistic: we can model a better abstraction on top, keeping this interface simple for implementors 85 SetIf(partitionKey, key, value, valuePredicate []byte) error 86 } 87 ``` 88 89 Note: This API is roughly the one needed and is subject to change/tweaking. 90 It is meant to illustrate the required capabilities in order to build a functioning lakeFS system on top. 91 92 #### KV requirements 93 94 - read-after-write consistency: a read that follows a successful write should return the written value or newer 95 - keys could be enumerated lexicographically, in ascending byte order 96 - supports a key-level conditional operation based on a current value - or essentially, allow modeling a CAS operator 97 98 #### Databases that meet these requirements (Examples): 99 100 - PostgreSQL 101 - MySQL 102 - Embedded Pebble/RocksDB (great option for a "quickstart" environment?) 103 - MongoDB 104 - AWS DynamoDB 105 - FoundationDB 106 - Azure Cosmos 107 - Azure Blob Store 108 - Google BigTable 109 - Google Spanner 110 - Google Cloud Storage 111 - HBase 112 - Cassandra (or compatible systems such as ScyllaDB) 113 - Raft (embedded, or server implementations such as Consul/ETCd) 114 - Persistent Redis (Redis Enterprise, AWS MemoryDB) 115 - Simple in-memory tree (for simplifying and speeding up tests?) 116 117 ### Data Modeling: IAM 118 119 The API that exposes and manipulates IAM entities is already modeled as a lexicographically ordered key value store (pagination is based on sorted entity IDs, which are strings). 120 121 Relationships are modeled as auxiliary keys. For example, modeling a user, a group and a membership would look something like this (pseudo code): 122 123 ```go 124 func WriteUser(kv Store, user User) error { 125 data := proto.MustMarshal(user) 126 kv.Set([]byte(fmt.Sprintf("iam/users/%s", user.ID)), data) 127 } 128 129 func WriteGroup(kv Store, group Group) error { 130 data := proto.MustMarshal(group) 131 kv.Set([]byte(fmt.Sprintf("iam/groups/%s", group.ID)), data) 132 } 133 134 func AddUserToGroup(kv Store, user User, group Group) error { 135 data := proto.MustMarshal(&Membership{GroupID: group.ID, UserID: user.ID}) 136 kv.Set([]byte(fmt.Sprintf("iam/user_groups/%s/%s", user.ID, group.ID)), data) 137 } 138 139 func ListUserGroups(kv Store, userID string) []string { 140 groupIds := make([]string, 0) 141 prefix := []byte(fmt.Sprintf("iam/user_groups/%s/", user.ID)) 142 iter := kv.Scan(prefix) 143 for iter.Next() { 144 pair := iter.Pair() 145 if !bytes.HasPrefix(pair.Key(), prefix) { 146 break 147 } 148 membership := Membership{} 149 proto.MustUnmarshal(&membership) 150 groupIds = append(groupId, membership.GroupID) 151 } 152 ... 153 return groupIds 154 } 155 ``` 156 157 It is possible to create a 2-way index for many-to-many relationships, but this is not generally required - it would be simpler to simply do a scan when needed and filter only the relevant values. 158 This is because the IAM keyspace is relatively very small - it would be surprising if the biggest lakeFS installation to ever exist would contain more than, say, 50k users. 159 160 Some care does need to be applied when managing these secondary indices - for example, when deleting an entity, secondary indices need to be pruned first, to avoid inconsistencies. 161 162 ### Graveler Metadata - Commits, Tags, Repositories 163 164 These are simpler entities - commits and tags are immutable and could potentially be stored on the object store and not in the key/value store (they are cheap either way, so it doesn't make much of a difference). 165 Repositories and tags, are also returned in lexicographical order, which map well to the suggested abstraction. Commits are usually returned using parent traversal, so no scanning takes place anyway. 166 167 There are no special concurrency requirements for these entities, apart for last-write-wins which is already the case for all modern stores. 168 169 ### Graveler Metadata - Branches and Staged Writes 170 171 This is where concurrency control gets interesting, and where lakeFS is expected to provide a **correct** system whose semantics are well understood (lakeFS currently [falls short](https://github.com/treeverse/lakeFS/issues/2405) in that regard). 172 173 Concurrency is more of an issue here because of how a commit works: when a commit starts, it scans the currently staged changes, applies them to the current commit pointed to by the branch, updating the branch reference and removing the staged changes it applied. 174 175 Getting this right means we have to take care of the following: 176 177 1. Ensure all staged changes that finished successfully before the commit started are applied as part of the commit (causality) 178 1. Ensure acknowledged writes end up either in a resulting commit, or staged to be committed (no lost writes) 179 180 To do this, we will employ 3 mechanisms: 181 182 1. [Optimistic Concurrency Control](https://en.wikipedia.org/wiki/Optimistic_concurrency_control) on the branch pointer using `SetIf()` 183 1. Reliance on write [idempotency](https://en.wikipedia.org/wiki/Idempotence) provided by Graveler (i.e., Writing the same exact entry, with the same identity - will not appear as a change) 184 1. Lock freedom (see [_non-blocking 185 algorithms_](https://en.wikipedia.org/wiki/Non-blocking_algorithm)) 186 187 #### Committer flow 188 189 We add an additional field to each `Branch` object: In addition to the existing `staging_token`, we add an array of strings named `sealed_tokens`. 190 191 1. get branch, find current `staging_token` 192 1. use `SetIf()` to update the branch (if not modified by another process): push existing `staging_token` into `sealed_tokens`, set new UUID as `staging_token`. The branch is assuming to be represented by a single key/value pair that contains the `staging_token`, `sealed_tokens` and `commit_id` fields. 193 1. take the list of sealed tokens, and using the [`CombinedIterator()`](https://github.com/treeverse/lakeFS/blob/master/pkg/graveler/combined_iterator.go#L11), turn them into a single iterator to be applied on top of the existing commit 194 1. [_optional_] Once the commit has been persisted (metaranges and ranges 195 stored in object store, commit itself stored to KV using `Set()`), 196 attempt a "fast-path commit": perform another `SetIf()` that updates the 197 branch key/value pair again: replacing its commit ID with the new value, 198 and clearing `sealed_tokens`, as these have materialized into the new 199 commit.n 200 1. If fast-path commit isn't used or its `SetIf()` fails, repeatedly attempt 201 a "full commit": Regardless of concurrent commits racing against this 202 one, the 2 `sealed_tokens` will overlap: a _suffix_ of the 203 to-be-committed `sealed_tokens` will be a _prefix_ of the current 204 `sealed_tokens` on the branch. 205 206 The 2 edge cases are: 207 208 * _identical_ `sealed_tokens`: no concurrent commits won a race against 209 this commit. 210 * _nonoverlapping_ `sealed_tokens`: a concurrent **later** commit won a 211 race against this commit and committed all data that it intended to 212 commit. This is an "empty commit" state, and can be handled as an 213 error or not depending on business logic. 214 215 In other cases a concurrent **earlier** commit won a race against this 216 commit and committed _some_ of its data. But it is still safe to commit: 217 trim the prefix of the current `sealed_tokens` that is a suffix of the 218 to-be-committed `sealed_tokens`, and `SetIf` the branch to this new 219 record. If this update fails, everything is correct and safe and we can 220 apply business logic: retry a new full commit multiple times or 221 immediately abort with an error. 222 223 The "overlapping `sealed_tokens`" commit method gives lock freedom (if we 224 count per-thread time as number of KV operations performed by the thread): 225 at least one thread makes progress after it makes 3 KV operations. 226 Furthermore, the number of retries that a thread can make is at most the 227 number of concurrent preceding commits. 228 229 230  231 232 #### Caching branch pointers and amortized reads 233 234 In the current design, for each read/write operation we add a single amortized read of the branch record as well. 235 Let's define an "amortized read" as the act of batching requests for the same branch for a short duration, thus amortizing the DB lookup cost across those requests. 236 237 For this design, we don't want to change this, at least for most requests: Add 1 additional wait time for a KV lookup that could be amortized across requests for the same branch. 238 239 To do this, we introduce a small in-memory cache (can utilize the same caching mechanism that already exists for IAM). 240 Please note: this *does not violate consistency*, see the [Read flow](#reader-flow) and [Writer flow](writer-flow) below to understand how. 241 242 #### Writer flow 243 244 1. Read the branch's existing staging token: if branch exists in the cache, use it! Otherwise, do an amortized read (see [above](#caching-branch-pointers-and-amortized-reads)) and cache the result for a very short duration. 245 1. Write to the staging token received - this is another key/value record (e.g. `"graveler/staging/${repoId}/${stagingToken}/${path}"`) 246 1. Read the branch's existing staging token **again**. This is always an amortized read, not a cache read. If we get the same `staging_token` - great, no commit has *started while writing* the record, return success to the user. For a system with low contention between writes and commits, this will be the usual case. 247 1. If the `staging_token` *has* changed - **retry the operation**. If the previous write made it in time to be included in the commit, we'll end up writing a record with the same identity - an idempotent operation. 248 249  250 251 #### Reading/Listing flow 252 253 1. Read the branch's existing staging token(s): if branch exists in the cache, use it! Otherwise, do an amortized read (see [above](#caching-branch-pointers-and-amortized-reads)) and cache the result for a very short duration. 254 1. The length of `sealed_token` list will typically be *empty* or very small, see ((above)[#committer-flow]) 255 1. We now use the existing `CombinedIterator` to read through all staging tokens and underlying commit. 256 1. Read the branch's existing staging token(s) **again**. This is always an amortized read, not a cache read. If it hasn't changed - great, no commit has *started while reading* the record, return success to the user. For a system with low contention between writes and commits, this will be the usual case. 257 1. If it has changed, we're reading from a stale set of staging tokens. A committer might have already deleted records from it. Retry the process. 258 259  260 261 #### Important Note - exclusion duration 262 263 It is important to understand that the current pessimistic approach locks the branch for the entire duration of the commit. 264 This takes time proportional to the amount of changes to be committed and is unbounded. All writes to a branch are blocked for that period of time. 265 266 With the optimistic version, readers and writers end up retrying in during exactly 2 "constant time" operations during a commit: during the initial update with a new `staging_token`, and again when clearing `sealed_tokens`. 267 The duration of these operations is of course, not constant - it is (well, should be) very short, and not proportional to the size of the commit in any way. 268 269 ### Partitioning 270 271 Partitioning the KV key space designed to increase performance. The correctness of the KV interface without partitioning remains. 272 We rely on 2 assumptions for adding the partitioning: 273 1. lakeFS keys can be partitioned in a manner that doesn't require access to 2 partitions in a single operation. 274 The only operation of the API that handles more than a single key is `Scan` and there's no use-case for scanning more than a single partition. 275 1. Performance decreases if the keys are not partitioned. For example, a transaction on a kv Postgres table will lock the entire table 276 during the execution. Working on partitioned key-space will only block the single partition. 277 278 The KV store implementation is in charge of managing the partitioned storage. The user the KV store 279 is responsible for choosing the appropriate partitions. For example, the number of keys used for authentication 280 & authorization is relatively small, so using the same 'auth' partition for all auth entities is appropriate. 281 However, the number of entries (keys) under a single staging token is possibly the size of the entire repository, 282 so creating a partition for each staging token is the right strategy. 283 284 Guidelines: 285 - Partition keys are also a namespace for the key. i.e. the combination of (partitionKey,key) is unique in the KV database, 286 but (key) is not guaranteed to be unique. 287 - Although possible in some implementations, the interface will not support `dropPartition`. 288 A later addition of `dropPartition` could speed up commits by iterating over keys in a goroutine after the commit is done and not have to worry about iterator invalidation. 289 - New partition creation is implicit to keep the API free of additional `NewPartition` functionality. 290 291 ### Open Questions 292 293 1. Complexity cost - How complex is the system after implementing this? What would a real API look like? Serialization? 294 1. Performance - How does this affect critical path request latency? How does it affect overall system throughput? 295 1. Flexibility - Where could the narrow `Store` API become a constraint? What *won't* we be able to implement going forward due to lack of guarantees (e.g. no transactions)? 296 1. Alternatives - As this is also a solution to milestone #3, how does it fare against [known](https://github.com/treeverse/lakeFS/pull/1688) [proposals](https://github.com/treeverse/lakeFS/pull/1685)?