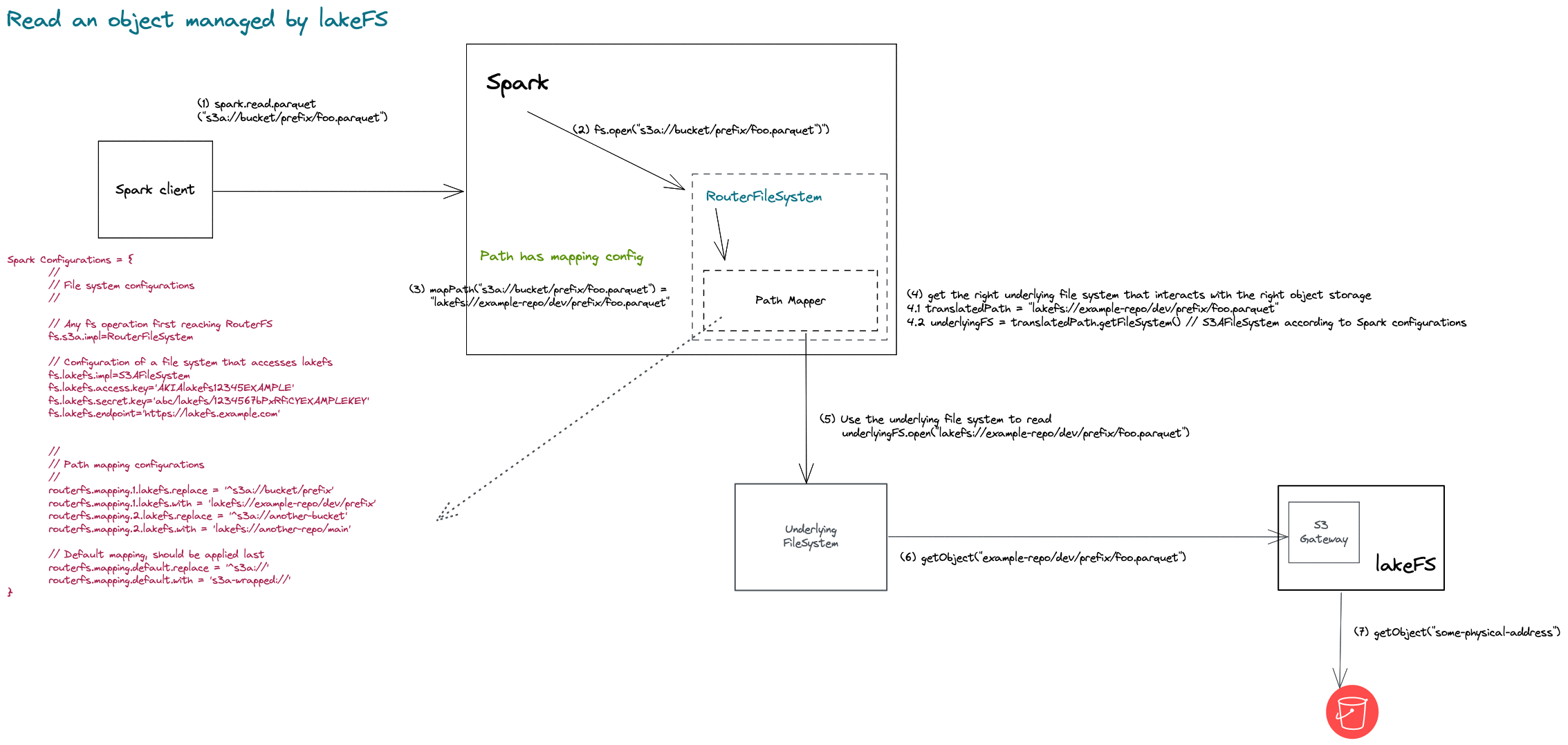

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/design/accepted/spark-co-exist-with-object-storages.md (about) 1 # Spark: co-existing with existing underlying object store - Design proposal 2 3 This document includes a proposed solution for https://github.com/treeverse/lakeFS/issues/2625. 4 5 ## Goals 6 7 ### Must do 8 9 1. Provide a configurable set of overrides to translate paths at runtime from an old object store location to a new lakeFS location. 10 2. Support any object storage that lakeFS supports. 11 3. Do something that adds the least amount of friction to a Spark user as possible. 12 13 ### Nice to have 14 15 Make the solution usable by non-lakeFS users by: 16 1. Supporting path translation from and to any type of path. 17 2. Make the solution a standalone component with no direct dependency on anything other than Spark itself. 18 19 ## Non-goals 20 21 1. Convert users from [accessing lakeFS from S3 gateway](../docs/integrations/spark.md#access-lakefs-using-the-s3a-gateway) to [accessing lakeFS with lakeFS-specific Hadoop filesystem](../docs/integrations/spark.md#access-lakefs-using-the-lakefs-specific-hadoop-filesystem). 22 23 ## Proposal: Introducing RouterFileSystem 24 25 We would like to implement a [HadoopFileSystem](https://github.com/apache/hadoop/blob/2960d83c255a00a549f8809882cd3b73a6266b6d/hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/fs/FileSystem.java) 26 that translates object store URIs into lakeFS URIs according to a configurable mapping, and uses a relevant (configured) Hadoop file system to 27 perform any file system operation. 28 29 For simplicity, this document uses `s3a` as the only URI scheme used inside the Spark application code, but this solution holds for any other URI scheme. 30 31 ### Handle any interaction with the underlying object store 32 33 To allow Spark users to integrate with lakeFS without changing their Spark code, we configure `RouterFileSystem` as the 34 file system for the URI scheme (or schemes) their Spark application is using. For example, for a Spark application that 35 reads and writes from S3 using `S3AFileSystem`, we configure `RouterFileSystem` to be the file system for URIs with `scheme=s3a`, as follows: 36 ```properties 37 fs.s3a.impl=RouterFileSystem 38 ``` 39 This will force any file system operation performed on an object URI with `scheme=s3a` to go through `RouterFileSystem` before it 40 interacts with the underlying storage. 41 42 ### URI translation 43 44 `RouterFileSystem` has access to a configurable mapping that maps any object store URI to any type of URI. This proposal 45 uses s3 object store paths (with URI `scheme=s3a`) and lakeFS paths as examples. 46 47 #### Mapping configurations 48 49 The mapping configurations are spark properties of the following form: 50 ```properties 51 routerfs.mapping.${toFsScheme}.${mappingIdx}.replace='^${fromFsScheme}://bucket/prefix' 52 routerfs.mapping.${toFsScheme}.${mappingIdx}.with='${toFsScheme}://another-bucket/prefix' 53 ``` 54 55 Where `mappingIdx` is an unbounded running index initialized for each `toFsScheme`, and `toFsScheme` is a URI scheme that 56 uses the `fs.toFsScheme.impl` property to point to the file system that handles the interaction with the underlying storage. 57 For example, the following mapping configurations 58 ```properties 59 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 60 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 61 ``` 62 together with 63 ```properties 64 fs.lakefs.impl=S3AFileSystem 65 ``` 66 make `RouterFileSystem` translate `s3a://bucket/prefix/foo.parquet` into `lakefs://example-repo/dev/prefix/foo.parquet`, 67 and later use `S3AFileSystem` to interact with the underlying object storage which is S3 in this case. This example uses 68 [S3 gateway](../docs/integrations/spark.md#access-lakefs-using-the-s3a-gateway) as the Spark-lakeFS integration method. 69 70 ##### Multiple mapping configurations 71 72 Each `toFsScheme` can have any number of mapping configurations. E.g., below are two mapping configurations for `toFsScheme=lakefs`. 73 ```properties 74 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 75 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 76 routerfs.mapping.lakefs.2.replace='^s3a://another-bucket' 77 routerfs.mapping.lakefs.2.with='lakefs://another-repo/main' 78 ``` 79 Mapping configurations applied in order, therefore in case of a conflict in mapping configurations the prior configuration 80 applies. 81 82 ##### Multiple `toFsScheme`s (and multiple mapping configuration groups) 83 84 With `RouterFileSystem`, Spark users can define any number of `toFsScheme`s. Each forms its own mapping configuration group, 85 and allows applying different set of Spark/Hadoop configurations. e.g. credentials, s3 endpoint, etc. Users would typically 86 define new `toFsScheme` while trying to migrate a collection from one storage space to another without changing their Spark code. 87 88 The example below demonstrates how routerFS mapping configurations and some Hadoop configurations will look like for 89 Spark application that accesses s3, MinIO, and lakeFS, but is using s3a as its sole URI scheme. 90 ```properties 91 # Mapping configurations 92 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 93 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 94 routerfs.mapping.s3a1.1.replace='^s3a://bucket' 95 routerfs.mapping.s3a1.1.with='s3a1://another-bucket' 96 routerfs.mapping.minio.1.replace='^s3a://minio-bucket' 97 routerfs.mapping.minio.1.with='minio://another-minio-bucket' 98 99 # File System configurations 100 # Implementation 101 fs.s3a.impl=RouterFileSystem 102 fs.lakefs.impl=S3AFileSystem 103 fs.s3a1.impl=S3AFileSystem 104 fs.minio.impl=S3AFileSystem 105 106 # Access keys, the example `toFsScheme=s3a1` but required for each configured `toFsScheme` 107 fs.s3a1.access.key=AKIAIOSFODNN7EXAMPLE 108 fs.s3a1.secret.key=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY 109 ``` 110 111 ##### Default mapping configuration 112 113 `RouterFileSystem` requires a default mapping configuration in case that none of the mappings matches a URI. 114 For example, the following default configuration states that in case or a URI with `scheme=s3a` that didn't match the 115 `^s3a://bucket/prefix` pattern, `RouterFileSystem` uses the default file system that's configured to be `S3AFileSystem`. 116 117 ```properties 118 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 119 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 120 121 # Default mapping, should be applied last 122 routerfs.mapping.s3a-default.replace='^s3a://' 123 routerfs.mapping.s3a-default.with='s3a-default://' 124 125 # File System configurations 126 fs.s3a.impl=RouterFileSystem 127 fs.lakefs.impl=S3AFileSystem 128 fs.s3a-default.impl=S3AFileSystem 129 ``` 130 131 This configuration is required because otherwise `RouterFileSystem` will get stuck in an infinite loop, by calling itself 132 as the file system for `scheme=s3a` in the example. 133 134 ### Invoke file system operations 135 136 After translating URIs to their final form, `RouterFileSystem` will use the translated path and its relevant file system to 137 perform file system operations against the relevant object store. See example in [Mapping configurations](#mapping-configurations). 138 139 ### Getting the relevant File System 140 141 `RouterFileSystem` uses the `fs.<toFsScheme>.impl=S3AFileSystem` Hadoop configuration and the FileSystem method 142 [path.getFileSystem()](https://github.com/apache/hadoop/blob/2960d83c255a00a549f8809882cd3b73a6266b6d/hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/fs/Path.java#L365) 143 to access the correct file system at runtime, according to user configurations. 144 145 ### Integrating with lakeFS 146 147 `RouterFileSystem` does not change the exiting [integration methods](../docs/integrations/spark.md#two-tiered-spark-support) 148 lakeFS and Spark have. that is, one can use both `S3AFileSystem` and `LakeFSFileSystem` and to read and write objects from lakeFS 149 (See configurations reference below). 150 151 #### Access lakeFS using S3 gateway 152 ```properties 153 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 154 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 155 ``` 156 together with 157 ```properties 158 fs.s3a.impl=RouterFileSystem 159 fs.lakefs.impl=S3AFileSystem 160 ``` 161 162 #### Access lakeFS using lakeFS-specific Hadoop FileSystem 163 164 ```properties 165 routerfs.mapping.lakefs.1.replace='^s3a://bucket/prefix' 166 routerfs.mapping.lakefs.1.with='lakefs://example-repo/dev/prefix' 167 ``` 168 together with 169 ```properties 170 fs.s3a.impl=RouterFileSystem 171 fs.lakefs.impl=LakeFSFileSystem 172 ``` 173 174 **Note** There is a (solvable) open item here: during its initialization, LakeFSFileSystem [dynamically fetches](https://github.com/treeverse/lakeFS/blob/276ee87fe41841589d631aaeec1c4859308001c1/clients/hadoopfs/src/main/java/io/lakefs/LakeFSFileSystem.java#L93) 175 the underlying file system from the repository storage namespace. The file system configurations above will make `LakeFSFileSystem` 176 fetch `RouterFileSystem` for storage namespaces on s3, preventing from `LakeFSFileSystem` delegate file system operations to 177 the right underlying file system (`S3AFileSystem`). 178 179 ### Examples 180 181 #### Read an object managed by lakeFS 182 183  184 185 #### Read an object directly from the object store 186 187  188 189 ### Pros & Cons 190 191 ### Pros 192 193 1. We already have experience developing Hadoop file systems, therefore, the ramp up should not be significant. 194 2. `RouterFileSystem` suggests much simpler functionality than what lakeFSFS supports (it only needs to receive calls, translate paths, and route to the right file system), which reduces the estimated number of unknowns unknowns. 195 3. It does not change anything related to the existing Spark<>lakeFS integrations. 196 4. `RouterFileSystem` can support non-lakeFS use-cases because it does not relay on any lakeFS client. 197 5. `RouterFileSystem` can be developed in a separate repo and delivered as a standalone OSS product, we may be able to contribute it to Spark. 198 6. `RouterFileSystem` does not rely on per-bucket configurations that are only supported for hadoop-aws (includes S3AFileSystem implementation) versions >= [2.8.0](https://hadoop.apache.org/docs/r2.8.0/hadoop-aws/tools/hadoop-aws/index.html#Configurations_different_S3_buckets). 199 200 ### Cons 201 202 1. Based on our experience with lakeFSFS, we already know that supporting a hadoop file system is difficult. There are many things that can go wrong in terms of dependency conflicts, and unexpected behaviours working with managed frameworks (i.e. Databricks, EMR) 203 2. It's complex. 204 3. `RouterFileSystem` is unaware of the number of mapping configurations every `toFsScheme` has and needs to figure this out at runtime. 205 4. `toFsScheme` may be a confusing concept. 206 5. Requires adjustments of [LakeFSFileSystem](../clients/hadoopfs/src/main/java/io/lakefs/LakeFSFileSystem.java), see [this](#access-lakefs-using-lakefs-specific-hadoop-filesystem) for a reference. 207 6. Requires discovery and documentation of limitations some fs operation have in case of overlapping configurations. For example, a recursive delete operation can map paths to different file systems: recursive deletion of /data, while one file system is the complete package, and /data/lakefs is mapped to lakeFS.