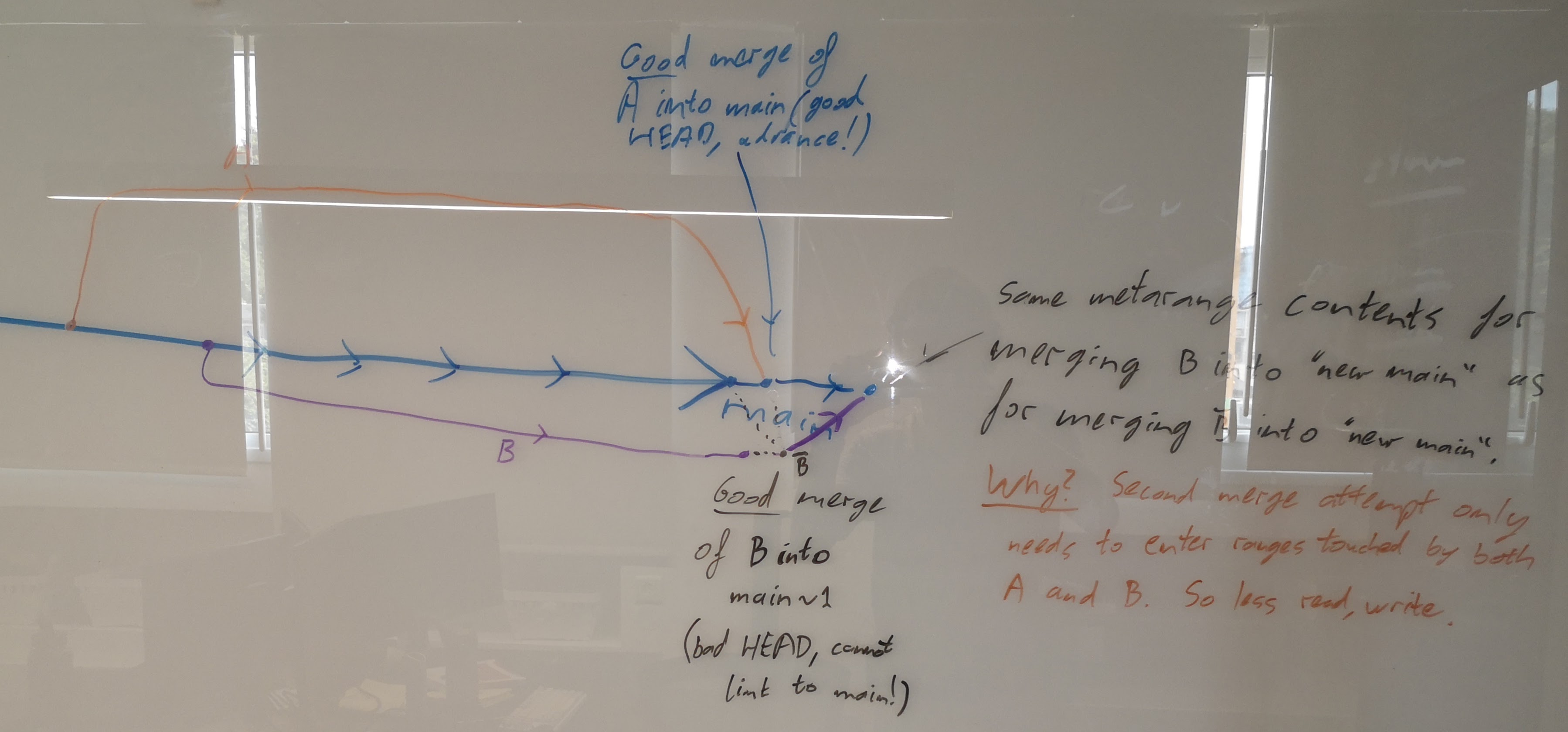

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/design/open/reuse-contented-merge-metaranges/reuse-contented-merge-metaranges.md (about) 1 # Proposal: Re-use metaranges to retry contended merges 2 3 ## Why 4 5 Large merges can be slow, and we are performing a lot of work to speed them 6 up. This design is to speed up the case when multiple branches merge 7 concurrently into the same destination branch. The goal is to speed up 8 concurrent merges on both non-KV and KV systems. 9 10 On **Postgres-based (non-KV) systems**, merges wait for each other on the 11 branch lock, slowing successive merges down. What makes it _worse_ is that 12 the destination branch advances but the source does not. So in each 13 successive concurrent merge the destination is even more divergent from the 14 base. 15 16 On **KV-based systems** (the near future!), there is no branch locker. 17 Concurrent merges race each other for completion. The winning merge updates 18 the destination branch, and _all other merges lose and have to restart_. So 19 on each concurrent merge, every successive merge attempt takes even longer. 20 21 This proposal cannot prevent concurrent merges from racing. Instead it 22 makes retries considerably more efficient. Any range that is affected by 23 only one concurrent merge will be written just _once_, no matter how many 24 times it is retried. So additionally retries of the merge that is writing 25 that range will be able to skip _reading_ its two sources on every retry. 26 This should speed everything up! 27 28 ### KV or not KV? 29 30 Importantly, this proposal is independent of whether or not we use KV. 31 _Before_ KV you can use it by removing the branch locker and making the 32 branch update operation conditional. And then it **improves** concurrent 33 merge performance by allowing parallel execution of almost all of the 34 time-consuming tasks. So it increases the amount of work performed but it 35 performs that work in parallel and reduces the latency of the slow 36 concurrent merges. 37 38 _After_ KV, not only does it restore performance to this improved case -- it 39 also removes much of the duplicate work that concurrent merges perform. 40 41 ## How 42 43 The basic idea is to allow retries of a concurrent merge to re-use previous 44 work. This is similar to the "overlapping `sealed_tokens`" full-commit 45 method of the [commit flow][commit_flow]. However here there are no staging 46 tokens; the previous work of a merge attempt is the metarange that it 47 generated. 48 49  50 51 There are two ways for a merge to fail: 52 * **Conflict:** The objects at some path on the merge base, source and 53 destination are all different and no strategy was specified to resolve it. 54 55 This failure is inherent and occurs regardless of additional concurrent 56 merges. It occurs while generating the merged metarange. It cannot be 57 fixed; correct behaviour is simply to report it to the user. 58 * **Losing a race:** No merge conflict occurred, but when trying to add the 59 resulting merge commit to the destination we discover that the HEAD of the 60 destination branch has changed. 61 62 This failure is due to losing a race against a concurrent merge or commit. 63 It must either be retried or abandoned (and, presumably, retried later). 64 65 Naturally only race failures are of interest. When a merge fails because of 66 a merge, it must be retried. However we can restart it using _the generated 67 merged metarange_ as source and the original destination as the base! (See 68 "Correctness" below for why this yields the correct result.) 69 70 The performance gain occurs during the retry: We expect the new source to 71 share many ranges with the desired result and with the destination: 72 73 * A range that was the same in the base and the destination, but changed 74 only between base and original source will still generally be the same in 75 the base and destination. And rewriting it from the new source yields the 76 same range -- it will be reused in the result. 77 78 **Result:** The range created in the first attempt will be recreated in 79 the second attempt, and will not need to be uploaded to the backing object 80 store. (We might later decide to cache the merged range result in memory, 81 which will let us skip even recreating that range!) 82 * A range that was the same in the base and the source, but changed only 83 between base and original destination, will still be unchanged to the new 84 source. No range needs to be read or generated -- we did not slow this 85 case down. 86 * A range that changed in all of base, old source, and old destination, will 87 still need to be read and analyzed. But the resulting range will be 88 unchanged from the new source, so the merge is trivial: only changes from 89 the old to new destination need be applied, and we expect many ranges not 90 to have such changes. 91 92 One example where this assumption holds is when merging a branch that 93 branched out a while back. The first merge will bring in everything that 94 has changed in other areas of the repository, so the second and following 95 merges should be much smaller. 96 97 Another example where we _expect_ this to work well is repeated multi-object 98 writes that are powered by merges: These will have frequent merges that 99 consist solely of a "directory", which is just consecutive or 100 nearly-consecutive files. Regular writes use a new "directory" and will 101 quickly start using separate ranges. Overwrites write to the same 102 "directory" each time, and will continue to race other overwites to that 103 directory -- but _not_ with concurrent writes to _other_ directories. This 104 use-case is _currently in progress_ for Spark with the lakeFS 105 OutputCommitter, and we will likely perforn it for Iceberg and probably 106 Delta. 107 108 ### Alternative 109 110 Rather than immediately retry a merge that loses a race, lakeFS could return 111 a failure with an additional "hints" field containing the resulting 112 metarange ID. The client can then try again, supplying lakeFS with the same 113 hints. This allows lakeFS to use the correct metarange in the retry.[^1] 114 115 This improves client control of retries. In some cases it may cause retries 116 to be load-balanced onto a less busy lakeFS, which might improve fairness. 117 118 ### Correctness 119 120 Why is it correct to use the new metarange? It is sufficient to show that 121 merging from the new metarange as source will yield the same sequence of 122 objects as merging from the old metarange. How this sequence is split into 123 ranges is important for efficiency, but yields an indistinguishable object 124 store. 125 126 In the sequel it helps to think of "deleted" objects that as having 127 particular contents. The behaviour of deleted objects in a merge regarding 128 results and conflicts is exactly the same as of objects with some particular 129 unique contents. 130 131 | base | src | dst/base' | result/src' | dst' | final result | comment | 132 |------|-----|-----------|-------------|------|--------------|--------------------------------| 133 | A | B | A | B | A | B | | 134 | A | A | B | B | B | B | | 135 | A | A | A | A | B | B | (Only) dst changed during race | 136 | A | B | C | conflict | -- | conflict | Conflicts never proceed | 137 | A | B | A | B | C | conflict | Conflict with updated dst | 138 | A | A | B | B | C | C | | 139 140 141 [commit_flow]: ../../accepted/metadata_kv/index.md#committer-flow 142 143 [^1]: lakeFS could sign the metarange in some way, to prevent clients 144 attempting to cheat.