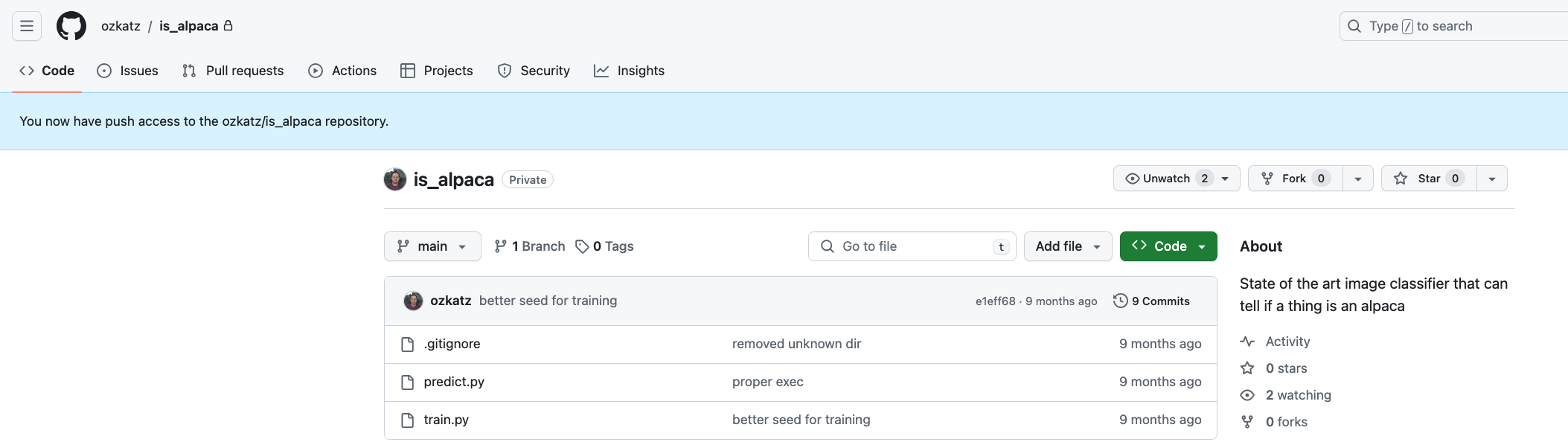

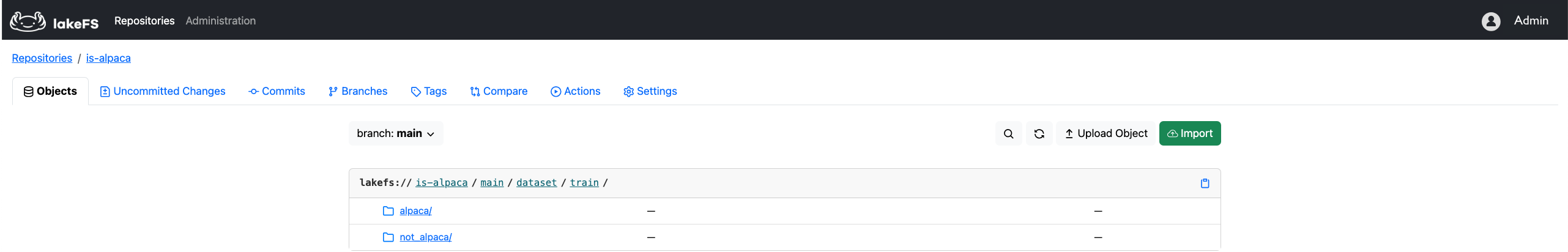

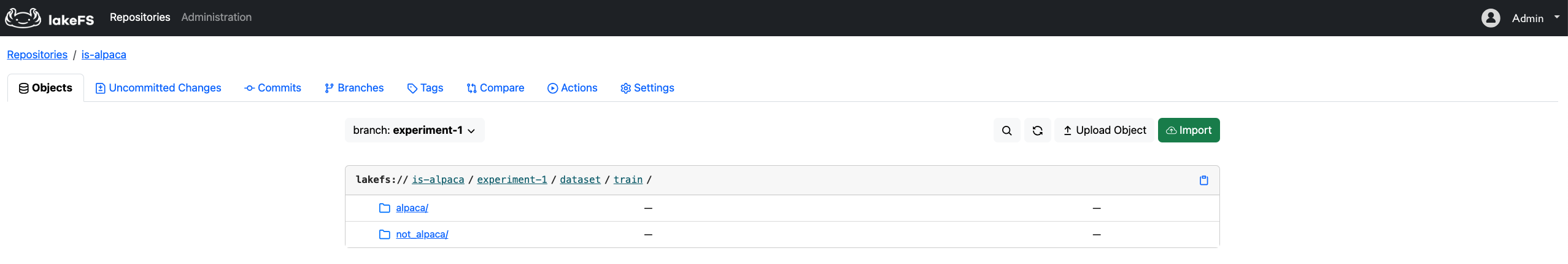

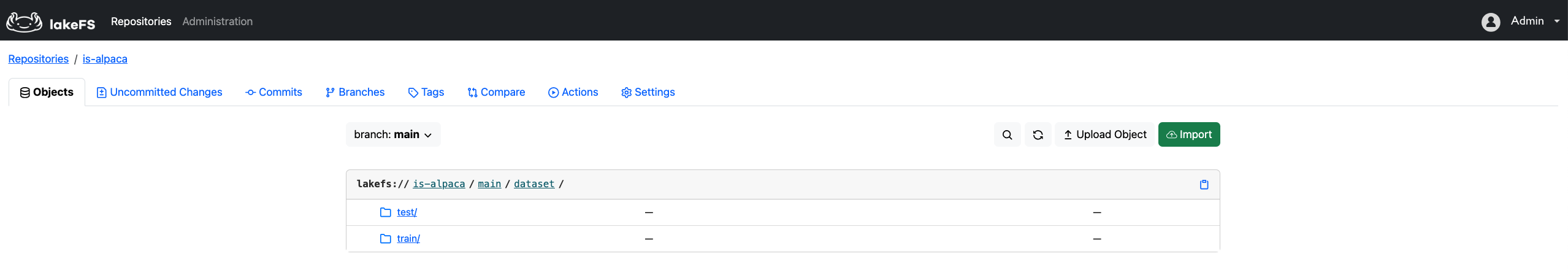

---
title: Work with lakeFS data locally
description: How to work with lakeFS data locally
parent: How-To
---

# Working with lakeFS Data Locally

lakeFS is a data version control system designed to scale to billions of objects. The larger the data, the less
feasible it becomes to consume it from a single machine. lakeFS addresses this challenge by enabling efficient management
of large-scale data stored remotely. In addition to its capability to manage large datasets, lakeFS offers the flexibility
to perform partial checkouts when necessary for working with specific portions of the data locally.

This page explains `lakectl local`, a command that lets you clone specific portions of lakeFS' data to your local environment
and keep remote and local locations in sync.

{% include toc.html %}

## Use cases

### Local development of ML models

The development of machine learning models is a dynamic and iterative process, including experimentation with various data versions,
transformations, algorithms, and hyperparameters. To optimize this iterative workflow, experiments must be conducted with speed,
ease of tracking, and reproducibility in mind. Localizing the model data during development
**accelerates the development process** by enabling interactive and offline development and reducing data access latency.

The local availability of data is required to **seamlessly integrate data version control systems and source control systems**
like Git. This integration is vital for achieving model reproducibility, allowing for a more efficient and collaborative
model development environment.

### Data Locality for Optimized GPU Utilization

Training Deep Learning models requires expensive GPUs.
In the context of running such programs, the goal is to optimize
GPU usage and prevent them from sitting idle. Many deep learning tasks involve accessing images, and in some cases, the
same images are accessed multiple times. Localizing the data can eliminate redundant round trips to remote
storage, resulting in cost savings.

## **lakectl local**: The way to work with lakeFS data locally

The _local_ command of lakeFS' CLI _lakectl_ enables working with lakeFS data locally.
It allows cloning lakeFS data into a directory on any machine, syncing local directories with remote lakeFS locations,
and [seamlessly integrating lakeFS with Git](#example-using-lakectl-local-in-tandem-with-git).

Here are the available _lakectl local_ commands:

| Command | What it does | Notes |
|:--------|:-------------|:------|
| **[init](../reference/cli.md#lakectl-local-init)** | Connects a local directory to a lakeFS remote URI to enable data sync | To undo a directory init, delete the .lakefs_ref.yaml file created in the initialized directory |
| **[clone](../reference/cli.md#lakectl-local-clone)** | Clones lakeFS data from a path into an empty local directory and initializes the directory | A directory can only track a single lakeFS remote location, i.e., you cannot clone data into an already initialized directory |
| **[list](../reference/cli.md#lakectl-local-list)** | Lists directories that are synced with lakeFS | It is recommended to follow any _init_ or _clone_ command with a list command to verify its success |
| **[status](../reference/cli.md#lakectl-local-status)** | Shows remote and local changes to the directory and the remote location it tracks | |
| **[commit](../reference/cli.md#lakectl-local-commit)** | Commits changes from a local directory to the lakeFS branch it tracks | Uncommitted changes to directories connected to lakeFS remote locations will not be reflected in lakeFS until you run lakectl local _commit_. |
| **[pull](../reference/cli.md#lakectl-local-pull)** | Fetches latest changes from a lakeFS remote location into a connected local directory | |
| **[checkout](../reference/cli.md#lakectl-local-checkout)** | Syncs a local directory with the state of a lakeFS ref | |

**Note:** The data size you work with locally should be reasonable for smooth operation on a local machine, which is typically no larger than 15 GB.
{: .note }

## Example: Using _lakectl local_ in tandem with Git

We are going to develop an ML model that predicts whether an image is an Alpaca or not. Our goal is to improve the input
for the model. The code for the model is versioned by Git while the model dataset is versioned by lakeFS. We will
be using lakectl local to tie code versions to data versions to achieve model reproducibility.

### Setup
{: .no_toc}

To get started, we have initialized a Git repo called `is_alpaca` that includes the model code.

We also created a lakeFS repository and uploaded the _is_alpaca_ train [dataset](https://www.kaggle.com/datasets/sayedmahmoud/alpaca-dataset)
from Kaggle into it.

### Create an Isolated Environment for Experiments
{: .no_toc}

Our goal is to improve the model predictions.
To meet our goal, we will experiment with editing the training dataset.
We will run our experiments in isolation so that nothing changes until we are certain the data is improved and ready.

Let's create a new lakeFS branch called `experiment-1`. Our _is_alpaca_ dataset is accessible on that branch,
and we will interact with the data from that branch only.

On the code side, we will create a Git branch also called `experiment-1` to avoid polluting our main branch with a dataset
that is still being tuned.

### Clone lakeFS Data into a Local Git Repository
{: .no_toc}

Inspecting the `train.py` script, we can see that it expects its input in the `input` directory.
```python
#!/usr/bin/env python
import tensorflow as tf

input_location = './input'
model_location = './models/is_alpaca.h5'

def get_ds(subset):
    return tf.keras.utils.image_dataset_from_directory(
        input_location, validation_split=0.2, subset=subset,
        seed=123, image_size=(244, 244), batch_size=32)

train_ds = get_ds("training")
val_ds = get_ds("validation")

model = tf.keras.Sequential([
    tf.keras.layers.Rescaling(1./255),
    tf.keras.layers.Conv2D(32, 3, activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(2)])

# Fit and save
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
model.compile(optimizer='adam', loss=loss_fn, metrics=['accuracy'])
model.fit(train_ds, validation_data=val_ds, epochs=3)
model.save(model_location)
```

This means that to develop our model locally and experiment with it, we need the _is_alpaca_ dataset managed
by lakeFS to be available locally on that path. To do that, we will use the [`lakectl local clone`](../reference/cli.md#lakectl-local-clone)
command from our local Git repository root:
```shell
lakectl local clone lakefs://is-alpaca/experiment-1/dataset/train/ input
```
This command will do a diff between our local input directory (that did not exist until now) and the provided lakeFS path
and identify that there are files to be downloaded from lakeFS.
```shell
Successfully cloned lakefs://is-alpaca/experiment-1/dataset/train/ to ~/ml_models/is_alpaca/input

Clone Summary:

Downloaded: 250
Uploaded: 0
Removed: 0
```

Running [`lakectl local list`](../reference/cli.md#lakectl-local-list) from our Git repository root will show that the
`input` directory is now in sync with a lakeFS prefix (Remote URI), and which lakeFS version of the data (Synced Commit)
it is tracking:
```shell
is_alpaca % lakectl local list
+-----------+------------------------------------------------+------------------------------------------------------------------+
| DIRECTORY | REMOTE URI                                     | SYNCED COMMIT                                                    |
+-----------+------------------------------------------------+------------------------------------------------------------------+
| input     | lakefs://is-alpaca/experiment-1/dataset/train/ | 589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3 |
+-----------+------------------------------------------------+------------------------------------------------------------------+
```

### Tie Code Version and Data Version
{: .no_toc}

Now let's tell Git to stage the dataset we've added and inspect our Git branch status:
```shell
is_alpaca % git add input/
is_alpaca % git status
On branch experiment-1
Changes to be committed:
  (use "git restore --staged <file>..." to unstage)
        new file:   input/.lakefs_ref.yaml

Changes not staged for commit:
  (use "git add <file>..." to update what will be committed)
  (use "git restore <file>..." to discard changes in working directory)
        modified:   .gitignore
```

We can see that the `.gitignore` file changed, and that the files we cloned from lakeFS into the `input` directory are not
tracked by Git. This is intentional - remember that **lakeFS is the one managing the data**. But wait, what is this special
`input/.lakefs_ref.yaml` file that Git does track?
```shell
is_alpaca % cat input/.lakefs_ref.yaml

src: lakefs://is-alpaca/experiment-1/dataset/train/
at_head: 589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3
```
This file includes the lakeFS version of the **data** that the Git repository is currently pointing to.

Let's commit the changes to Git with:
```shell
git commit -m "added is_alpaca dataset"
```
By committing to Git, we tie the current code version of the model to the dataset version in lakeFS as it appears in
`input/.lakefs_ref.yaml`.

### Experiment and Version Results
{: .no_toc}

We ran the train script on the cloned input, and it generated a model. Now, let's use the model to predict whether an **axolotl** is an alpaca.

A reminder - this is what an axolotl looks like - not like an alpaca!

<img src="../assets/img/lakectl-local/axolotl.png" alt="axolotl" width="300"/>

Here are the (surprising) results:
```shell
is_alpaca % ./predict.py ~/axolotl1.jpeg
{'alpaca': 0.32112, 'not alpaca': 0.07260383}
```
We expected the model to predict "not alpaca" with more confidence, so let's try to improve it.
To do that, we will add additional
images of axolotls to the model input directory:
```shell
is_alpaca % cp ~/axolotls_images/* input/not_alpaca
```

To inspect the changes we made to our dataset, we will use [lakectl local status](../reference/cli.md#lakectl-local-status).
```shell
is_alpaca % lakectl local status input
diff 'local:///ml_models/is_alpaca/input' <--> 'lakefs://is-alpaca/589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3/dataset/train/'...
diff 'lakefs://is-alpaca/589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3/dataset/train/' <--> 'lakefs://is-alpaca/experiment-1/dataset/train/'...

╔════════╦════════╦════════════════════════════╗
║ SOURCE ║ CHANGE ║ PATH                       ║
╠════════╬════════╬════════════════════════════╣
║ local  ║ added  ║ not_alpaca/axolotl2.jpeg   ║
║ local  ║ added  ║ not_alpaca/axolotl3.png    ║
║ local  ║ added  ║ not_alpaca/axolotl4.jpeg   ║
╚════════╩════════╩════════════════════════════╝
```

At this point, the dataset changes are not yet tracked by lakeFS. We will validate that by looking at the uncommitted changes
area of our experiment branch and verifying it is empty.

To commit these changes to lakeFS we will use [lakectl local commit](../reference/cli.md#lakectl-local-commit):
```shell
is_alpaca % lakectl local commit input -m "add images of axolotls to the training dataset"

Getting branch: experiment-1

diff 'local:///ml_models/is_alpaca/input' <--> 'lakefs://is-alpaca/589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3/dataset/train/'...
upload not_alpaca/axolotl3.png ... done! [5.04KB in 679ms]
upload not_alpaca/axolotl2.jpeg ... done! [38.31KB in 685ms]
upload not_alpaca/axolotl4.jpeg ... done! [7.70KB in 718ms]

Sync Summary:

Downloaded: 0
Uploaded: 3
Removed: 0

Finished syncing changes. Perform commit on branch...
Commit for branch "experiment-1" completed.

ID: 0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6
Message: add images of axolotls to the training dataset
Timestamp: 2024-02-08 17:41:20 +0200 IST
Parents: 589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3
```

Looking at the lakeFS UI, we can see that the lakeFS commit includes metadata that tells us the code version of
the linked Git repository at the time of the commit.

Inspecting the Git repository, we can see that `input/.lakefs_ref.yaml` is pointing to the latest lakeFS commit `0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6`.

We will now re-train our model with the modified dataset and try again to predict whether an axolotl is an alpaca:
```shell
is_alpaca % ./predict.py ~/axolotl1.jpeg
{'alpaca': 0.12443, 'not alpaca': 0.47260383}
```
Results are indeed more accurate.

### Sync a Local Directory with lakeFS
{: .no_toc}

Now that we think that the latest version of our model generates reliable predictions, let's validate it against a test dataset
rather than against a single picture. We will use the test dataset provided by [Kaggle](https://www.kaggle.com/datasets/sayedmahmoud/alpaca-dataset).
Let's create a local `testDataset` directory in our Git repository and populate it with the test dataset.

Now, we will use [lakectl local init](../reference/cli.md#lakectl-local-init) to sync the `testDataset` directory with our lakeFS repository:
```shell
is_alpaca % lakectl local init lakefs://is-alpaca/main/dataset/test/ testDataset
Location added to /is_alpaca/.gitignore
Successfully linked local directory '/is_alpaca/testDataset' with remote 'lakefs://is-alpaca/main/dataset/test/'
```

And validate that the directory was linked successfully:
```shell
is_alpaca % lakectl local list
+-------------+-------------------------------------------------+------------------------------------------------------------------+
| DIRECTORY   | REMOTE URI                                      | SYNCED COMMIT                                                    |
+-------------+-------------------------------------------------+------------------------------------------------------------------+
| input       | lakefs://is-alpaca/main/dataset/train/          | 0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6 |
| testDataset | lakefs://is-alpaca/main/dataset/test/           | 0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6 |
+-------------+-------------------------------------------------+------------------------------------------------------------------+
```

Now we will tell Git to track the `testDataset` directory with `git add testDataset`. As we saw earlier, Git will only track the `testDataset/.lakefs_ref.yaml`
file for that directory rather than its content.

To see the difference between our local `testDataset` directory and its lakeFS location `lakefs://is-alpaca/main/dataset/test/`
we will use [lakectl local status](../reference/cli.md#lakectl-local-status):
```shell
is_alpaca % lakectl local status testDataset

diff 'local:///ml_models/is_alpaca/testDataset' <--> 'lakefs://is-alpaca/0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6/dataset/test/'...
diff 'lakefs://is-alpaca/0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6/dataset/test/' <--> 'lakefs://is-alpaca/main/dataset/test/'...

╔════════╦════════╦════════════════════════════════╗
║ SOURCE ║ CHANGE ║ PATH                           ║
╠════════╬════════╬════════════════════════════════╣
║ local  ║ added  ║ alpaca/alpaca (1).jpg          ║
║ local  ║ added  ║ alpaca/alpaca (10).jpg         ║
.        .        .
.        .        .
.        .        .
║ local  ║ added  ║ not_alpaca/not_alpaca (9).jpg  ║
╚════════╩════════╩════════════════════════════════╝
```

We can see that multiple files were locally added to the synced directory.

To apply these changes to lakeFS we will commit them:
```shell
is_alpaca % lakectl local commit testDataset -m "add is_alpaca test dataset to lakeFS"

Getting branch: experiment-1

diff 'local:///ml_models/is_alpaca/testDataset' <--> 'lakefs://is-alpaca/0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6/dataset/test/'...
upload alpaca/alpaca (23).jpg ... done! [113.81KB in 1.241s]
upload alpaca/alpaca (26).jpg ... done! [102.74KB in 1.4s]
. .
. .
upload not_alpaca/not_alpaca (42).jpg ... done! [886.93KB in 14.336s]

Sync Summary:

Downloaded: 0
Uploaded: 77
Removed: 0

Finished syncing changes. Perform commit on branch...
Commit for branch "experiment-1" completed.

ID: c8be7f4f5c13dd2e489ae85e6f747230bfde8e50f9cd9b6af20b2baebfb576cf
Message: add is_alpaca test dataset to lakeFS
Timestamp: 2024-02-10 12:31:53 +0200 IST
Parents: 0b376f01b925a075851bbaffacf104a80de04a43ed7e56054bf54c42d2c8cce6
```

Looking at the lakeFS UI, we see that our test data is now available in lakeFS.

Finally, we will commit the local changes to Git to link the state of the Git and lakeFS repositories.
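
The two-step order used in this section (lakeFS commit first, Git commit second) can be sketched as a small Python helper. This is only an illustration, not part of lakectl: the function names `build_sync_commands` and `run_sync` are hypothetical, and the sketch assumes that `lakectl` and `git` are on the `PATH` and that it runs from the Git repository root. It uses only the command forms shown on this page.

```python
import subprocess
from typing import List


def build_sync_commands(directory: str, message: str) -> List[List[str]]:
    """Return the commands in the order used on this page: lakeFS first, then Git.

    Hypothetical helper for illustration; it only assembles argument lists.
    """
    return [
        # 1. Upload local changes and commit them to the tracked lakeFS branch.
        ["lakectl", "local", "commit", directory, "-m", message],
        # 2. Stage the directory; Git tracks only its .lakefs_ref.yaml file.
        ["git", "add", directory],
        # 3. Record the updated data pointer alongside the code.
        ["git", "commit", "-m", message],
    ]


def run_sync(directory: str, message: str) -> None:
    """Run each command in order and stop at the first failure."""
    for command in build_sync_commands(directory, message):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the planned commands instead of executing them.
    for command in build_sync_commands("testDataset", "add is_alpaca test dataset"):
        print(" ".join(command))
```

Stopping at the first failure (`check=True`) means a failed lakeFS commit never gets followed by a Git commit.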

**Note:** While syncing a local directory with a lakeFS prefix, it is recommended to first commit the data to lakeFS and then
do a Git commit that will include the changes done to the `.lakefs_ref.yaml` for the synced directory. The reasoning is that
only after committing the data to lakeFS does the `.lakefs_ref.yaml` file point to a lakeFS commit that includes the added
content from the directory.
{: .note }

### Reproduce Model Results
{: .no_toc}

What if we wanted to re-run the model that predicted that an axolotl is more likely to be an alpaca?
This question translates into the question: "How do I roll back my code and data to the time before we optimized the train dataset?"
Which translates to: "What was the Git commit ID at this point?"

Searching our Git log we find this commit:
```shell
commit 5403ec29903942b692aabef404598b8dd3577f8a

    added is_alpaca dataset
```

So, all we have to do now is `git checkout 5403ec29903942b692aabef404598b8dd3577f8a` and we are good to reproduce the model results!

Check out our article about [ML Data Version Control and Reproducibility at Scale](https://lakefs.io/blog/scalable-ml-data-version-control-and-reproducibility/) for another example of how lakeFS and Git work seamlessly together.
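
To check which data version a given Git commit pins, the `.lakefs_ref.yaml` file can be read directly. The following is a minimal sketch, assuming the file contains only the flat `src` and `at_head` keys shown earlier on this page (real files may carry more fields). The helper names `parse_lakefs_ref` and `pinned_uri` are hypothetical and not part of lakectl. The second helper builds a URI with the commit ID in the ref position, the same form that appears in the `lakectl local status` output above.

```python
from typing import Dict

LAKEFS_SCHEME = "lakefs://"


def parse_lakefs_ref(text: str) -> Dict[str, str]:
    """Parse flat `key: value` lines, as seen in .lakefs_ref.yaml on this page."""
    ref: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so "lakefs://..." values stay intact.
        key, sep, value = line.partition(":")
        if sep:
            ref[key.strip()] = value.strip()
    return ref


def pinned_uri(ref: Dict[str, str]) -> str:
    """Replace the branch in `src` with the commit ID stored in `at_head`."""
    src = ref["src"]
    if not src.startswith(LAKEFS_SCHEME):
        raise ValueError(f"not a lakeFS URI: {src}")
    parts = src[len(LAKEFS_SCHEME):].split("/", 2)
    if len(parts) < 2:
        raise ValueError(f"URI has no ref component: {src}")
    repo = parts[0]
    path = parts[2] if len(parts) == 3 else ""
    return f"{LAKEFS_SCHEME}{repo}/{ref['at_head']}/{path}"


if __name__ == "__main__":
    sample = (
        "src: lakefs://is-alpaca/experiment-1/dataset/train/\n"
        "at_head: 589f87704418c6bac80c5a6fc1b52c245af347b9ad1ea8d06597e4437fae4ca3\n"
    )
    print(pinned_uri(parse_lakefs_ref(sample)))
```

The file contents for an older Git commit can be fed to this parser without checking it out, for example from the output of `git show <commit>:input/.lakefs_ref.yaml`.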