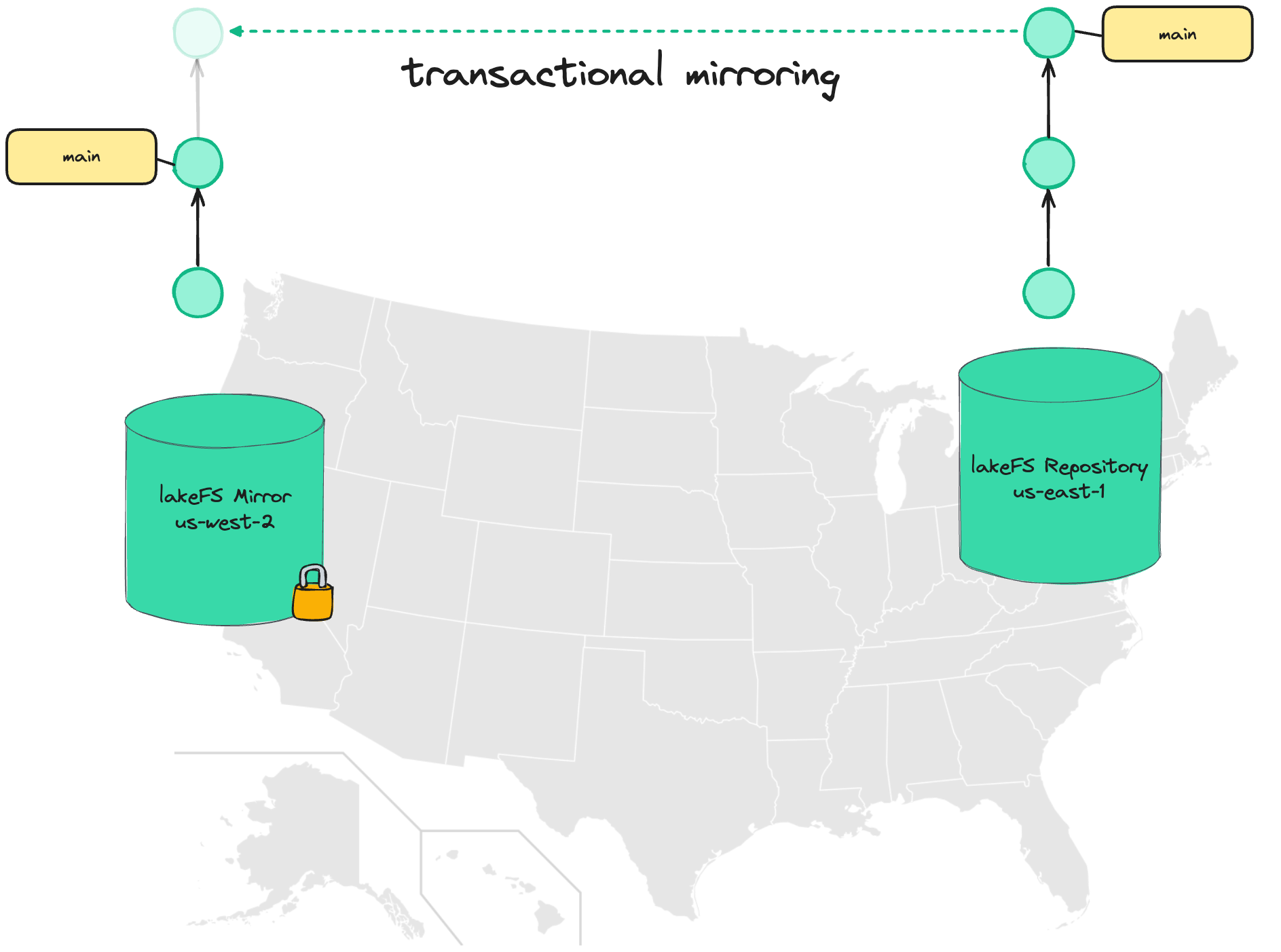

---
title: Mirroring
parent: How-To
description: Mirroring allows replicating commits between lakeFS installations in different geo-locations/regions
---

# Mirroring
{: .d-inline-block }
lakeFS Cloud
{: .label .label-green }
{: .d-inline-block }
Public Preview
{: .label .label-green }

{: .note}
> Mirroring is only available for [lakeFS Cloud]({% link understand/lakefs-cloud.md %}).


{% include toc.html %}


## What is lakeFS mirroring?

Mirroring in lakeFS allows replicating a lakeFS repository ("source") into read-only copies ("mirror") in different locations.

Unlike conventional mirroring, data isn't simply copied between regions - lakeFS Cloud tracks the state of each commit, advancing the commit log on the mirror only once a commit has been fully replicated and all data is available.


## Use cases

### Disaster recovery

Typically, object stores provide a replication/batch copy API to allow for disaster recovery: as new objects are written, they are asynchronously copied to other geo locations.

In the case of a regional failure, users can rely on the other geo locations, which should contain a relatively up-to-date state.

The problem is reasoning about what managed to arrive by the time of the disaster and what hasn't:
* have all the necessary files for a given dataset arrived?
* in cases where there are dependencies between datasets, are all dependencies also up to date?
* what is currently in flight, or hasn't even started replicating yet?

Reasoning about these is non-trivial, especially in the face of a regional disaster; however, ensuring business continuity might require that we have these answers.

Using lakeFS mirroring makes these questions much easier to answer: we are guaranteed that the latest commit that exists in the replica is in a consistent state and is fully usable. Even if it isn't the absolute latest commit, it still reflects a known, consistent point in time.


### Data locality

For certain workloads, it might be cheaper to have data available in multiple regions: expensive hardware such as GPUs might fluctuate in price, so we'd want to pick the region that currently offers the best pricing. The difference could easily offset the cost of the replicated data.

The challenge is reproducibility. Say we have an ML training job that reads image files from a path in the object store: which files existed at the time of training?

If data is constantly flowing between regions, this might be harder to answer than we think. And even if we know, how can we recreate that exact state if we want to run the process again (for example, to rebuild that model for troubleshooting)?

Using consistent commits solves this problem: with lakeFS mirroring, it is guaranteed that a commit ID, regardless of location, will always contain the exact same data.

We can train our model in region A and, a month later, feed the same commit ID into another region and get back the same results.


## Setting up mirroring

### Configuring bucket replication on S3

The objects within the repository are copied using your cloud provider's object store replication mechanism.
For AWS S3, please refer to the [AWS S3 replication documentation](https://docs.aws.amazon.com/AmazonS3/latest/userguide/replication-how-setup.html) to make sure your lakeFS repository's [storage namespace](../understand/glossary.html#storage-namespace) (source) is replicated to the region you'd like your mirror to be located in (target).

After setting the replication rule, new objects will be replicated to the destination bucket.
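
If you prefer the AWS CLI to the console, the replication rule can be sketched as a small shell script. This is a minimal sketch under stated assumptions, not an official lakeFS tool: the IAM role (which S3 must be allowed to assume for replication), the bucket names, and the prefix are placeholders, and `replication_config` / `enable_mirror_replication` are hypothetical helper names. It enables versioning on both buckets (S3 replication requires it), then attaches a rule to the source bucket that replicates everything under the storage namespace prefix, including delete markers, which matters for garbage collection as discussed later in this guide.

```bash
#!/usr/bin/env bash
# Sketch only: replicate a lakeFS storage namespace prefix from a source bucket
# to a destination bucket with the AWS CLI. Bucket names, prefix and the IAM
# role ARN are placeholders (assumptions); the role must already allow S3 replication.

# Print an S3 replication configuration (JSON) that copies every object under
# PREFIX to DEST_BUCKET, including delete markers.
replication_config() {
  local role_arn="$1" prefix="$2" dest_bucket="$3"
  cat <<EOF
{
  "Role": "${role_arn}",
  "Rules": [
    {
      "ID": "lakefs-mirror",
      "Status": "Enabled",
      "Priority": 1,
      "Filter": { "Prefix": "${prefix}" },
      "DeleteMarkerReplication": { "Status": "Enabled" },
      "Destination": { "Bucket": "arn:aws:s3:::${dest_bucket}" }
    }
  ]
}
EOF
}

# Enable versioning on both buckets (required by S3 replication), then attach
# the replication rule to the source bucket. Stops at the first failing call.
enable_mirror_replication() {
  local src_bucket="$1" dest_bucket="$2" dest_region="$3" prefix="$4" role_arn="$5"
  local config_file status
  config_file="$(mktemp)" || return 1
  replication_config "$role_arn" "$prefix" "$dest_bucket" > "$config_file"

  aws s3api put-bucket-versioning --bucket "$src_bucket" \
      --versioning-configuration Status=Enabled &&
    aws s3api put-bucket-versioning --bucket "$dest_bucket" --region "$dest_region" \
      --versioning-configuration Status=Enabled &&
    aws s3api put-bucket-replication --bucket "$src_bucket" \
      --replication-configuration "file://${config_file}"
  status=$?

  rm -f "$config_file"
  return "$status"
}

# Example (all values are placeholders):
#   enable_mirror_replication my-lakefs-source my-lakefs-target eu-west-1 \
#     repos/my-repo/ arn:aws:iam::123456789012:role/lakefs-replication
```

S3 replication keeps object keys unchanged, so objects under `s3://my-lakefs-source/repos/my-repo/` should arrive under `s3://my-lakefs-target/repos/my-repo/` in this example. Existing objects are not covered by the rule and still need to be copied separately.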

To replicate the existing objects, we'd need to copy them manually; however, we can use [S3 Batch Replication](https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-batch-replication-batch.html) to do this.


### Creating a lakeFS user with a "replicator" policy

On our source lakeFS installation, under **Administration**, create a new user that will be used by the replication subsystem.
The user should have the following [RBAC policy](../reference/security/rbac.html) attached:

```json
{
  "id": "ReplicationPolicy",
  "statement": [
    {
      "action": [
        "fs:ReadRepository",
        "fs:CreateRepository",
        "fs:UpdateRepository",
        "fs:DeleteRepository",
        "fs:ListRepositories",
        "fs:AttachStorageNamespace",
        "fs:ReadObject",
        "fs:WriteObject",
        "fs:DeleteObject",
        "fs:ListObjects",
        "fs:CreateCommit",
        "fs:CreateMetaRange",
        "fs:ReadCommit",
        "fs:ListCommits",
        "fs:CreateBranch",
        "fs:DeleteBranch",
        "fs:RevertBranch",
        "fs:ReadBranch",
        "fs:ListBranches"
      ],
      "effect": "allow",
      "resource": "*"
    }
  ]
}
```

**Alternatively**, we can create a policy with a narrower scope, only for a specific repository and/or mirror:

```json
{
  "id": "ReplicationPolicy",
  "statement": [
    {
      "action": [
        "fs:ListRepositories"
      ],
      "effect": "allow",
      "resource": "*"
    },
    {
      "action": [
        "fs:ReadRepository",
        "fs:ReadObject",
        "fs:ListObjects",
        "fs:ReadCommit",
        "fs:ListCommits",
        "fs:ReadBranch",
        "fs:ListBranches"
      ],
      "effect": "allow",
      "resource": "arn:lakefs:fs:::repository/{sourceRepositoryId}"
    },
    {
      "action": [
        "fs:ReadRepository",
        "fs:CreateRepository",
        "fs:UpdateRepository",
        "fs:DeleteRepository",
        "fs:AttachStorageNamespace",
        "fs:ReadObject",
        "fs:WriteObject",
        "fs:DeleteObject",
        "fs:ListObjects",
        "fs:CreateCommit",
        "fs:CreateMetaRange",
        "fs:ReadCommit",
        "fs:ListCommits",
        "fs:CreateBranch",
        "fs:DeleteBranch",
        "fs:RevertBranch",
        "fs:ReadBranch",
        "fs:ListBranches"
      ],
      "effect": "allow",
      "resource": "arn:lakefs:fs:::repository/{mirrorId}"
    },
    {
      "action": [
        "fs:AttachStorageNamespace"
      ],
      "effect": "allow",
      "resource": "arn:lakefs:fs:::namespace/{DestinationStorageNamespace}"
    }
  ]
}
```

Once the user has been created and the replication policy attached to it, create an access key ID and secret access key to be used by the mirroring process.


### Authorizing the lakeFS Mirror process to use the replication user

Please [contact Treeverse customer success](mailto:support@treeverse.io?subject=Setting up replication) to connect the newly created user with the mirroring process.


### Configuring repository replication

Replication has a stand-alone HTTP API. In this example, we'll use cURL, but feel free to use any HTTP client or library:

```bash
curl --location 'https://<ORGANIZATION_ID>.<SOURCE_REGION>.lakefscloud.io/service/replication/v1/repositories/<SOURCE_REPO>/mirrors' \
  --header 'Content-Type: application/json' \
  -u <ACCESS_KEY_ID>:<SECRET_ACCESS_KEY> \
  -X POST \
  --data '{
    "name": "<MIRROR_NAME>",
    "region": "<MIRROR_REGION>",
    "storage_namespace": "<MIRROR_STORAGE_NAMESPACE>"
  }'
```
Using the following parameters:

* `ORGANIZATION_ID` - The ID as it appears in the URL of your lakeFS installation (e.g. `https://my-org.us-east-1.lakefscloud.io/`)
* `SOURCE_REGION` - The region where our source repository is hosted
* `SOURCE_REPO` - Name of the repository acting as our replication source. It must already exist
* `ACCESS_KEY_ID` & `SECRET_ACCESS_KEY` - Credentials for your lakeFS user (make sure you have the necessary RBAC permissions as [listed below](#rbac))
* `MIRROR_NAME` - Name used for the read-only mirror to be created on the destination region
* `MIRROR_REGION` - The region in which the mirror will be created
* `MIRROR_STORAGE_NAMESPACE` - Location acting as the replication target for the storage namespace of our source repository

### Mirroring and Garbage Collection

Garbage collection won't run on mirrored repositories.
Instead, deletions made by garbage collection on the source should be replicated to the mirror:
1. Enable [delete marker replication](https://docs.aws.amazon.com/AmazonS3/latest/userguide/delete-marker-replication.html) on the source bucket.
1. Create a [lifecycle policy](https://docs.aws.amazon.com/AmazonS3/latest/userguide/object-lifecycle-mgmt.html) on the destination bucket to delete the objects that carry a delete marker.

## RBAC

These are the required RBAC permissions for working with the new cross-region replication feature:

Creating a Mirror:

| Action                      | ARN                                               |
|-----------------------------|---------------------------------------------------|
| `fs:CreateRepository`       | `arn:lakefs:fs:::repository/{mirrorId}`           |
| `fs:MirrorRepository`       | `arn:lakefs:fs:::repository/{sourceRepositoryId}` |
| `fs:AttachStorageNamespace` | `arn:lakefs:fs:::namespace/{storageNamespace}`    |

Editing Mirrored Branches:

| Action                | ARN                                               |
|-----------------------|---------------------------------------------------|
| `fs:MirrorRepository` | `arn:lakefs:fs:::repository/{sourceRepositoryId}` |

Deleting a Mirror:

| Action                | ARN                                     |
|-----------------------|-----------------------------------------|
| `fs:DeleteRepository` | `arn:lakefs:fs:::repository/{mirrorId}` |

Listing/Getting Mirrors for a Repository:

| Action                | ARN |
|-----------------------|-----|
| `fs:ListRepositories` | `*` |


## Other replication operations

### Listing all mirrors for a repository

```bash
curl --location 'https://<ORGANIZATION_ID>.<SOURCE_REGION>.lakefscloud.io/service/replication/v1/repositories/<SOURCE_REPO>/mirrors' \
  -u <ACCESS_KEY_ID>:<SECRET_ACCESS_KEY> -s
```

### Getting a specific mirror

```bash
curl --location 'https://<ORGANIZATION_ID>.<SOURCE_REGION>.lakefscloud.io/service/replication/v1/repositories/<SOURCE_REPO>/mirrors/<MIRROR_ID>' \
  -u <ACCESS_KEY_ID>:<SECRET_ACCESS_KEY> -s
```

### Deleting a specific mirror

```bash
curl --location --request DELETE 'https://<ORGANIZATION_ID>.<SOURCE_REGION>.lakefscloud.io/service/replication/v1/repositories/<SOURCE_REPO>/mirrors/<MIRROR_ID>' \
  -u <ACCESS_KEY_ID>:<SECRET_ACCESS_KEY>
```

## Limitations

1. Mirroring is currently only supported on [AWS S3](https://aws.amazon.com/s3/) and [lakeFS Cloud for AWS](https://lakefs.cloud).
1. Read-only mirrors cannot be written to. Mirroring is one-way, from source to destination(s).
1. Currently, only branches are mirrored. Tags and arbitrary commits that do not belong to any branch are not replicated.
1. [lakeFS Hooks](./hooks) will only run on the source repository, not its replicas.
1. Replication is still asynchronous: reading from a branch will always return a valid commit that the source has pointed to, but it is not guaranteed to be the **latest commit** the source branch is pointing to.
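
Because replication is asynchronous, it can be useful to check whether a mirrored branch has caught up with its source. The following is a minimal sketch, not an official tool. It assumes that each regional installation exposes the standard lakeFS REST API under `/api/v1` on the same `<ORGANIZATION_ID>.<REGION>.lakefscloud.io` hostname pattern used above, that the mirror keeps the source's branch names, and that `jq` is installed. The helper names (`branch_url`, `branch_head`, `replication_status`) are hypothetical. It reads the commit each branch points to through the lakeFS `GET /repositories/{repository}/branches/{branch}` endpoint and compares the two.

```bash
#!/usr/bin/env bash
# Sketch only: compare the head commit of a source branch with the same branch
# on its mirror. Assumes (not confirmed by this guide) that each regional
# installation serves the lakeFS REST API under /api/v1, and that jq is installed.

# Build the lakeFS API URL of a branch on a regional installation.
branch_url() {
  local org="$1" region="$2" repo="$3" branch="$4"
  printf 'https://%s.%s.lakefscloud.io/api/v1/repositories/%s/branches/%s\n' \
    "$org" "$region" "$repo" "$branch"
}

# Print the commit ID a branch points to.
# Usage: branch_head ACCESS_KEY_ID:SECRET_ACCESS_KEY ORG REGION REPO BRANCH
branch_head() {
  local creds="$1" body
  shift
  body="$(curl --silent --fail -u "$creds" "$(branch_url "$@")")" || return 1
  jq -r '.commit_id' <<<"$body"
}

# Report whether the mirror branch points to the same commit as the source.
# A difference normally means replication has not caught up yet.
replication_status() {
  local source_head="$1" mirror_head="$2"
  if [ "$source_head" = "$mirror_head" ]; then
    echo "in-sync ${mirror_head}"
  else
    echo "lagging source=${source_head} mirror=${mirror_head}"
  fi
}

# Example (placeholders; credentials may differ per regional installation):
#   src="$(branch_head "$SOURCE_CREDS" my-org us-east-1 my-repo main)"
#   dst="$(branch_head "$MIRROR_CREDS" my-org eu-west-1 my-mirror main)"
#   replication_status "$src" "$dst"
```

Comparing head commit IDs only tells you whether the branches match right now; since the mirror only advances to fully replicated commits, whatever it reports as its head is still safe to read.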