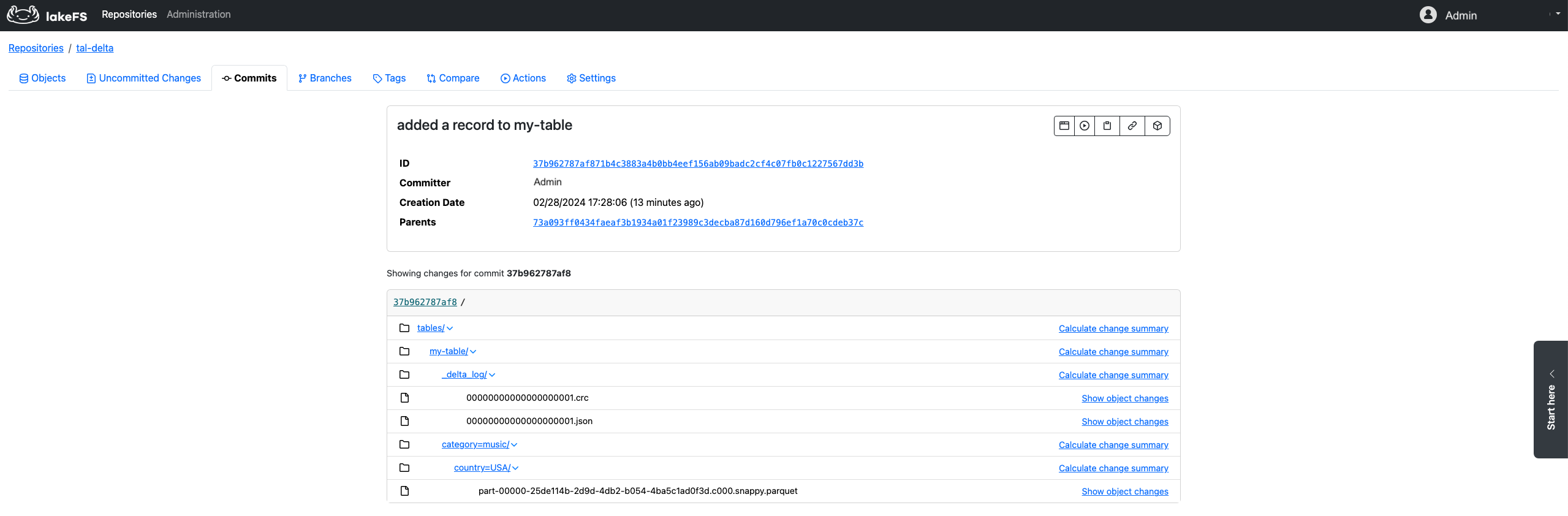

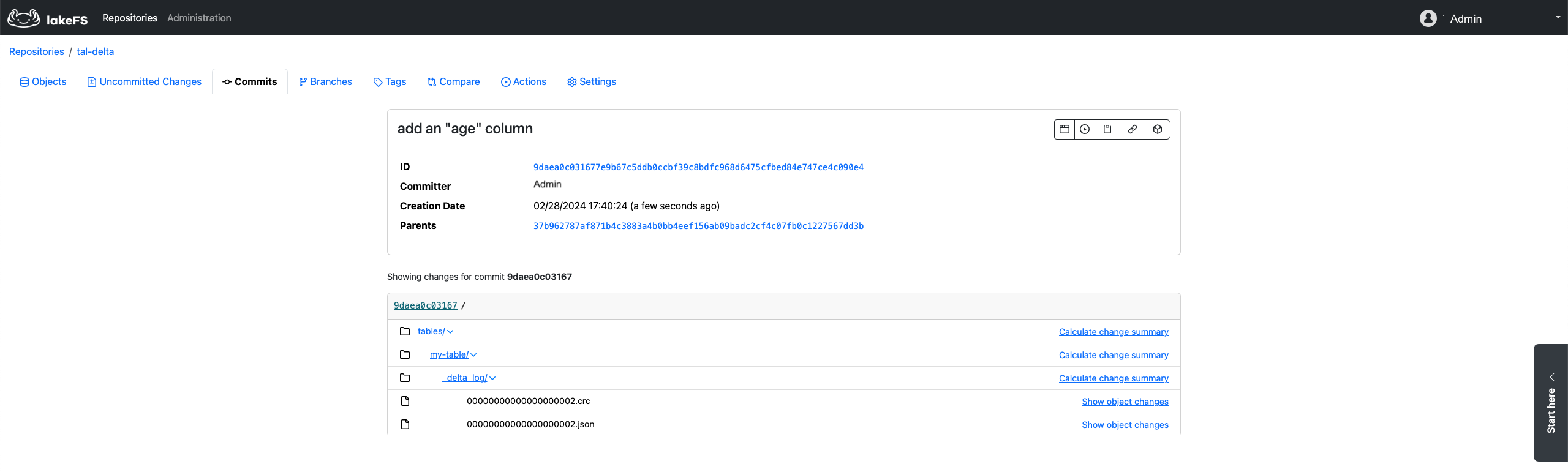

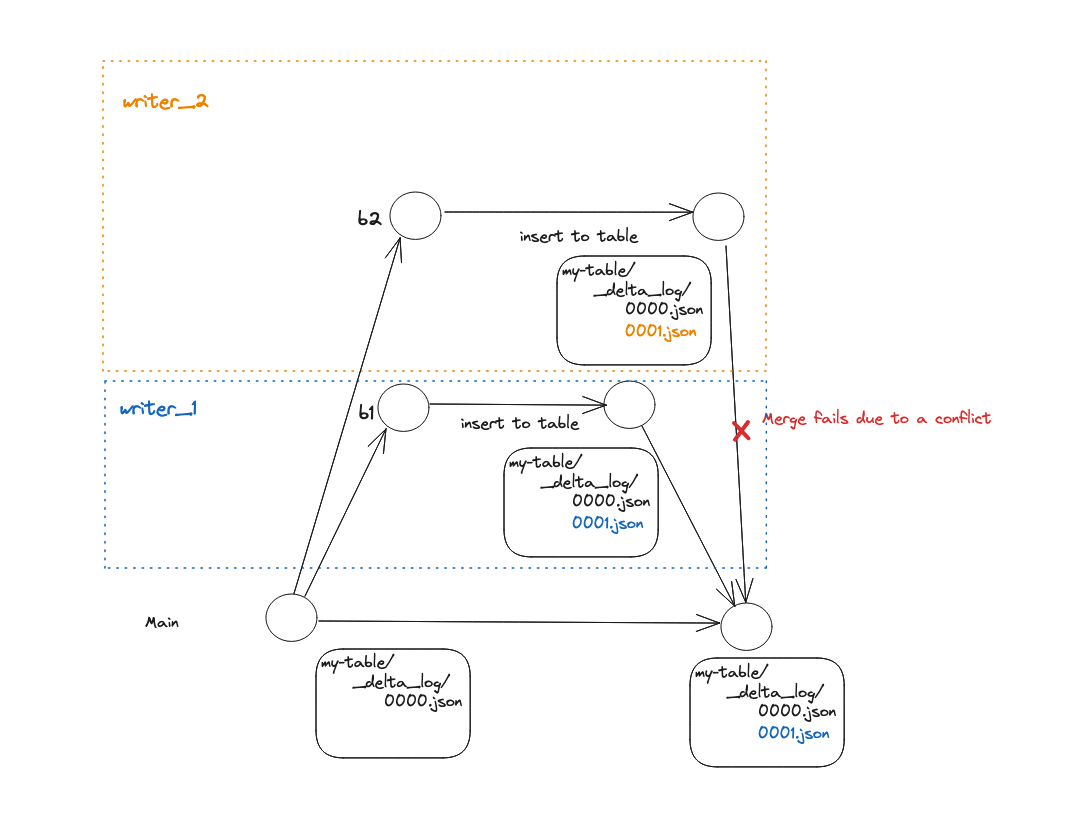

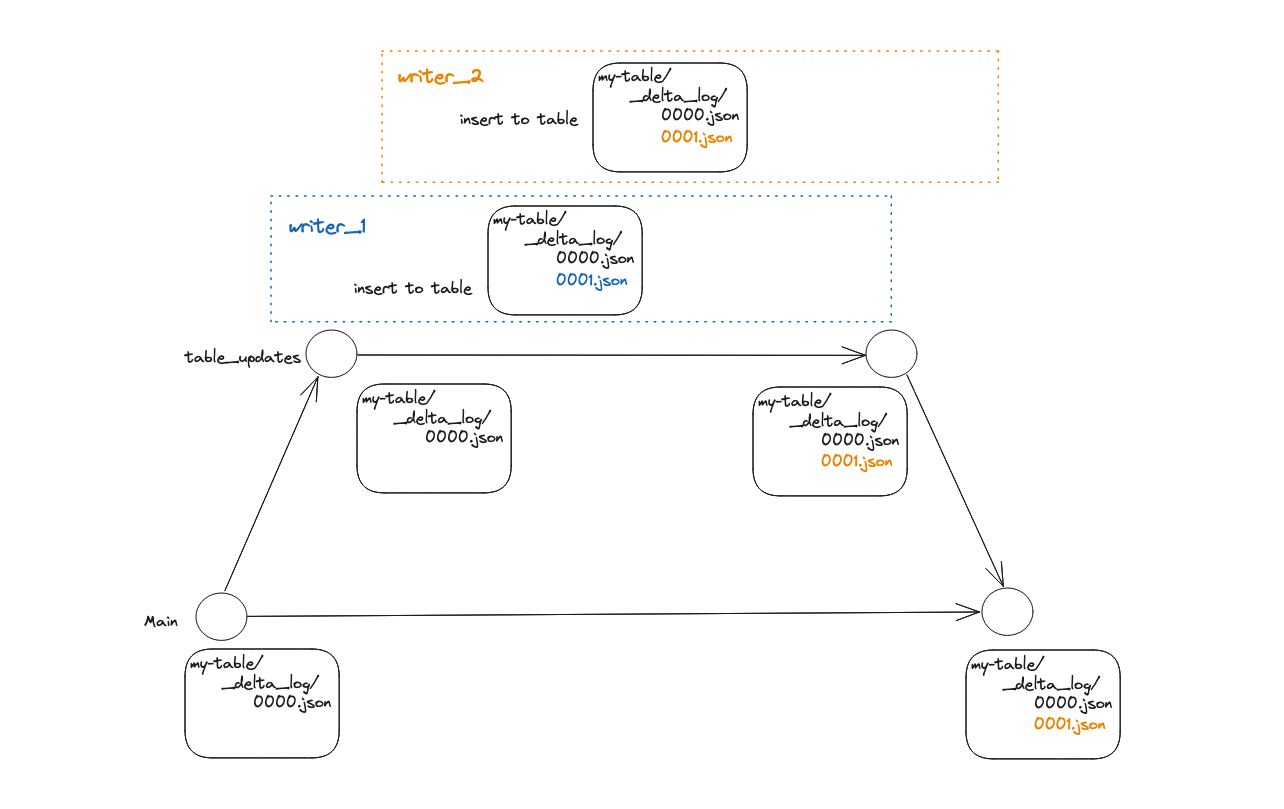

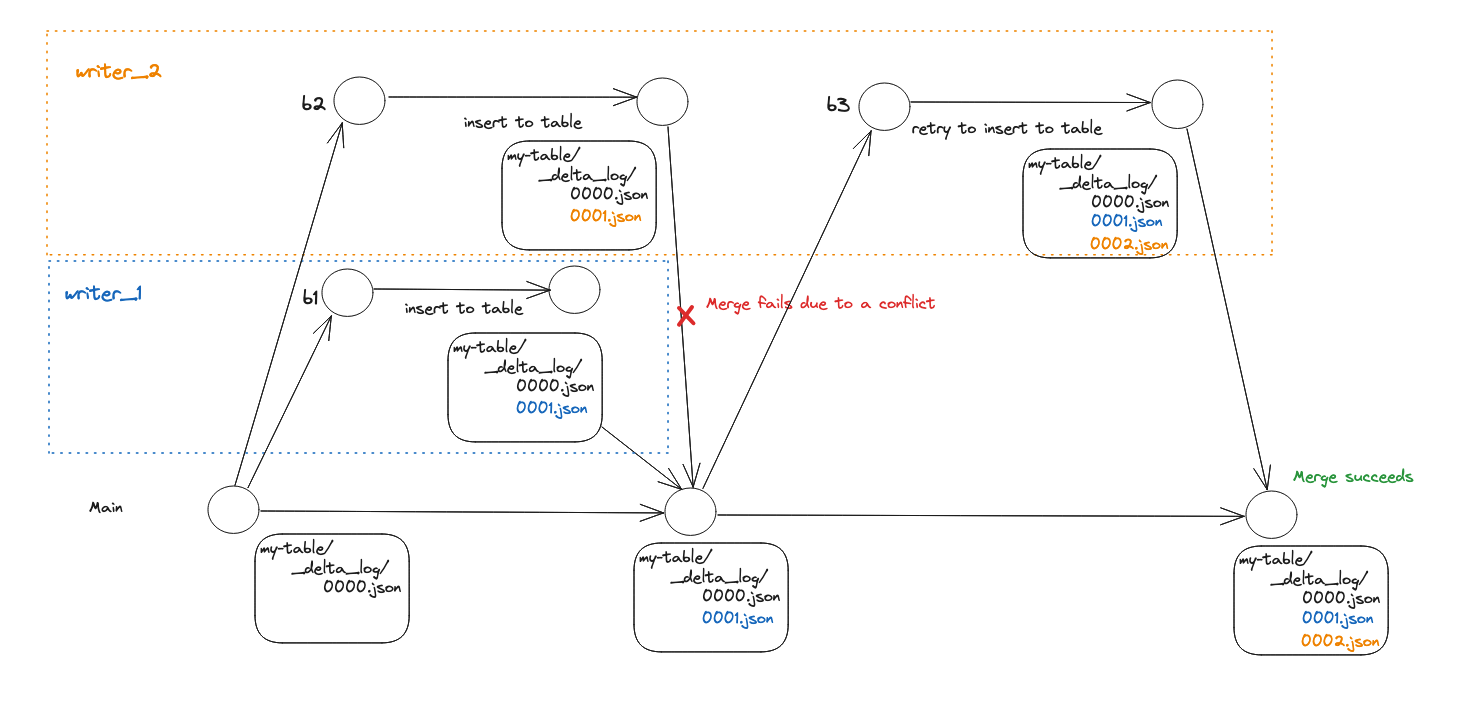

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/docs/integrations/delta.md (about) 1 --- 2 title: Delta Lake 3 description: This section explains how to use Delta Lake with lakeFS. 4 parent: Integrations 5 --- 6 7 # Using lakeFS with Delta Lake 8 9 [Delta Lake](https://delta.io/) Delta Lake is an open-source storage framework designed to improve performance and provide transactional guarantees to data lake tables. 10 11 Because lakeFS is format-agnostic, you can save data in Delta format within a lakeFS repository and benefit from the advantages of both technologies. Specifically: 12 13 1. ACID operations can span across multiple Delta tables. 14 2. [CI/CD hooks][data-quality-gates] can validate Delta table contents, schema, or even referential integrity. 15 3. lakeFS supports zero-copy branching for quick experimentation with full isolation. 16 17 {% include toc.html %} 18 19 ## Delta Lake Tables from the lakeFS Perspective 20 21 lakeFS is a data versioning tool, functioning at the **object** level. This implies that, by default, lakeFS remains agnostic 22 to whether the objects within a Delta table location represent a table, table metadata, or data. As per the Delta Lake [protocol](https://github.com/delta-io/delta/blob/master/PROTOCOL.md), 23 any modification to a table—whether it involves adding data or altering table metadata—results in the creation of a new object 24 in the table's [transaction log](https://www.databricks.com/blog/2019/08/21/diving-into-delta-lake-unpacking-the-transaction-log.html). 25 Typically, residing under the `_delta_log` path, relative to the root of the table's directory. This new object has an incremented version compared to its predecessor. 26 27 Consequently, when making changes to a Delta table within the lakeFS environment, these changes are reflected as changes 28 to objects within the table location. For instance, inserting a record into a table named "my-table," which is partitioned 29 by 'category' and 'country,' is represented in lakeFS as added objects within the table prefix (i.e., the table data) and the table transaction log. 30  31 32 Similarly, when performing a metadata operation such as renaming a table column, new objects are appended to the table transaction log, 33 indicating the schema change. 34  35 36 ## Using Delta Lake with lakeFS from Apache Spark 37 38 _Given the native integration between Delta Lake and Spark, it's most common that you'll interact with Delta tables in a Spark environment._ 39 40 To configure a Spark environment to read from and write to a Delta table within a lakeFS repository, you need to set the proper credentials and endpoint in the S3 Hadoop configuration, like you'd do with any [Spark](./spark.md) environment. 41 42 Once set, you can interact with Delta tables using regular Spark path URIs. Make sure that you include the lakeFS repository and branch name: 43 44 ```scala 45 df.write.format("delta").save("s3a://<repo-name>/<branch-name>/path/to/delta-table") 46 ``` 47 48 Note: If using the Databricks Analytics Platform, see the [integration guide](./spark.md#installation) for configuring a Databricks cluster to use lakeFS. 49 50 To see the integration in action see [this notebook](https://github.com/treeverse/lakeFS-samples/blob/main/00_notebooks/delta-lake.ipynb) in the [lakeFS Samples Repository](https://github.com/treeverse/lakeFS-samples/). 51 52 ## Using Delta Lake with lakeFS from Python 53 54 The [delta-rs](https://github.com/delta-io/delta-rs) library provides bindings for Python. This means that you can use Delta Lake and lakeFS directly from Python without needing Spark. Integration is done through the [lakeFS S3 Gateway]({% link understand/architecture.md %}#s3-gateway) 55 56 The documentation for the `deltalake` Python module details how to [read](https://delta-io.github.io/delta-rs/python/usage.html#loading-a-delta-table), [write](https://delta-io.github.io/delta-rs/python/usage.html#writing-delta-tables), and [query](https://delta-io.github.io/delta-rs/python/usage.html#querying-delta-tables) Delta Lake tables. To use it with lakeFS use an `s3a` path for the table based on your repository and branch (for example, `s3a://delta-lake-demo/main/my_table/`) and specify the following `storage_options`: 57 58 ```python 59 storage_options = {"AWS_ENDPOINT": <your lakeFS endpoint>, 60 "AWS_ACCESS_KEY_ID": <your lakeFS access key>, 61 "AWS_SECRET_ACCESS_KEY": <your lakeFS secret key>, 62 "AWS_REGION": "us-east-1", 63 "AWS_S3_ALLOW_UNSAFE_RENAME": "true" 64 } 65 ``` 66 67 If your lakeFS is not using HTTPS (for example, you're just running it locally) then add the option 68 69 ```python 70 "AWS_STORAGE_ALLOW_HTTP": "true" 71 ``` 72 73 To see the integration in action see [this notebook](https://github.com/treeverse/lakeFS-samples/blob/main/00_notebooks/delta-lake-python.ipynb) in the [lakeFS Samples Repository](https://github.com/treeverse/lakeFS-samples/). 74 75 ## Exporting Delta Lake tables from lakeFS into Unity Catalog 76 77 This option is for users who are managing Delta Lake tables with lakeFS and access them through Databricks [Unity Catalog](https://www.databricks.com/product/unity-catalog). lakeFS offers 78 a [Data Catalog Export](../howto/catalog_exports.md) functionality that provides read-only access to your Delta tables from within Unity catalog. Using the data catalog exporters, 79 you can work on Delta tables in isolation and easily explore them within the Unity Catalog. 80 81 Once exported, you can query the versioned table data with: 82 ```sql 83 SELECT * FROM my_catalog.main.my_delta_table 84 ``` 85 Here, 'main' is the name of the lakeFS branch from which the delta table was exported. 86 87 To enable Delta table exports to Unity catalog use the Unity [catalog integration guide](unity-catalog.md). 88 89 ## Limitations 90 91 ### Multi-Writer Support in lakeFS for Delta Lake Tables 92 {: .no_toc} 93 94 lakeFS currently supports a single writer for Delta Lake tables. Attempting to utilize multiple writers for writing to a Delta table may result in two types of issues: 95 1. Merge Conflicts: These conflicts arise when multiple writers modify a Delta table on different branches, and an attempt is made to merge these branches. 96  97 2. Concurrent File Overwrite: This issue occurs when multiple writers concurrently modify a Delta table on the same branch. 98  99 100 Note: lakeFS currently lacks its own implementation for a LogStore, and the default Log store used does not control concurrency. 101 {: .note } 102 To address these limitations, consider following [best practices for implementing multi-writer support](#use-lakefs-branches-and-merges-to-support-multi-writers). 103 104 ## Best Practices 105 106 ### Implementing Multi-Writer Support through lakeFS Branches and Merges 107 108 To achieve safe multi-writes to a Delta Lake table on lakeFS, we recommend following these best practices: 109 1. **Isolate Changes:** Make modifications to your table in isolation. Each set of changes should be associated with a dedicated lakeFS branch, branching off from the main branch. 110 2. **Merge Atomically:** After making changes in isolation, try to merge them back into the main branch. This approach guarantees that the integration of changes is cohesive. 111 112 The workflow involves: 113 * Creating a new lakeFS branch from the main branch for any table change. 114 * Making modifications in isolation. 115 * Attempting to merge the changes back into the main branch. 116 * Iterating the process in case of a merge failure due to conflicts. 117 118 The diagram below provides a visual representation of how branches and merges can be utilized to manage concurrency effectively: 119  120 121 ### Follow Vacuum by Garbage Collection 122 123 To delete unused files from a table directory while working with Delta Lake over lakeFS you need to first use Delta lake 124 [Vacuum](https://docs.databricks.com/en/sql/language-manual/delta-vacuum.html) to soft-delete the files, and then use 125 [lakeFS Garbage Collection](../howto/garbage-collection) to hard-delete them from the storage. 126 127 **Note:** lakeFS enables you to recover from undesired vacuum runs by reverting the changes done by a vacuum run before running Garbage Collection. 128 {: .note } 129 130 ### When running lakeFS inside your VPC (on AWS) 131 132 When lakeFS runs inside your private network, your Databricks cluster needs to be able to access it. 133 This can be done by setting up a VPC peering between the two VPCs 134 (the one where lakeFS runs and the one where Databricks runs). For this to work on Delta Lake tables, you would also have to disable multi-cluster writes with: 135 136 ``` 137 spark.databricks.delta.multiClusterWrites.enabled false 138 ``` 139 140 ### Using multi cluster writes (on AWS) 141 142 When using multi-cluster writes, Databricks overrides Delta’s S3-commit action. 143 The new action tries to contact lakeFS from servers on Databricks’ own AWS account, which of course won’t be able to access your private network. 144 So, if you must use multi-cluster writes, you’ll have to allow access from Databricks’ AWS account to lakeFS. 145 If you are trying to achieve that, please reach out on Slack and the community will try to assist. 146 147 ## Further Reading 148 149 See [Guaranteeing Consistency in Your Delta Lake Tables With lakeFS](https://lakefs.io/blog/guarantee-consistency-in-your-delta-lake-tables-with-lakefs/) post on the lakeFS blog to learn how to 150 guarantee data quality in a Delta table by utilizing lakeFS branches. 151 152 153 [data-quality-gates]: {% link understand/use_cases/cicd_for_data.md %}#using-hooks-as-data-quality-gates 154 [deploy-docker]: {% link howto/deploy/onprem.md %}#docker 155