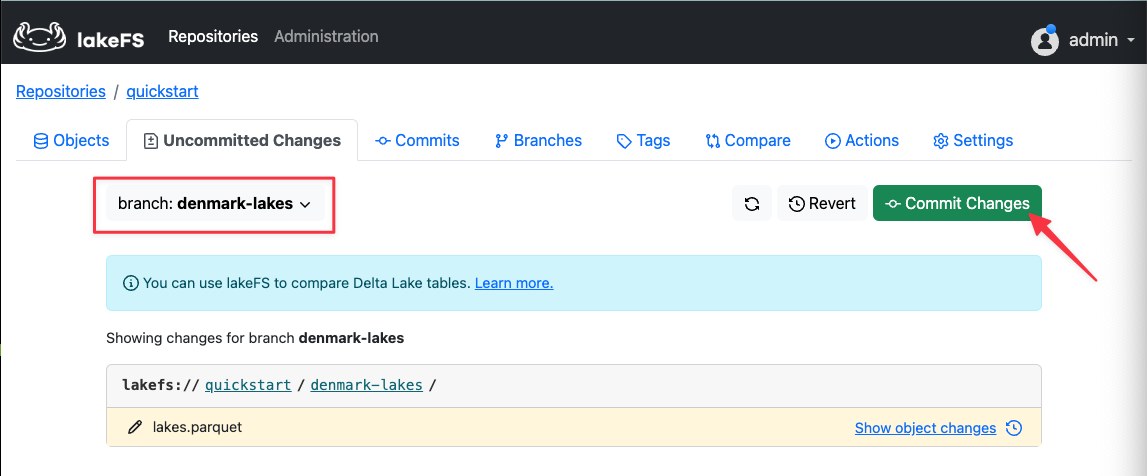

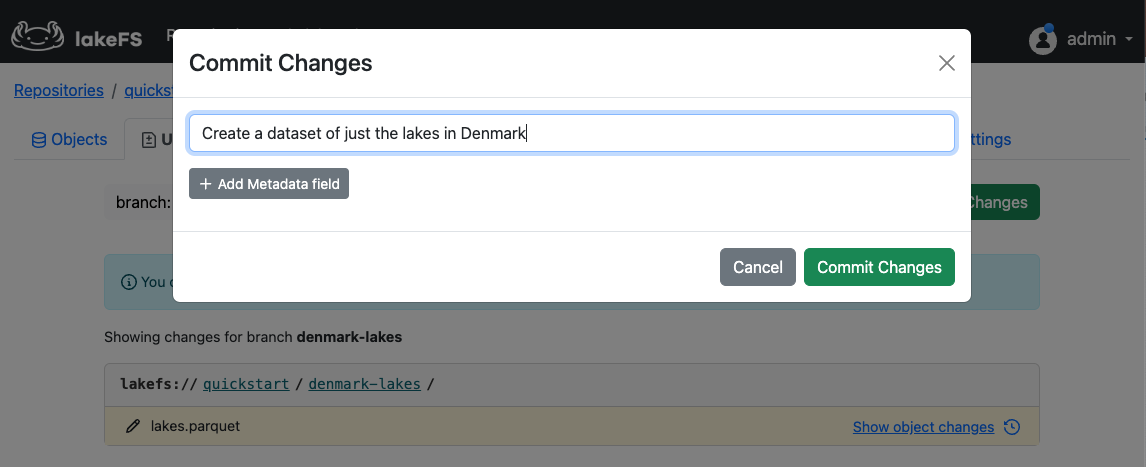

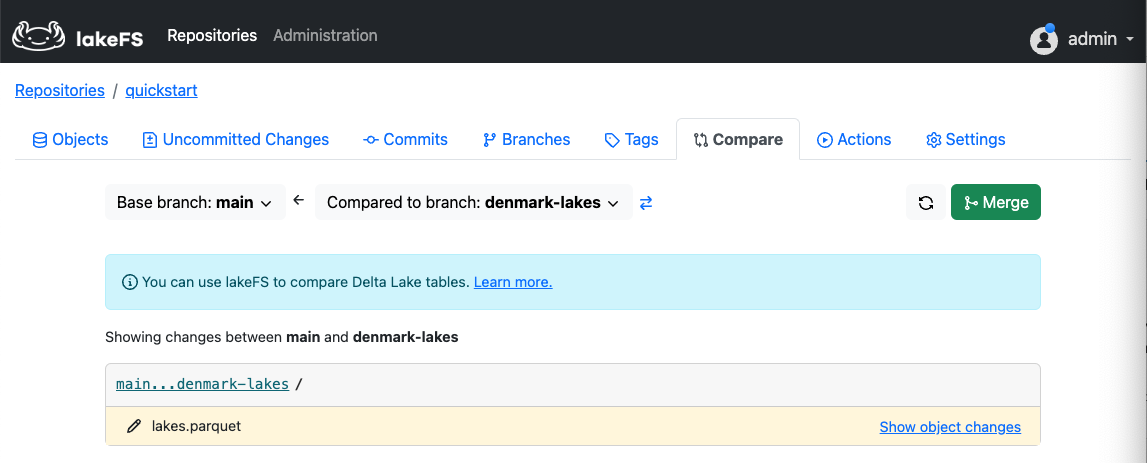

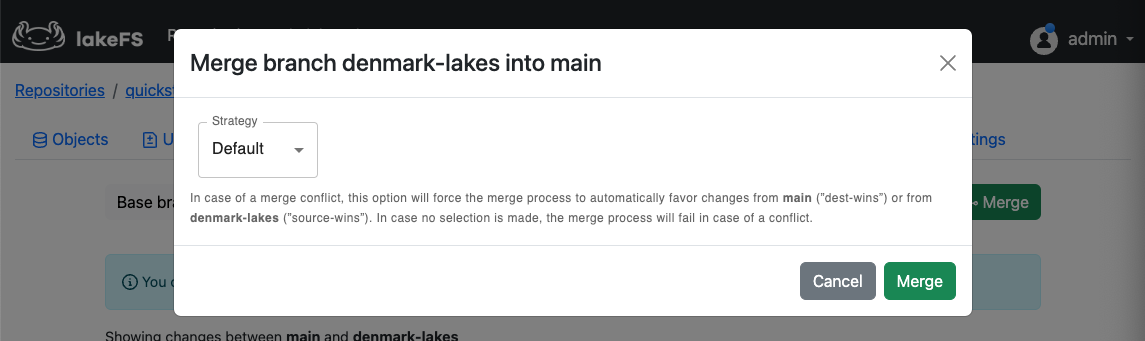

github.com/treeverse/lakefs@v1.24.1-0.20240520134607-95648127bfb0/pkg/samplerepo/assets/sample/README.md.tmpl (about) 1 # Welcome to the Lake! 2 3  4 5 **lakeFS brings software engineering best practices and applies them to data engineering**. 6 7 lakeFS provides version control over the data lake, and uses Git-like semantics to create and access those versions. If you know git, you'll be right at home with lakeFS. 8 9 With lakeFS, you can use concepts on your data lake such as **branch** to create an isolated version of the data, **commit** to create a reproducible point in time, and **merge** in order to incorporate your changes in one atomic action. 10 11 This quickstart will introduce you to some of the core ideas in lakeFS and show what you can do by illustrating the concept of branching, merging, and rolling back changes to data. It's laid out in four short sections. 12 13 *  [Query](#query) the pre-populated data on the `main` branch 14 *  [Make changes](#branch) to the data on a new branch 15 *  [Merge](#commit-and-merge) the changed data back to the `main` branch 16 *  [Change our mind](#rollback) and rollback the changes 17 *  Learn about [actions and hooks](#actions-and-hooks) in lakeFS 18 19 You might also be interested in this list of [additional lakeFS resources](#resources). 20 21 ## Setup 22 23 If you're reading this within the sample repository on lakeFS then you've already got lakeFS running! In this quickstart we'll reference different ways to perform tasks depending on how you're running lakeFS. See below for how you need to set up your environment for these. 24 25 <details> 26 <summary>Docker</summary> 27 28 If you're running lakeFS with Docker then all the tools you need (`lakectl`) are included in the image already. 29 30 ```bash 31 docker run --name lakefs --pull always \ 32 --rm --publish 8000:8000 \ 33 treeverse/lakefs:latest \ 34 run --quickstart 35 ``` 36 37 Configure `lakectl` by running the following in a new terminal window: 38 39 ```bash 40 docker exec -it lakefs lakectl config 41 ``` 42 43 Follow the prompts to enter your credentials that you created when you first setup lakeFS. Leave the **Server endpoint URL** as `http://127.0.0.1:8000`. 44 45 </details> 46 47 <details> 48 <summary>Local install</summary> 49 50 1. When you download [lakeFS from the GitHub repository](https://github.com/treeverse/lakeFS/releases) the distribution includes the `lakectl` tool. 51 52 Add this to your `$PATH`, or when invoking it reference it from the downloaded folder 53 2. [Configure](https://docs.lakefs.io/reference/cli.html#configuring-credentials-and-api-endpoint) `lakectl` by running 54 55 ```bash 56 lakectl config 57 ``` 58 </details> 59 60 61 <a name="query"></a> 62 63 # Let's get started 😺 64 65 _We'll start off by querying the sample data to orient ourselves around what it is we're working with. The lakeFS server has been loaded with a sample parquet datafile. Fittingly enough for a piece of software to help users of data lakes, the `lakes.parquet` file holds data about lakes around the world._ 66 67 _You'll notice that the branch is set to `main`. This is conceptually the same as your main branch in Git against which you develop software code._ 68 69 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Frepo-contents.png" alt="The lakeFS objects list with a highlight to indicate that the branch is set to main." class="quickstart"/> 70 71 _Let's have a look at the data, ahead of making some changes to it on a branch in the following steps._. 72 73 Click on [`lakes.parquet`](object?ref=main&path=lakes.parquet) from the object browser and notice that the built-it DuckDB runs a query to show a preview of the file's contents. 74 75 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-01.png" alt="The lakeFS object viewer with embedded DuckDB to query parquet files. A query has run automagically to preview the contents of the selected parquet file." class="quickstart"/> 76 77 _Now we'll run our own query on it to look at the top five countries represented in the data_. 78 79 Copy and paste the following SQL statement into the DuckDB query panel and click on Execute. 80 81 ```sql 82 SELECT country, COUNT(*) 83 FROM READ_PARQUET('lakefs://{{.RepoName}}/main/lakes.parquet') 84 GROUP BY country 85 ORDER BY COUNT(*) 86 DESC LIMIT 5; 87 ``` 88 89 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-02.png" alt="An embedded DuckDB query showing a count of rows per country in the dataset." class="quickstart"/> 90 91 _Next we're going to make some changes to the data—but on a development branch so that the data in the main branch remains untouched._ 92 93 <a name="branch"></a> 94 # Create a Branch 🪓 95 96 _lakeFS uses branches in a similar way to Git. It's a great way to isolate changes until, or if, we are ready to re-integrate them. lakeFS uses a copy-on-write technique which means that it's very efficient to create branches of your data._ 97 98 _Having seen the lakes data in the previous step we're now going to create a new dataset to hold data only for lakes in 🇩🇰 Denmark. Why? Well, because 😄_ 99 100 _The first thing we'll do is create a branch for us to do this development against. Choose one of the following methods depending on your preferred interface and how you're running lakeFS._ 101 102 103 <details> 104 <summary>Web UI</summary> 105 106 From the [branches](./branches) page, click on **Create Branch**. Call the new branch `denmark-lakes` and click on **Create** 107 108  109 110 </details> 111 112 <details> 113 <summary>CLI (Docker)</summary> 114 115 _We'll use the `lakectl` tool to create the branch._ 116 117 In a new terminal window run the following: 118 119 ```bash 120 docker exec lakefs \ 121 lakectl branch create \ 122 lakefs://{{.RepoName}}/denmark-lakes \ 123 --source lakefs://{{.RepoName}}/main 124 ``` 125 126 _You should get a confirmation message like this:_ 127 128 ```bash 129 Source ref: lakefs://{{.RepoName}}/main 130 created branch 'denmark-lakes' 3384cd7cdc4a2cd5eb6249b52f0a709b49081668bb1574ce8f1ef2d956646816 131 ``` 132 </details> 133 134 <details> 135 <summary>CLI (local)</summary> 136 137 _We'll use the `lakectl` tool to create the branch._ 138 139 In a new terminal window run the following: 140 141 ```bash 142 lakectl branch create \ 143 lakefs://{{.RepoName}}/denmark-lakes \ 144 --source lakefs://{{.RepoName}}/main 145 ``` 146 147 _You should get a confirmation message like this:_ 148 149 ```bash 150 Source ref: lakefs://{{.RepoName}}/main 151 created branch 'denmark-lakes' 3384cd7cdc4a2cd5eb6249b52f0a709b49081668bb1574ce8f1ef2d956646816 152 ``` 153 </details> 154 155 ## Transforming the Data 156 157 _Now we'll make a change to the data. lakeFS has several native clients, as well as an [S3-compatible endpoint](https://docs.lakefs.io/understand/architecture.html#s3-gateway). This means that anything that can use S3 will work with lakeFS. Pretty neat._ 158 159 We're going to use DuckDB which is embedded within the web interface of lakeFS. 160 161 From the lakeFS [**Objects** page](/repositories/{{.RepoName}}/objects?ref=main) select the [`lakes.parquet`](/repositories/{{.RepoName}}/object?ref=main&path=lakes.parquet) file to open the DuckDB editor: 162 163 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-01.png" alt="The lakeFS object viewer with embedded DuckDB to query parquet files. A query has run automagically to preview the contents of the selected parquet file." class="quickstart"/> 164 165 To start with, we'll load the lakes data into a DuckDB table so that we can manipulate it. Replace the previous text in the DuckDB editor with this: 166 167 ```sql 168 CREATE OR REPLACE TABLE lakes AS 169 SELECT * FROM READ_PARQUET('lakefs://{{.RepoName}}/denmark-lakes/lakes.parquet'); 170 ``` 171 172 You'll see a row count of 100,000 to confirm that the DuckDB table has been created. 173 174 Just to check that it's the same data that we saw before we'll run the same query. Note that now we are querying a DuckDB table (`lakes`), rather than using a function to query a parquet file directly. 175 176 ```sql 177 SELECT country, COUNT(*) 178 FROM lakes 179 GROUP BY country 180 ORDER BY COUNT(*) 181 DESC LIMIT 5; 182 ``` 183 184 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-editor-02.png" alt="The DuckDB editor pane querying the lakes table" class="quickstart"/> 185 186 187 ### Making a Change to the Data 188 189 Now we can change our table, which was loaded from the original `lakes.parquet`, to remove all rows not for Denmark: 190 191 ```sql 192 DELETE FROM lakes WHERE Country != 'Denmark'; 193 ``` 194 195 <img src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-editor-03.png" alt="The DuckDB editor pane deleting rows from the lakes table" class="quickstart"/> 196 197 We can verify that it's worked by reissuing the same query as before: 198 199 ```sql 200 SELECT country, COUNT(*) 201 FROM lakes 202 GROUP BY country 203 ORDER BY COUNT(*) 204 DESC LIMIT 5; 205 ``` 206 207 <img src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-editor-04.png" alt="The DuckDB editor pane querying the lakes table showing only rows for Denmark remain" class="quickstart"/> 208 209 ## Write the Data back to lakeFS 210 211 _The changes so far have only been to DuckDB's copy of the data. Let's now push it back to lakeFS._ 212 213 _Note the lakeFS path is different this time as we're writing it to the `denmark-lakes` branch, not `main`._ 214 215 ```sql 216 COPY lakes TO 'lakefs://{{.RepoName}}/denmark-lakes/lakes.parquet'; 217 ``` 218 219 <img src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-editor-05.png" alt="The DuckDB editor pane writing data back to the denmark-lakes branch" class="quickstart"/> 220 221 ## Verify that the Data's Changed on the Branch 222 223 _Let's just confirm for ourselves that the parquet file itself has the new data._ 224 225 _We'll drop the `lakes` table just to be sure, and then query the parquet file directly:_ 226 227 ```sql 228 DROP TABLE lakes; 229 230 SELECT country, COUNT(*) 231 FROM READ_PARQUET('lakefs://{{.RepoName}}/denmark-lakes/lakes.parquet') 232 GROUP BY country 233 ORDER BY COUNT(*) 234 DESC LIMIT 5; 235 ``` 236 237 <img src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-editor-06.png" alt="The DuckDB editor pane show the parquet file on denmark-lakes branch has been changed" class="quickstart"/> 238 239 ## What about the data in `main`? 240 241 _So we've changed the data in our `denmark-lakes` branch, deleting swathes of the dataset. What's this done to our original data in the `main` branch? Absolutely nothing!_ 242 243 See for yourself by returning to [the lakeFS object view](object?ref=main&path=lakes.parquet) and re-running the same query: 244 245 ```sql 246 SELECT country, COUNT(*) 247 FROM READ_PARQUET('lakefs://{{.RepoName}}/main/lakes.parquet') 248 GROUP BY country 249 ORDER BY COUNT(*) 250 DESC LIMIT 5; 251 ``` 252 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-02.png" alt="The lakeFS object browser showing DuckDB querying lakes.parquet on the main branch. The results are the same as they were before we made the changes to the denmark-lakes branch, which is as expected." class="quickstart"/> 253 254 _In the next step we'll see how to merge our branch back into main._ 255 256 <a name="commit-and-merge"></a> 257 # Committing Changes in lakeFS 🤝🏻 258 259 _In the previous step we branched our data from `main` into a new `denmark-lakes` branch, and overwrote the `lakes.parquet` to hold solely information about lakes in Denmark. Now we're going to commit that change (just like Git) and merge it back to main (just like Git)._ 260 261 _Having make the change to the datafile in the `denmark-lakes` branch, we now want to commit it. There are various options for interacting with lakeFS' API, including the web interface, [a Python client](https://pydocs.lakefs.io/), and `lakectl`._ 262 263 Choose one of the following methods depending on your preferred interface and how you're running lakeFS. 264 265 <details> 266 <summary>Web UI</summary> 267 268 1. Go to the [**Uncommitted Changes**](./changes?ref=denmark-lakes) and make sure you have the `denmark-lakes` branch selected 269 270 2. Click on **Commit Changes** 271 272  273 274 3. Enter a commit message and then click **Commits Changes** 275 276  277 278 </details> 279 280 <details> 281 <summary>CLI (Docker)</summary> 282 Run the following from a terminal window: 283 284 ```bash 285 docker exec lakefs \ 286 lakectl commit lakefs://{{.RepoName}}/denmark-lakes \ 287 -m "Create a dataset of just the lakes in Denmark" 288 ``` 289 290 _You will get confirmation of the commit including its hash._ 291 292 ```bash 293 Branch: lakefs://{{.RepoName}}/denmark-lakes 294 Commit for branch "denmark-lakes" completed. 295 296 ID: ba6d71d0965fa5d97f309a17ce08ad006c0dde15f99c5ea0904d3ad3e765bd74 297 Message: Create a dataset of just the lakes in Denmark 298 Timestamp: 2023-03-15 08:09:36 +0000 UTC 299 Parents: 3384cd7cdc4a2cd5eb6249b52f0a709b49081668bb1574ce8f1ef2d956646816 300 ``` 301 302 </details> 303 304 <details> 305 <summary>CLI (local)</summary> 306 Run the following from a terminal window: 307 308 ```bash 309 lakectl commit lakefs://{{.RepoName}}/denmark-lakes \ 310 -m "Create a dataset of just the lakes in Denmark" 311 ``` 312 313 _You will get confirmation of the commit including its hash._ 314 315 ```bash 316 Branch: lakefs://{{.RepoName}}/denmark-lakes 317 Commit for branch "denmark-lakes" completed. 318 319 ID: ba6d71d0965fa5d97f309a17ce08ad006c0dde15f99c5ea0904d3ad3e765bd74 320 Message: Create a dataset of just the lakes in Denmark 321 Timestamp: 2023-03-15 08:09:36 +0000 UTC 322 Parents: 3384cd7cdc4a2cd5eb6249b52f0a709b49081668bb1574ce8f1ef2d956646816 323 ``` 324 325 </details> 326 327 328 _With our change committed, it's now time to merge it to back to the `main` branch._ 329 330 # Merging Branches in lakeFS 🔀 331 332 _As with most operations in lakeFS, merging can be done through a variety of interfaces._ 333 334 <details> 335 <summary>Web UI</summary> 336 337 1. Click [here](./compare?ref=main&compare=denmark-lakes), or manually go to the **Compare** tab and set the **Compared to branch** to `denmark-lakes`. 338 339  340 341 2. Click on **Merge**, leave the **Strategy** as `Default` and click on **Merge** confirm 342 343  344 345 </details> 346 347 <details> 348 <summary>CLI (Docker)</summary> 349 350 _The syntax for `merge` requires us to specify the source and target of the merge._ 351 352 Run this from a terminal window. 353 354 ```bash 355 docker exec lakefs \ 356 lakectl merge \ 357 lakefs://{{.RepoName}}/denmark-lakes \ 358 lakefs://{{.RepoName}}/main 359 ``` 360 361 </details> 362 363 <details> 364 <summary>CLI (local)</summary> 365 366 _The syntax for `merge` requires us to specify the source and target of the merge._ 367 368 Run this from a terminal window. 369 370 ```bash 371 lakectl merge \ 372 lakefs://{{.RepoName}}/denmark-lakes \ 373 lakefs://{{.RepoName}}/main 374 ``` 375 376 </details> 377 378 379 _We can confirm that this has worked by returning to the same object view of [`lakes.parquet`](object?ref=main&path=lakes.parquet) as before and clicking on **Execute** to rerun the same query. You'll see that the country row counts have changed, and only Denmark is left in the data._ 380 381 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-03.png" alt="The lakeFS object browser with a DuckDB query on lakes.parquet showing that there is only data for Denmark." class="quickstart"/> 382 383 **But…oh no!** 😬 A slow chill creeps down your spine, and the bottom drops out of your stomach. What have you done! 😱 *You were supposed to create **a separate file** of Denmark's lakes - not replace the original one* 🤦🏻🤦🏻♀. 384 385 _Is all lost? Will our hero overcome the obstacles? No, and yes respectively!_ 386 387 _Have no fear; lakeFS can revert changes. Keep reading for the final part of the quickstart to see how._ 388 389 <a name="rollback"></a> 390 # Rolling back Changes in lakeFS ↩️ 391 392 _Our intrepid user (you) merged a change back into the `main` branch and realised that they had made a mistake 🤦🏻._ 393 394 _The good news for them (you) is that lakeFS can revert changes made, similar to how you would in Git 😅._ 395 396 <details> 397 <summary>CLI (Docker)</summary> 398 399 From your terminal window run `lakectl` with the `revert` command: 400 401 ```bash 402 docker exec -it lakefs \ 403 lakectl branch revert \ 404 lakefs://{{.RepoName}}/main \ 405 main --parent-number 1 --yes 406 ``` 407 408 _You should see a confirmation of a successful rollback:_ 409 410 ```bash 411 Branch: lakefs://{{.RepoName}}/main 412 commit main successfully reverted 413 ``` 414 415 </details> 416 417 <details> 418 <summary>CLI (local)</summary> 419 420 From your terminal window run `lakectl` with the `revert` command: 421 422 ```bash 423 lakectl branch revert \ 424 lakefs://{{.RepoName}}/main \ 425 main --parent-number 1 --yes 426 ``` 427 428 _You should see a confirmation of a successful rollback:_ 429 430 ```bash 431 Branch: lakefs://{{.RepoName}}/main 432 commit main successfully reverted 433 ``` 434 435 </details> 436 437 Back in the object page and the DuckDB query we can see that the original file is now back to how it was. 438 439 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fduckdb-main-02.png" alt="The lakeFS object viewer with DuckDB query showing that the lakes dataset on main branch has been successfully returned to state prior to the merge." class="quickstart"/> 440 441 442 <a name="actions-and-hooks"></a> 443 # Actions and Hooks in lakeFS 🪝 444 445 When we interact with lakeFS it can be useful to have certain checks performed at stages along the way. Let's see how [actions in lakeFS](https://docs.lakefs.io/howto/hooks/) can be of benefit here. 446 447 We're going to enforce a rule that when a commit is made to any branch that begins with `etl`: 448 449 * the commit message must not be blank 450 * there must be `job_name` and `version` metadata 451 * the `version` must be numeric 452 453 To do this we'll create an _action_. In lakeFS, an action specifies one or more events that will trigger it, and references one or more _hooks_ to run when triggered. Actions are YAML files written to lakeFS under the `_lakefs_actions/` folder of the lakeFS repository. 454 455 _Hooks_ can be either a Lua script that lakeFS will execute itself, an external web hook, or an Airflow DAG. In this example, we're using a Lua hook. 456 457 ## Configuring the Action 458 459 1. In lakeFS create a new branch called `add_action`. You can do this through the UI or with `lakectl`: 460 461 ```bash 462 docker exec lakefs \ 463 lakectl branch create \ 464 lakefs://quickstart/add_action \ 465 --source lakefs://quickstart/main 466 ``` 467 468 1. Open up your favorite text editor (or emacs), and paste the following YAML: 469 470 ```yaml 471 name: Check Commit Message and Metadata 472 on: 473 pre-commit: 474 branches: 475 - etl** 476 hooks: 477 - id: check_metadata 478 type: lua 479 properties: 480 script: | 481 commit_message=action.commit.message 482 if commit_message and #commit_message>0 then 483 print("✅ The commit message exists and is not empty: " .. commit_message) 484 else 485 error("\n\n❌ A commit message must be provided") 486 end 487 488 job_name=action.commit.metadata["job_name"] 489 if job_name == nil then 490 error("\n❌ Commit metadata must include job_name") 491 else 492 print("✅ Commit metadata includes job_name: " .. job_name) 493 end 494 495 version=action.commit.metadata["version"] 496 if version == nil then 497 error("\n❌ Commit metadata must include version") 498 else 499 print("✅ Commit metadata includes version: " .. version) 500 if tonumber(version) then 501 print("✅ Commit metadata version is numeric") 502 else 503 error("\n❌ Version metadata must be numeric: " .. version) 504 end 505 end 506 ``` 507 508 1. Save this file as `/tmp/check_commit_metadata.yml` 509 510 * You can save it elsewhere, but make sure you change the path below when uploading 511 512 1. Upload the `check_commit_metadata.yml` file to the `add_action` branch under `_lakefs_actions/`. As above, you can use the UI (make sure you select the correct branch when you do), or with `lakectl`: 513 514 ```bash 515 docker exec lakefs \ 516 lakectl fs upload \ 517 lakefs://quickstart/add_action/_lakefs_actions/check_commit_metadata.yml \ 518 --source /tmp/check_commit_metadata.yml 519 ``` 520 521 1. Go to the **Uncommitted Changes** tab in the UI, and make sure that you see the new file in the path shown: 522 523 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-00.png" alt="lakeFS Uncommitted Changes view showing a file called `check_commit_metadata.yml` under the path `_lakefs_actions/`" class="quickstart"/> 524 525 Click **Commit Changes** and enter a suitable message to commit this new file to the branch. 526 527 1. Now we'll merge this new branch into `main`. From the **Compare** tab in the UI compare the `main` branch with `add_action` and click **Merge** 528 529 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-01.png" alt="lakeFS Compare view showing the difference between `main` and `add_action` branches" class="quickstart"/> 530 531 ## Testing the Action 532 533 Let's remind ourselves what the rules are that the action is going to enforce. 534 535 > When a commit is made to any branch that begins with `etl`: 536 537 > * the commit message must not be blank 538 > * there must be `job_name` and `version` metadata 539 > * the `version` must be numeric 540 541 We'll start by creating a branch that's going to match the `etl` pattern, and then go ahead and commit a change and see how the action works. 542 543 1. Create a new branch (see above instructions on how to do this if necessary) called `etl_20230504`. Make sure you use `main` as the source branch. 544 545 In your new branch you should see the action that you created and merged above: 546 547 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-02.png" alt="lakeFS branch etl_20230504 with object /_lakefs_actions/check_commit_metadata.yml" class="quickstart"/> 548 549 1. To simulate an ETL job we'll use the built-in DuckDB editor to run some SQL and write the result back to the lakeFS branch. 550 551 Open the `lakes.parquet` file on the `etl_20230504` branch from the **Objects** tab. Replace the SQL statement with the following: 552 553 ```sql 554 COPY ( 555 WITH src AS ( 556 SELECT lake_name, country, depth_m, 557 RANK() OVER ( ORDER BY depth_m DESC) AS lake_rank 558 FROM READ_PARQUET('lakefs://quickstart/etl_20230504/lakes.parquet')) 559 SELECT * FROM SRC WHERE lake_rank <= 10 560 ) TO 'lakefs://quickstart/etl_20230504/top10_lakes.parquet' 561 ``` 562 563 1. Head to the **Uncommitted Changes** tab in the UI and notice that there is now a file called `top10_lakes.parquet` waiting to be committed. 564 565 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-03.png" alt="lakeFS branch etl_20230504 with uncommitted file top10_lakes.parquet" class="quickstart"/> 566 567 Now we're ready to start trying out the commit rules, and seeing what happens if we violate them. 568 569 1. Click on **Commit Changes**, leave the _Commit message_ blank, and click **Commit Changes** to confirm. 570 571 Note that the commit fails because the hook did not succeed 572 573 `pre-commit hook aborted` 574 575 with the output from the hook's code displayed 576 577 `❌ A commit message must be provided` 578 579 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-04.png" alt="lakeFS blocking an attempt to commit with no commit message" class="quickstart"/> 580 581 1. Do the same as the previous step, but provide a message this time: 582 583 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-05.png" alt="A commit to lakeFS with commit message in place" class="quickstart"/> 584 585 The commit still fails as we need to include metadata too, which is what the error tells us 586 587 `❌ Commit metadata must include job_name` 588 589 1. Repeat the **Commit Changes** dialog and use the **Add Metadata field** to add the required metadata: 590 591 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-06.png" alt="A commit to lakeFS with commit message and metadata in place" class="quickstart"/> 592 593 We're almost there, but this still fails (as it should), since the version is not entirely numeric but includes `v` and `ß`: 594 595 `❌ Version metadata must be numeric: v1.00ß` 596 597 Repeat the commit attempt specify the version as `1.00` this time, and rejoice as the commit succeeds 598 599 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-07.png" alt="Commit history in lakeFS showing that the commit met the rules set by the action and completed successfully." class="quickstart"/> 600 601 --- 602 603 You can view the history of all action runs from the **Action** tab: 604 605 <img width="75%" src="/api/v1/repositories/{{.RepoName}}/refs/main/objects?path=images%2Fhooks-08.png" alt="Action run history in lakeFS" class="quickstart"/> 606 607 608 ## Bonus Challenge 609 610 And so with that, this quickstart for lakeFS draws to a close. If you're simply having _too much fun_ to stop then here's an exercise for you. 611 612 Implement the requirement from above *correctly*, such that you write `denmark-lakes.parquet` in the respective branch and successfully merge it back into main. Look up how to list the contents of the `main` branch and verify that it looks like this: 613 614 ```bash 615 object 2023-03-21 17:33:51 +0000 UTC 20.9 kB denmark-lakes.parquet 616 object 2023-03-21 14:45:38 +0000 UTC 916.4 kB lakes.parquet 617 ``` 618 619 <a name="resources"></a> 620 # Learn more about lakeFS 621 622 Here are some more resources to help you find out more about lakeFS. 623 624 ## Connecting lakeFS to your own object storage 625 626 Enjoyed the quickstart and want to try out lakeFS against your own data? The documentation explains [how to run lakeFS locally as a Docker container locally connecting to an object store](https://docs.lakefs.io/quickstart/learning-more-lakefs.html#connecting-lakefs-to-your-own-object-storage). 627 628 ## Deploying lakeFS 629 630 Ready to do this thing for real? The deployment guides show you how to deploy lakeFS [locally](https://docs.lakefs.io/deploy/onprem.html) (including on [Kubernetes](https://docs.lakefs.io/deploy/onprem.html#k8s)) or on [AWS](https://docs.lakefs.io/deploy/aws.html), [Azure](https://docs.lakefs.io/deploy/azure.html), or [GCP](https://docs.lakefs.io/deploy/gcp.html). 631 632 Alternatively you might want to have a look at [lakeFS Cloud](https://lakefs.cloud/) which provides a fully-managed, SOC-2 compliant, lakeFS service. 633 634 ## lakeFS Samples 635 636 The [lakeFS Samples](https://github.com/treeverse/lakeFS-samples) GitHub repository includes some excellent examples including: 637 638 * How to implement multi-table transaction on multiple Delta Tables 639 * Notebooks to show integration of lakeFS with Spark, Python, Delta Lake, Airflow and Hooks. 640 * Examples of using lakeFS webhooks to run automated data quality checks on different branches. 641 * Using lakeFS branching features to create dev/test data environments for ETL testing and experimentation. 642 * Reproducing ML experiments with certainty using lakeFS tags. 643 644 ## lakeFS Community 645 646 lakeFS' community is important to us. Our **guiding principles** are. 647 648 * Fully open, in code and conversation 649 * We learn and grow together 650 * Compassion and respect in every interaction 651 652 We'd love for you to join [our **Slack group**](https://lakefs.io/slack) and come and introduce yourself on `#announcements-and-more`. Or just lurk and soak up the vibes 😎 653 654 If you're interested in getting involved in lakeFS' development head over our [the **GitHub repo**](https://github.com/treeverse/lakeFS) to look at the code and peruse the issues. The comprehensive [contributing](https://docs.lakefs.io/contributing.html) document should have you covered on next steps but if you've any questions the `#dev` channel on [Slack](https://lakefs.io/slack) will be delighted to help. 655 656 We love speaking at meetups and chatting to community members at them - you can find a list of these [here](https://lakefs.io/community/). 657 658 Finally, make sure to drop by to say hi on [Twitter](https://twitter.com/lakeFS), [Mastodon](https://data-folks.masto.host/@lakeFS), and [LinkedIn](https://www.linkedin.com/company/treeverse/) 👋🏻 659 660 ## lakeFS Concepts and Internals 661 662 We describe lakeFS as "_Git for data_" but what does that actually mean? Have a look at the [concepts](https://docs.lakefs.io/understand/model.html) and [architecture](https://docs.lakefs.io/understand/architecture.html) guides, as well as the explanation of [how merges are handled](https://docs.lakefs.io/understand/how/merge.html). To go deeper you might be interested in [the internals of versioning](https://docs.lakefs.io/understand/how/versioning-internals.htm) and our [internal database structure](https://docs.lakefs.io/understand/how/kv.html).