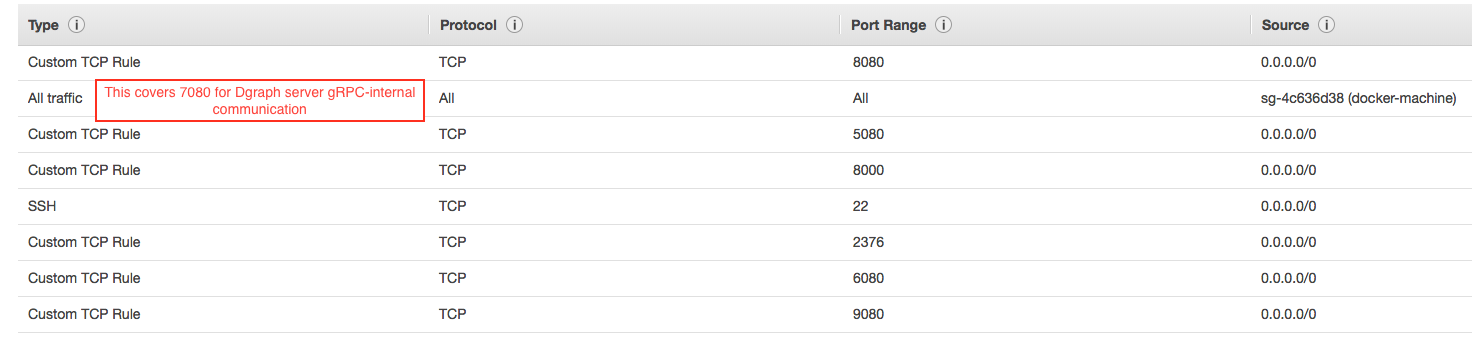

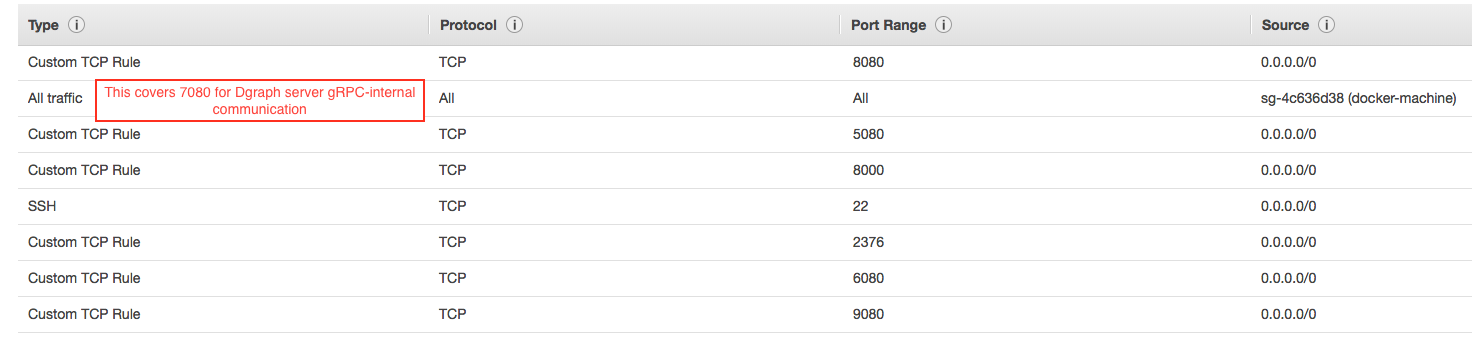

+++
date = "2017-03-20T22:25:17+11:00"
title = "Deploy"
+++

This page describes running Dgraph in various deployment modes in a distributed fashion, which involves
running multiple instances of Dgraph over multiple servers in a cluster.

{{% notice "tip" %}}
For a single server setup, recommended for new users, please see the [Get Started](/get-started) page.
{{% /notice %}}

## Install Dgraph
#### Docker

```sh
docker pull dgraph/dgraph:latest

# You can test that it worked fine, by running:
docker run -it dgraph/dgraph:latest dgraph
```

#### Automatic download

Running
```sh
curl https://get.dgraph.io -sSf | bash

# Test that it worked fine, by running:
dgraph
```
would install the `dgraph` binary into your system.

#### Manual download [optional]

If you don't want to follow the automatic installation method, you can manually download the appropriate tar for your platform from **[Dgraph releases](https://github.com/dgraph-io/dgraph/releases)**. After downloading the tar for your platform from GitHub, extract the binary to `/usr/local/bin` like so.

```sh
# For Linux
sudo tar -C /usr/local/bin -xzf dgraph-linux-amd64-VERSION.tar.gz

# For Mac
sudo tar -C /usr/local/bin -xzf dgraph-darwin-amd64-VERSION.tar.gz

# Test that it worked fine, by running:
dgraph
```

#### Building from Source

{{% notice "note" %}}
You can build the Ratel UI from source separately following its build
[instructions](https://github.com/dgraph-io/ratel/blob/master/INSTRUCTIONS.md).
Ratel UI is distributed via Dgraph releases using any of the download methods
listed above.
{{% /notice %}}

Make sure you have [Go](https://golang.org/dl/) v1.11+ installed.

You'll need the following dependencies to install Dgraph using `make`:
```bash
sudo apt-get update
sudo apt-get install gcc make
```

After installing Go, run
```sh
# This should install dgraph binary in your $GOPATH/bin.

git clone https://github.com/dgraph-io/dgraph.git
cd ./dgraph
make install
```

If you get errors related to `grpc` while building, your
`go-grpc` version might be outdated. We don't vendor in `go-grpc` (because it
causes issues while using the Go client). Update your `go-grpc` by running:
```sh
go get -u -v google.golang.org/grpc
```

#### Config

The full set of dgraph's configuration options (along with brief descriptions)
can be viewed by invoking dgraph with the `--help` flag. For example, to see
the options available for `dgraph alpha`, run `dgraph alpha --help`.

The options can be configured in multiple ways (from highest precedence to
lowest precedence):

- Using command line flags (as described in the help output).

- Using environment variables.

- Using a configuration file.

If no configuration for an option is used, then the default value as described
in the `--help` output applies.

Multiple configuration methods can be used at the same time. For example, a core
set of options could be set in a config file, and instance-specific options
could be set using environment variables or flags.

The environment variable names mirror the flag names as seen in the `--help`
output. They are the concatenation of `DGRAPH`, the subcommand invoked
(`ALPHA`, `ZERO`, `LIVE`, or `BULK`), and then the name of the flag (in
uppercase), joined by underscores. For example, instead of using
`dgraph alpha --lru_mb=8096`, you could use `DGRAPH_ALPHA_LRU_MB=8096 dgraph alpha`.

Configuration file formats supported are JSON, TOML, YAML, HCL, and Java
properties (detected via file extension).
The file extensions are `.json`, `.toml`,
`.yml` or `.yaml`, `.hcl`, and `.properties`, respectively.

A configuration file can be specified using the `--config` flag, or an
environment variable. For example, `dgraph zero --config my_config.json` or
`DGRAPH_ZERO_CONFIG=my_config.json dgraph zero`.

The config file structure is just simple key/value pairs (mirroring the flag
names).

Example JSON config file (config.json):

```json
{
  "my": "localhost:7080",
  "zero": "localhost:5080",
  "lru_mb": 4096,
  "postings": "/path/to/p",
  "wal": "/path/to/w"
}
```

Example TOML config file (config.toml):

```toml
my = "localhost:7080"
zero = "localhost:5080"
lru_mb = 4096
postings = "/path/to/p"
wal = "/path/to/w"
```

Example YAML config file (config.yml):

```yaml
my: "localhost:7080"
zero: "localhost:5080"
lru_mb: 4096
postings: "/path/to/p"
wal: "/path/to/w"
```

Example HCL config file (config.hcl):

```hcl
my = "localhost:7080"
zero = "localhost:5080"
lru_mb = 4096
postings = "/path/to/p"
wal = "/path/to/w"
```

Example Java properties config file (config.properties):
```text
my=localhost:7080
zero=localhost:5080
lru_mb=4096
postings=/path/to/p
wal=/path/to/w
```

## Cluster Setup

### Understanding Dgraph cluster

Dgraph is a truly distributed graph database, not a master-slave replication of
a universal dataset. It shards by predicate and replicates predicates across the
cluster. Queries can be run on any node, and joins are handled over the
distributed data. A query is resolved locally for predicates the node stores,
and via distributed joins for predicates stored on other nodes.

To run a Dgraph cluster effectively, it's important to understand how
sharding, replication and rebalancing work.

**Sharding**

Dgraph colocates data per predicate (*P*, in RDF terminology), thus the
smallest unit of data is one predicate. To shard the graph, one or many
predicates are assigned to a group. Each Alpha node in the cluster serves a
single group. Dgraph Zero assigns a group to each Alpha node.

**Shard rebalancing**

Dgraph Zero tries to rebalance the cluster based on the disk usage in each
group. If Zero detects an imbalance, it tries to move a predicate along with
its indices to the group that has minimum disk usage. This can make the predicate
temporarily read-only. Queries for the predicate will still be serviced, but any
mutations for the predicate will be rejected and should be retried after the
move is finished.

Zero continuously tries to keep the amount of data on each server even,
typically running this check every 10 minutes. Thus, each additional
Dgraph Alpha instance allows Zero to further split the predicates from
groups and move them to the new node.

**Consistent Replication**

If the `--replicas` flag is set to something greater than one, Zero assigns the
same group to multiple nodes. These nodes then form a Raft group, also known as a
quorum. Every write is consistently replicated to the quorum. To achieve
consensus, it's important that the size of the quorum be an odd number. Therefore, we
recommend setting `--replicas` to 1, 3 or 5 (not 2 or 4). This allows 0, 1, or 2
nodes serving the same group to be down, respectively, without affecting the
overall health of that group.

## Ports Usage

Dgraph cluster nodes use different ports to communicate over gRPC and HTTP. Choose these ports carefully based on your topology and deployment mode, as each port needs different access security rules or firewall settings.

### Types of ports

- **gRPC-internal:** Port that is used between the cluster nodes for internal communication and message exchange.
- **gRPC-external:** Port that is used by Dgraph clients, Dgraph Live Loader, and Dgraph Bulk Loader to access APIs over gRPC.
- **http-external:** Port that is used by clients to access APIs over HTTP and for other monitoring and administrative tasks.

### Ports used by different nodes

 Dgraph Node Type | gRPC-internal  | gRPC-external | HTTP-external
------------------|----------------|---------------|---------------
       zero       |  --Not Used--  |     5080      |     6080
       alpha      |      7080      |     9080      |     8080
       ratel      |  --Not Used--  | --Not Used--  |     8000

Users have to modify security rules or open the firewall, depending on their underlying network, to allow communication between cluster nodes and between a server and a client. During development, a general rule could be to open the *-external (gRPC/HTTP) ports to the public and to open gRPC-internal only within the cluster nodes.

**Ratel UI** accesses Dgraph Alpha on the HTTP-external port (default localhost:8080) and can be configured to talk to a remote Dgraph cluster. This way you can run Ratel on your local machine and point it to a remote cluster. But if you are deploying Ratel along with the Dgraph cluster, then you may have to expose 8000 to the public.

**Port Offset** To make it easier to set up the cluster, Dgraph defines default ports for Dgraph nodes and lets the user provide an offset (through the command option `--port_offset`) to define the actual ports used by the node. An offset can also be used when starting multiple Zero nodes in an HA setup.

For example, when a user runs a Dgraph Alpha with `--port_offset 2`, the Alpha node binds to 7082 (gRPC-internal), 8082 (HTTP-external) and 9082 (gRPC-external).

**Ratel UI** by default listens on port 8000. You can use the `-port` flag to make it listen on any other port.
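
The offset arithmetic can be sketched in a few lines of Python. This is only an illustration: the `DEFAULT_PORTS` table restates the Zero and Alpha defaults from the table above, and the function name `ports_with_offset` is hypothetical, not part of Dgraph.

```python
# Default ports restated from the table above, keyed by node type.
# The dictionary layout and key names are assumptions made for this sketch.
DEFAULT_PORTS = {
    "zero": {"grpc_external": 5080, "http_external": 6080},
    "alpha": {"grpc_internal": 7080, "http_external": 8080, "grpc_external": 9080},
}


def ports_with_offset(node_type: str, offset: int) -> dict:
    """Return the ports a node would bind to for a given --port_offset value."""
    return {name: port + offset for name, port in DEFAULT_PORTS[node_type].items()}


if __name__ == "__main__":
    # An Alpha started with --port_offset 2
    print(ports_with_offset("alpha", 2))
    # A second Zero started with --port_offset 1
    print(ports_with_offset("zero", 1))
```

A helper like this can be handy when generating firewall rules for several nodes that share a host.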

{{% notice "tip" %}}
**For Dgraph v1.0.2 (or older)**

Zero's default ports are 7080 and 8080. When following instructions for the different setup guides below, override the Zero ports using `--port_offset` to match the current default ports.

```sh
# Run Zero with ports 5080 and 6080
dgraph zero --idx=1 --port_offset -2000
# Run Zero with ports 5081 and 6081
dgraph zero --idx=2 --port_offset -1999
```
Likewise, Ratel's default port is 8081, so override it using `--port` to the current default port.

```sh
dgraph-ratel --port 8080
```
{{% /notice %}}

### HA Cluster Setup

In a high-availability setup, we need to run 3 or 5 replicas for Zero, and similarly, 3 or 5 replicas for Alpha.
{{% notice "note" %}}
If the number of replicas is 2K + 1, up to **K servers** can be down without any impact on reads or writes.

Avoid setting the number of replicas to 2K (an even number). If K servers go down, this would block reads and writes, due to lack of consensus.
{{% /notice %}}

**Dgraph Zero**
Run three Zero instances, assigning a unique integer ID to each via the `--idx` flag, and
passing the address of any healthy Zero instance via the `--peer` flag.

To run three replicas for the Alphas, set `--replicas=3`. Every time a new
Dgraph Alpha is added, Zero checks the existing groups and assigns it to
one that doesn't yet have three replicas.

**Dgraph Alpha**
Run as many Dgraph Alphas as you want. You can manually set the `--idx` flag, or you
can leave that flag empty, and Zero will auto-assign an ID to the Alpha. This
ID is persisted in the write-ahead log, so be careful not to delete it.

The new Alphas will automatically detect each other by communicating with
Dgraph Zero and establish connections to each other.
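
The 2K + 1 rule from the note above can be checked with a little arithmetic. The sketch below is an illustration only: the function names are hypothetical, and it models nothing more than a majority quorum, not Dgraph's actual Raft implementation.

```python
def quorum_size(replicas: int) -> int:
    """Smallest number of nodes that forms a majority of the group."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many nodes can be down while a majority is still reachable."""
    return replicas - quorum_size(replicas)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"replicas={n} quorum={quorum_size(n)} tolerated={tolerated_failures(n)}")
```

Under this model an even replica count adds a node without adding fault tolerance (4 replicas tolerate one failure, the same as 3), which is why 3 or 5 is preferred over 2 or 4.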

Typically, Zero first attempts to replicate a group, by assigning a new
Dgraph Alpha to run the same group as assigned to another. Once the group has
been replicated as per the `--replicas` flag, Zero creates a new group.

Over time, the data is evenly split across all the groups. So, it's
important to ensure that the number of Dgraph Alphas is a multiple of the
replication setting. For example, if you set `--replicas=3` in Zero, then run three
Dgraph Alphas for no sharding, but 3x replication. Run six Dgraph Alphas for
sharding the data into two groups, with 3x replication.

## Single Host Setup

### Run directly on the host

**Run dgraph zero**

```sh
dgraph zero --my=IPADDR:5080
```
The `--my` flag is the address that Dgraph Alphas dial to talk to
Zero. So, the port `5080` and the IP address must be visible to all the Dgraph Alphas.

For all other flags, run `dgraph zero --help`.

**Run dgraph alpha**

```sh
dgraph alpha --lru_mb=<typically one-third the RAM> --my=IPADDR:7080 --zero=localhost:5080
dgraph alpha --lru_mb=<typically one-third the RAM> --my=IPADDR:7081 --zero=localhost:5080 -o=1
```

Notice the use of `-o` for the second Alpha to add an offset to the default ports used. Zero automatically assigns a unique ID to each Alpha, which is persisted in the write-ahead log (WAL) directory; users can specify the index using the `--idx` option. Dgraph Alphas use two directories to persist data and
WAL logs, and these directories must be different for each Alpha if they are running on the same host. You can use `-p` and `-w` to change the location of the data and WAL directories. For all other flags, run `dgraph alpha --help`.

**Run dgraph UI**

```sh
dgraph-ratel
```

### Run using Docker

A Dgraph cluster can be set up running as containers on a single host.
First, you'd want to figure out the host IP address. You can typically do that via

```sh
ip addr  # On Arch Linux
ifconfig # On Ubuntu/Mac
```
We'll refer to the host IP address via `HOSTIPADDR`.

**Run dgraph zero**

```sh
mkdir ~/zero # Or any other directory where data should be stored.

docker run -it -p 5080:5080 -p 6080:6080 -v ~/zero:/dgraph dgraph/dgraph:latest dgraph zero --my=HOSTIPADDR:5080
```

**Run dgraph alpha**
```sh
mkdir ~/server1 # Or any other directory where data should be stored.

docker run -it -p 7080:7080 -p 8080:8080 -p 9080:9080 -v ~/server1:/dgraph dgraph/dgraph:latest dgraph alpha --lru_mb=<typically one-third the RAM> --zero=HOSTIPADDR:5080 --my=HOSTIPADDR:7080

mkdir ~/server2 # Or any other directory where data should be stored.

docker run -it -p 7081:7081 -p 8081:8081 -p 9081:9081 -v ~/server2:/dgraph dgraph/dgraph:latest dgraph alpha --lru_mb=<typically one-third the RAM> --zero=HOSTIPADDR:5080 --my=HOSTIPADDR:7081 -o=1
```
Notice the use of `-o` for server2 to offset its default ports.

**Run dgraph UI**
```sh
docker run -it -p 8000:8000 dgraph/dgraph:latest dgraph-ratel
```

### Run using Docker Compose (On single AWS instance)

We will use [Docker Machine](https://docs.docker.com/machine/overview/). It is a tool that lets you install Docker Engine on virtual machines and easily deploy applications.

* [Install Docker Machine](https://docs.docker.com/machine/install-machine/) on your machine.

{{% notice "note" %}}These instructions are for running Dgraph Alpha without TLS config.
For instructions on running with TLS, refer to the [TLS instructions](#tls-configuration).{{% /notice %}}

Here we'll go through an example of deploying Dgraph Zero, Alpha and Ratel on an AWS instance.

* Make sure you have Docker Machine installed by following the [instructions](https://docs.docker.com/machine/install-machine/). After that, provisioning an instance on AWS is just one step away. You'll have to [configure your AWS credentials](http://docs.aws.amazon.com/sdk-for-java/v1/developer-guide/setup-credentials.html) for programmatic access to the Amazon API.

* Create a new docker machine.

```sh
docker-machine create --driver amazonec2 aws01
```

Your output should look like

```sh
Running pre-create checks...
Creating machine...
(aws01) Launching instance...
...
...
Docker is up and running!
To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env aws01
```

The command provisions a `t2.micro` instance with a security group called `docker-machine`
(allowing inbound access on 2376 and 22). You can either edit the security group to allow inbound access to `5080`, `8080`, `9080` (default ports for Dgraph Zero & Alpha) or you can provide your own security
group which allows inbound access on ports 22, 2376 (required by Docker Machine), 5080, 8080 and 9080. Remember that port *5080* is only required if you are running Dgraph Live Loader or Dgraph Bulk Loader from outside.

[Here](https://docs.docker.com/machine/drivers/aws/#options) is the full list of options for the `amazonec2` driver, which allows you to choose the instance type, security group, and AMI, among many other things.

{{% notice "tip" %}}Docker machine supports [other drivers](https://docs.docker.com/machine/drivers/gce/) like GCE, Azure etc.{{% /notice %}}

* Install and run Dgraph using docker-compose

Docker Compose is a tool for running multi-container Docker applications. You can follow the
instructions [here](https://docs.docker.com/compose/install/) to install it.

Copy the file below into a directory on your machine and name it `docker-compose.yml`.

```yaml
version: "3.2"
services:
  zero:
    image: dgraph/dgraph:latest
    volumes:
      - /data:/dgraph
    ports:
      - 5080:5080
      - 6080:6080
    restart: on-failure
    command: dgraph zero --my=zero:5080
  server:
    image: dgraph/dgraph:latest
    volumes:
      - /data:/dgraph
    ports:
      - 8080:8080
      - 9080:9080
    restart: on-failure
    command: dgraph alpha --my=server:7080 --lru_mb=2048 --zero=zero:5080
  ratel:
    image: dgraph/dgraph:latest
    ports:
      - 8000:8000
    command: dgraph-ratel
```

{{% notice "note" %}}The config mounts `/data` (you could mount something else) on the instance to `/dgraph` within the
container for persistence.{{% /notice %}}

* Connect to the Docker Engine running on the machine.

Running `docker-machine env aws01` tells us to run the command below to configure
our shell.
```
eval $(docker-machine env aws01)
```
This configures our Docker client to talk to the Docker engine running on the AWS machine.

Finally, run the command below to start Zero, Alpha and Ratel.
```
docker-compose up -d
```
This starts 3 Docker containers running Dgraph Zero, Alpha and Ratel on the same machine. Docker restarts the Zero and Alpha containers if they exit with an error.
You can look at the logs using `docker-compose logs`.

## Multi Host Setup

### Using Docker Swarm

#### Cluster Setup Using Docker Swarm

{{% notice "note" %}}These instructions are for running Dgraph Alpha without TLS config.
For instructions on running with TLS, refer to the [TLS instructions](#tls-configuration).{{% /notice %}}

Here we'll go through an example of deploying 3 Dgraph Alpha nodes and 1 Zero on three different AWS instances using Docker Swarm with a replication factor of 3.

* Make sure you have Docker Machine installed by following the [instructions](https://docs.docker.com/machine/install-machine/).

```sh
docker-machine --version
```

* Create 3 instances on AWS and [install Docker Engine](https://docs.docker.com/engine/installation/) on them. This can be done manually or by using `docker-machine`.
You'll have to [configure your AWS credentials](http://docs.aws.amazon.com/sdk-for-java/v1/developer-guide/setup-credentials.html) to create the instances using Docker Machine.

Assuming that you have your AWS credentials set up, you can use the commands below to start 3 AWS
`t2.micro` instances with Docker Engine installed on them.

```sh
docker-machine create --driver amazonec2 aws01
docker-machine create --driver amazonec2 aws02
docker-machine create --driver amazonec2 aws03
```

Your output should look like

```sh
Running pre-create checks...
Creating machine...
(aws01) Launching instance...
...
...
Docker is up and running!
To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env aws01
```

Each command provisions a `t2.micro` instance with a security group called `docker-machine`
(allowing inbound access on 2376 and 22).

You need to edit the `docker-machine` security group to open inbound traffic on the following ports.

1. Allow all inbound traffic on all ports with Source being the `docker-machine`
   security group so that Docker related communication can happen easily.

2. Also open inbound TCP traffic on the following ports required by Dgraph:
   `5080`, `6080`, `8000`, `808[0-2]`, `908[0-2]`. Remember that port *5080* is only
   required if you are running Dgraph Live Loader or Dgraph Bulk Loader from
   outside. You need to open `7080` to enable Alpha-to-Alpha communication in
   case you have not opened all ports in #1.

If you are on AWS, below is the security group (**docker-machine**) after
necessary changes.

[Here](https://docs.docker.com/machine/drivers/aws/#options) is the full list of options for the `amazonec2` driver, which allows you to choose the
instance type, security group, and AMI, among many other
things.

{{% notice "tip" %}}Docker machine supports [other drivers](https://docs.docker.com/machine/drivers/gce/) like GCE, Azure etc.{{% /notice %}}

Running `docker-machine ls` shows all the AWS EC2 instances that we started.
```sh
➜  ~ docker-machine ls
NAME    ACTIVE   DRIVER      STATE     URL                         SWARM   DOCKER        ERRORS
aws01   -        amazonec2   Running   tcp://34.200.239.30:2376            v17.11.0-ce
aws02   -        amazonec2   Running   tcp://54.236.58.120:2376            v17.11.0-ce
aws03   -        amazonec2   Running   tcp://34.201.22.2:2376              v17.11.0-ce
```

* Start the Swarm

Docker Swarm has manager and worker nodes. Swarm can be started and updated on manager nodes. We
will set up `aws01` as the swarm manager. You can first run the following commands to initialize the
swarm.

We are going to use the internal IP address given by AWS. Run the following command to get the
internal IP for `aws01`. Let's assume `172.31.64.18` is the internal IP in this case.
```
docker-machine ssh aws01 ifconfig eth0
```

Now that we have the internal IP, let's initiate the Swarm.

```sh
# This configures our Docker client to talk to the Docker engine running on the aws01 host.
eval $(docker-machine env aws01)
docker swarm init --advertise-addr 172.31.64.18
```

Output:
```
Swarm initialized: current node (w9mpjhuju7nyewmg8043ypctf) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join \
    --token SWMTKN-1-1y7lba98i5jv9oscf10sscbvkmttccdqtkxg478g3qahy8dqvg-5r5cbsntc1aamsw3s4h3thvgk \
    172.31.64.18:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

Now we will make the other nodes join the swarm.

```sh
eval $(docker-machine env aws02)
docker swarm join \
    --token SWMTKN-1-1y7lba98i5jv9oscf10sscbvkmttccdqtkxg478g3qahy8dqvg-5r5cbsntc1aamsw3s4h3thvgk \
    172.31.64.18:2377
```

Output:
```
This node joined a swarm as a worker.
```

Similarly, for `aws03`:
```sh
eval $(docker-machine env aws03)
docker swarm join \
    --token SWMTKN-1-1y7lba98i5jv9oscf10sscbvkmttccdqtkxg478g3qahy8dqvg-5r5cbsntc1aamsw3s4h3thvgk \
    172.31.64.18:2377
```

On the Swarm manager `aws01`, verify that your swarm is running.
```sh
docker node ls
```

Output:
```sh
ID                            HOSTNAME            STATUS              AVAILABILITY        MANAGER STATUS
ghzapjsto20c6d6l3n0m91zev     aws02               Ready               Active
rb39d5lgv66it1yi4rto0gn6a     aws03               Ready               Active
waqdyimp8llvca9i09k4202x5 *   aws01               Ready               Active              Leader
```

* Start the Dgraph cluster

Copy the following file to your host machine and name it `docker-compose.yml`.

```yaml
version: "3"
networks:
  dgraph:
services:
  zero:
    image: dgraph/dgraph:latest
    volumes:
      - data-volume:/dgraph
    ports:
      - 5080:5080
      - 6080:6080
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws01
    command: dgraph zero --my=zero:5080 --replicas 3
  alpha_1:
    image: dgraph/dgraph:latest
    hostname: "alpha_1"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8080:8080
      - 9080:9080
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws01
    command: dgraph alpha --my=alpha_1:7080 --lru_mb=2048 --zero=zero:5080
  alpha_2:
    image: dgraph/dgraph:latest
    hostname: "alpha_2"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8081:8081
      - 9081:9081
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws02
    command: dgraph alpha --my=alpha_2:7081 --lru_mb=2048 --zero=zero:5080 -o 1
  alpha_3:
    image: dgraph/dgraph:latest
    hostname: "alpha_3"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8082:8082
      - 9082:9082
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws03
    command: dgraph alpha --my=alpha_3:7082 --lru_mb=2048 --zero=zero:5080 -o 2
  ratel:
    image: dgraph/dgraph:latest
    hostname: "ratel"
    ports:
      - 8000:8000
    networks:
      - dgraph
    command: dgraph-ratel
volumes:
  data-volume:
```
Run the following command on the Swarm leader to deploy the Dgraph Cluster.

```sh
eval $(docker-machine env aws01)
docker stack deploy -c docker-compose.yml dgraph
```

This should run three Dgraph Alpha services (one on each VM because of the
constraint we have), one Dgraph Zero service on aws01 and one Dgraph Ratel.

These placement constraints (as seen in the compose file) are important so that
when any containers restart, swarm places the respective Dgraph Alpha
or Zero containers on the same hosts to re-use the volumes. Also, if you are
running fewer than three hosts, make sure you use either different volumes or
run Dgraph Alpha with `-p p1 -w w1` options.

{{% notice "note" %}}

1. This setup would create and use a local volume called `dgraph_data-volume` on
   the instances. If you plan to replace instances, you should use remote
   storage like
   [cloudstore](https://docs.docker.com/docker-for-aws/persistent-data-volumes)
   instead of local disk.
{{% /notice %}}

You can verify that all services were created successfully by running:

```sh
docker service ls
```

Output:
```
ID                NAME               MODE            REPLICAS      IMAGE                     PORTS
vp5bpwzwawoe      dgraph_ratel       replicated      1/1           dgraph/dgraph:latest      *:8000->8000/tcp
69oge03y0koz      dgraph_alpha_2     replicated      1/1           dgraph/dgraph:latest      *:8081->8081/tcp,*:9081->9081/tcp
kq5yks92mnk6      dgraph_alpha_3     replicated      1/1           dgraph/dgraph:latest      *:8082->8082/tcp,*:9082->9082/tcp
uild5cqp44dz      dgraph_zero        replicated      1/1           dgraph/dgraph:latest      *:5080->5080/tcp,*:6080->6080/tcp
v9jlw00iz2gg      dgraph_alpha_1     replicated      1/1           dgraph/dgraph:latest      *:8080->8080/tcp,*:9080->9080/tcp
```

To stop the cluster, run

```
docker stack rm dgraph
```

### HA Cluster setup using Docker Swarm

Here is a sample swarm config for running 6 Dgraph Alpha nodes and 3 Zero nodes on 6 different
EC2 instances. Setup should be similar to [Cluster setup using Docker Swarm]({{< relref "#cluster-setup-using-docker-swarm" >}}) apart from a couple of differences. This setup ensures replication with sharding of data. The file assumes that there are six hosts available as docker-machines. Also, if you are running on fewer than six hosts, make sure you use either different volumes or run Dgraph Alpha with `-p p1 -w w1` options.

You need to edit the `docker-machine` security group to open inbound traffic on the following ports.

1. Allow all inbound traffic on all ports with Source being the `docker-machine` security group so that
   Docker related communication can happen easily.

2. Also open inbound TCP traffic on the following ports required by Dgraph: `5080`, `8000`, `808[0-5]`, `908[0-5]`. Remember that port *5080* is only required if you are running Dgraph Live Loader or Dgraph Bulk Loader from outside. You need to open `7080` to enable Alpha-to-Alpha communication in case you have not opened all ports in #1.

If you are on AWS, below is the security group (**docker-machine**) after necessary changes.

Copy the following file to your host machine and name it `docker-compose.yml`.

```yaml
version: "3"
networks:
  dgraph:
services:
  zero_1:
    image: dgraph/dgraph:latest
    volumes:
      - data-volume:/dgraph
    ports:
      - 5080:5080
      - 6080:6080
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws01
    command: dgraph zero --my=zero_1:5080 --replicas 3 --idx 1
  zero_2:
    image: dgraph/dgraph:latest
    volumes:
      - data-volume:/dgraph
    ports:
      - 5081:5081
      - 6081:6081
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws02
    command: dgraph zero -o 1 --my=zero_2:5081 --replicas 3 --peer zero_1:5080 --idx 2
  zero_3:
    image: dgraph/dgraph:latest
    volumes:
      - data-volume:/dgraph
    ports:
      - 5082:5082
      - 6082:6082
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws03
    command: dgraph zero -o 2 --my=zero_3:5082 --replicas 3 --peer zero_1:5080 --idx 3
  alpha_1:
    image: dgraph/dgraph:latest
    hostname: "alpha_1"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8080:8080
      - 9080:9080
    networks:
      - dgraph
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == aws01
    command: dgraph alpha --my=alpha_1:7080 --lru_mb=2048 --zero=zero_1:5080
  alpha_2:
    image: dgraph/dgraph:latest
    hostname: "alpha_2"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8081:8081
      - 9081:9081
    networks:
      - dgraph
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == aws02
    command: dgraph alpha --my=alpha_2:7081 --lru_mb=2048 --zero=zero_1:5080 -o 1
  alpha_3:
    image: dgraph/dgraph:latest
    hostname: "alpha_3"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8082:8082
      - 9082:9082
    networks:
      - dgraph
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.hostname == aws03
    command: dgraph alpha --my=alpha_3:7082 --lru_mb=2048 --zero=zero_1:5080 -o 2
  alpha_4:
    image: dgraph/dgraph:latest
    hostname: "alpha_4"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8083:8083
      - 9083:9083
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws04
    command: dgraph alpha --my=alpha_4:7083 --lru_mb=2048 --zero=zero_1:5080 -o 3
  alpha_5:
    image: dgraph/dgraph:latest
    hostname: "alpha_5"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8084:8084
      - 9084:9084
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws05
    command: dgraph alpha --my=alpha_5:7084 --lru_mb=2048 --zero=zero_1:5080 -o 4
  alpha_6:
    image: dgraph/dgraph:latest
    hostname: "alpha_6"
    volumes:
      - data-volume:/dgraph
    ports:
      - 8085:8085
      - 9085:9085
    networks:
      - dgraph
    deploy:
      placement:
        constraints:
          - node.hostname == aws06
    command: dgraph alpha --my=alpha_6:7085 --lru_mb=2048 --zero=zero_1:5080 -o 5
  ratel:
    image: dgraph/dgraph:latest
    hostname: "ratel"
    ports:
      - 8000:8000
    networks:
      - dgraph
    command: dgraph-ratel
volumes:
  data-volume:
```
{{% notice "note" %}}
1. This setup assumes that you are using 6 hosts. If you are running fewer than 6 hosts, then you have to either use different volumes between Dgraph Alphas or use `-p` & `-w` to configure data directories.
2. This setup would create and use a local volume called `dgraph_data-volume` on the instances. If you plan to replace instances, you should use remote storage like [cloudstore](https://docs.docker.com/docker-for-aws/persistent-data-volumes) instead of local disk.
{{% /notice %}}

## Using Kubernetes (v1.8.4)

{{% notice "note" %}}These instructions are for running Dgraph Alpha without TLS config.
For instructions on running with TLS, refer to the [TLS instructions](#tls-configuration).{{% /notice %}}

* Install [kubectl](https://kubernetes.io/docs/tasks/tools/install-kubectl/), which is used to deploy
  and manage applications on Kubernetes.
* Get the Kubernetes cluster up and running on a cloud provider of your choice. You can use [kops](https://github.com/kubernetes/kops/blob/master/docs/aws.md) to set it up on AWS. Kops does auto-scaling by default on AWS and creates the volumes and instances for you.

Verify that you have your cluster up and running using `kubectl get nodes`. If you used `kops` with
the default options, you should have a master and two worker nodes ready.

```sh
➜  kubernetes git:(master) ✗ kubectl get nodes
NAME                                          STATUS    ROLES     AGE       VERSION
ip-172-20-42-118.us-west-2.compute.internal   Ready     node      1h        v1.8.4
ip-172-20-61-179.us-west-2.compute.internal   Ready     master    2h        v1.8.4
ip-172-20-61-73.us-west-2.compute.internal    Ready     node      2h        v1.8.4
```

### Single Server

Once your Kubernetes cluster is up, you can use [dgraph-single.yaml](https://github.com/dgraph-io/dgraph/blob/master/contrib/config/kubernetes/dgraph-single/dgraph-single.yaml) to start a Zero and Alpha.

* From your machine, run the following command to start a StatefulSet that
  creates a Pod with Zero and Alpha running in it.

```sh
kubectl create -f https://raw.githubusercontent.com/dgraph-io/dgraph/master/contrib/config/kubernetes/dgraph-single/dgraph-single.yaml
```

Output:
```
service "dgraph-public" created
statefulset "dgraph" created
```

* Confirm that the pod was created successfully.
938 939 ```sh 940 kubectl get pods 941 ``` 942 943 Output: 944 ``` 945 NAME READY STATUS RESTARTS AGE 946 dgraph-0 3/3 Running 0 1m 947 ``` 948 949 {{% notice "tip" %}}You can check the logs for the containers in the pod using `kubectl logs -f dgraph-0 <container_name>`. For example, try `kubectl logs -f dgraph-0 alpha` for server logs.{{% /notice %}} 950 951 * Test the setup 952 953 Port forward from your local machine to the pod 954 955 ```sh 956 kubectl port-forward dgraph-0 8080 957 kubectl port-forward dgraph-0 8000 958 ``` 959 960 Go to `http://localhost:8000` and verify Dgraph is working as expected. 961 962 {{% notice "note" %}} You can also access the service on its External IP address.{{% /notice %}} 963 964 965 * Stop the cluster 966 967 Delete all the resources 968 969 ```sh 970 kubectl delete pods,statefulsets,services,persistentvolumeclaims,persistentvolumes -l app=dgraph 971 ``` 972 973 Stop the cluster. If you used `kops` you can run the following command. 974 975 ```sh 976 kops delete cluster ${NAME} --yes 977 ``` 978 979 ### HA Cluster Setup Using Kubernetes 980 981 This setup allows you to run 3 Dgraph Alphas and 3 Dgraph Zeros. We start Zero with `--replicas 982 3` flag, so all data would be replicated on 3 Alphas and form 1 alpha group. 983 984 {{% notice "note" %}} Ideally you should have at least three worker nodes as part of your Kubernetes 985 cluster so that each Dgraph Alpha runs on a separate node.{{% /notice %}} 986 987 * Check the nodes that are part of the Kubernetes cluster. 
988 989 ```sh 990 kubectl get nodes 991 ``` 992 993 Output: 994 ```sh 995 NAME STATUS ROLES AGE VERSION 996 ip-172-20-34-90.us-west-2.compute.internal Ready master 6m v1.8.4 997 ip-172-20-51-1.us-west-2.compute.internal Ready node 4m v1.8.4 998 ip-172-20-59-116.us-west-2.compute.internal Ready node 4m v1.8.4 999 ip-172-20-61-88.us-west-2.compute.internal Ready node 5m v1.8.4 1000 ``` 1001 1002 Once your Kubernetes cluster is up, you can use [dgraph-ha.yaml](https://github.com/dgraph-io/dgraph/blob/master/contrib/config/kubernetes/dgraph-ha/dgraph-ha.yaml) to start the cluster. 1003 1004 * From your machine, run the following command to start the cluster. 1005 1006 ```sh 1007 kubectl create -f https://raw.githubusercontent.com/dgraph-io/dgraph/master/contrib/config/kubernetes/dgraph-ha/dgraph-ha.yaml 1008 ``` 1009 1010 Output: 1011 ```sh 1012 service "dgraph-zero-public" created 1013 service "dgraph-alpha-public" created 1014 service "dgraph-alpha-0-http-public" created 1015 service "dgraph-ratel-public" created 1016 service "dgraph-zero" created 1017 service "dgraph-alpha" created 1018 statefulset "dgraph-zero" created 1019 statefulset "dgraph-alpha" created 1020 deployment "dgraph-ratel" created 1021 ``` 1022 1023 * Confirm that the pods were created successfully. 
1024 1025 ```sh 1026 kubectl get pods 1027 ``` 1028 1029 Output: 1030 ```sh 1031 NAME READY STATUS RESTARTS AGE 1032 dgraph-ratel-<pod-id> 1/1 Running 0 9s 1033 dgraph-alpha-0 1/1 Running 0 2m 1034 dgraph-alpha-1 1/1 Running 0 2m 1035 dgraph-alpha-2 1/1 Running 0 2m 1036 dgraph-zero-0 1/1 Running 0 2m 1037 dgraph-zero-1 1/1 Running 0 2m 1038 dgraph-zero-2 1/1 Running 0 2m 1039 1040 ``` 1041 1042 {{% notice "tip" %}}You can check the logs for the containers in the pod using `kubectl logs -f dgraph-alpha-0` and `kubectl logs -f dgraph-zero-0`.{{% /notice %}} 1043 1044 * Test the setup 1045 1046 Port forward from your local machine to the pod 1047 1048 ```sh 1049 kubectl port-forward dgraph-alpha-0 8080 1050 kubectl port-forward dgraph-ratel-<pod-id> 8000 1051 ``` 1052 1053 Go to `http://localhost:8000` and verify Dgraph is working as expected. 1054 1055 {{% notice "note" %}} You can also access the service on its External IP address.{{% /notice %}} 1056 1057 1058 * Stop the cluster 1059 1060 Delete all the resources 1061 1062 ```sh 1063 kubectl delete pods,statefulsets,services,persistentvolumeclaims,persistentvolumes -l app=dgraph-zero 1064 kubectl delete pods,statefulsets,services,persistentvolumeclaims,persistentvolumes -l app=dgraph-alpha 1065 kubectl delete pods,replicasets,services,persistentvolumeclaims,persistentvolumes -l app=dgraph-ratel 1066 ``` 1067 1068 Stop the cluster. If you used `kops` you can run the following command. 1069 1070 ```sh 1071 kops delete cluster ${NAME} --yes 1072 ``` 1073 1074 ### Kubernetes Storage 1075 1076 The Kubernetes configurations in the previous sections were configured to run 1077 Dgraph with any storage type (`storage-class: anything`). On the common cloud 1078 environments like AWS, GCP, and Azure, the default storage type are slow disks 1079 like hard disks or low IOPS SSDs. We highly recommend using faster disks for 1080 ideal performance when running Dgraph. 

#### Local storage

The AWS storage-optimized i-class instances provide locally attached NVMe-based
SSD storage, which provides consistently very high IOPS. The Dgraph team uses
i3.large instances on AWS to test Dgraph.

You can create a Kubernetes `StorageClass` object to provision a specific type
of storage volume which you can then attach to your Dgraph pods. You can set up
your cluster with local SSDs by using [Local Persistent
Volumes](https://kubernetes.io/blog/2018/04/13/local-persistent-volumes-beta/).
This Kubernetes feature is in beta at the time of this writing (Kubernetes
v1.13.1). You can first set up an EC2 instance with locally attached storage.
Once it is formatted and mounted properly, you can create a StorageClass to
access it:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: <your-local-storage-class-name>
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

Currently, Kubernetes does not allow automatic provisioning of local storage, so
a PersistentVolume with a specific mount path should be created:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: <your-local-pv-name>
spec:
  capacity:
    storage: 475Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: <your-local-storage-class-name>
  local:
    path: /data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - <node-name>
```

Then, in the StatefulSet configuration you can claim this local storage in
`.spec.volumeClaimTemplates`:

```
kind: StatefulSet
...
 volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: <your-local-storage-class-name>
      resources:
        requests:
          storage: 500Gi
```

You can repeat these steps for each instance that's configured with local
node storage.

#### Non-local persistent disks

EBS volumes on AWS and PDs on GCP are persistent disks that can be configured
with Dgraph. The disk performance is much lower than locally attached storage
but can be sufficient for some workloads, such as testing environments.

When using EBS volumes on AWS, we recommend using Provisioned IOPS SSD EBS
volumes (the io1 disk type) which provide consistent IOPS. The available IOPS
for AWS EBS volumes is based on the total disk size. With Kubernetes, you can
request io1 disks to be provisioned with 50 IOPS/GB using the `iopsPerGB`
parameter:

```
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: <your-storage-class-name>
provisioner: kubernetes.io/aws-ebs
parameters:
  type: io1
  iopsPerGB: "50"
  fsType: ext4
```

Example: Requesting a disk size of 250Gi with this storage class would provide
12.5K IOPS.

### Removing a Dgraph Pod

In the event that you need to completely remove a pod (e.g., its disk got
corrupted and data cannot be recovered), you can use the `/removeNode` API to
remove the node from the cluster. With a Kubernetes StatefulSet, you'll need to
remove the node in this order:

1. Call `/removeNode` to remove the Dgraph instance from the cluster (see [More
   about Dgraph Zero]({{< relref "#more-about-dgraph-zero" >}})). The removed
   instance will immediately stop running. Any further attempts to join the
   cluster will fail for that instance since it has been removed.
2. Remove the PersistentVolumeClaim associated with the pod to delete its data.
   This prepares the pod to join with a clean state.
3. Restart the pod. This will create a new PersistentVolumeClaim to create new
   data directories.

When an Alpha pod restarts in a replicated cluster, it will join as a new member
of the cluster, be assigned a group and an unused index from Zero, and receive
the latest snapshot from the Alpha leader of the group.

When a Zero pod restarts, it must join the existing group with an unused index
ID. The index ID is set with the `--idx` flag. This may require the StatefulSet
configuration to be updated.

### Kubernetes and Bulk Loader

You may want to initialize a new cluster with an existing data set such as data
from the [Dgraph Bulk Loader]({{< relref "#bulk-loader" >}}). You can use [Init
Containers](https://kubernetes.io/docs/concepts/workloads/pods/init-containers/)
to copy the data to the pod volume before the Alpha process runs.

See the `initContainers` configuration in
[dgraph-ha.yaml](https://github.com/dgraph-io/dgraph/blob/master/contrib/config/kubernetes/dgraph-ha/dgraph-ha.yaml)
to learn more.

## More about Dgraph Alpha

On its HTTP port, a Dgraph Alpha exposes a number of admin endpoints.

* `/health` returns HTTP status code 200 if the worker is running, HTTP 503 otherwise.
* `/admin/shutdown` initiates a proper [shutdown]({{< relref "#shutdown">}}) of the Alpha.
* `/admin/export` initiates a data [export]({{< relref "#export">}}).

By default the Alpha listens on `localhost` for admin actions (the loopback address only accessible from the same machine). The `--bindall=true` option binds to `0.0.0.0` and thus allows external connections.
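
The `/health` endpoint lends itself to simple scripted checks. The following is a minimal sketch, not part of Dgraph: `alpha_health_url` and `alpha_is_healthy` are hypothetical helper names, and the sketch assumes the Alpha serves HTTP on port 8080 plus any port offset passed with `-o`.

```sh
# Build the /health URL for an Alpha.
# Assumption: the Alpha HTTP port is 8080 plus the port offset (default 0).
alpha_health_url() {
  host="$1"
  offset="${2:-0}"
  echo "http://${host}:$((8080 + offset))/health"
}

# Succeed (exit status 0) only if the Alpha answers /health with HTTP 200.
alpha_is_healthy() {
  code="$(curl -s -o /dev/null -w '%{http_code}' "$(alpha_health_url "$@")")"
  [ "$code" = "200" ]
}
```

For example, `alpha_is_healthy localhost 1 && echo up` would probe an Alpha started with `-o 1` on the local machine. Probing from another machine only works if the Alpha was started with `--bindall=true`.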

{{% notice "tip" %}}Set max file descriptors to a high value like 10000 if you are going to load a lot of data.{{% /notice %}}

## More about Dgraph Zero

Dgraph Zero controls the Dgraph cluster. It automatically moves data between
different Dgraph Alpha instances based on the size of the data served by each Alpha instance.

It is mandatory to run at least one `dgraph zero` node before running any `dgraph alpha`.
Options present for `dgraph zero` can be seen by running `dgraph zero --help`.

* Zero stores information about the cluster.
* `--replicas` is the option that controls the replication factor (i.e. the number of replicas per data shard, including the original shard).
* When a new Alpha joins the cluster, it is assigned a group based on the replication factor. If the replication factor is 1, then each Alpha node will serve a different group. If the replication factor is 2 and you launch 4 Alphas, then the first two Alphas would serve group 1 and the next two Alphas would serve group 2.
* Zero also monitors the space occupied by predicates in each group and moves them around to rebalance the cluster.

Like Alpha, Zero also exposes HTTP on 6080 (+ any `--port_offset`). You can query (**GET** request) it
to see useful information, like the following:

* `/state` Information about the nodes that are part of the cluster. Also contains information about
  size of predicates and groups they belong to.
* `/assign?what=uids&num=100` This would allocate `num` uids and return a JSON map
  containing `startId` and `endId`, both inclusive. This id range can be safely assigned
  externally to new nodes during data ingestion.
* `/assign?what=timestamps&num=100` This would request timestamps from Zero.
  This is useful to fast forward Zero state when starting from a postings
  directory, which already has commits higher than Zero's leased timestamp.
* `/removeNode?id=3&group=2` If a replica goes down and can't be recovered, you
  can remove it and add a new node to the quorum. This endpoint can be used to
  remove a dead Zero or Dgraph Alpha node. To remove dead Zero nodes, pass
  `group=0` and the id of the Zero node.

{{% notice "note" %}}
Before using the API, ensure that the node is down and that it never comes back up again.

You should not reuse the `idx` of a node that was removed earlier.
{{% /notice %}}

* `/moveTablet?tablet=name&group=2` This endpoint can be used to move a tablet to a group. Zero
  already does shard rebalancing every 8 mins; this endpoint can be used to force move a tablet.


These are the **POST** endpoints available:

* `/enterpriseLicense` Use this endpoint to apply an enterprise license to the cluster by supplying it
  as part of the body.



## TLS configuration

{{% notice "note" %}}
This section refers to the `dgraph cert` command which was introduced in v1.0.9. For previous releases, see the previous [TLS configuration documentation](https://docs.dgraph.io/v1.0.7/deploy/#tls-configuration).
{{% /notice %}}


Connections between client and server can be secured with TLS. Password protected private keys are **not supported**.

{{% notice "tip" %}}If you're generating encrypted private keys with `openssl`, be sure to specify the encryption algorithm explicitly (like `-aes256`). This will force `openssl` to include the `DEK-Info` header in the private key, which Dgraph requires to decrypt the key. When default encryption is used, `openssl` doesn't write that header and the key can't be decrypted.{{% /notice %}}

### Self-signed certificates

The `dgraph cert` program creates and manages self-signed certificates using a generated Dgraph Root CA. The _cert_ command simplifies certificate management for you.

```sh
# To see the available flags.
$ dgraph cert --help

# Create Dgraph Root CA, used to sign all other certificates.
$ dgraph cert

# Create node certificate (needed for Dgraph Live Loader using TLS)
$ dgraph cert -n live

# Create client certificate
$ dgraph cert -c dgraphuser

# Combine all in one command
$ dgraph cert -n live -c dgraphuser

# List all your certificates and keys
$ dgraph cert ls
```

### File naming conventions

To enable TLS you must specify the directory path to find certificates and keys. The default location where the _cert_ command stores certificates (and keys) is `tls` under the Dgraph working directory, where the data files are found. The default dir path can be overridden using the `--dir` option.

```sh
$ dgraph cert --dir ~/mycerts
```

The following file naming conventions are used by Dgraph for proper TLS setup.

| File name | Description | Use |
|-----------|-------------|-------|
| ca.crt | Dgraph Root CA certificate | Verify all certificates |
| ca.key | Dgraph CA private key | Validate CA certificate |
| node.crt | Dgraph node certificate | Shared by all nodes for accepting TLS connections |
| node.key | Dgraph node private key | Validate node certificate |
| client._name_.crt | Dgraph client certificate | Authenticate a client _name_ |
| client._name_.key | Dgraph client private key | Validate _name_ client certificate |

The Root CA certificate is used for verifying node and client certificates; if it is changed, you must regenerate all certificates.

For client authentication, each client must have their own certificate and key. These are then used to connect to the Dgraph node(s).

The node certificate `node.crt` can support multiple node names using multiple host names and/or IP addresses. Just separate the names with commas when generating the certificate.

```sh
$ dgraph cert -n localhost,104.25.165.23,dgraph.io,2400:cb00:2048:1::6819:a417
```

{{% notice "tip" %}}You must delete the old node cert and key before you can generate a new pair.{{% /notice %}}

{{% notice "note" %}}When using host names for node certificates, including _localhost_, your clients must connect to the matching host name -- such as _localhost_ not 127.0.0.1. If you need to use IP addresses, then add them to the node certificate.{{% /notice %}}

### Certificate inspection

The command `dgraph cert ls` lists all certificates and keys in the `--dir` directory (default 'tls'), along with details to inspect and validate cert/key pairs.

Example of command output:

```sh
-rw-r--r-- ca.crt - Dgraph Root CA certificate
        Issuer: Dgraph Labs, Inc.
           S/N: 043c4d8fdd347f06
    Expiration: 02 Apr 29 16:56 UTC
SHA-256 Digest: 4A2B0F0F 716BF5B6 C603E01A 6229D681 0B2AFDC5 CADF5A0D 17D59299 116119E5

-r-------- ca.key - Dgraph Root CA key
SHA-256 Digest: 4A2B0F0F 716BF5B6 C603E01A 6229D681 0B2AFDC5 CADF5A0D 17D59299 116119E5

-rw-r--r-- client.admin.crt - Dgraph client certificate: admin
        Issuer: Dgraph Labs, Inc.
     CA Verify: PASSED
           S/N: 297e4cb4f97c71f9
    Expiration: 03 Apr 24 17:29 UTC
SHA-256 Digest: D23EFB61 DE03C735 EB07B318 DB70D471 D3FE8556 B15D084C 62675857 788DF26C

-rw------- client.admin.key - Dgraph Client key
SHA-256 Digest: D23EFB61 DE03C735 EB07B318 DB70D471 D3FE8556 B15D084C 62675857 788DF26C

-rw-r--r-- node.crt - Dgraph Node certificate
        Issuer: Dgraph Labs, Inc.
     CA Verify: PASSED
           S/N: 795ff0e0146fdb2d
    Expiration: 03 Apr 24 17:00 UTC
         Hosts: 104.25.165.23, 2400:cb00:2048:1::6819:a417, localhost, dgraph.io
SHA-256 Digest: 7E243ED5 3286AE71 B9B4E26C 5B2293DA D3E7F336 1B1AFFA7 885E8767 B1A84D28

-rw------- node.key - Dgraph Node key
SHA-256 Digest: 7E243ED5 3286AE71 B9B4E26C 5B2293DA D3E7F336 1B1AFFA7 885E8767 B1A84D28
```

Important points:

* The cert/key pairs should always have matching SHA-256 digests. Otherwise, the cert(s) must be
  regenerated. If the Root CA pair differ, all cert/key must be regenerated; the flag `--force`
  can help.
* All certificates must pass Dgraph CA verification.
* All key files should have the least access permissions, especially the `ca.key`, but be readable.
* Key files won't be overwritten if they have limited access, even with `--force`.
* Node certificates are only valid for the hosts listed.
* Client certificates are only valid for the named client/user.

### TLS options

The following configuration options are available for Alpha:

* `--tls_dir string` - TLS dir path; this enables TLS connections (usually 'tls').
* `--tls_use_system_ca` - Include System CA with Dgraph Root CA.
* `--tls_client_auth string` - TLS client authentication used to validate client connection. See [Client authentication](#client-authentication) for details.

```sh
# Default use for enabling TLS server (after generating certificates)
$ dgraph alpha --tls_dir tls
```

Dgraph Live Loader can be configured with the following options:

* `--tls_dir string` - TLS dir path; this enables TLS connections (usually 'tls').
* `--tls_use_system_ca` - Include System CA with Dgraph Root CA.
* `--tls_server_name string` - Server name, used for validating the server's TLS host name.

```sh
# First, create a client certificate for live loader. This will create 'tls/client.live.crt'
$ dgraph cert -c live

# Now, connect to server using TLS
$ dgraph live --tls_dir tls -s 21million.schema -f 21million.rdf.gz
```

### Client authentication

The server option `--tls_client_auth` accepts different values that change the security policy of client certificate verification.

| Value | Description |
|-------|-------------|
| REQUEST | Server accepts any certificate, invalid and unverified (least secure) |
| REQUIREANY | Server expects any certificate, valid and unverified |
| VERIFYIFGIVEN | Client certificate is verified if provided (default) |
| REQUIREANDVERIFY | Always require a valid certificate (most secure) |

{{% notice "note" %}}REQUIREANDVERIFY is the most secure but also the most difficult to configure for remote clients. When using this value, the value of `--tls_server_name` is matched against the certificate SANs values and the connection host.{{% /notice %}}

## Cluster Checklist

When setting up a cluster, be sure to check the following.

* Is at least one Dgraph Zero node running?
* Is each Dgraph Alpha instance in the cluster set up correctly?
* Will each Dgraph Alpha instance be accessible to all peers on 7080 (+ any port offset)?
* Does each instance have a unique ID on startup?
* Has `--bindall=true` been set for networked communication?

## Fast Data Loading

There are two different tools that can be used for fast data loading:

- `dgraph live` runs the Dgraph Live Loader
- `dgraph bulk` runs the Dgraph Bulk Loader

{{% notice "note" %}} Both tools only accept [RDF N-Quad/Triple
data](https://www.w3.org/TR/n-quads/) or JSON in plain or gzipped format. Data
in other formats must be converted.{{% /notice %}}

### Live Loader

Dgraph Live Loader (run with `dgraph live`) is a small helper program which reads RDF N-Quads from a gzipped file, batches them up, creates mutations (using the Go client) and sends them to Dgraph.

Dgraph Live Loader correctly handles assigning unique IDs to blank nodes across multiple files, and can optionally persist them to disk to save memory, in case the loader is re-run.

{{% notice "note" %}} Dgraph Live Loader can optionally write the xid->uid mapping to a directory specified using the `-x` flag, which can be reused,
provided that Live Loader completed successfully in the previous run.{{% /notice %}}

```sh
$ dgraph live --help # To see the available flags.

# Read RDFs or JSON from the passed file, and send them to Dgraph on localhost:9080.
$ dgraph live -f <path-to-gzipped-RDF-or-JSON-file>

# Read multiple RDFs or JSON from the passed path, and send them to Dgraph on localhost:9080.
$ dgraph live -f <./path-to-gzipped-RDF-or-JSON-files>

# Read multiple files strictly by name.
$ dgraph live -f <file1.rdf, file2.rdf>

# Use compressed gRPC connections to and from Dgraph.
$ dgraph live -C -f <path-to-gzipped-RDF-or-JSON-file>

# Read RDFs and a schema file and send to Dgraph running at given address.
$ dgraph live -f <path-to-gzipped-RDF-or-JSON-file> -s <path-to-schema-file> -a <dgraph-alpha-address:grpc_port> -z <dgraph-zero-address:grpc_port>
```

#### Other Live Loader options

`--new_uids` (default: false): Assign new UIDs instead of using the existing
UIDs in data files. This is useful to avoid overriding the data in a DB already
in operation.

`-f, --files`: Location of *.rdf(.gz) or *.json(.gz) file(s) to load. It can
load multiple files in a given path. If the path is a directory, then all files
ending in .rdf, .rdf.gz, .json, and .json.gz will be loaded.

`--format`: Specify file format (rdf or json) instead of getting it from
filenames. This is useful if you need to define a strict format manually.

`-b, --batch` (default: 1000): Number of N-Quads to send as part of a mutation.

`-c, --conc` (default: 10): Number of concurrent requests to make to Dgraph.
Do not confuse with `-C`.

`-C, --use_compression` (default: false): Enable compression for connections to and from the
Alpha server.

`-a, --alpha` (default: `localhost:9080`): Dgraph Alpha gRPC server address to connect to for live loading. This can be a comma-separated list of Alpha addresses in the same cluster to distribute the load, e.g., `"alpha:grpc_port,alpha2:grpc_port,alpha3:grpc_port"`.

### Bulk Loader

{{% notice "note" %}}
It's crucial to tune the bulk loader's flags to get good performance. See the
section below for details.
{{% /notice %}}

Dgraph Bulk Loader serves a similar purpose to the Dgraph Live Loader, but can
only be used to load data into a new cluster. It cannot be run on an existing
Dgraph cluster. Dgraph Bulk Loader is **considerably faster** than the Dgraph
Live Loader and is the recommended way to perform the initial import of large
datasets into Dgraph.

Only one or more Dgraph Zeros should be running for bulk loading. Dgraph Alphas
will be started later.

{{% notice "warning" %}}
Don't use bulk loader once the Dgraph cluster is up and running. Use it to import
your existing data to a new cluster.
{{% /notice %}}

You can [read some technical details](https://blog.dgraph.io/post/bulkloader/)
about the bulk loader on the blog.

See [Fast Data Loading]({{< relref "#fast-data-loading" >}}) for more info about
the expected N-Quads format.
1530 1531 **Reduce shards**: Before running the bulk load, you need to decide how many 1532 Alpha groups will be running when the cluster starts. The number of Alpha groups 1533 will be the same number of reduce shards you set with the `--reduce_shards` 1534 flag. For example, if your cluster will run 3 Alpha with 3 replicas per group, 1535 then there is 1 group and `--reduce_shards` should be set to 1. If your cluster 1536 will run 6 Alphas with 3 replicas per group, then there are 2 groups and 1537 `--reduce_shards` should be set to 2. 1538 1539 **Map shards**: The `--map_shards` option must be set to at least what's set for 1540 `--reduce_shards`. A higher number helps the bulk loader evenly distribute 1541 predicates between the reduce shards. 1542 1543 ```sh 1544 $ dgraph bulk -f goldendata.rdf.gz -s goldendata.schema --map_shards=4 --reduce_shards=2 --http localhost:8000 --zero=localhost:5080 1545 ``` 1546 ``` 1547 { 1548 "DataFiles": "goldendata.rdf.gz", 1549 "DataFormat": "", 1550 "SchemaFile": "goldendata.schema", 1551 "DgraphsDir": "out", 1552 "TmpDir": "tmp", 1553 "NumGoroutines": 4, 1554 "MapBufSize": 67108864, 1555 "ExpandEdges": true, 1556 "SkipMapPhase": false, 1557 "CleanupTmp": true, 1558 "NumShufflers": 1, 1559 "Version": false, 1560 "StoreXids": false, 1561 "ZeroAddr": "localhost:5080", 1562 "HttpAddr": "localhost:8000", 1563 "IgnoreErrors": false, 1564 "MapShards": 4, 1565 "ReduceShards": 2 1566 } 1567 The bulk loader needs to open many files at once. This number depends on the size of the data set loaded, the map file output size, and the level of indexing. 100,000 is adequate for most data set sizes. See `man ulimit` for details of how to change the limit. 
1568 Current max open files limit: 1024 1569 MAP 01s rdf_count:176.0 rdf_speed:174.4/sec edge_count:564.0 edge_speed:558.8/sec 1570 MAP 02s rdf_count:399.0 rdf_speed:198.5/sec edge_count:1.291k edge_speed:642.4/sec 1571 MAP 03s rdf_count:666.0 rdf_speed:221.3/sec edge_count:2.164k edge_speed:718.9/sec 1572 MAP 04s rdf_count:952.0 rdf_speed:237.4/sec edge_count:3.014k edge_speed:751.5/sec 1573 MAP 05s rdf_count:1.327k rdf_speed:264.8/sec edge_count:4.243k edge_speed:846.7/sec 1574 MAP 06s rdf_count:1.774k rdf_speed:295.1/sec edge_count:5.720k edge_speed:951.5/sec 1575 MAP 07s rdf_count:2.375k rdf_speed:338.7/sec edge_count:7.607k edge_speed:1.085k/sec 1576 MAP 08s rdf_count:3.697k rdf_speed:461.4/sec edge_count:11.89k edge_speed:1.484k/sec 1577 MAP 09s rdf_count:71.98k rdf_speed:7.987k/sec edge_count:225.4k edge_speed:25.01k/sec 1578 MAP 10s rdf_count:354.8k rdf_speed:35.44k/sec edge_count:1.132M edge_speed:113.1k/sec 1579 MAP 11s rdf_count:610.5k rdf_speed:55.39k/sec edge_count:1.985M edge_speed:180.1k/sec 1580 MAP 12s rdf_count:883.9k rdf_speed:73.52k/sec edge_count:2.907M edge_speed:241.8k/sec 1581 MAP 13s rdf_count:1.108M rdf_speed:85.10k/sec edge_count:3.653M edge_speed:280.5k/sec 1582 MAP 14s rdf_count:1.121M rdf_speed:79.93k/sec edge_count:3.695M edge_speed:263.5k/sec 1583 MAP 15s rdf_count:1.121M rdf_speed:74.61k/sec edge_count:3.695M edge_speed:246.0k/sec 1584 REDUCE 16s [1.69%] edge_count:62.61k edge_speed:62.61k/sec plist_count:29.98k plist_speed:29.98k/sec 1585 REDUCE 17s [18.43%] edge_count:681.2k edge_speed:651.7k/sec plist_count:328.1k plist_speed:313.9k/sec 1586 REDUCE 18s [33.28%] edge_count:1.230M edge_speed:601.1k/sec plist_count:678.9k plist_speed:331.8k/sec 1587 REDUCE 19s [45.70%] edge_count:1.689M edge_speed:554.4k/sec plist_count:905.9k plist_speed:297.4k/sec 1588 REDUCE 20s [60.94%] edge_count:2.252M edge_speed:556.5k/sec plist_count:1.278M plist_speed:315.9k/sec 1589 REDUCE 21s [93.21%] edge_count:3.444M edge_speed:681.5k/sec 
plist_count:1.555M plist_speed:307.7k/sec 1590 REDUCE 22s [100.00%] edge_count:3.695M edge_speed:610.4k/sec plist_count:1.778M plist_speed:293.8k/sec 1591 REDUCE 22s [100.00%] edge_count:3.695M edge_speed:584.4k/sec plist_count:1.778M plist_speed:281.3k/sec 1592 Total: 22s 1593 ``` 1594 1595 The output will be generated in the `out` directory by default. Here's the bulk 1596 load output from the example above: 1597 1598 ```sh 1599 $ tree ./out 1600 ``` 1601 ``` 1602 ./out 1603 ├── 0 1604 │ └── p 1605 │ ├── 000000.vlog 1606 │ ├── 000002.sst 1607 │ └── MANIFEST 1608 └── 1 1609 └── p 1610 ├── 000000.vlog 1611 ├── 000002.sst 1612 └── MANIFEST 1613 1614 4 directories, 6 files 1615 ``` 1616 1617 Because `--reduce_shards` was set to 2, there are two sets of p directories: one 1618 in `./out/0` directory and another in the `./out/1` directory. 1619 1620 Once the output is created, they can be copied to all the servers that will run 1621 Dgraph Alphas. Each Dgraph Alpha must have its own copy of the group's p 1622 directory output. Each replica of the first group should have its own copy of 1623 `./out/0/p`, each replica of the second group should have its own copy of 1624 `./out/1/p`, and so on. 1625 1626 ```sh 1627 $ dgraph bulk --help # To see the available flags. 1628 1629 # Read RDFs or JSON from the passed file. 1630 $ dgraph bulk -f <path-to-gzipped-RDF-or-JSON-file> ... 1631 1632 # Read multiple RDFs or JSON from the passed path. 1633 $ dgraph bulk -f <./path-to-gzipped-RDF-or-JSON-files> ... 1634 1635 # Read multiple files strictly by name. 1636 $ dgraph bulk -f <file1.rdf, file2.rdf> ... 1637 1638 ``` 1639 1640 #### Other Bulk Loader options 1641 1642 `--new_uids` (default: false): Assign new UIDs instead of using the existing 1643 UIDs in data files. This is useful to avoid overriding the data in a DB already 1644 in operation. 1645 1646 `-f, --files`: Location of *.rdf(.gz) or *.json(.gz) file(s) to load. It can 1647 load multiple files in a given path. 
If the path is a directory, then all files
ending in `.rdf`, `.rdf.gz`, `.json`, and `.json.gz` will be loaded.

`--format`: Specify file format (rdf or json) instead of getting it from
filenames. This is useful if you need to define a strict format manually.

#### Tuning & monitoring

##### Performance Tuning

{{% notice "tip" %}}
We highly recommend [disabling swap
space](https://askubuntu.com/questions/214805/how-do-i-disable-swap) when
running Bulk Loader. It is better to tune the parameters to decrease memory
usage than to have swapping grind the loader to a halt.
{{% /notice %}}

Flags can be used to control the behaviour and performance characteristics of
the bulk loader. You can see the full list by running `dgraph bulk --help`. In
particular, **the flags should be tuned so that the bulk loader doesn't use more
memory than is available as RAM**. If it starts swapping, it will become
incredibly slow.

**In the map phase**, tweaking the following flags can reduce memory usage:

- The `--num_go_routines` flag controls the number of worker threads. Lowering it reduces memory
  consumption.

- The `--mapoutput_mb` flag controls the size of the map output files. Lowering
  it reduces memory consumption.

For bigger datasets and machines with many cores, gzip decoding can be a
bottleneck during the map phase. Performance improvements can be obtained by
first splitting the RDFs up into many `.rdf.gz` files (e.g. 256MB each). This
has a negligible impact on memory usage.

**The reduce phase** is less memory heavy than the map phase, although it can still
use a lot. Some flags may be increased to improve performance, *but only if
you have large amounts of RAM*:

- The `--reduce_shards` flag controls the number of resultant Dgraph alpha instances.
  Increasing this increases memory consumption, but in exchange allows for
  higher CPU utilization.

- The `--map_shards` flag controls the number of separate map output shards.
  Increasing this increases memory consumption but balances the resultant
  Dgraph alpha instances more evenly.

- The `--shufflers` flag controls the level of parallelism in the shuffle/reduce
  stage. Increasing this increases memory consumption.

## Monitoring

Dgraph exposes metrics via the `/debug/vars` endpoint in JSON format and the `/debug/prometheus_metrics` endpoint in Prometheus's text-based format. Dgraph doesn't store the metrics; it only exposes their values at that instant. You can either poll these endpoints to get the data into your monitoring systems or install **[Prometheus](https://prometheus.io/docs/introduction/install/)**. Replace the targets in the config file below with the IP addresses of your Dgraph instances and run Prometheus using the command `prometheus --config.file=my_config.yaml`.

```yaml
scrape_configs:
  - job_name: "dgraph"
    metrics_path: "/debug/prometheus_metrics"
    scrape_interval: "2s"
    static_configs:
    - targets:
      - 172.31.9.133:6080 # For Dgraph Zero, 6080 is the HTTP endpoint exposing metrics.
      - 172.31.15.230:8080
      - 172.31.0.170:8080
      - 172.31.8.118:8080
```

{{% notice "note" %}}
Raw metrics data in Prometheus format is available via the `/debug/prometheus_metrics` endpoint on Dgraph Alphas.
{{% /notice %}}

Install **[Grafana](http://docs.grafana.org/installation/)** to plot the metrics. Grafana runs at port 3000 in default settings. Create a Prometheus datasource by following these **[steps](https://prometheus.io/docs/visualization/grafana/#creating-a-prometheus-data-source)**.
Import **[grafana_dashboard.json](https://github.com/dgraph-io/benchmarks/blob/master/scripts/grafana_dashboard.json)** by following this **[link](http://docs.grafana.org/reference/export_import/#importing-a-dashboard)**.

## Metrics

Dgraph metrics follow the [metric and label conventions for
Prometheus](https://prometheus.io/docs/practices/naming/).

### Disk Metrics

The disk metrics let you track the disk activity of the Dgraph process. Dgraph does not interact
directly with the filesystem. Instead it relies on [Badger](https://github.com/dgraph-io/badger) to
read from and write to disk.

Metrics | Description
------- | -----------
`badger_disk_reads_total` | Total count of disk reads in Badger.
`badger_disk_writes_total` | Total count of disk writes in Badger.
`badger_gets_total` | Total count of calls to Badger's `get`.
`badger_memtable_gets_total` | Total count of memtable accesses to Badger's `get`.
`badger_puts_total` | Total count of calls to Badger's `put`.
`badger_read_bytes` | Total bytes read from Badger.
`badger_written_bytes` | Total bytes written to Badger.

### Memory Metrics

The memory metrics let you track the memory usage of the Dgraph process. The idle and inuse metrics
give you a better sense of the active memory usage of the Dgraph process. The process memory metric
shows the memory usage as measured by the operating system.

By looking at all three metrics you can see how much memory a Dgraph process is holding from the
operating system and how much is actively in use.

Metrics | Description
------- | -----------
`dgraph_memory_idle_bytes` | Estimated amount of memory that is being held idle that could be reclaimed by the OS.
`dgraph_memory_inuse_bytes` | Total memory usage in bytes (sum of heap usage and stack usage).
`dgraph_memory_proc_bytes` | Total memory usage in bytes of the Dgraph process. On Linux/macOS, this metric is equivalent to resident set size. On Windows, this metric is equivalent to [Go's runtime.ReadMemStats](https://golang.org/pkg/runtime/#ReadMemStats).

### Activity Metrics

The activity metrics let you track the mutations, queries, and proposals of a Dgraph instance.

Metrics | Description
------- | -----------
`dgraph_goroutines_total` | Total number of Goroutines currently running in Dgraph.
`dgraph_active_mutations_total` | Total number of mutations currently running.
`dgraph_pending_proposals_total` | Total pending Raft proposals.
`dgraph_pending_queries_total` | Total number of queries in progress.
`dgraph_num_queries_total` | Total number of queries run in Dgraph.

### Health Metrics

The health metrics let you check the availability of a Dgraph Alpha instance.

Metrics | Description
------- | -----------
`dgraph_alpha_health_status` | **Only applicable to Dgraph Alpha**. Value is 1 when the Alpha is ready to accept requests; otherwise 0.

### Go Metrics

Go's built-in metrics may also be useful for measuring memory usage and garbage collection time.

Metrics | Description
------- | -----------
`go_memstats_gc_cpu_fraction` | The fraction of this program's available CPU time used by the GC since the program started.
`go_memstats_heap_idle_bytes` | Number of heap bytes waiting to be used.
`go_memstats_heap_inuse_bytes` | Number of heap bytes that are in use.

## Tracing

Dgraph is integrated with [OpenCensus](https://opencensus.io/zpages/) to collect distributed traces from the Dgraph cluster.

Trace data is always collected within Dgraph. You can adjust the trace sampling rate for Dgraph queries with the `--trace` option for Dgraph Alphas.
By default, `--trace` is set to 1 to trace 100% of queries.

### Examining Traces with zPages

The most basic way to view traces is with the integrated trace pages.

OpenCensus's [zPages](https://opencensus.io/zpages/) are accessible via the Zero or Alpha HTTP port at `/z/tracez`.

### Examining Traces with Jaeger

Jaeger collects distributed traces and provides a UI to view and query traces across different services. This provides the necessary observability to figure out what is happening in the system.

Dgraph can be configured to send traces directly to a Jaeger collector with the `--jaeger.collector` flag. For example, if the Jaeger collector is running on `http://localhost:14268`, then pass the flag to the Dgraph Zero and Dgraph Alpha instances as `--jaeger.collector=http://localhost:14268`.

See [Jaeger's Getting Started docs](https://www.jaegertracing.io/docs/getting-started/) to get up and running with Jaeger.

## Dgraph Administration

Each Dgraph Alpha exposes administrative operations over HTTP to export data and to perform a clean shutdown.

### Whitelist Admin Operations

By default, admin operations can only be initiated from the machine on which the Dgraph Alpha runs.
You can use the `--whitelist` option to specify whitelisted IP addresses and ranges for hosts from which admin operations can be initiated.

```sh
dgraph alpha --whitelist 172.17.0.0:172.20.0.0,192.168.1.1 --lru_mb <one-third RAM> ...
```
This would allow admin operations from hosts with an IP address between `172.17.0.0` and `172.20.0.0`, along with
the host whose IP address is `192.168.1.1`.

### Restrict Mutation Operations

By default, you can perform mutation operations for any predicate.
If the predicate in a mutation doesn't exist in the schema,
the predicate gets added to the schema with an appropriate
[Dgraph Type](https://docs.dgraph.io/master/query-language/#schema-types).

The `--mutations` option is set to `allow` by default.
You can use `--mutations disallow` to disable all mutations.

```sh
dgraph alpha --mutations disallow
```

Enforce a strict schema by setting `--mutations strict`.
This mode allows mutations only on predicates already in the schema.
Before performing a mutation on a predicate that doesn't exist in the schema,
you need to perform an alter operation with that predicate and its schema type.

```sh
dgraph alpha --mutations strict
```

### Secure Alter Operations

Clients can use alter operations to apply schema updates and to drop particular predicates or all predicates from the database.
By default, all clients are allowed to perform alter operations.
You can configure Dgraph to only allow alter operations when the client provides a specific token.
This can be used to prevent clients from making unintended or accidental schema updates or predicate drops.

You can specify the auth token with the `--auth_token` option for each Dgraph Alpha in the cluster.
Clients must include the same auth token to make alter requests.

```sh
$ dgraph alpha --lru_mb=2048 --auth_token=<authtokenstring>
```

```sh
$ curl -s localhost:8080/alter -d '{ "drop_all": true }'
# Permission denied. No token provided.
```

```sh
$ curl -s -H 'X-Dgraph-AuthToken: <wrongsecret>' localhost:8080/alter -d '{ "drop_all": true }'
# Permission denied. Incorrect token.
```

```sh
$ curl -H 'X-Dgraph-AuthToken: <authtokenstring>' localhost:8080/alter -d '{ "drop_all": true }'
# Success. Token matches.
```

{{% notice "note" %}}
To fully secure alter operations in the cluster, the auth token must be set for every Alpha.
{{% /notice %}}


### Export Database

An export of all nodes is started by locally accessing the export endpoint of any Alpha in the cluster.

```sh
$ curl localhost:8080/admin/export
```
{{% notice "warning" %}}By default, this won't work if called from outside the server where the Dgraph Alpha is running.
You can specify a list or range of whitelisted IP addresses from which export or other admin operations
can be initiated using the `--whitelist` flag on `dgraph alpha`.
{{% /notice %}}

This also works from a browser, provided the HTTP GET is being run from the same server where the Dgraph alpha instance is running.

This triggers an export for all Alpha groups of the cluster. The data is exported from the following Dgraph instances:

1. For the Alpha instance that receives the GET request, the group's export data is stored with this Alpha.
2. For every other group, its group's export data is stored with the Alpha leader of that group.

It is up to the user to retrieve the right export files from the Alphas in the
cluster. Dgraph does not copy all files to the Alpha that initiated the export.
The user must also ensure that there is sufficient space on disk to store the
export.

Each Alpha leader for a group writes output as a gzipped file to the export
directory specified via the `--export` flag (defaults to a directory called `"export"`). If any of the groups fail, the
entire export process is considered failed and an error is returned.

The data is exported in RDF format by default. A different output format may be specified with the
`format` URL parameter.
For example:

```sh
$ curl 'localhost:8080/admin/export?format=json'
```

Currently, "rdf" and "json" are the only formats supported.

### Shutdown Database

A clean exit of a single Dgraph node is initiated by running the following command on that node.
{{% notice "warning" %}}This won't work if called from outside the server where Dgraph is running.
{{% /notice %}}

```sh
$ curl localhost:8080/admin/shutdown
```

This stops only the Alpha on which the command is executed, not the entire cluster.

### Delete database

Individual triples, patterns of triples and predicates can be deleted as described in the [query language docs](/query-language#delete).

To drop all data, you could send a `DropAll` request via the `/alter` endpoint.

Alternatively, you could:

* [stop Dgraph]({{< relref "#shutdown-database" >}}) and wait for all writes to complete,
* delete (maybe do an export first) the `p` and `w` directories, then
* restart Dgraph.

### Upgrade Database

Doing periodic exports is always a good idea. This is particularly useful if you wish to upgrade Dgraph or reconfigure the sharding of a cluster. The following are the right steps to safely export and restart.

- Start an [export]({{< relref "#export-database" >}}).
- Ensure it's successful.
- Bring down the cluster.
- Run Dgraph using new data directories.
- Reload the data via [bulk loader]({{< relref "#bulk-loader" >}}).
- If all looks good, you can delete the old directories (the export serves as insurance).

These steps are necessary because Dgraph's underlying data format could have changed, and reloading the export avoids encoding incompatibilities.

### Post Installation

Now that Dgraph is up and running, follow the [Query Language Spec](/query-language) to understand how to add data to Dgraph and query it.
Also, have a look at [Frequently asked questions](/faq).

## Troubleshooting

Here are some problems that you may encounter and some solutions to try.

#### Running OOM (out of memory)

During bulk loading of data, Dgraph can consume more memory than usual due to the high volume of writes. That's generally when you see OOM crashes.

The recommended minimum RAM to run on desktops and laptops is 16GB. Dgraph can take up to 7-8 GB with the default setting of `--lru_mb` at 4096, so leaving the remaining 8GB for desktop applications should keep your machine humming along.

On EC2/GCE instances, the recommended minimum is 8GB. It's recommended to set `--lru_mb` to one-third of RAM size.

You could also decrease memory usage of Dgraph by setting `--badger.vlog=disk`.

#### Too many open files

If you see a log error message saying `too many open files`, you should increase the per-process file descriptor limit.

During normal operations, Dgraph must be able to open many files. Your operating system may by default set an open file descriptor limit lower than what's needed for a database such as Dgraph.

On Linux and Mac, you can check the file descriptor limit with `ulimit -n -H` for the hard limit and `ulimit -n -S` for the soft limit. The soft limit should be set high enough for Dgraph to run properly. A soft limit of 65535 is a good lower bound for a production setup. You can adjust the limit as needed.

## See Also

* [Product Roadmap to v1.0](https://github.com/dgraph-io/dgraph/issues/1)
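
As a follow-up to the "Too many open files" item in Troubleshooting, the check described there can be scripted before starting Dgraph. The snippet below is a minimal sketch, not part of Dgraph: the function name `check_nofile_limit` is hypothetical, and the 65535 default simply mirrors the lower bound suggested above.

```sh
#!/bin/sh
# Hypothetical helper (not shipped with Dgraph): compare the soft open-files
# limit of the current shell against a minimum value.
check_nofile_limit() {
    min="${1:-65535}"        # assumed default: the lower bound suggested above
    soft="$(ulimit -S -n)"   # soft limit, the one that is actually enforced
    hard="$(ulimit -H -n)"   # hard limit, the ceiling for the soft limit
    if [ "$soft" = "unlimited" ] || [ "$soft" -ge "$min" ]; then
        echo "ok: soft=$soft hard=$hard (minimum $min)"
        return 0
    fi
    echo "too low: soft=$soft hard=$hard (minimum $min)"
    return 1
}

check_nofile_limit 65535 ||
    echo "Raise the soft limit (up to the hard limit) before starting Dgraph."
```

Running this in the same shell session that later starts `dgraph alpha` or `dgraph zero` matters, because the limit is inherited per process. A soft limit can be raised up to the hard limit with `ulimit -n <value>`; going beyond the hard limit usually requires a system-level change.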