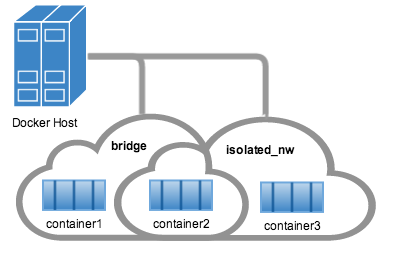

<!--[metadata]>
+++
title = "Work with network commands"
description = "How to work with docker networks"
keywords = ["commands, Usage, network, docker, cluster"]
[menu.main]
parent = "smn_networking"
weight=-4
+++
<![end-metadata]-->

# Work with network commands

This article provides examples of the network subcommands you can use to interact with Docker networks and the containers in them. The commands are available through the Docker Engine CLI. These commands are:

* `docker network create`
* `docker network connect`
* `docker network ls`
* `docker network rm`
* `docker network disconnect`
* `docker network inspect`

While not required, it is a good idea to read [Understanding Docker
network](dockernetworks.md) before trying the examples in this section. The
examples rely on a `bridge` network so that you can try them
immediately. If you would prefer to experiment with an `overlay` network, see
[Getting started with multi-host networks](get-started-overlay.md) instead.

## Create networks

Docker Engine creates a `bridge` network automatically when you install Engine.
This network corresponds to the `docker0` bridge that Engine has traditionally
relied on. In addition to this network, you can create your own `bridge` or `overlay` network.

A `bridge` network resides on a single host running an instance of Docker Engine. An `overlay` network can span multiple hosts running their own engines. If you run `docker network create` and supply only a network name, it creates a bridge network for you.

```bash
$ docker network create simple-network
69568e6336d8c96bbf57869030919f7c69524f71183b44d80948bd3927c87f6a
$ docker network inspect simple-network
[
    {
        "Name": "simple-network",
        "Id": "69568e6336d8c96bbf57869030919f7c69524f71183b44d80948bd3927c87f6a",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.22.0.0/16",
                    "Gateway": "172.22.0.1/16"
                }
            ]
        },
        "Containers": {},
        "Options": {}
    }
]
```

Unlike `bridge` networks, `overlay` networks require some pre-existing conditions
before you can create one. These conditions are:

* Access to a key-value store. Engine supports Consul, Etcd, and ZooKeeper (Distributed store) key-value stores.
* A cluster of hosts with connectivity to the key-value store.
* A properly configured Engine `daemon` on each host in the swarm.

The `docker daemon` options that support the `overlay` network are:

* `--cluster-store`
* `--cluster-store-opt`
* `--cluster-advertise`

It is also a good idea, though not required, that you install Docker Swarm
to manage the cluster. Swarm provides sophisticated discovery and server
management that can assist your implementation.

When you create a network, Engine creates a non-overlapping subnetwork for the
network by default. You can override this default and specify a subnetwork
directly using the `--subnet` option. On a `bridge` network you can only
specify a single subnet. An `overlay` network supports multiple subnets.

> **Note** : It is highly recommended to use the `--subnet` option while creating
> a network. If the `--subnet` is not specified, the docker daemon automatically
> chooses and assigns a subnet for the network and it could overlap with another subnet
> in your infrastructure that is not managed by docker.
> Such overlaps can cause connectivity issues or failures when containers are connected to that network.

In addition to the `--subnet` option, you can also specify the `--gateway`, `--ip-range`, and `--aux-address` options.

```bash
$ docker network create -d overlay \
  --subnet=192.168.0.0/16 --subnet=192.170.0.0/16 \
  --gateway=192.168.0.100 --gateway=192.170.0.100 \
  --ip-range=192.168.1.0/24 \
  --aux-address a=192.168.1.5 --aux-address b=192.168.1.6 \
  --aux-address a=192.170.1.5 --aux-address b=192.170.1.6 \
  my-multihost-network
```

Be sure that your subnetworks do not overlap. If they do, the network create fails and Engine returns an error.

When creating a custom network, the default network driver (i.e. `bridge`) has additional options that can be passed.
The following are those options and the equivalent docker daemon flags used for the `docker0` bridge:

| Option                                           | Equivalent  | Description                                           |
|--------------------------------------------------|-------------|-------------------------------------------------------|
| `com.docker.network.bridge.name`                 | -           | bridge name to be used when creating the Linux bridge |
| `com.docker.network.bridge.enable_ip_masquerade` | `--ip-masq` | Enable IP masquerading                                |
| `com.docker.network.bridge.enable_icc`           | `--icc`     | Enable or Disable Inter Container Connectivity        |
| `com.docker.network.bridge.host_binding_ipv4`    | `--ip`      | Default IP when binding container ports               |
| `com.docker.network.mtu`                         | `--mtu`     | Set the containers network MTU                        |
| `com.docker.network.enable_ipv6`                 | `--ipv6`    | Enable IPv6 networking                                |

For example, let's use the `-o` or `--opt` option to specify an IP address binding when publishing ports:

```bash
$ docker network create -o "com.docker.network.bridge.host_binding_ipv4"="172.23.0.1" my-network
b1a086897963e6a2e7fc6868962e55e746bee8ad0c97b54a5831054b5f62672a
$ docker network inspect my-network
[
    {
        "Name": "my-network",
        "Id": "b1a086897963e6a2e7fc6868962e55e746bee8ad0c97b54a5831054b5f62672a",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "172.23.0.0/16",
                    "Gateway": "172.23.0.1/16"
                }
            ]
        },
        "Containers": {},
        "Options": {
            "com.docker.network.bridge.host_binding_ipv4": "172.23.0.1"
        }
    }
]
$ docker run -d -P --name redis --net my-network redis
bafb0c808c53104b2c90346f284bda33a69beadcab4fc83ab8f2c5a4410cd129
$ docker ps
CONTAINER ID        IMAGE               COMMAND                  CREATED             STATUS              PORTS                        NAMES
bafb0c808c53        redis               "/entrypoint.sh redis"   4 seconds ago       Up 3 seconds        172.23.0.1:32770->6379/tcp   redis
```

## Connect containers

You can connect containers dynamically to one or more networks. These networks
can be backed by the same or different network drivers. Once connected, the
containers can communicate using another container's IP address or name.

For `overlay` networks or custom plugins that support multi-host
connectivity, containers connected to the same multi-host network but launched
from different hosts can also communicate in this way.

Create two containers for this example:

```bash
$ docker run -itd --name=container1 busybox
18c062ef45ac0c026ee48a83afa39d25635ee5f02b58de4abc8f467bcaa28731

$ docker run -itd --name=container2 busybox
498eaaaf328e1018042c04b2de04036fc04719a6e39a097a4f4866043a2c2152
```

Then create an isolated, `bridge` network to test with.

```bash
$ docker network create -d bridge --subnet 172.25.0.0/16 isolated_nw
06a62f1c73c4e3107c0f555b7a5f163309827bfbbf999840166065a8f35455a8
```

Connect `container2` to the network and then `inspect` the network to verify the connection:

```
$ docker network connect isolated_nw container2
$ docker network inspect isolated_nw
[
    {
        "Name": "isolated_nw",
        "Id": "06a62f1c73c4e3107c0f555b7a5f163309827bfbbf999840166065a8f35455a8",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.25.0.0/16",
                    "Gateway": "172.25.0.1/16"
                }
            ]
        },
        "Containers": {
            "90e1f3ec71caf82ae776a827e0712a68a110a3f175954e5bd4222fd142ac9428": {
                "Name": "container2",
                "EndpointID": "11cedac1810e864d6b1589d92da12af66203879ab89f4ccd8c8fdaa9b1c48b1d",
                "MacAddress": "02:42:ac:19:00:02",
                "IPv4Address": "172.25.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {}
    }
]
```

You can see that the Engine automatically assigns an IP address to `container2`.
Given we specified a `--subnet` when creating the network, Engine picked
an address from that same subnet. Now, start a third container and connect it to
the network on launch using the `docker run` command's `--net` option:

```bash
$ docker run --net=isolated_nw --ip=172.25.3.3 -itd --name=container3 busybox
467a7863c3f0277ef8e661b38427737f28099b61fa55622d6c30fb288d88c551
```

As you can see, you were able to specify the IP address for your container.
As long as the network to which the container is connecting was created with
a user specified subnet, you will be able to select the IPv4 and/or IPv6 address(es)
for your container when executing `docker run` and `docker network connect` commands.
The selected IP address is part of the container networking configuration and will be
preserved across container reload. The feature is only available on user defined networks,
because they guarantee their subnet configuration does not change across daemon reload.

Now, inspect the network resources used by `container3`.

```bash
$ docker inspect --format='{{json .NetworkSettings.Networks}}' container3
{"isolated_nw":{"IPAMConfig":{"IPv4Address":"172.25.3.3"},"NetworkID":"1196a4c5af43a21ae38ef34515b6af19236a3fc48122cf585e3f3054d509679b",
"EndpointID":"dffc7ec2915af58cc827d995e6ebdc897342be0420123277103c40ae35579103","Gateway":"172.25.0.1","IPAddress":"172.25.3.3","IPPrefixLen":16,"IPv6Gateway":"","GlobalIPv6Address":"","GlobalIPv6PrefixLen":0,"MacAddress":"02:42:ac:19:03:03"}}
```

Repeat this command for `container2`. If you have Python installed, you can pretty print the output.

```bash
$ docker inspect --format='{{json .NetworkSettings.Networks}}' container2 | python -m json.tool
{
    "bridge": {
        "NetworkID": "7ea29fc1412292a2d7bba362f9253545fecdfa8ce9a6e37dd10ba8bee7129812",
        "EndpointID": "0099f9efb5a3727f6a554f176b1e96fca34cae773da68b3b6a26d046c12cb365",
        "Gateway": "172.17.0.1",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "IPAMConfig": null,
        "IPAddress": "172.17.0.3",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "MacAddress": "02:42:ac:11:00:03"
    },
    "isolated_nw": {
        "NetworkID": "1196a4c5af43a21ae38ef34515b6af19236a3fc48122cf585e3f3054d509679b",
        "EndpointID": "11cedac1810e864d6b1589d92da12af66203879ab89f4ccd8c8fdaa9b1c48b1d",
        "Gateway": "172.25.0.1",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "IPAMConfig": null,
        "IPAddress": "172.25.0.2",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "MacAddress": "02:42:ac:19:00:02"
    }
}
```

You should find `container2` belongs to two networks.
These are the `bridge` network, which it joined by default when you launched it,
and the `isolated_nw` network, which you later connected it to.

In the case of `container3`, you connected it through `docker run` to the
`isolated_nw` so that container is not connected to `bridge`.

Use the `docker attach` command to connect to the running `container2` and
examine its networking stack:

```bash
$ docker attach container2
```

If you look at the container's network stack you should see two Ethernet interfaces, one for the default bridge network and one for the `isolated_nw` network.

```bash
/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:03
          inet addr:172.17.0.3  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:acff:fe11:3/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:9001  Metric:1
          RX packets:8 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:648 (648.0 B)  TX bytes:648 (648.0 B)

eth1      Link encap:Ethernet  HWaddr 02:42:AC:19:00:02
          inet addr:172.25.0.2  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:acff:fe19:2/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:8 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:648 (648.0 B)  TX bytes:648 (648.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
```

On the `isolated_nw` which was user defined, the Docker embedded DNS server enables name resolution for other containers in the network.
Inside of `container2` it is possible to ping `container3` by name.

```bash
/ # ping -w 4 container3
PING container3 (172.25.3.3): 56 data bytes
64 bytes from 172.25.3.3: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.3.3: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.3.3: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.3.3: seq=3 ttl=64 time=0.097 ms

--- container3 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms
```

This isn't the case for the default `bridge` network. Both `container2` and `container1` are connected to the default bridge network. Docker does not support automatic service discovery on this network. For this reason, pinging `container1` by name fails as you would expect based on the `/etc/hosts` file:

```bash
/ # ping -w 4 container1
ping: bad address 'container1'
```

A ping using the `container1` IP address does succeed though:

```bash
/ # ping -w 4 172.17.0.2
PING 172.17.0.2 (172.17.0.2): 56 data bytes
64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.095 ms
64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.075 ms
64 bytes from 172.17.0.2: seq=2 ttl=64 time=0.072 ms
64 bytes from 172.17.0.2: seq=3 ttl=64 time=0.101 ms

--- 172.17.0.2 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.072/0.085/0.101 ms
```

If you wanted, you could connect `container1` to `container2` with the `docker
run --link` command and that would enable the two containers to interact by name
as well as IP.

Detach from `container2` and leave it running using `CTRL-p CTRL-q`.

In this example, `container2` is attached to both networks and so can talk to
`container1` and `container3`. But `container3` and `container1` are not in the
same network and cannot communicate.
Test this now by attaching to
`container3` and attempting to ping `container1` by IP address.

```bash
$ docker attach container3
/ # ping 172.17.0.2
PING 172.17.0.2 (172.17.0.2): 56 data bytes
^C
--- 172.17.0.2 ping statistics ---
10 packets transmitted, 0 packets received, 100% packet loss
```

You can connect both running and non-running containers to a network. However,
`docker network inspect` only displays information on running containers.

### Linking containers in user-defined networks

In the above example, `container2` was able to resolve `container3`'s name automatically
in the user defined network `isolated_nw`, but the name resolution did not succeed
automatically in the default `bridge` network. This is expected in order to maintain
backward compatibility with [legacy link](default_network/dockerlinks.md).

The `legacy link` provided 4 major functionalities to the default `bridge` network:

* name resolution
* name alias for the linked container using `--link=CONTAINER-NAME:ALIAS`
* secured container connectivity (in isolation via `--icc=false`)
* environment variable injection

Comparing the above 4 functionalities with the non-default user-defined networks such as
`isolated_nw` in this example, without any additional config, `docker network` provides:

* automatic name resolution using DNS
* automatic secured isolated environment for the containers in a network
* ability to dynamically attach and detach to multiple networks
* supports the `--link` option to provide name alias for the linked container

Continuing with the above example, create another container `container4` in `isolated_nw`
with `--link` to provide additional name resolution using alias for other containers in
the same network.

```bash
$ docker run --net=isolated_nw -itd --name=container4 --link container5:c5 busybox
01b5df970834b77a9eadbaff39051f237957bd35c4c56f11193e0594cfd5117c
```

With the help of `--link`, `container4` will be able to reach `container5` using the
aliased name `c5` as well.

Please note that while creating `container4`, we linked to a container named `container5`
which is not created yet. That is one of the differences in behavior between the
`legacy link` in the default `bridge` network and the new `link` functionality in user defined
networks. The `legacy link` is static in nature: it hard-binds the container with the
alias and doesn't tolerate linked container restarts. The new `link` functionality
in user defined networks, by contrast, is dynamic in nature and supports linked container
restarts, including tolerating IP address changes on the linked container.

Now let us launch another container named `container5`, linking `container4` to `c4`.

```bash
$ docker run --net=isolated_nw -itd --name=container5 --link container4:c4 busybox
72eccf2208336f31e9e33ba327734125af00d1e1d2657878e2ee8154fbb23c7a
```

As expected, `container4` will be able to reach `container5` by both its container name and
its alias `c5`, and `container5` will be able to reach `container4` by its container name and
its alias `c4`.

```bash
$ docker attach container4
/ # ping -w 4 c5
PING c5 (172.25.0.5): 56 data bytes
64 bytes from 172.25.0.5: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.5: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=3 ttl=64 time=0.097 ms

--- c5 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms

/ # ping -w 4 container5
PING container5 (172.25.0.5): 56 data bytes
64 bytes from 172.25.0.5: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.5: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=3 ttl=64 time=0.097 ms

--- container5 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms
```

```bash
$ docker attach container5
/ # ping -w 4 c4
PING c4 (172.25.0.4): 56 data bytes
64 bytes from 172.25.0.4: seq=0 ttl=64 time=0.065 ms
64 bytes from 172.25.0.4: seq=1 ttl=64 time=0.070 ms
64 bytes from 172.25.0.4: seq=2 ttl=64 time=0.067 ms
64 bytes from 172.25.0.4: seq=3 ttl=64 time=0.082 ms

--- c4 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.065/0.070/0.082 ms

/ # ping -w 4 container4
PING container4 (172.25.0.4): 56 data bytes
64 bytes from 172.25.0.4: seq=0 ttl=64 time=0.065 ms
64 bytes from 172.25.0.4: seq=1 ttl=64 time=0.070 ms
64 bytes from 172.25.0.4: seq=2 ttl=64 time=0.067 ms
64 bytes from 172.25.0.4: seq=3 ttl=64 time=0.082 ms

--- container4 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.065/0.070/0.082 ms
```

Similar to the legacy link functionality, the new link alias is localized to a container
and the aliased name has no meaning outside of the container using the `--link`.

Also, it is important to note that if a container belongs to multiple networks, the
linked alias is scoped within a given network. Hence the containers can be linked to
different aliases in different networks.

Extending the example, let us create another network named `local_alias`:

```bash
$ docker network create -d bridge --subnet 172.26.0.0/24 local_alias
76b7dc932e037589e6553f59f76008e5b76fa069638cd39776b890607f567aaa
```

Let us connect `container4` and `container5` to the new network `local_alias`:

```
$ docker network connect --link container5:foo local_alias container4
$ docker network connect --link container4:bar local_alias container5
```

```bash
$ docker attach container4

/ # ping -w 4 foo
PING foo (172.26.0.3): 56 data bytes
64 bytes from 172.26.0.3: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.26.0.3: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.26.0.3: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.26.0.3: seq=3 ttl=64 time=0.097 ms

--- foo ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms

/ # ping -w 4 c5
PING c5 (172.25.0.5): 56 data bytes
64 bytes from 172.25.0.5: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.5: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.5: seq=3 ttl=64 time=0.097 ms

--- c5 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms
```

Note that the ping succeeds for both aliases, but on different networks.
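
To see at a glance which networks a container is attached to, and which address it holds on each, the `--format` template used earlier in this article can be extended. The following is a minimal sketch rather than part of the original walkthrough: the function name `list_container_networks` and the `DOCKER` override variable are hypothetical helpers introduced here for illustration.

```shell
# Hypothetical helper (not a Docker command): print "<network> <IPv4 address>"
# for every network a container is attached to.
# DOCKER can be overridden, for example with "echo", to preview the command
# without contacting a daemon.
DOCKER="${DOCKER:-docker}"

list_container_networks() {
    # Range over the same .NetworkSettings.Networks map that
    # `docker inspect` exposes, emitting one line per network.
    "$DOCKER" inspect \
        --format '{{range $name, $net := .NetworkSettings.Networks}}{{$name}} {{$net.IPAddress}}{{"\n"}}{{end}}' \
        "$1"
}
```

Calling `list_container_networks container4` at this point in the example would be expected to list both `isolated_nw` and `local_alias`, each with its own address.
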
Let us conclude this section by disconnecting `container5` from the `isolated_nw`
and observing the results:

```
$ docker network disconnect isolated_nw container5

$ docker attach container4

/ # ping -w 4 c5
ping: bad address 'c5'

/ # ping -w 4 foo
PING foo (172.26.0.3): 56 data bytes
64 bytes from 172.26.0.3: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.26.0.3: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.26.0.3: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.26.0.3: seq=3 ttl=64 time=0.097 ms

--- foo ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms
```

In conclusion, the new link functionality in user defined networks provides all the
benefits of legacy links while avoiding most of the well-known issues with `legacy links`.

One notable missing functionality compared to `legacy links` is the injection of
environment variables. Though very useful, environment variable injection is static
in nature and must be injected when the container is started. One cannot inject
environment variables into a running container without significant effort and hence
it is not compatible with `docker network`, which provides a dynamic way to
connect/disconnect containers to/from a network.

### Network-scoped alias

While `links` provide private name resolution that is localized within a container,
the network-scoped alias provides a way for a container to be discovered by an
alternate name by any other container within the scope of a particular network.
Unlike the `link` alias, which is defined by the consumer of a service, the
network-scoped alias is defined by the container that is offering the service
to the network.

Continuing with the above example, create another container in `isolated_nw` with a
network alias.

```bash
$ docker run --net=isolated_nw -itd --name=container6 --net-alias app busybox
8ebe6767c1e0361f27433090060b33200aac054a68476c3be87ef4005eb1df17
```

```bash
$ docker attach container4
/ # ping -w 4 app
PING app (172.25.0.6): 56 data bytes
64 bytes from 172.25.0.6: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.6: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=3 ttl=64 time=0.097 ms

--- app ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms

/ # ping -w 4 container6
PING container6 (172.25.0.6): 56 data bytes
64 bytes from 172.25.0.6: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.6: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=3 ttl=64 time=0.097 ms

--- container6 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms
```

Now let us connect `container6` to the `local_alias` network with a different network-scoped
alias.

```
$ docker network connect --alias scoped-app local_alias container6
```

`container6` in this example now is aliased as `app` in network `isolated_nw` and
as `scoped-app` in network `local_alias`.

Let's try to reach these aliases from `container4` (which is connected to both these networks)
and `container5` (which is connected only to `isolated_nw`).

```bash
$ docker attach container4

/ # ping -w 4 scoped-app
PING scoped-app (172.26.0.5): 56 data bytes
64 bytes from 172.26.0.5: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.26.0.5: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.26.0.5: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.26.0.5: seq=3 ttl=64 time=0.097 ms

--- scoped-app ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms

$ docker attach container5

/ # ping -w 4 scoped-app
ping: bad address 'scoped-app'
```

As you can see, the alias is scoped to the network it is defined on and hence only
those containers that are connected to that network can access the alias.

In addition to the above features, multiple containers can share the same network-scoped
alias within the same network. For example, let's launch `container7` in `isolated_nw` with
the same alias as `container6`:

```bash
$ docker run --net=isolated_nw -itd --name=container7 --net-alias app busybox
3138c678c123b8799f4c7cc6a0cecc595acbdfa8bf81f621834103cd4f504554
```

When multiple containers share the same alias, name resolution to that alias will happen
to one of the containers (typically the first container that is aliased). When the container
that backs the alias goes down or is disconnected from the network, the next container that
backs the alias will be resolved.

Let us ping the alias `app` from `container4` and bring down `container6` to verify that
`container7` is resolving the `app` alias.

```bash
$ docker attach container4
/ # ping -w 4 app
PING app (172.25.0.6): 56 data bytes
64 bytes from 172.25.0.6: seq=0 ttl=64 time=0.070 ms
64 bytes from 172.25.0.6: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=2 ttl=64 time=0.080 ms
64 bytes from 172.25.0.6: seq=3 ttl=64 time=0.097 ms

--- app ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.070/0.081/0.097 ms

$ docker stop container6

$ docker attach container4
/ # ping -w 4 app
PING app (172.25.0.7): 56 data bytes
64 bytes from 172.25.0.7: seq=0 ttl=64 time=0.095 ms
64 bytes from 172.25.0.7: seq=1 ttl=64 time=0.075 ms
64 bytes from 172.25.0.7: seq=2 ttl=64 time=0.072 ms
64 bytes from 172.25.0.7: seq=3 ttl=64 time=0.101 ms

--- app ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.072/0.085/0.101 ms
```

## Disconnecting containers

You can disconnect a container from a network using the `docker network
disconnect` command.

```
$ docker network disconnect isolated_nw container2

$ docker inspect --format='{{json .NetworkSettings.Networks}}' container2 | python -m json.tool
{
    "bridge": {
        "NetworkID": "7ea29fc1412292a2d7bba362f9253545fecdfa8ce9a6e37dd10ba8bee7129812",
        "EndpointID": "9e4575f7f61c0f9d69317b7a4b92eefc133347836dd83ef65deffa16b9985dc0",
        "Gateway": "172.17.0.1",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "IPAddress": "172.17.0.3",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "MacAddress": "02:42:ac:11:00:03"
    }
}

$ docker network inspect isolated_nw
[
    {
        "Name": "isolated_nw",
        "Id": "06a62f1c73c4e3107c0f555b7a5f163309827bfbbf999840166065a8f35455a8",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.25.0.0/16",
                    "Gateway": "172.25.0.1/16"
                }
            ]
        },
        "Containers": {
            "467a7863c3f0277ef8e661b38427737f28099b61fa55622d6c30fb288d88c551": {
                "Name": "container3",
                "EndpointID": "dffc7ec2915af58cc827d995e6ebdc897342be0420123277103c40ae35579103",
                "MacAddress": "02:42:ac:19:03:03",
                "IPv4Address": "172.25.3.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {}
    }
]
```

Once a container is disconnected from a network, it cannot communicate with
other containers connected to that network. In this example, `container2` can no longer talk to `container3` on the `isolated_nw` network.

```
$ docker attach container2

/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:03
          inet addr:172.17.0.3  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:acff:fe11:3/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:9001  Metric:1
          RX packets:8 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:648 (648.0 B)  TX bytes:648 (648.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

/ # ping container3
PING container3 (172.25.3.3): 56 data bytes
^C
--- container3 ping statistics ---
2 packets transmitted, 0 packets received, 100% packet loss
```

`container2` still has full connectivity to the bridge network:

```bash
/ # ping container1
PING container1 (172.17.0.2): 56 data bytes
64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.119 ms
64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.174 ms
^C
--- container1 ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 0.119/0.146/0.174 ms
/ #
```

There are certain scenarios, such as ungraceful docker daemon restarts in a multi-host network,
where the daemon is unable to clean up stale connectivity endpoints. Such stale endpoints
may cause an error `container already connected to network` when a new container is
connected to that network with the same name as the stale endpoint. In order to clean up
these stale endpoints, first remove the container and force disconnect
(`docker network disconnect -f`) the endpoint from the network.
Once the endpoint is
cleaned up, the container can be connected to the network.

```
$ docker run -d --name redis_db --net multihost redis
ERROR: Cannot start container bc0b19c089978f7845633027aa3435624ca3d12dd4f4f764b61eac4c0610f32e: container already connected to network multihost

$ docker rm -f redis_db
$ docker network disconnect -f multihost redis_db

$ docker run -d --name redis_db --net multihost redis
7d986da974aeea5e9f7aca7e510bdb216d58682faa83a9040c2f2adc0544795a
```

## Remove a network

When all the containers in a network are stopped or disconnected, you can remove the network.

```bash
$ docker network disconnect isolated_nw container3
```

```bash
$ docker network inspect isolated_nw
[
    {
        "Name": "isolated_nw",
        "Id": "06a62f1c73c4e3107c0f555b7a5f163309827bfbbf999840166065a8f35455a8",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.25.0.0/16",
                    "Gateway": "172.25.0.1/16"
                }
            ]
        },
        "Containers": {},
        "Options": {}
    }
]

$ docker network rm isolated_nw
```

List all your networks to verify the `isolated_nw` was removed:

```
$ docker network ls
NETWORK ID          NAME                DRIVER
72314fa53006        host                host
f7ab26d71dbd        bridge              bridge
0f32e83e61ac        none                null
```

## Related information

* [network create](../../reference/commandline/network_create.md)
* [network inspect](../../reference/commandline/network_inspect.md)
* [network connect](../../reference/commandline/network_connect.md)
* [network disconnect](../../reference/commandline/network_disconnect.md)
* [network ls](../../reference/commandline/network_ls.md)
* [network rm](../../reference/commandline/network_rm.md)
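
If you followed the examples in this article, some of the containers and user-defined networks they created may still be present on your host. The following is a minimal cleanup sketch, assuming you used exactly the names from the examples. The function name `cleanup_examples` and the `DOCKER` override variable are hypothetical helpers introduced here for illustration; only `docker rm -f` and `docker network rm` are actual Docker commands.

```shell
# Hypothetical helper (not a Docker command): remove the containers and
# user-defined networks created by the examples in this article.
# DOCKER can be overridden, for example with "echo", to preview the commands.
DOCKER="${DOCKER:-docker}"

cleanup_examples() {
    # Force-remove the example containers first; errors for containers
    # that no longer exist are ignored.
    for c in container1 container2 container3 container4 \
             container5 container6 container7 redis; do
        "$DOCKER" rm -f "$c" 2>/dev/null || true
    done

    # Remove the networks once nothing is attached to them; errors for
    # networks that were already removed are ignored.
    for n in isolated_nw local_alias simple-network my-network; do
        "$DOCKER" network rm "$n" 2>/dev/null || true
    done
}
```

Running `DOCKER=echo cleanup_examples` prints the commands instead of executing them, which is a cautious way to review the list before removing anything.
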