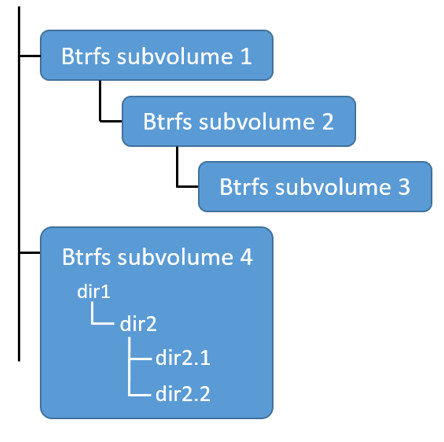

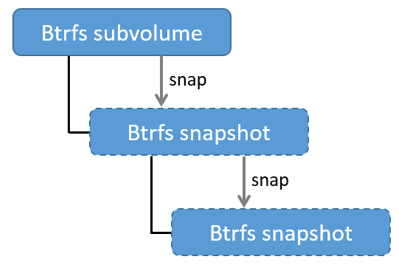

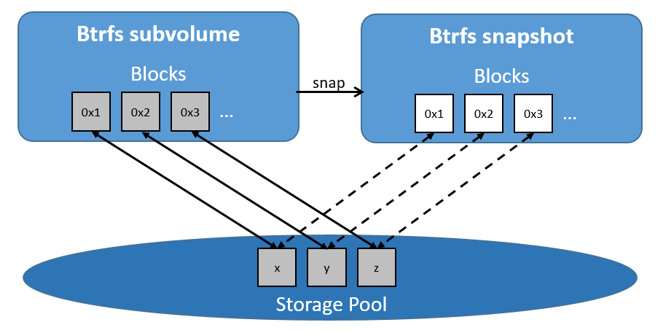

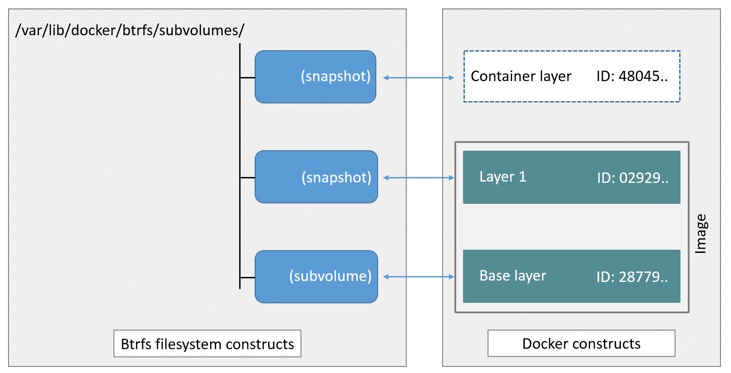

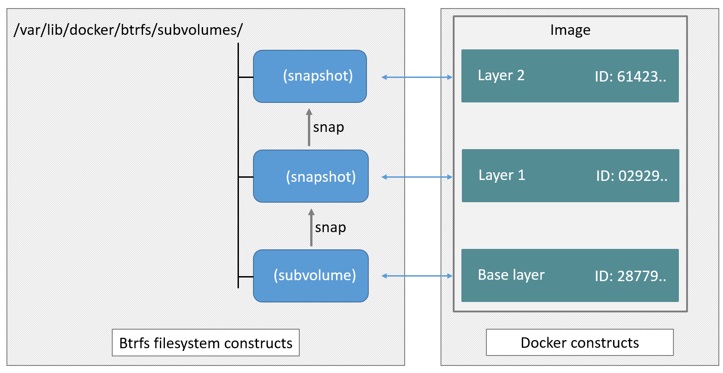

<!--[metadata]>
+++
title = "Btrfs storage in practice"
description = "Learn how to optimize your use of Btrfs driver."
keywords = ["container, storage, driver, Btrfs "]
[menu.main]
parent = "engine_driver"
+++
<![end-metadata]-->

# Docker and Btrfs in practice

Btrfs is a next generation copy-on-write filesystem that supports many advanced
storage technologies that make it a good fit for Docker. Btrfs is included in
the mainline Linux kernel and its on-disk-format is now considered stable.
However, many of its features are still under heavy development and users
should consider it a fast-moving target.

Docker's `btrfs` storage driver leverages many Btrfs features for image and
container management. Among these features are thin provisioning,
copy-on-write, and snapshotting.

This article refers to Docker's Btrfs storage driver as `btrfs` and the overall
Btrfs Filesystem as Btrfs.

>**Note**: The [Commercially Supported Docker Engine (CS-Engine)](https://www.docker.com/compatibility-maintenance) does not currently support the `btrfs` storage driver.

## The future of Btrfs

Btrfs has been long hailed as the future of Linux filesystems. With full
support in the mainline Linux kernel, a stable on-disk-format, and active
development with a focus on stability, this is now becoming more of a reality.

As far as Docker on the Linux platform goes, many people see the `btrfs`
storage driver as a potential long-term replacement for the `devicemapper`
storage driver. However, at the time of writing, the `devicemapper` storage
driver should be considered safer, more stable, and more *production ready*.
You should only consider the `btrfs` driver for production deployments if you
understand it well and have existing experience with Btrfs.

## Image layering and sharing with Btrfs

Docker leverages Btrfs *subvolumes* and *snapshots* for managing the on-disk
components of image and container layers. Btrfs subvolumes look and feel like
a normal Unix filesystem. As such, they can have their own internal directory
structure that hooks into the wider Unix filesystem.

Subvolumes are natively copy-on-write and have space allocated to them
on-demand from an underlying storage pool. They can also be nested and snapped.
The diagram below shows 4 subvolumes. 'Subvolume 2' and 'Subvolume 3' are
nested, whereas 'Subvolume 4' shows its own internal directory tree.

Snapshots are a point-in-time read-write copy of an entire subvolume. They
exist directly below the subvolume they were created from. You can create
snapshots of snapshots as shown in the diagram below.

Btrfs allocates space to subvolumes and snapshots on demand from an underlying
pool of storage. The unit of allocation is referred to as a *chunk*, and
*chunks* are normally ~1GB in size.

Snapshots are first-class citizens in a Btrfs filesystem. This means that they
look, feel, and operate just like regular subvolumes. The technology required
to create them is built directly into the Btrfs filesystem thanks to its
native copy-on-write design. This means that Btrfs snapshots are space
efficient with little or no performance overhead. The diagram below shows a
subvolume and its snapshot sharing the same data.

Docker's `btrfs` storage driver stores every image layer and container in its
own Btrfs subvolume or snapshot. The base layer of an image is stored as a
subvolume whereas child image layers and containers are stored as snapshots.
This is shown in the diagram below.

The high level process for creating images and containers on Docker hosts
running the `btrfs` driver is as follows:

1. The image's base layer is stored in a Btrfs *subvolume* under
`/var/lib/docker/btrfs/subvolumes`.

2. Subsequent image layers are stored as a Btrfs *snapshot* of the parent
layer's subvolume or snapshot.

    The diagram below shows a three-layer image. The base layer is a subvolume.
    Layer 1 is a snapshot of the base layer's subvolume. Layer 2 is a snapshot of
    Layer 1's snapshot.

As of Docker 1.10, image layer IDs no longer correspond to directory names
under `/var/lib/docker/`.

## Image and container on-disk constructs

Image layers and containers are visible in the Docker host's filesystem at
`/var/lib/docker/btrfs/subvolumes/`. However, as previously stated, directory
names no longer correspond to image layer IDs. That said, directories for
containers are present even for containers with a stopped status. This is
because the `btrfs` storage driver mounts a default, top-level subvolume at
`/var/lib/docker/btrfs/subvolumes`. All other subvolumes and snapshots exist
below that as Btrfs filesystem objects and not as individual mounts.

Because Btrfs works at the filesystem level and not the block level, each image
and container layer can be browsed in the filesystem using normal Unix
commands. The example below shows truncated output of an `ls -l` command run
against an image layer:

    $ ls -l /var/lib/docker/btrfs/subvolumes/0a17decee4139b0de68478f149cc16346f5e711c5ae3bb969895f22dd6723751/

    total 0
    drwxr-xr-x 1 root root 1372 Oct  9 08:39 bin
    drwxr-xr-x 1 root root    0 Apr 10  2014 boot
    drwxr-xr-x 1 root root  882 Oct  9 08:38 dev
    drwxr-xr-x 1 root root 2040 Oct 12 17:27 etc
    drwxr-xr-x 1 root root    0 Apr 10  2014 home
    ...output truncated...

## Container reads and writes with Btrfs

A container is a space-efficient snapshot of an image. Metadata in the snapshot
points to the actual data blocks in the storage pool. This is the same as with
a subvolume. Therefore, reads performed against a snapshot are essentially the
same as reads performed against a subvolume. As a result, no performance
overhead is incurred from the Btrfs driver.

Writing a new file to a container invokes an allocate-on-demand operation to
allocate a new data block to the container's snapshot. The file is then written
to this new space. The allocate-on-demand operation is native to all writes
with Btrfs and is the same as writing new data to a subvolume. As a result,
writing new files to a container's snapshot operates at native Btrfs speeds.

Updating an existing file in a container causes a copy-on-write operation
(technically *redirect-on-write*). The driver leaves the original data and
allocates new space to the snapshot. The updated data is written to this new
space. Then, the driver updates the filesystem metadata in the snapshot to
point to this new data. The original data is preserved in-place for subvolumes
and snapshots further up the tree. This behavior is native to copy-on-write
filesystems like Btrfs and incurs very little overhead.

With Btrfs, writing and updating lots of small files can result in slow
performance. More on this later.

## Configuring Docker with Btrfs

The `btrfs` storage driver only operates on a Docker host where
`/var/lib/docker` is mounted as a Btrfs filesystem. The following procedure
shows how to configure Btrfs on Ubuntu 14.04 LTS.

### Prerequisites

If you have already used the Docker daemon on your Docker host and have images
you want to keep, `push` them to Docker Hub or your private Docker Trusted
Registry before attempting this procedure.

Stop the Docker daemon. Then, ensure that you have a spare block device at
`/dev/xvdb`. The device identifier may be different in your environment and you
should substitute your own values throughout the procedure.

The procedure also assumes your kernel has the appropriate Btrfs modules
loaded. To verify this, use the following command:

    $ cat /proc/filesystems | grep btrfs

### Configure Btrfs on Ubuntu 14.04 LTS

Assuming your system meets the prerequisites, do the following:

1. Install the "btrfs-tools" package.

        $ sudo apt-get install btrfs-tools

        Reading package lists... Done
        Building dependency tree
        <output truncated>

2. Create the Btrfs storage pool.

    Btrfs storage pools are created with the `mkfs.btrfs` command. Passing
    multiple devices to the `mkfs.btrfs` command creates a pool across all of
    those devices. Here you create a pool with a single device at `/dev/xvdb`.

        $ sudo mkfs.btrfs -f /dev/xvdb

        WARNING! - Btrfs v3.12 IS EXPERIMENTAL
        WARNING! - see http://btrfs.wiki.kernel.org before using

        Turning ON incompat feature 'extref': increased hardlink limit per file to 65536
        fs created label (null) on /dev/xvdb
            nodesize 16384 leafsize 16384 sectorsize 4096 size 4.00GiB
        Btrfs v3.12

    Be sure to substitute `/dev/xvdb` with the appropriate device(s) on your
    system.

    > **Warning**: Take note of the warning about Btrfs being experimental. As
    noted earlier, Btrfs is not currently recommended for production deployments
    unless you already have extensive experience.

3. If it does not already exist, create a directory for the Docker host's local
storage area at `/var/lib/docker`.

        $ sudo mkdir /var/lib/docker

4. Configure the system to automatically mount the Btrfs filesystem each time
the system boots.

    a. Obtain the Btrfs filesystem's UUID.

        $ sudo blkid /dev/xvdb

        /dev/xvdb: UUID="a0ed851e-158b-4120-8416-c9b072c8cf47" UUID_SUB="c3927a64-4454-4eef-95c2-a7d44ac0cf27" TYPE="btrfs"

    b. Create an `/etc/fstab` entry to automatically mount `/var/lib/docker`
    each time the system boots. Either of the following lines will work; just
    remember to substitute the UUID value with the value obtained from the
    previous command.

        /dev/xvdb /var/lib/docker btrfs defaults 0 0
        UUID="a0ed851e-158b-4120-8416-c9b072c8cf47" /var/lib/docker btrfs defaults 0 0

5. Mount the new filesystem and verify the operation.

        $ sudo mount -a

        $ mount

        /dev/xvda1 on / type ext4 (rw,discard)
        <output truncated>
        /dev/xvdb on /var/lib/docker type btrfs (rw)

    The last line in the output above shows `/dev/xvdb` mounted at
    `/var/lib/docker` as Btrfs.

Now that you have a Btrfs filesystem mounted at `/var/lib/docker`, the daemon
should automatically load with the `btrfs` storage driver.

1. Start the Docker daemon.

        $ sudo service docker start

        docker start/running, process 2315

    The procedure for starting the Docker daemon may differ depending on the
    Linux distribution you are using.

    You can force the Docker daemon to start with the `btrfs` storage
    driver by either passing the `--storage-driver=btrfs` flag to the `docker
    daemon` at startup, or adding it to the `DOCKER_OPTS` line in the Docker
    config file.

2. Verify the storage driver with the `docker info` command.

        $ sudo docker info

        Containers: 0
        Images: 0
        Storage Driver: btrfs
        [...]

Your Docker host is now configured to use the `btrfs` storage driver.

## Btrfs and Docker performance

There are several factors that influence Docker's performance under the `btrfs`
storage driver.

- **Page caching**. Btrfs does not support page cache sharing. This means that
*n* containers accessing the same file require *n* copies to be cached. As a
result, the `btrfs` driver may not be the best choice for PaaS and other high
density container use cases.

- **Small writes**. Containers performing lots of small writes (including
Docker hosts that start and stop many containers) can lead to poor use of Btrfs
chunks. This can ultimately lead to out-of-space conditions on your Docker
host and stop it working. This is currently a major drawback to using current
versions of Btrfs.

    If you use the `btrfs` storage driver, closely monitor the free space on
    your Btrfs filesystem using the `btrfs filesystem show` command. Do not
    trust the output of normal Unix commands such as `df`; always use the Btrfs
    native commands.

- **Sequential writes**. Btrfs writes data to disk using a journaling
technique. This can impact sequential writes, reducing performance by up to
half.

- **Fragmentation**. Fragmentation is a natural byproduct of copy-on-write
filesystems like Btrfs. Many small random writes can compound this issue. It
can manifest as CPU spikes on Docker hosts using SSD media and head thrashing
on Docker hosts using spinning media. Both of these result in poor performance.

    Recent versions of Btrfs allow you to specify `autodefrag` as a mount
    option. This mode attempts to detect random writes and defragment them. You
    should perform your own tests before enabling this option on your Docker
    hosts. Some tests have shown this option has a negative performance impact
    on Docker hosts performing lots of small writes (including systems that
    start and stop many containers).

- **Solid State Devices (SSD)**. Btrfs has native optimizations for SSD media.
To enable these, mount with the `-o ssd` mount option. These optimizations
include enhanced SSD write performance by avoiding things like *seek
optimizations* that have no use on SSD media.

    Btrfs also supports the TRIM/Discard primitives. However, mounting with the
    `-o discard` mount option can cause performance issues. Therefore, it is
    recommended you perform your own tests before using this option.

- **Use Data Volumes**. Data volumes provide the best and most predictable
performance. This is because they bypass the storage driver and do not incur
any of the potential overheads introduced by thin provisioning and
copy-on-write. For this reason, you should place heavy write workloads on data
volumes.

## Related Information

* [Understand images, containers, and storage drivers](imagesandcontainers.md)
* [Select a storage driver](selectadriver.md)
* [AUFS storage driver in practice](aufs-driver.md)
* [Device Mapper storage driver in practice](device-mapper-driver.md)
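
As a companion to the configuration procedure earlier in this article, the kernel check and the `/etc/fstab` step can be wrapped in small shell helpers. The sketch below is illustrative only. The function names `kernel_supports_btrfs` and `docker_fstab_entry` are hypothetical and are not part of Docker or btrfs-tools, and the sketch assumes a `grep` that supports the `-w` flag.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative, not part of Docker or btrfs-tools.

# Succeeds if the filesystem list (default: /proc/filesystems) includes btrfs.
# An alternative file can be passed as the first argument, which helps with testing.
kernel_supports_btrfs() {
    grep -qw btrfs "${1:-/proc/filesystems}"
}

# Prints an /etc/fstab line that mounts the Btrfs filesystem with the
# given UUID at /var/lib/docker, using the same fields as the procedure.
docker_fstab_entry() {
    printf 'UUID=%s /var/lib/docker btrfs defaults 0 0\n' "$1"
}
```

For example, `docker_fstab_entry "$(sudo blkid -s UUID -o value /dev/xvdb)"` prints a line you could review and then append to `/etc/fstab`, substituting your own device for `/dev/xvdb`.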