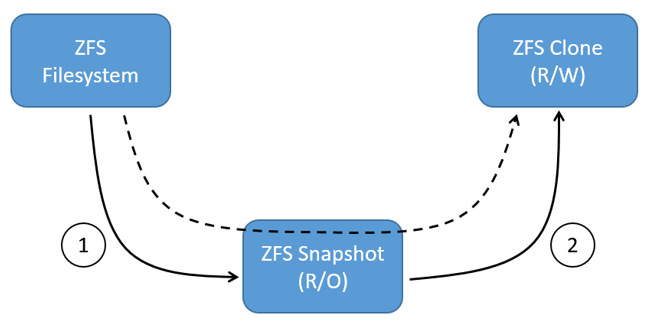

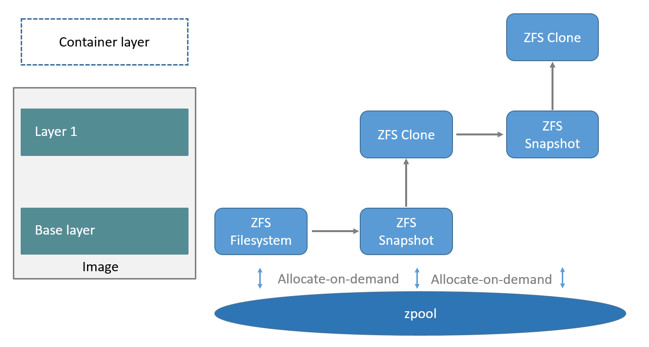

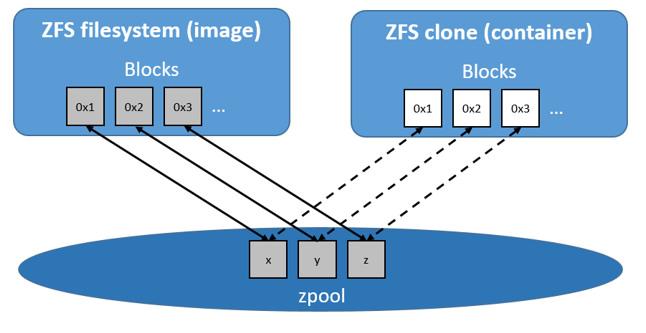

<!--[metadata]>
+++
title = "ZFS storage in practice"
description = "Learn how to optimize your use of ZFS driver."
keywords = ["container, storage, driver, ZFS "]
[menu.main]
parent = "engine_driver"
+++
<![end-metadata]-->

# Docker and ZFS in practice

ZFS is a next-generation filesystem that supports many advanced storage
technologies, such as volume management, snapshots, checksumming, compression,
deduplication, and replication.

It was created by Sun Microsystems (now Oracle Corporation) and is open sourced
under the CDDL license. Due to licensing incompatibilities between the CDDL
and GPL, ZFS cannot be shipped as part of the mainline Linux kernel. However,
the ZFS on Linux (ZoL) project provides an out-of-tree kernel module and
userspace tools that can be installed separately.

The ZoL port is healthy and maturing. However, at this time it is not
recommended to use the `zfs` Docker storage driver in production unless you
have substantial experience with ZFS on Linux.

> **Note:** There is also a FUSE implementation of ZFS on the Linux platform.
> It should work with Docker but is not recommended. The native ZFS driver
> (ZoL) is better tested, performs better, and is more widely used. The
> remainder of this document relates to the native ZoL port.

## Image layering and sharing with ZFS

The Docker `zfs` storage driver makes extensive use of three types of ZFS
dataset:

- filesystems
- snapshots
- clones

ZFS filesystems are thinly provisioned and have space allocated to them from a
ZFS pool (zpool) via allocate-on-demand operations. Snapshots and clones are
space-efficient point-in-time copies of ZFS filesystems. Snapshots are
read-only. Clones are read-write.
Clones can only be created from snapshots. This simple relationship is shown
in the diagram below.

The solid line in the diagram shows the process flow for creating a clone.
Step 1 creates a snapshot of the filesystem, and step 2 creates the clone from
the snapshot. The dashed line shows the relationship between the clone and the
filesystem, via the snapshot. All three ZFS datasets draw space from the same
underlying zpool.

On Docker hosts using the `zfs` storage driver, the base layer of an image is a
ZFS filesystem. Each child layer is a ZFS clone based on a ZFS snapshot of the
layer below it. A container is a ZFS clone based on a ZFS snapshot of the top
layer of the image it's created from. All ZFS datasets draw their space from a
common zpool. The diagram below shows how this fits together for a running
container based on a two-layer image.

The following process explains how images are layered and containers created.
The process is based on the diagram above.

1. The base layer of the image exists on the Docker host as a ZFS filesystem.

    This filesystem consumes space from the zpool used to create the Docker
    host's local storage area at `/var/lib/docker`.

2. Additional image layers are clones of the dataset hosting the image layer
    directly below it.

    In the diagram, "Layer 1" is added by making a ZFS snapshot of the base
    layer and then creating a clone from that snapshot. The clone is writable
    and consumes space on demand from the zpool. The snapshot is read-only,
    which keeps the base layer an immutable object.

3. When the container is launched, a read-write layer is added above the image.

    In the diagram above, the container's read-write layer is created by making
    a snapshot of the top layer of the image (Layer 1) and creating a clone from
    that snapshot.

    As changes are made to the container, space is allocated to it from the
    zpool via allocate-on-demand operations. By default, ZFS allocates space in
    128K blocks.

This process of creating child layers and containers from *read-only* snapshots
allows images to be maintained as immutable objects.

## Container reads and writes with ZFS

Container reads with the `zfs` storage driver are straightforward. A newly
launched container is based on a ZFS clone, and this clone initially shares
all of its data with the dataset it was created from. This means that read
operations with the `zfs` storage driver are fast, even if the data being read
has not yet been copied into the container. This sharing of data blocks is
shown in the diagram below.

Writing new data to a container is accomplished via an allocate-on-demand
operation. Every time a new area of the container needs to be written to, a new
block is allocated from the zpool. This means that containers (ZFS clones)
consume additional space from the underlying zpool as new data is written to
them.

Updating *existing data* in a container is accomplished by allocating new
blocks to the container's clone and storing the changed data in those new
blocks. The original blocks are unchanged, allowing the underlying image
dataset to remain immutable. This is the same as writing to a normal ZFS
filesystem and is an implementation of copy-on-write semantics.

## Configure Docker with the ZFS storage driver

The `zfs` storage driver is only supported on a Docker host where
`/var/lib/docker` is mounted as a ZFS filesystem. This section shows you how to
install and configure native ZFS on Linux (ZoL) on Ubuntu 16.04 and 14.04
systems.
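Before you start, you may want to check which filesystem currently backs `/var/lib/docker`. The following POSIX shell function is a minimal sketch and is not part of Docker or ZoL. The name `fstype_for` is hypothetical. The function assumes the Linux `/proc/mounts` format: device, mount point, filesystem type, options, and so on. A path is served by the deepest mount point that contains it, so the function picks the longest matching mount point and prints that mount's filesystem type.

```shell
# fstype_for PATH [MOUNTS_FILE]
# Hypothetical helper (not part of Docker or ZoL): prints the filesystem type
# of the mount that contains PATH. Reads /proc/mounts unless another file in
# the same "device mountpoint fstype options dump pass" format is given.
# Assumption: mount points contain no spaces (the kernel escapes them as \040).
fstype_for() {
    path=$1
    mounts=${2:-/proc/mounts}
    awk -v p="$path" '
        {
            mp = $2
            # A mount point covers p if it is "/", equals p, or is a
            # directory prefix of p ("/var/lib/docker/" for "/var/lib/docker/zfs").
            if (mp == "/" || p == mp || index(p, mp "/") == 1) {
                # ">=" lets a later entry for the same mount point win,
                # because a later mount hides an earlier one on that point.
                if (length(mp) >= best) {
                    best = length(mp)
                    fs = $3
                }
            }
        }
        END { print fs }
    ' "$mounts"
}
```

Once the procedure in this section is complete, `fstype_for /var/lib/docker` should print `zfs`. If your util-linux `findmnt` supports `--target`, then `findmnt -n -o FSTYPE --target /var/lib/docker` gives the same answer without a custom function.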

### Prerequisites

If you have already used the Docker daemon on your Docker host and have images
you want to keep, `push` them to Docker Hub or your private Docker Trusted
Registry before attempting this procedure.

Stop the Docker daemon. Then, ensure that you have a spare block device at
`/dev/xvdb`. The device identifier may be different in your environment, so
substitute your own values throughout the procedure.

### Install ZFS on Ubuntu 16.04 LTS

1. If it is running, stop the Docker daemon.

2. Install the `zfs` package.

        $ sudo apt-get install -y zfs

        Reading package lists... Done
        Building dependency tree
        <output truncated>

3. Verify that the `zfs` module is loaded correctly.

        $ lsmod | grep zfs

        zfs                  2813952  3
        zunicode              331776  1 zfs
        zcommon                57344  1 zfs
        znvpair                90112  2 zfs,zcommon
        spl                   102400  3 zfs,zcommon,znvpair
        zavl                   16384  1 zfs

### Install ZFS on Ubuntu 14.04 LTS

1. If it is running, stop the Docker daemon.

2. Install the `software-properties-common` package.

    This is required for the `add-apt-repository` command.

        $ sudo apt-get install -y software-properties-common

        Reading package lists... Done
        Building dependency tree
        <output truncated>

3. Add the `zfs-native` package archive.

        $ sudo add-apt-repository ppa:zfs-native/stable

        The native ZFS filesystem for Linux. Install the ubuntu-zfs package.
        <output truncated>
        gpg: key F6B0FC61: public key "Launchpad PPA for Native ZFS for Linux" imported
        gpg: Total number processed: 1
        gpg:               imported: 1  (RSA: 1)
        OK

4. Get the latest package lists for all registered repositories and package
    archives.

        $ sudo apt-get update

        Ign http://us-west-2.ec2.archive.ubuntu.com trusty InRelease
        Get:1 http://us-west-2.ec2.archive.ubuntu.com trusty-updates InRelease [64.4 kB]
        <output truncated>
        Fetched 10.3 MB in 4s (2,370 kB/s)
        Reading package lists... Done

5. Install the `ubuntu-zfs` package.

        $ sudo apt-get install -y ubuntu-zfs

        Reading package lists... Done
        Building dependency tree
        <output truncated>

6. Load the `zfs` module.

        $ sudo modprobe zfs

7. Verify that it loaded correctly.

        $ lsmod | grep zfs

        zfs                  2768247  0
        zunicode              331170  1 zfs
        zcommon                55411  1 zfs
        znvpair                89086  2 zfs,zcommon
        spl                    96378  3 zfs,zcommon,znvpair
        zavl                   15236  1 zfs

## Configure ZFS for Docker

Once ZFS is installed and loaded, you're ready to configure ZFS for Docker.

1. Create a new `zpool`.

        $ sudo zpool create -f zpool-docker /dev/xvdb

    The command creates the `zpool` and gives it the name "zpool-docker". The
    name is arbitrary.

2. Check that the `zpool` exists.

        $ sudo zfs list

        NAME          USED  AVAIL  REFER  MOUNTPOINT
        zpool-docker   55K  3.84G    19K  /zpool-docker

3. Create a new ZFS filesystem and mount it at `/var/lib/docker`.

        $ sudo zfs create -o mountpoint=/var/lib/docker zpool-docker/docker

4. Check that the previous step worked.

        $ sudo zfs list -t all

        NAME                  USED  AVAIL  REFER  MOUNTPOINT
        zpool-docker         93.5K  3.84G    19K  /zpool-docker
        zpool-docker/docker    19K  3.84G    19K  /var/lib/docker

    Now that you have a ZFS filesystem mounted at `/var/lib/docker`, the daemon
    should automatically select the `zfs` storage driver when it starts.

5. Start the Docker daemon.

        $ sudo service docker start

        docker start/running, process 2315

    The procedure for starting the Docker daemon may differ depending on the
    Linux distribution you are using.
    It is possible to force the Docker daemon to start with the `zfs` storage
    driver by passing the `--storage-driver=zfs` flag to the `dockerd` command,
    or by adding it to the `DOCKER_OPTS` line in the Docker config file.

6. Verify that the daemon is using the `zfs` storage driver.

        $ sudo docker info

        Containers: 0
        Images: 0
        Storage Driver: zfs
         Zpool: zpool-docker
         Zpool Health: ONLINE
         Parent Dataset: zpool-docker/docker
         Space Used By Parent: 27648
         Space Available: 4128139776
         Parent Quota: no
         Compression: off
        Execution Driver: native-0.2
        [...]

    The output of the command above shows that the Docker daemon is using the
    `zfs` storage driver and that the parent dataset is the
    `zpool-docker/docker` filesystem created earlier.

Your Docker host is now using ZFS to store and manage its images and containers.

## ZFS and Docker performance

There are several factors that influence the performance of Docker using the
`zfs` storage driver.

- **Memory**. Memory has a major impact on ZFS performance. This is because
  ZFS was originally designed for use on big Sun Solaris servers with large
  amounts of memory. Keep this in mind when sizing your Docker hosts.

- **ZFS Features**. Using ZFS features such as deduplication can significantly
  increase the amount of memory ZFS uses. For memory consumption and
  performance reasons, it is recommended to turn off ZFS deduplication.
  However, deduplication at other layers in the stack (such as SAN or NAS
  arrays) can still be used, as these do not impact ZFS memory usage and
  performance. If you use SAN, NAS, or other hardware RAID technologies, you
  should continue to follow existing best practices for using them with ZFS.

- **ZFS Caching**. ZFS caches disk blocks in a memory structure called the
  adaptive replacement cache (ARC).
  The *Single Copy ARC* feature of ZFS allows a single cached copy of a block
  to be shared by multiple clones of a filesystem. This means that multiple
  running containers can share a single copy of a cached block, which makes
  ZFS a good option for PaaS and other high-density use cases.

- **Fragmentation**. Fragmentation is a natural byproduct of copy-on-write
  filesystems like ZFS. However, ZFS writes in 128K blocks and allocates
  *slabs* (multiple 128K blocks) to CoW operations in an attempt to reduce
  fragmentation. The ZFS intent log (ZIL) and the coalescing of writes
  (delayed writes) also help to reduce fragmentation.

- **Use the native ZFS driver for Linux**. Although the Docker `zfs` storage
  driver supports the ZFS FUSE implementation, it is not recommended when high
  performance is required. The native ZFS on Linux driver tends to perform
  better than the FUSE implementation.

The following generic performance best practices also apply to ZFS.

- **Use of SSD**. For best performance, it is always a good idea to use fast
  storage media such as solid-state drives (SSDs). However, if you only have a
  limited amount of SSD storage available, it is recommended to place the ZIL
  on SSD.

- **Use Data Volumes**. Data volumes provide the best and most predictable
  performance. This is because they bypass the storage driver and do not incur
  any of the potential overheads introduced by thin provisioning and
  copy-on-write. For this reason, you should place heavy write workloads on
  data volumes.
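You can check the memory and caching points above on a running host. ZFS on Linux publishes ARC counters in `/proc/spl/kstat/zfs/arcstats` as `name type data` rows. There, `size` is the current ARC size and `c_max` is its configured ceiling, both in bytes. The sketch below assumes that file layout. The function names `arc_stat` and `arc_mib` are hypothetical helpers, not ZoL commands.

```shell
# arc_stat NAME [ARCSTATS_FILE]
# Hypothetical helper: prints the value of one ARC counter. Assumes the ZoL
# kstat layout of "name type data" rows; exits non-zero if NAME is absent.
arc_stat() {
    awk -v n="$1" '
        $1 == n { print $3; found = 1 }
        END { exit (found ? 0 : 1) }
    ' "${2:-/proc/spl/kstat/zfs/arcstats}"
}

# arc_mib NAME [ARCSTATS_FILE]
# Hypothetical helper: like arc_stat, but converts bytes to whole MiB.
arc_mib() {
    bytes=$(arc_stat "$@") || return 1
    echo $((bytes / 1048576))
}
```

For example, `arc_mib size` reports how much memory the ARC is using now, and `arc_mib c_max` reports how large it is allowed to grow. To confirm that deduplication is off as recommended, run `sudo zfs get dedup zpool-docker/docker`. It should report `off`, which is the ZFS default.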