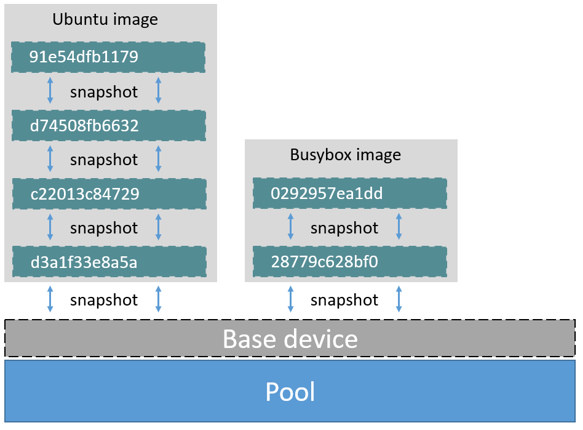

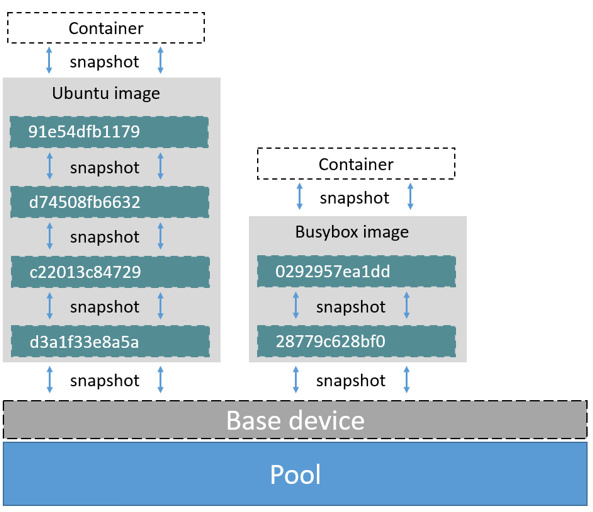

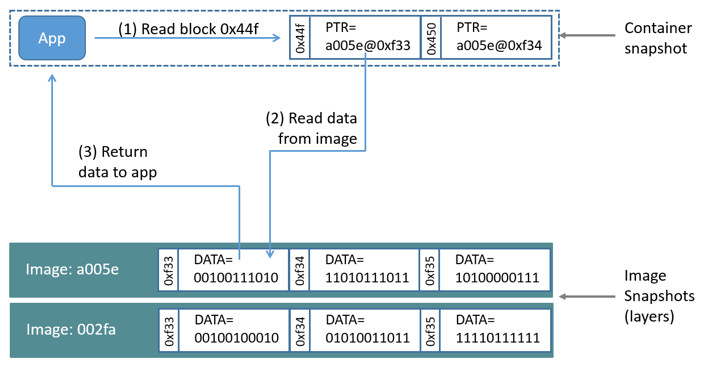

<!--[metadata]>
+++
title="Device mapper storage in practice"
description="Learn how to optimize your use of device mapper driver."
keywords=["container, storage, driver, device mapper"]
[menu.main]
parent="mn_storage_docker"
+++
<![end-metadata]-->

# Docker and the Device Mapper storage driver

Device Mapper is a kernel-based framework that underpins many advanced
volume management technologies on Linux. Docker's `devicemapper` storage driver
leverages the thin provisioning and snapshotting capabilities of this framework
for image and container management. This article refers to the Device Mapper
storage driver as `devicemapper`, and the kernel framework as `Device Mapper`.

>**Note**: The [Commercially Supported Docker Engine (CS-Engine) running on RHEL and CentOS Linux](https://www.docker.com/compatibility-maintenance) requires that you use the `devicemapper` storage driver.

## An alternative to AUFS

Docker originally ran on Ubuntu and Debian Linux and used AUFS for its storage
backend. As Docker became popular, many of the companies that wanted to use it
were using Red Hat Enterprise Linux (RHEL). Unfortunately, because the upstream
mainline Linux kernel did not include AUFS, RHEL did not use AUFS either.

To address this, Red Hat developers investigated getting AUFS into the mainline
kernel. Ultimately, though, they decided a better idea was to develop a new
storage backend, based on the existing `Device Mapper` technology.

Red Hat collaborated with Docker Inc. to contribute this new driver. As a result
of this collaboration, the Docker Engine was re-engineered to make the storage
backend pluggable. This is how `devicemapper` became the second storage driver
Docker supported.

Device Mapper has been included in the mainline Linux kernel since version
2.6.9. It is a core part of the RHEL family of Linux distributions. This means
that the `devicemapper` storage driver is based on stable code that has a lot of
real-world production deployments and strong community support.

## Image layering and sharing

The `devicemapper` driver stores every image and container on its own virtual
device. These devices are thin-provisioned copy-on-write snapshot devices.
Device Mapper technology works at the block level rather than the file level.
This means that the `devicemapper` storage driver's thin provisioning and
copy-on-write operations work with blocks rather than entire files.

>**Note**: Snapshots are also referred to as *thin devices* or *virtual devices*. They all mean the same thing in the context of the `devicemapper` storage driver.

With `devicemapper`, the high-level process for creating images is as follows:

1. The `devicemapper` storage driver creates a thin pool.

    The pool is created from block devices or loop-mounted sparse files (more on this later).

2. Next, it creates a *base device*.

    A base device is a thin device with a filesystem. You can see which filesystem is in use by running the `docker info` command and checking the `Backing Filesystem` value.

3. Each new image (and image layer) is a snapshot of this base device.

    These are thin-provisioned copy-on-write snapshots. This means that they are initially empty and only consume space from the pool when data is written to them.

With `devicemapper`, container layers are snapshots of the image they are created from. Just as with images, container snapshots are thin-provisioned copy-on-write snapshots. The container snapshot stores all updates to the container. The `devicemapper` driver allocates space to them on demand from the pool as data is written to the container.
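
The three steps above map onto ordinary Device Mapper thin-provisioning operations. The following is a minimal dry-run sketch, assuming the `dmsetup` table and message syntax from the Linux kernel's thin-provisioning documentation; it prints commands instead of running them, and it is not the driver's own code. The device names `pool`, `base`, and `layer1`, the 10GiB base size, and the low water mark are hypothetical example values. It also shows why the `Pool Blocksize` of 65.54 kB corresponds to 128 sectors of 512 bytes.

```bash
#!/usr/bin/env bash
# Dry-run sketch: prints (does not run) dmsetup commands that build a thin
# pool, a base thin device, and one snapshot of it. Names are hypothetical.
set -euo pipefail

SECTOR_BYTES=512                               # dmsetup tables count 512-byte sectors
BLOCK_SECTORS=$(( 64 * 1024 / SECTOR_BYTES ))  # 64KB pool blocks = 128 sectors
LOW_WATER_MARK=32768                           # free pool blocks that trigger a dm event

# gib_to_sectors SIZE_GIB -> number of 512-byte sectors
gib_to_sectors() {
  echo $(( $1 * 1024 * 1024 * 1024 / SECTOR_BYTES ))
}

# plan_thin_pool DATA_DEV META_DEV DATA_GIB BASE_GIB
plan_thin_pool() {
  local data_dev=$1 meta_dev=$2
  local pool_sectors base_sectors
  pool_sectors=$(gib_to_sectors "$3")
  base_sectors=$(gib_to_sectors "$4")

  # Step 1: the thin pool, built on a metadata device and a data device.
  echo "dmsetup create pool --table '0 $pool_sectors thin-pool $meta_dev $data_dev $BLOCK_SECTORS $LOW_WATER_MARK'"

  # Step 2: the base device (thin device id 0); a filesystem would go on it.
  echo "dmsetup message /dev/mapper/pool 0 'create_thin 0'"
  echo "dmsetup create base --table '0 $base_sectors thin /dev/mapper/pool 0'"

  # Step 3: an image layer as thin device id 1, a snapshot of device 0.
  # An active origin must be suspended while the snapshot is taken.
  echo "dmsetup suspend /dev/mapper/base"
  echo "dmsetup message /dev/mapper/pool 0 'create_snap 1 0'"
  echo "dmsetup resume /dev/mapper/base"
  echo "dmsetup create layer1 --table '0 $base_sectors thin /dev/mapper/pool 1'"
}

plan_thin_pool /dev/vg-docker/data /dev/vg-docker/metadata 90 10
```

Printing rather than executing keeps the sketch safe to run on any machine; running the printed commands for real requires root and spare block devices.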

The high-level diagram below shows a thin pool with a base device and two images.

If you look closely at the diagram, you'll see that it's snapshots all the way down. Each image layer is a snapshot of the layer below it. The lowest layer of each image is a snapshot of the base device that exists in the pool. This base device is a `Device Mapper` artifact and not a Docker image layer.

A container is a snapshot of the image it is created from. The diagram below shows two containers: one based on the Ubuntu image and the other based on the Busybox image.

## Reads with the devicemapper

Let's look at how reads and writes occur using the `devicemapper` storage driver. The diagram below shows the high-level process for reading a single block (`0x44f`) in an example container.

1. An application makes a read request for block `0x44f` in the container.

    Because the container is a thin snapshot of an image, it does not have the data. Instead, it has a pointer (PTR) to where the data is stored in the image snapshot lower down in the image stack.

2. The storage driver follows the pointer to block `0xf33` in the snapshot relating to image layer `a005...`.

3. The `devicemapper` driver copies the contents of block `0xf33` from the image snapshot to memory in the container.

4. The storage driver returns the data to the requesting application.

### Write examples

With the `devicemapper` driver, writing new data to a container is accomplished by an *allocate-on-demand* operation. Updating existing data uses a copy-on-write operation. Because Device Mapper is a block-based technology, these operations occur at the block level.

For example, when making a small change to a large file in a container, the `devicemapper` storage driver does not copy the entire file. It only copies the blocks to be modified. Each block is 64KB.
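
To make the 64KB granularity concrete, the sketch below counts how many 64KB blocks a byte range covers and how many bytes a first-time overwrite would copy into the container snapshot. It is a simplified model: it treats the offset as a position on the thin device itself, whereas in reality the filesystem on the device decides where a file's bytes land. The function names `blocks_touched` and `cow_bytes` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: block-level copy-on-write arithmetic for 64KB pool blocks.
# Simplification: OFFSET is treated as a position on the thin device itself.

BLOCK_SIZE=$(( 64 * 1024 ))

# blocks_touched OFFSET LENGTH -> number of 64KB blocks the byte range covers
blocks_touched() {
  local offset=$1 length=$2
  if (( length <= 0 )); then
    echo 0
    return
  fi
  local first=$(( offset / BLOCK_SIZE ))
  local last=$(( (offset + length - 1) / BLOCK_SIZE ))
  echo $(( last - first + 1 ))
}

# cow_bytes OFFSET LENGTH -> bytes copied into the container snapshot the
# first time this range is modified (always whole blocks)
cow_bytes() {
  echo $(( $(blocks_touched "$1" "$2") * BLOCK_SIZE ))
}

# 32KB update at the 512MiB mark, inside a single block.
# prints: aligned 32KB update copies 65536 bytes
echo "aligned 32KB update copies $(cow_bytes $(( 512 * 1024 * 1024 )) $(( 32 * 1024 ))) bytes"

# The same 32KB starting at 48KB straddles a block boundary.
# prints: unaligned 32KB update copies 131072 bytes
echo "unaligned 32KB update copies $(cow_bytes $(( 48 * 1024 )) $(( 32 * 1024 ))) bytes"
```

Under this model, a write smaller than 64KB touches one block only when it falls inside a single block; one that crosses a 64KB boundary touches two.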

#### Writing new data

To write 56KB of new data to a container:

1. An application makes a request to write 56KB of new data to the container.

2. The allocate-on-demand operation allocates a single new 64KB block to the container's snapshot.

    If the write operation is larger than 64KB, multiple new blocks are allocated to the container's snapshot.

3. The data is written to the newly allocated block.

#### Overwriting existing data

To modify existing data for the first time:

1. An application makes a request to modify some data in the container.

2. A copy-on-write operation locates the blocks that need updating.

3. The operation allocates new blocks to the container snapshot and copies the existing data from the image snapshot into those blocks.

4. The modified data is written into the newly allocated blocks.

The application in the container is unaware of any of these
allocate-on-demand and copy-on-write operations. However, they may add latency
to the application's read and write operations.

## Configuring Docker with Device Mapper

`devicemapper` is the default Docker storage driver on some Linux
distributions, including RHEL and most of its forks. Currently, the following
distributions support the driver:

* RHEL/CentOS/Fedora
* Ubuntu 12.04
* Ubuntu 14.04
* Debian

Docker hosts running the `devicemapper` storage driver default to a
configuration mode known as `loop-lvm`. This mode uses sparse files to build
the thin pool used by image and container snapshots. The mode is designed to
work out of the box with no additional configuration. However, production
deployments should not run in `loop-lvm` mode.
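
The sparse files behind `loop-lvm` report a large apparent size while consuming almost no disk space until data is written to them. The sketch below reproduces that behavior with an ordinary 1GiB sparse file in a temporary directory. It is an analogy for the loop files, not something Docker runs, and it assumes Linux, GNU coreutils (`truncate`, `stat -c`, `du`, `dd`), and a filesystem that supports sparse files.

```bash
#!/usr/bin/env bash
# Sketch: a sparse file reports a large apparent size but only consumes disk
# blocks where data has actually been written (GNU coreutils, Linux).
set -euo pipefail

demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
sparse_file="$demo_dir/data"

truncate -s 1G "$sparse_file"              # 1GiB apparent size, no data yet
apparent_bytes=$(stat -c %s "$sparse_file")
allocated_before_kb=$(du -k "$sparse_file" | cut -f1)

# Write one 64KB chunk of random bytes at the 512MiB mark (8192 x 64KB)
# without truncating the file; random data defeats compression/zero detection.
dd if=/dev/urandom of="$sparse_file" bs=64K count=1 seek=8192 \
   conv=notrunc iflag=fullblock status=none
sync
allocated_after_kb=$(du -k "$sparse_file" | cut -f1)

echo "apparent size:       $apparent_bytes bytes"
echo "allocated before dd: ${allocated_before_kb}KB"
echo "allocated after dd:  ${allocated_after_kb}KB"
```

On a host in `loop-lvm` mode, the same `stat -c %s` versus `du -k` comparison can be pointed at the data loop file path that `docker info` reports.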

You can detect the mode by viewing the output of the `docker info` command:

    $ sudo docker info
    Containers: 0
    Images: 0
    Storage Driver: devicemapper
    Pool Name: docker-202:2-25220302-pool
    Pool Blocksize: 65.54 kB
    Backing Filesystem: xfs
    ...
    Data loop file: /var/lib/docker/devicemapper/devicemapper/data
    Metadata loop file: /var/lib/docker/devicemapper/devicemapper/metadata
    Library Version: 1.02.93-RHEL7 (2015-01-28)
    ...

The output above shows a Docker host running the `devicemapper` storage driver in `loop-lvm` mode. This is indicated by the `Data loop file` and `Metadata loop file` entries, which point to files under `/var/lib/docker/devicemapper/devicemapper`. These are loopback-mounted sparse files.

### Configure direct-lvm mode for production

The preferred configuration for production deployments is `direct-lvm`. This
mode uses block devices to create the thin pool. The following procedure shows
you how to configure a Docker host to use the `devicemapper` storage driver in a
`direct-lvm` configuration.

> **Caution:** If you have already run the Docker daemon on your Docker host and have images you want to keep, `push` them to Docker Hub or your private Docker Trusted Registry before attempting this procedure.

The procedure below creates a 90GB data volume and a 4GB metadata volume to use as backing for the storage pool. It assumes that you have a spare block device at `/dev/xvdf` with enough free space to complete the task. The device identifier and volume sizes may be different in your environment; substitute your own values throughout the procedure. The procedure also assumes that the Docker daemon is in the `stopped` state.

1. Log in to the Docker host you want to configure and stop the Docker daemon.

2. If it exists, delete your existing image store by removing the `/var/lib/docker` directory.

        $ sudo rm -rf /var/lib/docker

3. Create an LVM physical volume (PV) on your spare block device using the `pvcreate` command.

        $ sudo pvcreate /dev/xvdf
        Physical volume "/dev/xvdf" successfully created

    The device identifier may be different on your system. Remember to substitute your value in the command above.

4. Create a new volume group (VG) called `vg-docker` using the PV created in the previous step.

        $ sudo vgcreate vg-docker /dev/xvdf
        Volume group "vg-docker" successfully created

5. Create a new 90GB logical volume (LV) called `data` from space in the `vg-docker` volume group.

        $ sudo lvcreate -L 90G -n data vg-docker
        Logical volume "data" created.

    The command creates an LVM logical volume called `data` and an associated block device file at `/dev/vg-docker/data`. In a later step, you instruct the `devicemapper` storage driver to use this block device to store image and container data.

    If you receive a signature detection warning, make sure you are working on the correct devices before continuing. Signature warnings indicate that the device you're working on is currently in use by LVM or has been used by LVM in the past.

6. Create a new 4GB logical volume (LV) called `metadata` from space in the `vg-docker` volume group.

        $ sudo lvcreate -L 4G -n metadata vg-docker
        Logical volume "metadata" created.

    This creates an LVM logical volume called `metadata` and an associated block device file at `/dev/vg-docker/metadata`. In the next step, you instruct the `devicemapper` storage driver to use this block device to store image and container metadata.

7. Start the Docker daemon with the `devicemapper` storage driver and the `--storage-opt` flags.

    The `data` and `metadata` devices that you pass to the `--storage-opt` options were created in the previous steps.

        $ sudo docker daemon --storage-driver=devicemapper --storage-opt dm.datadev=/dev/vg-docker/data --storage-opt dm.metadatadev=/dev/vg-docker/metadata &
        [1] 2163
        [root@ip-10-0-0-75 centos]# INFO[0000] Listening for HTTP on unix (/var/run/docker.sock)
        INFO[0027] Option DefaultDriver: bridge
        INFO[0027] Option DefaultNetwork: bridge
        <output truncated>
        INFO[0027] Daemon has completed initialization
        INFO[0027] Docker daemon commit=0a8c2e3 execdriver=native-0.2 graphdriver=devicemapper version=1.8.2

    It is also possible to set the `--storage-driver` and `--storage-opt` flags in
    the Docker config file and start the daemon normally using the `service` or
    `systemctl` commands.

8. Use the `docker info` command to verify that the daemon is using the `data` and `metadata` devices you created.

        $ sudo docker info
        INFO[0180] GET /v1.20/info
        Containers: 0
        Images: 0
        Storage Driver: devicemapper
        Pool Name: docker-202:1-1032-pool
        Pool Blocksize: 65.54 kB
        Backing Filesystem: xfs
        Data file: /dev/vg-docker/data
        Metadata file: /dev/vg-docker/metadata
        [...]

    The output of the command above shows the storage driver as `devicemapper`. The `Data file` and `Metadata file` lines also confirm that the correct devices are in use.

### Examine devicemapper structures on the host

You can use the `lsblk` command to see the device files created above and the `pool` that the `devicemapper` storage driver creates on top of them.

    $ sudo lsblk
    NAME                       MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    xvda                       202:0    0    8G  0 disk
    └─xvda1                    202:1    0    8G  0 part /
    xvdf                       202:80   0  100G  0 disk
    ├─vg--docker-data          253:0    0   90G  0 lvm
    │ └─docker-202:1-1032-pool 253:2    0  100G  0 dm
    └─vg--docker-metadata      253:1    0    4G  0 lvm
      └─docker-202:1-1032-pool 253:2    0  100G  0 dm

The diagram below shows the diagram from prior examples updated with the details from the `lsblk` command above.

In the diagram, the pool is named `docker-202:1-1032-pool` and spans the `data` and `metadata` devices created earlier. The `devicemapper` driver constructs the pool name as follows:

```
docker-MAJ:MIN-INO-pool
```

`MAJ` and `MIN` are the major and minor numbers of the device that holds the driver's root directory (`/var/lib/docker/devicemapper` by default), and `INO` is that directory's inode number.

Because Device Mapper operates at the block level, it is more difficult to see
diffs between image layers and containers. However, there are two key
directories. The `/var/lib/docker/devicemapper/mnt` directory contains the mount
points for images and containers. The `/var/lib/docker/devicemapper/metadata`
directory contains one file for every image and container snapshot. The files
contain metadata about each snapshot in JSON format.

## Device Mapper and Docker performance

It is important to understand the impact that allocate-on-demand and copy-on-write operations can have on overall container performance.

### Allocate-on-demand performance impact

The `devicemapper` storage driver allocates new blocks to a container via an allocate-on-demand operation. This means that each time an app writes to a new location inside a container, one or more empty blocks have to be located in the pool and mapped into the container.

All blocks are 64KB. A write that uses less than 64KB still results in a single 64KB block being allocated. Writing more than 64KB of data uses multiple 64KB blocks.
This can impact container performance, especially in containers that perform lots of small writes. However, once a block is allocated to a container, subsequent reads and writes can operate directly on that block.

### Copy-on-write performance impact

Each time a container updates existing data for the first time, the `devicemapper` storage driver has to perform a copy-on-write operation. This copies the data from the image snapshot to the container's snapshot. This process can have a noticeable impact on container performance.

All copy-on-write operations have a 64KB granularity. As a result, updating 32KB of a 1GB file causes the driver to copy a single 64KB block into the container's snapshot (assuming the 32KB falls within one block). This has obvious performance advantages over file-level copy-on-write operations, which would require copying the entire 1GB file into the container layer.

In practice, however, containers that perform lots of small block writes (<64KB) can perform worse with `devicemapper` than with AUFS.

### Other device mapper performance considerations

There are several other things that impact the performance of the `devicemapper` storage driver.

- **The mode.** The default mode for Docker running the `devicemapper` storage driver is `loop-lvm`. This mode uses sparse files and suffers from poor performance. It is **not recommended for production**. The recommended mode for production environments is `direct-lvm`, where the storage driver writes directly to raw block devices.

- **High-speed storage.** For best performance, you should place the `Data file` and `Metadata file` on high-speed storage such as SSD. This can be direct-attached storage or storage from a SAN or NAS array.

- **Memory usage.** `devicemapper` is not the most memory-efficient Docker storage driver. Launching *n* copies of the same container loads *n* copies of its files into memory. This can have a memory impact on your Docker host.
  As a result, the `devicemapper` storage driver may not be the best choice for PaaS and other high-density use cases.

One final point: data volumes provide the best and most predictable performance. This is because they bypass the storage driver and do not incur any of the potential overheads introduced by thin provisioning and copy-on-write. For this reason, you may want to place heavy write workloads on data volumes.

## Related Information

* [Understand images, containers, and storage drivers](imagesandcontainers.md)
* [Select a storage driver](selectadriver.md)
* [AUFS storage driver in practice](aufs-driver.md)
* [Btrfs storage driver in practice](btrfs-driver.md)