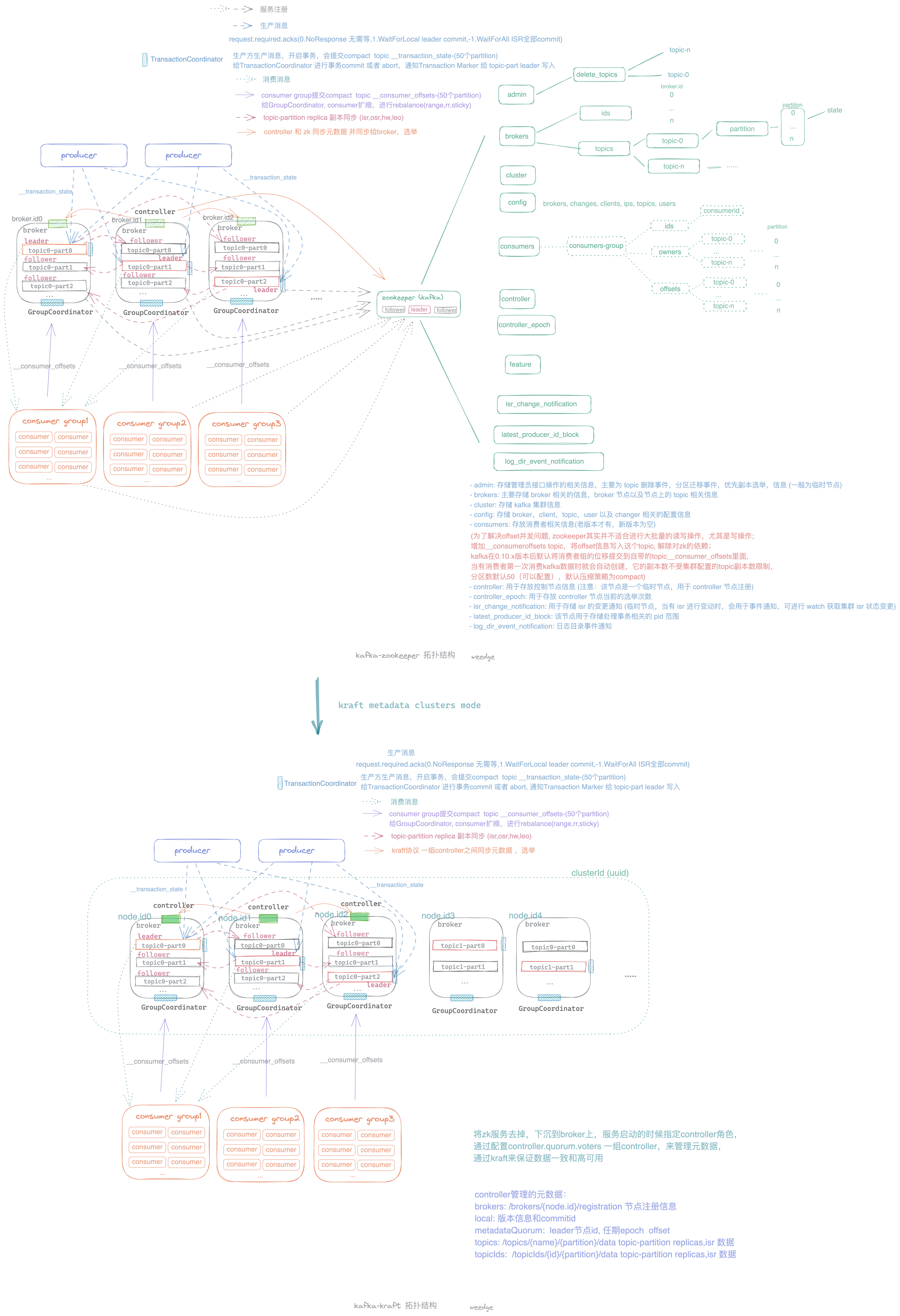

github.com/weedge/lib@v0.0.0-20230424045628-a36dcc1d90e4/client/mq/kafka/readme.md

#### Introduction

This package is a standalone wrapper around the open-source Kafka client library [Shopify/sarama](https://github.com/Shopify/sarama). Sarama itself is compatible with KRaft mode (Kafka 2.8.0+). The wrapper exposes a small set of single-purpose interfaces.

#### Interfaces

##### Authentication Options:

```go
type AuthOptions struct {
	// security-protocol: SSL (the broker default is PLAINTEXT; requires security.inter.broker.protocol=SSL)
	enableSSL bool
	certFile  string // the optional certificate file for client authentication
	keyFile   string // the optional key file for client authentication
	caFile    string // the optional certificate authority file for TLS client authentication
	verifySSL bool   // whether to verify the SSL certificate chain (optional)

	// SASL: PLAINTEXT (Kafka 0.10.0.0+), SCRAM (Kafka 0.10.2.0+, users can be added dynamically), OAUTHBEARER (Kafka 2.0.0+, JWT)
	enableSASL     bool
	authentication string // PLAINTEXT, SCRAM or OAUTHBEARER; defaults to SCRAM when enableSASL is true
	saslUser       string // the SASL username
	saslPassword   string // the SASL password
	scramAlgorithm string // the SASL SCRAM mechanism: sha256 or sha512
}
```

##### Consumer Options:

```go
type ConsumerGroupOptions struct {
	version           string   // Kafka version
	brokerList        []string // Kafka broker ip:port list
	topicList         []string // list of topics to subscribe to
	groupId           string   // consumer group ID
	reBalanceStrategy string   // consumer group partition assignment strategy (range, roundrobin, sticky)
	initialOffset     string   // initial offset to consume from (oldest, newest)

	*auth.AuthOptions
}
```

##### Consumer Group:

```go
// NewConsumerGroup builds the consumer config from the user-supplied options and creates a new ConsumerGroup.
func NewConsumerGroup(name string, msg IConsumerMsg, authOpts []auth.Option, options ...Option) (consumer *ConsumerGroup, err error)

// Start consuming under a cancellable context, optionally bounded by a timeout or a deadline.
func (consumer *ConsumerGroup) Start()
func (consumer *ConsumerGroup) StartWithTimeOut(timeout time.Duration)
func (consumer *ConsumerGroup) StartWithDeadline(time time.Time)

// Close cancels the context and closes the consumer group client.
func (consumer *ConsumerGroup) Close()

// IConsumerMsg is implemented by the user to process each ConsumerMessage.
type IConsumerMsg interface {
	Consumer(msg *sarama.ConsumerMessage) error
}
```

##### Producer Options:

```go
type ProducerOptions struct {
	version           string        // Kafka version
	clientID          string        // the client ID sent with every request to the brokers
	brokerList        []string      // broker list
	partitioning      string        // partitioning strategy: manual (key {partition}), hash or random
	requiredAcks      int           // required acks
	timeOut           time.Duration // how long the producer waits to receive the required acks
	retryMaxCn        int           // maximum number of retries (default: 3)
	compression       string        // message compression (gzip, snappy, lz4, zstd)
	maxOpenRequests   int           // the maximum number of unacknowledged requests the client sends on a single connection before blocking (default: 5)
	maxMessageBytes   int           // the maximum permitted size of a message (default: 1000000)
	channelBufferSize int           // the number of events to buffer in internal and external channels

	// batch flushing
	flushFrequencyMs  int // the best-effort frequency of flushes
	flushBytes        int // the best-effort number of bytes needed to trigger a flush
	flushMessages     int // the best-effort number of messages needed to trigger a flush
	flushMaxMessages  int // the maximum number of messages the producer sends in a single request

	*auth.AuthOptions
}
```

##### Producer:

```go
// NewProducer creates a sync or async producer (pType) for topic, configured via options
// (requiredAcks, retryMaxCn, partitioning, compression, TLS, etc.).
func NewProducer(topic string, pType string, authOpts []auth.Option, options ...Option) (p *Producer)

// Send sends a string message without a key.
func (p *Producer) Send(val string)

// SendByKey sends a string message with a string key.
func (p *Producer) SendByKey(key, val string)

// Close closes the sync or async producer.
func (p *Producer) Close()
```

See the example tests for usage.

#### Kafka topology

#### References

1. [Kafka 0.10.0 documentation](https://kafka.apache.org/0100/documentation.html)
2. [Kafka documentation](https://kafka.apache.org/documentation.html), latest version (3.0 as of 2021-09-21)
3. [Apache Kafka 3.0 released: one step closer to removing ZooKeeper entirely](https://www.infoq.cn/article/RTTzLOMBPOx2TsL7dM9T) (in Chinese)
4. [KIP-500: Replace ZooKeeper with a Self-Managed Metadata Quorum](https://cwiki.apache.org/confluence/display/KAFKA/KIP-500%3A+Replace+ZooKeeper+with+a+Self-Managed+Metadata+Quorum)
5. [KRaft (aka KIP-500) mode Early Access Release](https://github.com/apache/kafka/blob/6d1d68617ecd023b787f54aafc24a4232663428d/config/kraft/README.md)
6. [Short video: running Kafka 2.8 without ZooKeeper](https://asciinema.org/a/403794/embed)
7. [GoLang: do you really understand HTTPS?](https://mp.weixin.qq.com/s/ibwNtDc2zd2tdhMN7iROJw) (in Chinese)
8. [Design and implementation of Zhihu's Kubernetes-based Kafka platform](https://zhuanlan.zhihu.com/p/36366473) (in Chinese)
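The TLS fields of `AuthOptions` (`certFile`, `keyFile`, `caFile`, `verifySSL`) map naturally onto a standard-library `*tls.Config`. The following is a minimal sketch of one plausible mapping. `newTLSConfig` is a hypothetical helper, not this package's code. Treating `verifySSL=false` as `InsecureSkipVerify` is our assumption.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"os"
)

// newTLSConfig is a hypothetical helper (not part of this package's API) showing how
// the AuthOptions TLS fields could be turned into a crypto/tls configuration.
func newTLSConfig(certFile, keyFile, caFile string, verifySSL bool) (*tls.Config, error) {
	cfg := &tls.Config{
		// Assumption: verifySSL=false disables verification of the broker's certificate chain.
		InsecureSkipVerify: !verifySSL,
	}

	// Optional client certificate for mutual TLS; only loaded when both files are given.
	if certFile != "" && keyFile != "" {
		cert, err := tls.LoadX509KeyPair(certFile, keyFile)
		if err != nil {
			return nil, fmt.Errorf("load client key pair: %w", err)
		}
		cfg.Certificates = []tls.Certificate{cert}
	}

	// Optional CA bundle used to verify the brokers' certificates.
	if caFile != "" {
		pemBytes, err := os.ReadFile(caFile)
		if err != nil {
			return nil, fmt.Errorf("read CA file: %w", err)
		}
		pool := x509.NewCertPool()
		if !pool.AppendCertsFromPEM(pemBytes) {
			return nil, errors.New("no valid PEM certificates in CA file")
		}
		cfg.RootCAs = pool
	}
	return cfg, nil
}

func main() {
	cfg, err := newTLSConfig("", "", "", true)
	fmt.Println(err == nil, cfg.InsecureSkipVerify)
}
```

With sarama, a config built this way would typically be assigned to `Net.TLS.Config`, with `Net.TLS.Enable` set to true.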
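`NewConsumerGroup` and `NewProducer` both take `options ...Option`, which points to the functional-options pattern. The following is a minimal sketch of that pattern for a subset of the `ProducerOptions` fields.

Some of the sketch comes from the struct comments: `retryMaxCn` = 3, `maxOpenRequests` = 5 and `maxMessageBytes` = 1000000. The rest is assumed for illustration:

- the `With*` function names;
- the lower-case `producerOptions` type;
- the defaults for `brokerList`, `requiredAcks` and `timeOut`.

```go
package main

import (
	"fmt"
	"time"
)

// producerOptions mirrors a few ProducerOptions fields (hypothetical stand-in).
type producerOptions struct {
	brokerList      []string
	requiredAcks    int
	timeOut         time.Duration
	retryMaxCn      int
	maxOpenRequests int
	maxMessageBytes int
}

// Option mutates producerOptions, matching the `options ...Option` parameter style.
type Option func(*producerOptions)

// WithBrokerList is a hypothetical option setting the broker list.
func WithBrokerList(brokers ...string) Option {
	return func(o *producerOptions) { o.brokerList = brokers }
}

// WithRequiredAcks is a hypothetical option setting the required acks.
func WithRequiredAcks(acks int) Option {
	return func(o *producerOptions) { o.requiredAcks = acks }
}

// WithRetryMaxCn is a hypothetical option setting the maximum number of retries.
func WithRetryMaxCn(n int) Option {
	return func(o *producerOptions) { o.retryMaxCn = n }
}

// defaultProducerOptions fills in defaults before any Option is applied.
func defaultProducerOptions() *producerOptions {
	return &producerOptions{
		brokerList:      []string{"localhost:9092"}, // assumption
		requiredAcks:    1,                          // assumption: wait for the leader only
		timeOut:         10 * time.Second,           // assumption
		retryMaxCn:      3,                          // from the struct comment
		maxOpenRequests: 5,                          // from the struct comment
		maxMessageBytes: 1000000,                    // from the struct comment
	}
}

// newProducerOptions applies each option, in order, on top of the defaults.
func newProducerOptions(opts ...Option) *producerOptions {
	o := defaultProducerOptions()
	for _, opt := range opts {
		opt(o)
	}
	return o
}

func main() {
	o := newProducerOptions(WithBrokerList("kafka-1:9092", "kafka-2:9092"), WithRequiredAcks(-1))
	fmt.Println(o.brokerList, o.requiredAcks, o.retryMaxCn)
}
```

Defaults are set once, and each option overrides a single field, so callers pass only the settings they want to change.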
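With `partitioning` set to `hash`, a keyed message (as sent by `SendByKey`) is assigned to a partition by hashing its key. All messages with the same key therefore land on the same partition. The following is a sketch under stated assumptions:

- It assumes FNV-1a 32-bit hashing, which to our knowledge is what sarama's default hash partitioner uses.
- `hashPartition` is a hypothetical name.
- The result is not guaranteed to match the Java client's murmur2-based partitioning.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashPartition maps a message key to a partition in [0, numPartitions).
// Assumption: modeled on sarama's default hash partitioner (FNV-1a 32-bit hash,
// interpreted as int32, taken modulo numPartitions, then made non-negative).
func hashPartition(key []byte, numPartitions int32) int32 {
	h := fnv.New32a()
	h.Write(key) // hash.Hash's Write never returns an error
	p := int32(h.Sum32()) % numPartitions
	if p < 0 {
		p = -p // Go's % keeps the dividend's sign, so flip negative remainders
	}
	return p
}

func main() {
	for _, key := range []string{"user-42", "order-7"} {
		fmt.Printf("%s -> partition %d\n", key, hashPartition([]byte(key), 6))
	}
}
```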
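The `ProducerOptions` comments describe `flushBytes` and `flushMessages` as best-effort flush triggers. The following sketch assumes that a buffered batch is flushed as soon as either threshold is reached.

- `batcher` is hypothetical.
- The time-based trigger (`flushFrequencyMs`) and the per-request cap (`flushMaxMessages`) are left out to keep the example deterministic.

```go
package main

import "fmt"

// batcher is a hypothetical sketch of best-effort flush triggers: the buffered
// batch is flushed once either the message-count or the byte threshold is reached.
type batcher struct {
	flushMessages int        // flush when this many messages are buffered (0 = disabled)
	flushBytes    int        // flush when this many bytes are buffered (0 = disabled)
	buf           [][]byte   // messages waiting to be sent
	size          int        // total bytes currently buffered
	flushed       [][][]byte // batches "sent" so far, recorded for illustration
}

// add buffers a message and flushes if any threshold has been reached.
func (b *batcher) add(msg []byte) {
	b.buf = append(b.buf, msg)
	b.size += len(msg)
	if (b.flushMessages > 0 && len(b.buf) >= b.flushMessages) ||
		(b.flushBytes > 0 && b.size >= b.flushBytes) {
		b.flush()
	}
}

// flush hands the current batch off (here: records it) and resets the buffer.
func (b *batcher) flush() {
	if len(b.buf) == 0 {
		return
	}
	b.flushed = append(b.flushed, b.buf)
	b.buf = nil
	b.size = 0
}

func main() {
	b := &batcher{flushMessages: 3, flushBytes: 10}
	for _, m := range []string{"aa", "bb", "cc", "0123456789", "x"} {
		b.add([]byte(m))
	}
	b.flush() // flush the remainder, e.g. on Close
	fmt.Println(len(b.flushed))
}
```

In this run:

1. The first batch is flushed by the message count (3 messages).
2. The second batch is flushed by the byte threshold (one 10-byte message).
3. The last batch is flushed explicitly.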