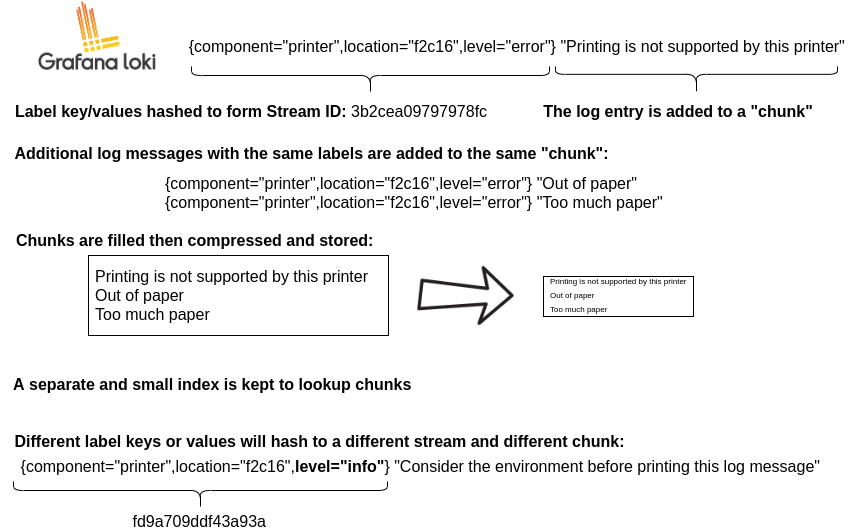

---
title: Architecture
weight: 200
aliases:
    - /docs/loki/latest/architecture/
---
# Grafana Loki's Architecture

## Multi-tenancy

All data, both in memory and in long-term storage, may be partitioned by a
tenant ID, pulled from the `X-Scope-OrgID` HTTP header in the request when Grafana Loki
is running in multi-tenant mode. When Loki is **not** in multi-tenant mode, the
header is ignored and the tenant ID is set to "fake", which will appear in the
index and in stored chunks.

## Chunk Format

```
-------------------------------------------------------------------
|                               |                                 |
|        MagicNumber(4b)        |           version(1b)           |
|                               |                                 |
-------------------------------------------------------------------
|         block-1 bytes         |          checksum (4b)          |
-------------------------------------------------------------------
|         block-2 bytes         |          checksum (4b)          |
-------------------------------------------------------------------
|         block-n bytes         |          checksum (4b)          |
-------------------------------------------------------------------
|                        #blocks (uvarint)                        |
-------------------------------------------------------------------
| #entries(uvarint) | mint, maxt (varint) | offset, len (uvarint) |
-------------------------------------------------------------------
| #entries(uvarint) | mint, maxt (varint) | offset, len (uvarint) |
-------------------------------------------------------------------
| #entries(uvarint) | mint, maxt (varint) | offset, len (uvarint) |
-------------------------------------------------------------------
| #entries(uvarint) | mint, maxt (varint) | offset, len (uvarint) |
-------------------------------------------------------------------
|                     checksum(from #blocks)                      |
-------------------------------------------------------------------
|                   #blocks section byte offset                   |
-------------------------------------------------------------------
```

`mint` and `maxt` describe the minimum and maximum Unix nanosecond timestamps
of each block's entries, respectively.

### Block Format

A block consists of a series of entries, each of which is an individual log
line.

Note that the bytes of a block are stored compressed using Gzip. The following
is their form when uncompressed:

```
-------------------------------------------------------------------
|     ts (varint)     |    len (uvarint)    |     log-1 bytes     |
-------------------------------------------------------------------
|     ts (varint)     |    len (uvarint)    |     log-2 bytes     |
-------------------------------------------------------------------
|     ts (varint)     |    len (uvarint)    |     log-3 bytes     |
-------------------------------------------------------------------
|     ts (varint)     |    len (uvarint)    |     log-n bytes     |
-------------------------------------------------------------------
```

`ts` is the Unix nanosecond timestamp of the log line, and `len` is the length
in bytes of the log line that follows it.

## Storage

### Single Store

Loki stores all data in a single object storage backend. This mode of operation became generally available with Loki 2.0. It is fast, cost-effective, and simple, and it is where all current and future development lies. This mode uses an adapter called [`boltdb_shipper`](../../operations/storage/boltdb-shipper) to store the `index` in object storage (the same way we store `chunks`).

### Deprecated: Multi-store

The **chunk store** is Loki's long-term data store, designed to support
interactive querying and sustained writing without the need for background
maintenance tasks. It consists of:

- An index for the chunks.
  This index can be backed by:
    - [Amazon DynamoDB](https://aws.amazon.com/dynamodb)
    - [Google Bigtable](https://cloud.google.com/bigtable)
    - [Apache Cassandra](https://cassandra.apache.org)
- A key-value (KV) store for the chunk data itself, which can be:
    - [Amazon DynamoDB](https://aws.amazon.com/dynamodb)
    - [Google Bigtable](https://cloud.google.com/bigtable)
    - [Apache Cassandra](https://cassandra.apache.org)
    - [Amazon S3](https://aws.amazon.com/s3)
    - [Google Cloud Storage](https://cloud.google.com/storage/)

> Unlike the other core components of Loki, the chunk store is not a separate
> service, job, or process, but rather a library embedded in the two services
> that need to access Loki data: the [ingester](#ingester) and [querier](#querier).

The chunk store relies on a unified interface to the
"[NoSQL](https://en.wikipedia.org/wiki/NoSQL)" stores (DynamoDB, Bigtable, and
Cassandra) that can be used to back the chunk store index. This interface
assumes that the index is a collection of entries keyed by:

- A **hash key**. This is required for *all* reads and writes.
- A **range key**. This is required for writes and can be omitted for reads,
  which can instead match range keys by prefix or by range.

The interface works somewhat differently across the supported databases:

- DynamoDB supports range and hash keys natively. Index entries are thus
  modelled directly as DynamoDB entries, with the hash key as the distribution
  key and the range key as the DynamoDB range key.
- For Bigtable and Cassandra, index entries are modelled as individual column
  values. The hash key becomes the row key and the range key becomes the column
  key.

A set of schemas is used to map the matchers and label sets used on reads and
writes to the chunk store into appropriate operations on the index.
Schemas have 120 been added as Loki has evolved, mainly in an attempt to better load balance 121 writes and improve query performance. 122 123 ## Read Path 124 125 To summarize, the read path works as follows: 126 127 1. The querier receives an HTTP/1 request for data. 128 1. The querier passes the query to all ingesters for in-memory data. 129 1. The ingesters receive the read request and return data matching the query, if 130 any. 131 1. The querier lazily loads data from the backing store and runs the query 132 against it if no ingesters returned data. 133 1. The querier iterates over all received data and deduplicates, returning a 134 final set of data over the HTTP/1 connection. 135 136 ## Write Path 137 138  139 140 To summarize, the write path works as follows: 141 142 1. The distributor receives an HTTP/1 request to store data for streams. 143 1. Each stream is hashed using the hash ring. 144 1. The distributor sends each stream to the appropriate ingesters and their 145 replicas (based on the configured replication factor). 146 1. Each ingester will create a chunk or append to an existing chunk for the 147 stream's data. A chunk is unique per tenant and per labelset. 148 1. The distributor responds with a success code over the HTTP/1 connection.