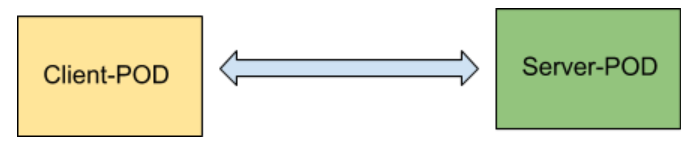

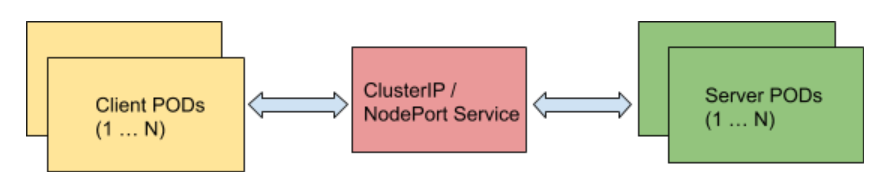

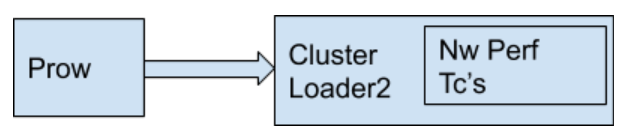

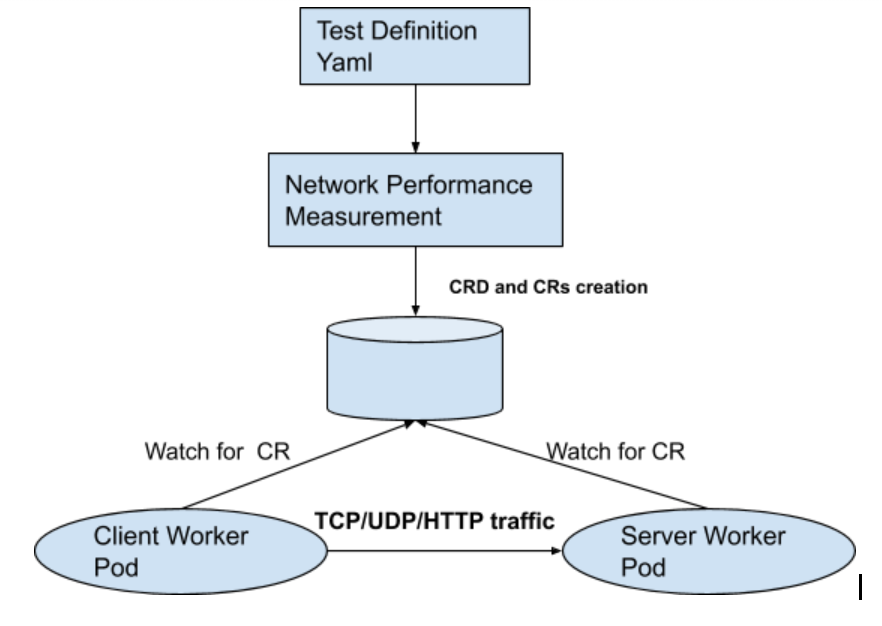

k8s.io/perf-tests/clusterloader2@v0.0.0-20240304094227-64bdb12da87e/docs/network-perf-testcase-enhancement/README.md (about) 1 # Enhancing Network Performance Benchmarking Testcases 2 3 - [Summary](#summary) 4 - [Motivation](#motivation) 5 - [Goals](#goals) 6 - [Proposal](#proposal) 7 - [Test Scenarios](#test-scenarios) 8 - [Tools](#tools) 9 - [Resource Requirements](#resource-requirements) 10 - [Future Enhancements](#future-enhancements) 11 - [Benefits](#benefits) 12 - [Design Details](#design-details) 13 - [NetworkPerformanceMetric measurement](#networkperformancemetric-measurement) 14 - [Metrics visualization](#metrics-visualization) 15 16 ## Summary 17 18 We are proposing to add more testcases for network performance benchmarking. 19 20 ## Motivation 21 22 As Kubernetes is increasingly being adopted by various telco cloud providers for deployments, 23 it brings additional networking requirements/scenarios to be supported. 24 With wide array of network functions being containerized, the need to analyse and 25 monitor the networking performance metrics such as latency, throughput etc has arisen. 26 27 We have evaluated testcases in following 3 frameworks which have limited testcases for network performance benchmarking. 28 29 * [E2E](https://github.com/kubernetes/community/blob/master/contributors/devel/sig-testing/e2e-tests.md): This framework has only 2 testcases for measuring throughput. 30 31 * [netperf](https://github.com/kubernetes/perf-tests/tree/master/network/benchmarks/netperf) : This framework 32 measures only the throughput between client-server pods for various MSS(Maximum Segment size). 33 Other performance metrics such as latency , loss-rate , response time are not measured. 34 35 * [clusterloader2](https://github.com/kubernetes/perf-tests/tree/master/clusterloader2) : This framework 36 measures only network latency. Other performance metrics are not measured. 37 38 ### Goals 39 40 The primary goal of this proposal is to enhance the testcases to cover various 41 networking scenarios : 42 43 1. IPv4 , IPv6 , Dual-Stack. 44 2. Protocols: TCP, UDP, HTTP/HTTPS. 45 3. Pod-to-Pod , with services ClusterIP , NodePort etc. 46 47 We intend to submit tests to measure the following network performance metrics for various 48 scenarios such as different services (ClusterIP/NodePort), with IPv4/IPv6 configured etc. 49 50 1. Throughput (For TCP traffic) 51 2. Packets per second (For UDP traffic) 52 3. Loss rate (For UDP traffic) 53 4. Latency/Jitter (For UDP/HTTP/HTTPS) 54 (Note: Latency being measured is a one-way latency) 55 5. Response time (For HTTP/HTTPs) 56 (Note: Response time is a two-way latency plus processing time) 57 58 Our important goal is to make sure these benchmarking testcases are run periodically, 59 so that any degradation in performance is detected in early phase of feature delivery. 60 61 62 ## Proposal 63 64 ### Test Scenarios 65 66 1. Baseline Testcases 67 68 Following testcases will be able to gather the baseline values for network performance metrics. 69 70 1.1 Point-To-Point (P2P) 71 72  73 74 1.1.1 Client-Server POD on different worker node (1:1) 75 76 Testcase-Id | Testcase Description | Tool | Metrics Measured 77 ----------- | ---------------------|------|----------------- 78 TC1.1.1.1 | Send TCP traffic from client pod IP to server pod IP | iperf3 | Throughput in Gbits/s 79 TC1.1.1.2 | Send UDP traffic from client pod IP to server pod IP | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 80 TC1.1.1.3 | Send HTTP traffic from client pod IP to server pod IP | siege | Latency , ResponseTime 81 82 83 1.2 ClusterIP / NodePort Services 84 85  86 87 1.2.1 Client-Server POD on different worker node (1:1) 88 89 TestcaseId | Testcase Description | Tool | Metrics Measured 90 ----------- | ---------------------|------|----------------- 91 TC1.2.1.1 | Send TCP traffic from client pod IP to ClusterIP. 1 server pod is located behind ClusterIP service. | iperf3 | Throughput in Gbits/s 92 TC1.2.1.2 | Send UDP traffic from client pod IP to ClusterIP. 1 server pod is located behind ClusterIP service. | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 93 TC1.2.1.3 | Send HTTP traffic from client pod IP to ClusterIP. 1 server pod is located behind ClusterIP service. | siege | Latency , ResponseTime 94 TC1.2.1.4 | Send TCP traffic from client pod IP to NodePort svc IP. 1 server pod is located behind NodePort service. | iperf3 | Throughput in Gbits/s 95 TC1.2.1.5 | Send UDP traffic from client pod IP to NodePort svc IP. 1 server pod is located behind NodePort service. | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 96 TC1.2.1.6 | Send HTTP traffic from client pod IP to NodePort svcIP. 1 server pod is located behind NodePort service. | siege | Latency , ResponseTime 97 98 99 2. Scale Testcases 100 101 2.1 Point-To-Point (P2P) 102 103 2.1.1 Client-Server POD on different worker node (N:1) 104 105 TestcaseId | Testcase Description | Tool | Metrics Measured 106 ----------- | ---------------------|------|----------------- 107 TC2.1.1.1 | Send TCP traffic from N=50 client pods to 1 server pod IP. | iperf3 | Throughput in Gbits/s 108 TC2.1.1.2 | Send TCP traffic from N=100 client pods to 1 server pod IP. | iperf3 | Throughput in Gbits/s 109 TC2.1.1.3 | Send UDP traffic from N=50 client pods to 1 server pod IP. | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 110 TC2.1.1.4 | Send UDP traffic from N=100 client pods to 1 server pod IP. | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 111 TC2.1.1.5 | Send HTTP traffic from N=50 client pods to 1 server pod IP. | siege | Latency , ResponseTime 112 TC2.1.1.6 | Send HTTP traffic from N=100 client pods to 1 server pod IP. | siege | Latency , ResponseTime 113 114 115 2.1.2 Client-Server POD on different worker node (N:M) 116 117 * A pair of client and server pod will be selected out of N/M pod. 118 * Client POD size N=50 and Server POD size M=50. 119 * This test will require 100 worker nodes i.e N+M, so that we can make sure client and server pods are not co-located. 120 * Each metric will be a percentile of 50 pairs. 121 122 123 TestcaseId | Testcase Description | Tool | Metrics Measured 124 ----------- | ---------------------|------|----------------- 125 TC2.1.2.1 | Send TCP traffic from client pod IP to server pod IP (1:1) | iperf3 | Throughput in Gbits/s 126 TC2.1.2.2 | Send UDP traffic from client pod IP to server pod IP (1:1) | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 127 TC2.1.2.3 | Send HTTP traffic from client pod IP to server pod IP (1:1) | siege | Latency , ResponseTime 128 129 130 2.2 ClusterIP / NodePort Services 131 132 N:1 Scenario has been intentionally omitted because, in real-world deployment scenarios application 133 pods will be more than one behind services such as ClusterIP/NodePort. 134 135 2.2.1 Client-Server POD on different worker node (N:M) (HTTP traffic) 136 137 * Client POD size N=50 and Server POD size M=50. 5 HTTP Server PODs will be grouped under 1 Service. 138 * 5 Client PODS will send traffic to 1 ClusterIP/NodePort svc IP. 139 * Below testcases will measure the network performance metrics with scaled service IPs (1 ClientPOD -> 1 ServiceIP -> 1 ServerPOD), as well as with load-balancing (N ClientPOD -> 1 ServiceIP -> N ServerPOD). 140 141 TestcaseId | Testcase Description | Tool | Metrics Measured 142 ----------- | ---------------------|------|----------------- 143 TC2.2.1.1 | Send HTTP traffic from 1 client pod IPs to ClusterIP. 1 server pod is located behind ClusterIP service (1:1) | siege | Latency , ResponseTime 144 TC2.2.1.2 | Send HTTP traffic from 1 client pod IPs to NodePort svc IP. 1 server pod is located behind NodePort service (1:1) | siege | Latency , ResponseTime 145 TC2.2.1.3 | Send HTTP traffic from 5 client pod IPs to ClusterIP. 5 server pods are located behind ClusterIP service. | siege | Latency , ResponseTime 146 TC2.2.1.4 | Send HTTP traffic from 5 client pod IPs to NodePort svc IP. 5 server pods are located behind NodePort service. | siege | Latency , ResponseTime 147 148 2.2.2 Client-Server POD on different worker node (N:M) (TCP/UDP traffic) 149 150 * Client POD size N=50 and Server POD size M=50. Each server pod will be behind one unique ClusterIP/NodePort Svc. So 50 clusterIP will be created for TCP/UDP traffic only. ( 1 Client POD ---> 1 ClusterIP ---> 1 Server POD) 151 * This test will require 100 worker nodes i.e N+M, so that we can make sure client and server pods are not co-located. 152 * Each metric will be a percentile of 50 pairs. 153 154 TestcaseId | Testcase Description | Tool | Metrics Measured 155 ----------- | ---------------------|------|----------------- 156 TC2.2.2.1 | Send TCP traffic from 1 client pod IPs to 1 server pod is located behind respective unique ClusterIP (1:1) | iperf3 | Throughput in Gbits/s 157 TC2.2.2.2 | Send UDP traffic from 1 client pod IPs to 1 server pods is located behind respective unique ClusterIP (1:1) | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 158 TC2.2.2.3 | Send TCP traffic from 1 client pod IPs to NodePort svc IP. 1 server pod is located behind NodePort service (1:1) | iperf3 | Throughput in Gbits/s 159 TC2.2.2.4 | Send UDP traffic from 1 client pod IPs to NodePort svc IP. 1 server pod is located behind NodePort service (1:1) | iperf2 | Packets per second (pps), Loss Rate, Latency, jitter 160 161 162 ### Tools 163 164 We intend to use the following tools for testing : 165 166 1. iperf3: For TCP throughput measurement. 167 168 2. iperf2: For UDP latency, loss rate , pps measurement. 169 170 3. Siege: Siege is an open source benchmark utility. 171 It will be used for measuring HTTP/HTTPs metrics such as total number of hits recorded, 172 bytes transferred, response time, concurrency, and return status. 173 174 Other tools such as Trex will be used in future for further enhancements. 175 176 ### Resource Requirements 177 178 1. Worker nodes : We will need worker nodes (VMs) to run client and server pods. 179 As mentioned in test scenarios, we require 100 worker nodes to run the testcases. 180 181 2. Memory : Each worker node should have atleast 4 Gb of memory. 182 183 3. vCPUs : Each worker node should have atleast 2 vCPUs to run tools at full capacity. 184 We can pin the vCPUs to tools to run them efficienlty. If multiple client-server pods 185 are run on same worker node, then more then 2 vCPUs might be required. 186 187 ### Future Enhancements 188 189 Performance benchmarking frameworks can be enhanced to include testcases related to various CNIs. 190 191 ### Benefits 192 193 1) Our proposal attempts to standardize the test scenarios and cover most common performance metrics. 194 2) Detecting performance degradation in early phase of feature delivery. 195 3) With standard test scenarios available, kubernetes users will be able adopt these test frameworks 196 to validate their downstream offerings. 197 198 ## Design Details 199 200 201 Kubernetes uses the Prow framework for CI/CD and the new network performance related tests are also 202 planned to be added as part of prow jobs. 203 204 There were multiple test frameworks analysed for running the test cases like: k8s E2E tests 205 and sig-scalability’s Netperf and ClusterLoader2 frameworks. Since we are planning to have 206 the test cases under sig scalability where ClusterLoader2 is already running many test cases 207 as part of kubernetes CI/CD and since the framework is easy to extend for creating new tests 208 and test scenarios ,it is decided to use ClusterLoader2 for the implementation. These tests 209 needs a statistical distribution on a large number of pods to smooth out infrastructure dependencies 210 and cluster loader framework already run tests with similar requirements. 211 212  213 214 215 The below 3PP tools will be used for benchmarking network performance: 216 * Iperf2 217 * Iperf3 218 * Siege 219 220 These tools would be included as part of a container image and would be run as pods(as client pods 221 and server pods) for collecting measurements. 222 223 This proposal would be implemented using the measurement mechanism of the clusterloader framework. 224 225 ### NetworkPerformanceMetric measurement 226 227 228  229 230 Following design changes are being made for the test case implementation: 231 232 * New measurement is being created for measuring network performance metrics. 233 As part of start action of measurement, new CustomResourceDefinition(CRD) is applied on k8s cluster. 234 Based on the pod ratio specified(1:1 , N:M) in test yaml, so many worker pods are created. 235 Unique client-server worker pods are chosen and a corresponding CR object is created. 236 CR object contains attributes (protocol,duration,IPs) to execute the testcase. 237 238 Measurement watches for the updates of CR objects by the worker pods. Worker pods update the CR objects 239 with the status of testcase execution(either success or failure). 240 241 As part of gather action of measurement, metric values are aggregated and result summary is created. 242 243 * Worker pods watch for respective CR object creation by the measurement. 244 When CR is created, client worker pod starts sending traffic to respective server worker pod. 245 Based on the attributes in the CR (protocol,duration), specific performance metric measurements are 246 collected and updated back in the CR object. 247 248 249 ### Metrics visualization 250 251 The metrics collected would be displayed in the Perfdash dashboard by modifying the perfdash config.go to 252 include all the new metrics collected as part of the new test cases. Perfdash shows graphs containing data 253 points from multiple builds(along with historical data) ,this would help in analyzing variation of metrics 254 across builds and help identify issues wrt network performance in new builds. The metrics to be displayed 255 will be put into a file in the required file format and file name format in the artifacts directory so that 256 Perfdash picks up the metrics and displays in its dashboard. 257