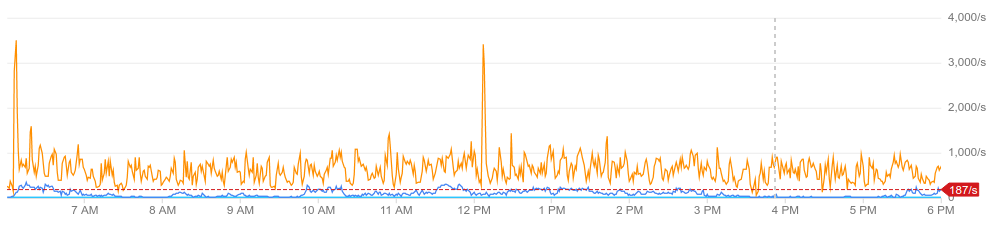

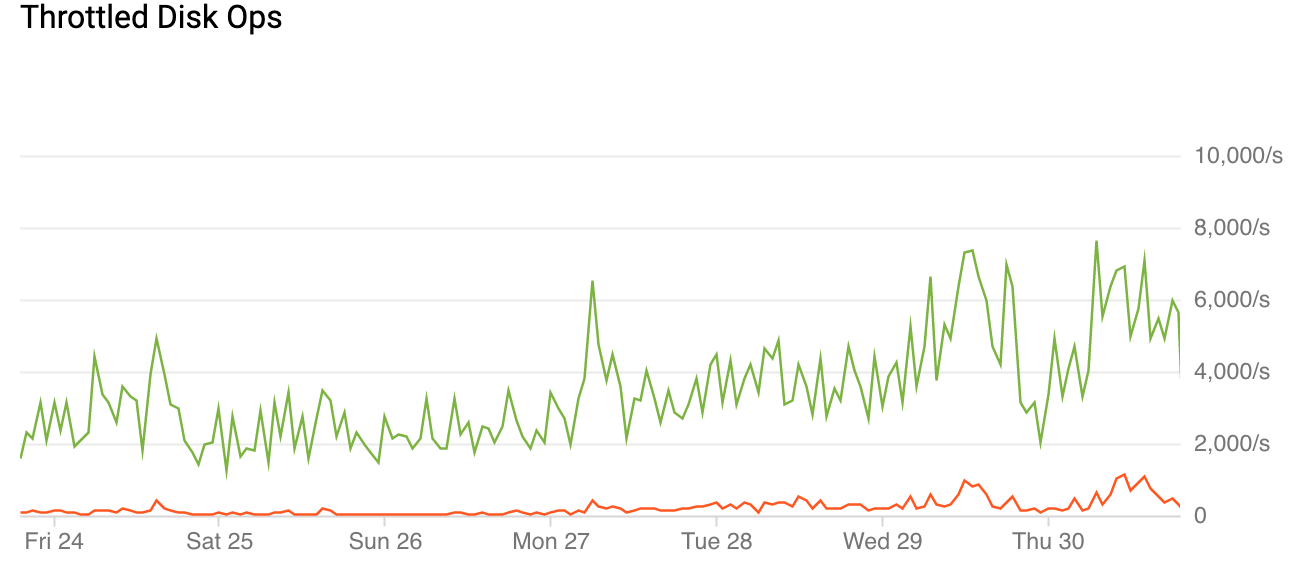

k8s.io/test-infra@v0.0.0-20240520184403-27c6b4c223d8/docs/post-mortems/2019-05-30.md (about) 1 # May 30th, 2019 Prow Flakiness 2 3 Created by: [Katharine Berry](https://github.com/Katharine) 4 5 All times PDT. 6 7 ## Summary 8 9 Increasing test load over time has lead to increased use of I/O resources by the k8s-prow-build 10 cluster. In the run-up to the Kubernetes 1.15 code freeze, a spike in usage lead to hitting hard 11 throttling limits, causing I/O operations to take so long that nodes experienced kernel panics 12 and test code wildly misbehaved. 13 14 ## Impact 15 16 Many tests were failing in surprising ways, or being aborted partway through execution for no clear 17 reason. Artifacts were frequently not uploaded, causing Spyglass and Testgrid to be unable to see 18 that the job had ever finished running. The problem lasted for at least seven hours. Due to PRs 19 failing to merge as expected, 1.15 code freeze slipped a day. 20 21 Due to an unrelated monitoring failure, it is difficult to tell exactly what the problem first 22 became substantial. At peak, 60 PRs were stuck in the merge queue, and no PRs at all had merged for many hours. 23 24 ## Root Cause 25 26 Prow jobs are run in a 100-node cluster. Each node has eight CPUs, 52 GB of RAM, and 250 GB of disk 27 space. On GCP, IOPS are limited proportionally to disk size. With our 250 GB disks, that gave us 28 187.5 IOPS per instance. While the majority of our nodes are not touching the disk at all most of 29 the time, nodes under certain workloads would spike up to large multiples of that: 30 31  32 33 The affected nodes suffered assorted failure modes, which ranged from pods failing, to kubelet 34 crashes, to kernel panics. While only a minority of nodes were affected at any given time, the 35 large number of pods that must successfully complete for a job to merge effectively brought us to 36 a standstill. 37 38 ## Detection 39 40 Multiple users reported jobs that were reported to GitHub as having failed, but which upon 41 checking the job status actually appeared to still be running. This behaviour is caused by pods 42 exiting without successfully uploading `finished.json`, which most of our tooling uses to 43 determine job state. 44 45 Due to an unrelated failure, most prow/tide monitoring for the week leading to this incident is 46 permanently lost, making it difficult to establish how long the cluster was faulty. 47 48 ## Resolution 49 50 We requested and received quota for additional disk space and migrated the cluster to 500 GB disks 51 instead of 250 GB disks, with a corresponding increase in available IOPS. The cluster became 52 healthier once the migration was completed. 53 54 ## Lessons Learned 55 56 ### What went well 57 58 Not much. 59 60 ### What went poorly 61 62 * Prow metrics were not being recorded in the runup to the incident, making it difficult to 63 figure out what was going on. 64 * Alerting for these failures either does not exist or does not work. 65 * Once the cause had been determined and a fix devised, nothing was done for another two hours. 66 * The incident caused 1.15 code freeze to slip 67 68 ### Where we got lucky 69 70 * Someone who realised that IOPS were the problem happened to be around to take a look. 71 72 ## Action items 73 74 * Create and/or fix monitoring so that when tide stops doing things the oncall gets paged 75 ([#12829](https://github.com/kubernetes/test-infra/issues/12829)) 76 * Establish a process and guidelines for adding new members to the oncall rotation so they are not 77 thrown in the deep end without any preparation or extra support. 78 79 ## Timeline 80 81 * [???] IOPS reached a critical point such that cluster availability began degrading 82 * [13:26] @cblecker reports on Slack that something is wrong with Prow 83 * [14:11] @Katharine determines that many things are wrong with the Kubernetes nodes and the test pods 84 * [14:17] @BenTheElder loops in @Random-Liu 85 * [14:43] @Random-Liu determines the root cause is severe I/O throttling 86 * [15:02] The current oncaller is given directions on increasing capacity and requests additional disk quota 87 * [15:14] Additional disk quota is granted 88 * [17:10] @Katharine realises that capacity was not increased and brings up 55 nodes with 500 GB disks 89 * [17:41] @BenTheElder begins gradually migrating nodes to the 500GB node pool 90 * [23:55] @BenTheElder completes the migration to the new node pool, all nodes now have 500 GB disks 91 * [07:00] Prow is once again merging at its usual rate 92 93 ## Appendinx 94 95 ### #testing-ops chat log 96 97 >cblecker [1:26 PM] 98 @test-infra-oncall there's something broken. I'm seeing failures being reported to GitHub, but Deck says jobs are still running. Seen this on a couple PRs now 99 > 100 >tony-yang [1:27 PM] 101 Thanks, will take a look 102 > 103 >tony-yang [1:50 PM] 104 @cblecker we don't see any significant red flag at the moment. Unfortunately, the job you provided is too old for us to obtain any useful information. It seems that there might be a transient issue with the job that uploads the finish state to Prow. 105 If you have a more recent job that ended in similar state (within the last 15 min), we can take a look 106 Thanks 107 > 108 >cblecker [1:55 PM] 109 @tony-yang sounds good. if I see it again, will let you know 110 > 111 >Katharine [1:57 PM] 112 I can see a big burst of failures 1-3 hours ago 113 Can't find anything more recent 114 ooh I found one I think 115 > ``` 116 > State: Terminated 117 > Exit Code: 0 118 > Started: Mon, 01 Jan 0001 00:00:00 +0000 119 > Finished: Mon, 01 Jan 0001 00:00:00 +0000 120 > ``` 121 >what even is metadata (edited) 122 > 123 >cblecker [2:07 PM] 124 haha.. well that explains why Deck is all ¯\\\_(ツ)\_/¯ 125 > 126 >Katharine [2:11 PM] 127 upon further investigation, there are a bunch of pods and nodes in very questionable states 128 Kubernetes is happening 129 And it looks like pods are being evicted by something that is not us 130 > 131 >cblecker [2:12 PM] 132 oh that's always fun 133 > 134 >Katharine [2:13 PM] 135 Also not super convinced that this one job pod I see that it has tried to kill 2,420 times over the last four days is doing what it's supposed to. 136 > 137 >Katharine [2:46 PM] 138 everything is on fiiiiire 139 :fire: 140 (we've managed to exhaust our IOPS quota... by a factor of two.) 141 stuff should probably still be mostly working actually. 142 but flakes abound 143 > 144 >tony-yang [2:47 PM] 145 We noticed that we have used 2x disk IO, which caused the slow down we were experiencing earlier. We are actively working to on an upgrade plan and will notify the community when we will start the maintenance. Thanks 146 > 147 >cblecker [3:34 PM] 148 Thank you both :pray: 149 > 150 >alejandrox1 [4:04 PM] 151 hi all, is tide working? 152 i’ve seen a couple prs that have been lgtm’d, approved, and all tests passed (even part of the v1.15 milestone) and they haven’t been merged in 12+ hrs 153 > 154 >liggitt [4:05 PM] 155 Last merge was 5 hours ago. https://github.com/kubernetes/kubernetes/commits/master 156 The queue is long 157 > 158 >liggitt [4:05 PM] 159 https://prow.k8s.io/tide 160 > 161 >liggitt [4:05 PM] 162 and CI has been very red, which means it drains slowly 163 > 164 >cjwagner [4:09 PM] 165 Yeah AFAICT Tide is working properly, the problem is that the nodes are on fire. See the batch jobs: https://prow.k8s.io/?repo=kubernetes%2Fkubernetes&type=batch 166 > 167 >cblecker [5:07 PM] 168 @tony-yang @Katharine can I get an update on the health of things? It looks still very unhealthy from the outside 169 > 170 >Katharine [5:07 PM] 171 things are worse, tony disappeared somewhere, I'm working on it now 172 > 173 >cblecker [5:08 PM] 174 okay thank you. good luck. let me know if there is anything help a non-googler can provide 175 > 176 >Katharine [5:10 PM] 177 I'm bringing up 80 nodes with more disk space, which is what I can pull off given our quota headroom. hopefully this alleviates our current issue 178 > 179 >cjwagner [5:13 PM] 180 Anything I can do to help Katharine? Is bumping disk capacity our only mitigation? 181 > 182 >Katharine [5:14 PM] 183 It looks like most/all of our problems basically come back to I/O calls variously failing or being slow enough to look like they failed 184 more disk capacity and, really, the associated I/O capacity should bring us back below GCP aggressively throttling us 185 (Additionally we'll have almost twice as many nodes until we clean up, so that probably also helps) 186 > 187 >cjwagner [5:15 PM] 188 We could set a global `max_concurrency` for plank to throttle the overall job load on the cluster. 189 > 190 >Katharine [5:17 PM] 191 and by 80 I mean 55 because I forgot what the original quota request we made was for 192 anyway, 55 now, then I'll start shifting over capacity from the existing nodepool until we get back up to 100 193 (so we'll have 155 nodes in the interim) 194 new nodes appear to be up 195 > 196 >cjwagner [5:19 PM] 197 Cool. Lets hold off on a global throttle then and see if the additional nodes are sufficient. 198 Oh, sweet. That was fast. (edited) 199 > 200 >liggitt [5:20 PM] 201 sometimes the cloud is cool 202 > 203 >cblecker [5:20 PM] 204 don't lie. computers are awful. 205 > 206 >Katharine [5:21 PM] 207 jobs are now being scheduled to them 208 > 209 >liggitt [5:21 PM] 210 "I do exactly what you tell me to. You told me to burn my CPUs into a slag heap repeatedly running CI jobs. Yes sir!" 211 > 212 >Katharine [5:21 PM] 213 I'll be back shortly 214 for now, working theory is that the extra 55 nodes should reduce pressure such that everything returns to normal shortly without needing to actually migrate capacity to the new nodepool so we'll probably just leave it like this for a bit unless behaviour fails to improve 215 > 216 >tony-yang [5:26 PM] 217 Thanks Katharine for helping out 218 > 219 >liggitt [5:28 PM] 220 >>liggitt [5:26 PM] 221 shoutout to the #testing-ops folks who cheerfully keep our *insane* CI load moving forward during code freeze crunches 222 Posted in #shoutouts 223 > 224 >bentheelder [5:39 PM] 225 joined #testing-ops. 226 > 227 >bentheelder [5:41 PM] 228 I'm migrating some capacity from the smaller-disk node pool to the bigger one. (draining the old nodes -> increasing the new pool), will be a lot slower but we'll start to standardize on the bigger disks. 229 > 230 >Thankfully most of the time we're already doing _okay_ on I/O for the lower capacity, but not with consistently comfortable enough overhead... 231 This _should_ resolve a number of intermittent unexplained issues. Thanks @random-liu for root-causing this :slightly_smiling_face: 232 > 233 >Katharine [7:04 PM] 234  235 > 236 >it looks like this did get a lot worse over the last day or two. 237 (I like how that doesn't have a key. green is throttled writes, red is throttled reads.) 238 > 239 >liggitt [7:14 PM] 240 is most of that on the scale jobs? 241 or do we have any way to slice by job 242 > 243 >Katharine [8:08 PM] 244 @liggitt I don't think we have any easy way to slice that by job - our best is probably nodes and times (edited) 245 > 246 >cblecker [8:09 PM] 247 The 5k node job is still pretty unhealthy: https://prow.k8s.io/?repo=kubernetes%2Fkubernetes&job=pull-kubernetes-kubemark-e2e-gce-big 248 > 249 >Katharine [8:10 PM] 250 That is should only be counting the build cluster though, so unless the scale jobs are doing consistently high writes in that build for hours, it seems unlikely to me that it's the sole cause. 251 Hm 252 > 253 >cblecker [8:11 PM] 254 the ones that are showing as "pending" don't appear to be running if you look at some the logs 255 and I think it's hit max concurrency as all the newer jobs are just sitting in triggers/aborted 256 > 257 >Katharine [8:14 PM] 258 max_concurrency on that job is 12 259 there are 12 running. 260 so it's not sad, just behaving as expected 261 the oldest running one started an hour ago and it takes 1-3 hours (edited) 262 so none of this seems unusual 263 at the earliest the current backlog on that job cannot be cleared for another probably six or seven hours 264 > 265 >cblecker [8:17 PM] 266 hmm.. I must have clicked the wrong link or something because I swear I saw logs that show it finished but was still in a pending state.. but I don't see it now (things look just as you say) 267 thanks for checking 268 > 269 >bentheelder [11:55 PM] 270 done migrating. we've standardized on the bigger disks now 271 272 ### GKE EngProd Chat Log 273 274 >Benjamin Elder [2:17 PM] 275 >FYI poking node team about k8s-prow-builds issues, we have some unhappy nodes in the containerd pool. Looks like kubelet <-> CRI RPC issues (?) 276 @Katharine Berry is also looking, we have pods that aren't being killed successfully and lots of testgrid entries with partial uploads. Getting spurious testgrid flake alerts due to jobs not uploading. 277 > 278 >Benjamin Elder [2:22 PM] 279 >Lantao is looking at these two: 280 >``` 281 >gke-prow-containerd-pool-99179761-02q6 NotReady <none> 14d v1.13.5-gke.10 282 >gke-prow-containerd-pool-99179761-313j NotReady <none> 14d v1.13.5-gke.10 283 >``` 284 >sounds like there's some kind of deadlock deleting pods sometimes 285 @Tony Yang 286 > 287 >Cole Wagner [2:37 PM] 288 The set of nodes in the NotReady state is changing. 289 >``` 290 >$ kg nodes | grep -v "\bReady\b" 291 >NAME STATUS ROLES AGE VERSION 292 >gke-prow-containerd-pool-99179761-02q6 NotReady <none> 14d v1.13.5-gke.10 293 >gke-prow-containerd-pool-99179761-4377 NotReady <none> 14d v1.13.5-gke.10 294 >gke-prow-containerd-pool-99179761-t05g NotReady <none> 13d v1.13.5-gke.10 295 >$ kg nodes | grep -v "\bReady\b" 296 >Cluster: gke_k8s-prow-builds_us-central1-f_prow 297 >NAME STATUS ROLES AGE VERSION 298 >gke-prow-containerd-pool-99179761-02q6 NotReady <none> 14d v1.13.5-gke.10 299 >gke-prow-containerd-pool-99179761-s4k7 NotReady <none> 162d v1.13.5-gke.10 300 >``` 301 > 302 >Benjamin Elder [2:37 PM] 303 yeah, talking to lantao offfline, TLDR: 304 >- we're seeing kernel panics (yay!) 305 >- we're getting absurd I/O throttling because we do too much disk I/O and GCE is throttling us (glares at bazel) 306 >- probably we can increase the disk sizes to avoid this 307 > 308 >Benjamin Elder [2:43 PM] 309 Estimated performance 310 Operation type Read Write 311 Sustained random IOPS limit 187.50 375.00 312 Sustained throughput limit (MB/s) 30.00 30.00 313 whereas we're actually hitting 80MB/s and > 450 IOPS when we're getting throttled 314 > 315 >Katharine Berry [2:47 PM] 316 other weirdness: pods that have been in the PodInitializing state for half an hour, kubelets that are in reboot loops 317 > 318 >Benjamin Elder [3:02 PM] 319 @Tony Yang https://github.com/kubernetes/test-infra/blob/master/experiment/maintenance/shift_nodepool_capacity.py 320 > 321 >Sen Lu [3:10 PM] 322 sounds like this gonna be one of those very exciting oncall rotation 323 > 324 >Benjamin Elder [3:11 PM] 325 probably not, this issue has been going on for a long time 326 this is what was previously written off as COS / containerd issues but is actually us using ridiculously high amounts of I/O vs our capacity. 327 it sounds like some of the other prows have this issue too 328 > 329 >Sen Lu [3:14 PM] 330 yes we've been making larger nodes for another prow as well 331 didn't know it's hitting k8s-prow as well 332 > 333 >Benjamin Elder [9:03 PM] 334 Katharine span up 55 nodes in a pool with 2x (500GB) disks, I'm waiting on the migrate script to move over the rest of the capacity still, we've crossed the majority of nodes being the 500GB type. 335 more in #testing-ops on slack 336 > 337 >Katharine Berry [9:03 PM] 338 Looking at prow, it looks good at a glance 339 We should probably work on a postmortem tomorrow 340 Especially given this caused code freeze to slip 341 > 342 >Benjamin Elder [11:55 PM] 343 migrating capacity is done