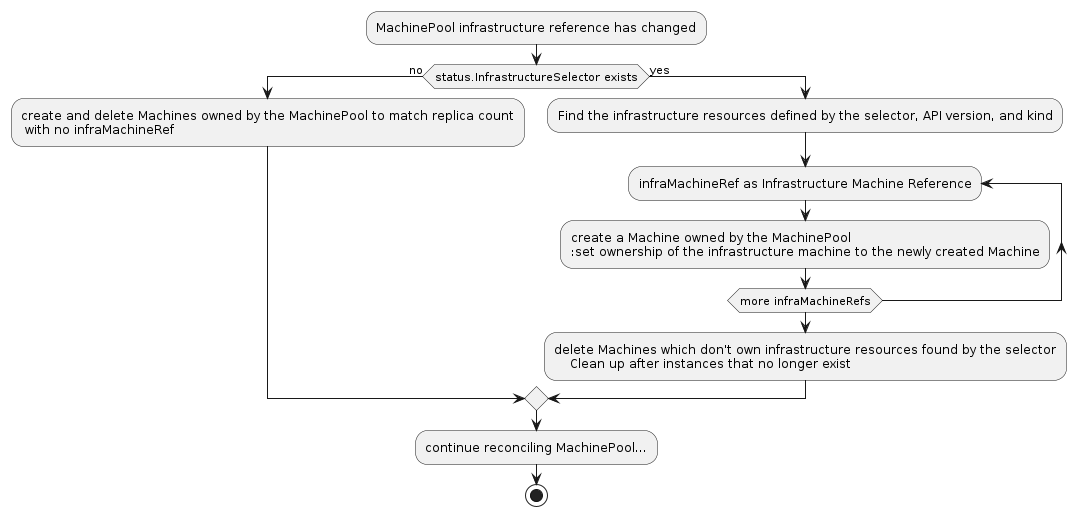

sigs.k8s.io/cluster-api@v1.6.3/docs/proposals/20220209-machinepool-machines.md (about) 1 --- 2 title: MachinePool Machines 3 authors: 4 - "@devigned" 5 - "@jont828" 6 - "@mboersma" 7 reviewers: 8 - "@CecileRobertMichon" 9 - "@enxebre" 10 - "@fabriziopandini" 11 - "@richardcase" 12 - "@sbueringer" 13 - "@shyamradhakrishnan" 14 - "@vincepri" 15 creation-date: 2022-02-09 16 last-updated: 2022-05-18 17 status: implementable 18 --- 19 20 # MachinePool Machines 21 22 ## Table of Contents 23 24 <!-- START doctoc generated TOC please keep comment here to allow auto update --> 25 <!-- DON'T EDIT THIS SECTION, INSTEAD RE-RUN doctoc TO UPDATE --> 26 27 - [Glossary](#glossary) 28 - [Summary](#summary) 29 - [Motivation](#motivation) 30 - [Goals](#goals) 31 - [Non-Goals/Future Work](#non-goalsfuture-work) 32 - [Proposal](#proposal) 33 - [User Stories](#user-stories) 34 - [Story U1](#story-u1) 35 - [Story U2](#story-u2) 36 - [Story U3](#story-u3) 37 - [Requirements](#requirements) 38 - [Implementation Details/Notes/Constraints](#implementation-detailsnotesconstraints) 39 - [Risks and Mitigations](#risks-and-mitigations) 40 - [Alternatives](#alternatives) 41 - [Upgrade Strategy](#upgrade-strategy) 42 - [Additional Details](#additional-details) 43 - [clusterctl client](#clusterctl-client) 44 - [Graduation Criteria](#graduation-criteria) 45 - [Implementation History](#implementation-history) 46 47 <!-- END doctoc generated TOC please keep comment here to allow auto update --> 48 49 ## Glossary 50 51 * **Machine**: a declarative specification for an infrastructure component that hosts a Kubernetes Node. Commonly, a Machine represents a VM instance. 52 * **MachinePool**: a spec for an infrastructure component that can scale a group of Machines. A MachinePool acts similarly to a [MachineDeployment][], but leverages scaling up resources that are specific to an infrastructure provider. However, while individual Machines in a MachineDeployment are visible and can be removed, individual Machines in a MachinePool are not represented. 53 * **MachinePool Machine**: a Machine that is owned by a MachinePool. It requires no special treatment in Cluster API in general; a Machine is a Machine without regard to ownership. 54 55 See also the [Cluster API Book Glossary][]. 56 57 ## Summary 58 59 MachinePools should be enhanced to own Machines that represent each of its `Replicas`. 60 61 These "MachinePool Machines" will open up the following opportunities: 62 63 - Share common behavior for Machines in MachinePools, which otherwise would need to be implemented by each infrastructure provider. 64 - Allow both Cluster API-driven and externally driven interactions with individual Machines in a MachinePool. 65 - Enable MachinePools to use deployment strategies similarly to MachineDeployments. 66 67 ## Motivation 68 69 MachineDeployments and Machines provide solutions for common scenarios such as deployment strategy, machine health checks, and retired node deletion. Exposing MachinePool Machines at the CAPI level will enable similar integrations with external projects. 70 71 For example, Cluster Autoscaler needs to delete individual Machines. Without a representation in the CAPI API of individual Machines in MachinePools, projects building integrations with Cluster API would need to be specialized to delete the cloud-specific resources for each MachinePool infrastructure provider. By exposing MachinePool Machines in CAPI, Cluster Autoscaler can treat Machines built by MachineDeployments and MachinePools in a uniform fashion through CAPI APIs. 72 73 As another example, currently each MachinePool infrastructure provider must implement their own node drain functionality. When MachinePool Machines become available in the CAPI API, all providers can use its existing node drain implementation. 74 75 ### Goals 76 77 - Enable CAPI MachinePool to create Machines to represent infrastructure provider MachinePool Machines 78 - Ensure that Machines in MachinePools can participate in the same behaviors as MachineDeployment Machines (node drain, machine health checks, retired node deletion), although note that some implementations will not support node deletion. 79 - Enhance `clusterctl describe` to display MachinePool Machines 80 81 ### Non-Goals/Future Work 82 83 - Implement Cluster Autoscaler MachinePool integration (a separate task, although we have a working POC) 84 - Allow CAPI MachinePool to include deployment strategies mirroring those of MachineDeployments 85 - Move the MachinePools implementation into a `exp/machinepools` package. The current code location makes its separation from `exp/addons` unclear and this would be an improvement. 86 - Improve bootstrap token handling for MachinePools. It would be preferable to have a single token for each Machine instead of the current shared token. 87 88 ## Proposal 89 90 To create MachinePool Machines, a MachinePool in CAPI needs information about the instances or replicas associated with the provider's implementation of the MachinePool. This information is attached to the provider's MachinePool infrastructure resource in new status fields `InfrastructureMachineSelector` and `InfrastructureMachineKind`. These fields should be populated by the infrastructure provider. 91 92 ```golang 93 // FooMachinePoolStatus defines the observed state of FooMachinePool. 94 type FooMachinePoolStatus struct { 95 // InfrastructureMachineSelector is a label query over the infrastructure resources behind MachinePool Machines. 96 // More info: https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#label-selectors 97 // +optional 98 InfrastructureMachineSelector metav1.LabelSelector `json:"infrastructureMachineSelector,omitempty"` 99 // InfrastructureMachineKind is the kind of the infrastructure resources behind MachinePool Machines. 100 // +optional 101 InfrastructureMachineKind string `json:"infrastructureMachineKind,omitempty"` 102 } 103 ``` 104 105 These fields are an addition to the optional status fields of `InfrastructureMachinePool` in the [provider contract][]. 106 107 If the fields are populated, CAPI's MachinePool controller will query for the provider-specific infrastructure resources. That query uses the Selector and Kind fields with the API version of the \<Provider\>MachinePool, which is assumed to match the API version of the infrastructure resources. 108 109 Once found, CAPI will create and connect MachinePool Machines to each resource. A MachinePool Machine is implemented as a Cluster API Machine that is owned by a MachinePool, with its BootstrapRef omitted. CAPI's MachinePool controller will loop through the \<Provider\>MachinePoolMachines found by the Selector and if needed, create a Machine and set its infrastructure reference to the \<Provider\>MachinePoolMachine, while setting the \<Provider\>MachinePoolMachine's owner reference to the newly created Machine. 110 111 If the field is empty, "CAPI-only" MachinePool Machines will be created. They will contain only basic information and exist to make a more consistent user experience across all MachinePools and MachineDeployments. These machines will not be connected to any infrastructure resources and a user will be prevented from deleting them. CAPI's MachinePool controller will reconcile their count with its replica count. 112 113 It is the responsibility of each provider to populate `InfrastructureMachineSelector` and `InfrastructureMachineKind`, and to create provider-specific MachinePool Machine resources behind each Machine. For example, the Docker provider may reuse the existing DockerMachine resource to represent the container instance behind the Machine in the infrastructure provider's MachinePool. It will also ensure that the DockerMachine is labeled such that the `InfrastructureMachineSelector` can be used to find it. 114 115  116 117 When a MachinePool Machine is deleted manually, the system will delete the corresponding provider-specific resource. The opposite is also true: when a provider-specific resource is deleted, the system will delete the corresponding MachinePool Machine. This happens by virtue of the infrastructureRef <-> ownerRef relationship. 118 119 In both cases, the MachinePool will notice the missing replica and create a new one in order to maintain the desired number of replicas. To scale down by removing a specific instance, that Machine should be given the "cluster.x-k8s.io/delete-machine" annotation and then the replicaCount on the MachinePool should be decremented. 120 121 ### User Stories 122 123 -------------------------------------------- 124 | ID | Story | 125 |----|-------------------------------------| 126 | U1 | Cluster Autoscaler | 127 | U2 | MachinePool Machine Remediation | 128 | U3 | MachinePool Machine Rolling Updates | 129 -------------------------------------------- 130 131 #### Story U1 132 133 A cluster admin is tasked with using Cluster Autoscaler to scale a MachinePool as required by machine load. The MachinePool should provide a mechanism for Cluster Autoscaler to increase replica count, and provide a mechanism for Cluster Autoscaler to reduce replica count by selecting an optimal machine for deletion. 134 135 #### Story U2 136 137 A cluster admin would like to configure CAPI to remediate unhealthy MachinePool Machines to ensure a healthy fleet of Machines. 138 139 #### Story U3 140 141 A cluster admin updates a MachinePool to a newer Kubernetes version and would like to configure the strategy for that deployment so that the MachinePool will progressively roll out the new version of the machines. They would like this operation to cordon and drain each node to minimize workload disruptions. 142 143 ### Requirements 144 145 -------------------------------------- 146 | ID | Requirement | Related Stories | 147 |----|-------------|-----------------| 148 | R1 | The MachinePool controller MUST create Machines representing the provider-specific resources in the MachinePool and enable cascading delete of infrastructure<br>machine resources upon delete of CAPI Machine. | U1 | 149 | R2 | The machine health check controller MUST be able to select machines by label which belong to a MachinePool and remediate. | U2 | 150 | R3 | The MachinePool API MUST provide an optional deployment strategy using the same type as MachineDeployment.Spec.Strategy. | U3 | 151 | R4 | The Machine Controller MUST handle node drain for Machine Pool Machines with the same behavior as MachineDeployment Machines. | U1, U3 | 152 153 ### Implementation Details/Notes/Constraints 154 155 - As an alternative, the `InfrastructureMachineSelector` field could be attached to the CAPI MachinePool resource. Then the CAPI MachinePool controller could check it directly and it would be more clearly documented in code. Feedback seemed to prefer not changing the MachinePool API and putting it in the provider contract instead. 156 157 - Some existing MachinePool implementations cannot support deletion of individual replicas / instances. Specifically, AWSManagedMachinePool and AKSManagedMachinePool can only scale the number of replicas. 158 159 To provide a more consistent UX, "CAPI-only" MachinePool Machines will be implemented for this case. This will allow a basic representation of each MachinePool Machine, but will not allow their deletion. 160 161 ### Risks and Mitigations 162 163 - A previous version of this proposal suggested using a pre-populated `infrastructureRefList` instead of the `InfrastructureMachineSelector` and related fields. Effectively, this means the query "what provider resources exist for this MachinePool's instances?" would be pre-populated, rather than run as needed. This approach would not scale well to MachinePools with hundreds or thousands of instances, since that list becomes part of the representation of that MachinePool, possibly causing storage or network transfer issues. 164 165 It was pointed out that the existing `providerIDList` suffers the same scalability issue as an `infrastructureRefList` would. It would be nice to refactor it away, but doing so is not considered a goal of this proposal effort. 166 167 - The logic to convey the intent of replicas in MachinePool (and MachineDeployment) is currently provider-agnostic and driven by the core controllers. The approach in this proposal inverts that, delegating the responsibility to the provider first to create the infrastructure resources, and then having the CAPI core controllers sync up with those resources by creating and deleting Machines. 168 169 One goal of creating these resources first in the provider code is to allow responsiveness to a MachinePool's native resource. A provider's MachinePool controller can keep the infrastructure representation of the actual scalable resource more current, perhaps responding to native platform events. 170 171 There is a potential that this approach creates a bad representation of intent with regard to MachinePool Machines depending on the provider's implementation. We assume that the number of implementors is small, and that the providers are well-known. A "reference implementation" of MachinePool Machines for Docker should guide providers towards reliable code. 172 173 ## Alternatives 174 175 We could consider refactoring MachineDeployment/MachineSet to support the option of using cloud infrastructure-native scaling group resources. That is, merge MachinePool into MachineDeployment. MachinePool and MachineDeployment do overlap significantly, and this proposal aims to eliminate one of their major differences: that individual Machines aren’t represented in a MachinePool. 176 177 While it is valuable that MachineDeployments are provider-agnostic, MachinePools take advantage of scalable resources unique to an infra provider, which may have advantages in speed or reliability. MachinePools allow an infrastructure provider to decide which native features to use, while still conforming to some basic common behaviors. 178 179 To merge MachinePool behavior — along with the changes proposed here — into MachineDeployment would effectively deprecate the experimental MachinePool API and would increase the overall scope of work significantly, while increasing challenges to maintaining backward compatibility in the stable API. 180 181 ## Upgrade Strategy 182 183 MachinePool Machines will be a backward-compatible feature. Existing infrastructure providers can make no changes and will observe the same behavior with MachinePools they always have. 184 185 ## Additional Details 186 187 ### clusterctl client 188 189 The clusterctl client will be updated to discover and list MachinePool Machines. 190 191 ### Graduation Criteria 192 193 This feature is linked to experimental MachinePools, and therefore awaits its graduation. 194 195 ## Implementation History 196 197 - [x] 01/11/2021: Proposed idea in a [GitHub issue](https://github.com/kubernetes-sigs/cluster-api/issues/4063) 198 - [x] 01/11/2021: Proposed idea at a [community meeting](https://youtu.be/Un_KXV4be-E) 199 - [x] 06/23/2021: Compile a [Google Doc](https://docs.google.com/document/d/1y40ayUDX9myNPHvotnlWCvysDb81BhU_MQ7G9_yqK0A/edit?usp=sharing) following the CAEP template 200 - [x] 08/01/2021: First round of feedback from community 201 - [x] 10/06/2021: Present proposal at a [community meeting](https://www.youtube.com/watch?v=fCHx2iRWMLM) 202 - [x] 02/09/2022: Open proposal PR 203 - [x] 03/09/2022: Update proposal to be required implementation after six months, other edits 204 - [x] 03/20/2022: Use selector-based approach and remove six-month implementation deadline 205 - [x] 04/05/2022: Update proposal to address all outstanding feedback 206 - [x] 04/20/2022: Update proposal to address newer feedback 207 - [x] 04/29/2022: Zoom meeting to answer questions and collect feedback 208 - [x] 05/03/2022: Update proposal to address newer feedback 209 - [x] 05/11/2022: Lazy consensus started 210 - [x] 05/18/2022: Update proposal to address newer feedback 211 212 <!-- Links --> 213 [Cluster API Book Glossary]: https://cluster-api.sigs.k8s.io/reference/glossary.html 214 [MachineDeployment]: https://cluster-api.sigs.k8s.io/user/concepts.html#machinedeployment 215 [provider contract]: https://cluster-api.sigs.k8s.io/developer/architecture/controllers/machine-pool.html#infrastructure-provider