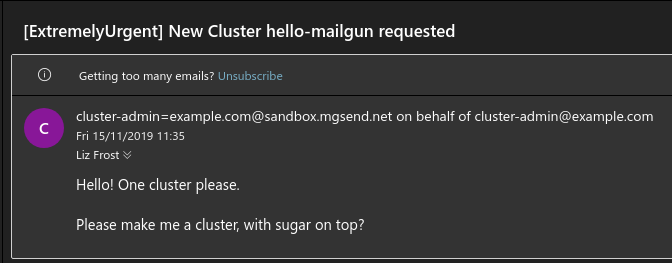

# Building, Running, Testing

## Docker Image Name

The patch in `config/manager/manager_image_patch.yaml` will be applied to the manager pod.
It currently contains a placeholder, `IMAGE_URL`, which you will need to replace with your actual image.

### Development Images

You will likely want one image location and tag for releases and another for development.

The approach most Cluster API projects use is [a `Makefile` that uses `sed` to replace the image URL][sed] on demand during development.

[sed]: https://github.com/kubernetes-sigs/cluster-api/blob/e0fb83a839b2755b14fbefbe6f93db9a58c76952/Makefile#L201-L204

## Deployment

### cert-manager

Cluster API uses [cert-manager] to manage the certificates it needs for its webhooks.
Before you apply Cluster API's YAML, you should [install `cert-manager`][cm-install], replacing `<version>` below with the cert-manager release you want:

[cert-manager]: https://github.com/cert-manager/cert-manager
[cm-install]: https://cert-manager.io/docs/installation/

```bash
kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/<version>/cert-manager.yaml
```

### Cluster API

Before you can deploy the infrastructure controller, you'll need to deploy Cluster API itself to the management cluster.
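Cluster API's webhooks depend on certificates issued by cert-manager, so applying Cluster API before cert-manager is ready may fail. One way to avoid this is to wait for cert-manager's Deployments first. The following is a minimal sketch. It assumes cert-manager was installed into its default `cert-manager` namespace. The function name `wait_for_cert_manager` and the 300-second default timeout are illustrative choices, not part of Cluster API's tooling.

```bash
# Hypothetical helper (not part of Cluster API): block until every Deployment
# in the given namespace reports the Available condition, or fail once the
# timeout expires.
# Usage: wait_for_cert_manager [namespace] [timeout]
wait_for_cert_manager() {
  local namespace="${1:-cert-manager}"
  local timeout="${2:-300s}"
  kubectl wait --for=condition=Available deployment --all \
    --namespace "$namespace" --timeout="$timeout"
}
```

For example, call `wait_for_cert_manager` after the cert-manager `kubectl apply` and before applying Cluster API. `kubectl wait` exits with a non-zero status if the condition is not met within the timeout, so the call can be chained with `&&` in front of the Cluster API install.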

You can use a precompiled manifest from the [release page][releases], run `clusterctl init`, or clone [`cluster-api`][capi] and apply its manifests using `kustomize`:

```bash
cd cluster-api
make envsubst
kustomize build config/default | ./hack/tools/bin/envsubst | kubectl apply -f -
```

Check the status of the manager to make sure it's running properly:

```bash
kubectl describe -n capi-system pod | grep -A 5 Conditions
```

```text
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
```

[capi]: https://github.com/kubernetes-sigs/cluster-api
[releases]: https://github.com/kubernetes-sigs/cluster-api/releases

### Your provider

In this guide, we are building an _infrastructure provider_. We must tell Cluster API and its developer tooling which type of provider it is. Edit `config/default/kustomization.yaml` and add the following common label. The prefix `infrastructure-` [is used][label_prefix] to detect the provider type.

```yaml
commonLabels:
  cluster.x-k8s.io/provider: infrastructure-mailgun
```

Now you can apply your provider as well:

```bash
cd cluster-api-provider-mailgun

# Install the CRDs and the controller into the current kubectl context
make install deploy

kubectl describe -n cluster-api-provider-mailgun-system pod | grep -A 5 Conditions
```

```text
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
```

[label_prefix]: https://github.com/kubernetes-sigs/cluster-api/search?q=%22infrastructure-%22

### Tiltfile

Cluster API development requires a lot of iteration, and the "build, tag, push, update deployment" workflow can be very tedious.
[Tilt](https://tilt.dev) makes this process much simpler by watching for updates, then automatically building and deploying them.

See [Developing Cluster API with Tilt](../../tilt.md) for full details on how to develop both Cluster API and your provider at the same time. In short, you need to perform these steps for a basic Tilt-based development environment:

- Create a file `tilt-provider.yaml` in your provider directory:

  ```yaml
  name: mailgun
  config:
    image: controller:latest # change to remote image name if desired
    label: CAPM
    live_reload_deps: ["main.go", "go.mod", "go.sum", "api", "controllers", "pkg"]
  ```

- Create a file `tilt-settings.yaml` in the cluster-api directory:

  ```yaml
  default_registry: "" # change if you use a remote image registry
  provider_repos:
    # This refers to your provider directory and loads settings
    # from `tilt-provider.yaml`
    - ../cluster-api-provider-mailgun
  enable_providers:
    - mailgun
  ```

- Create a kind cluster. By default, the Tiltfile assumes the kind cluster is named `capi-test`.

  ```bash
  kind create cluster --name capi-test

  # If you want a more sophisticated setup of kind cluster + image registry, try:
  # ---
  # cd cluster-api
  # hack/kind-install-for-capd.sh
  ```

- Run `tilt up` in the cluster-api folder.

You can then use Tilt to watch the container logs.

Whenever a file changes in one of the watched locations (those listed in `live_reload_deps`, plus those watched inside the cluster-api repository), Tilt builds and deploys again. Usually only your controller's binary is rebuilt, copied into the running container, and the process restarted. This is much faster than fully rebuilding and redeploying a Docker image and restarting the Kubernetes pod.

It is best to watch the Kubernetes pods with something like `k9s -A` or `watch kubectl get pod -A`. This matters particularly if your provider implementation crashes: Tilt has no chance to deploy code changes into a container that may be crash-looping indefinitely.
In such a case, which you will notice in the log output, terminate Tilt (press Ctrl+C) and start it again to deploy the Docker image from scratch.

## Your first Cluster

Let's try our cluster out. We'll make some simple YAML:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: hello-mailgun
spec:
  clusterNetwork:
    pods:
      cidrBlocks: ["192.168.0.0/16"]
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha1
    kind: MailgunCluster
    name: hello-mailgun
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha1
kind: MailgunCluster
metadata:
  name: hello-mailgun
spec:
  priority: "ExtremelyUrgent"
  request: "Please make me a cluster, with sugar on top?"
  requester: "cluster-admin@example.com"
```

We apply it as usual with `kubectl apply -f <filename>.yaml`.

If all goes well, you should receive an email at the address you configured when you set up your management cluster.

## Conclusion

Obviously, this is only the first step.
We still need to implement our Machine object, log events, handle updates, and much more.

Hopefully you feel empowered to go out and create your own provider now.
The world is your Kubernetes-based oyster!