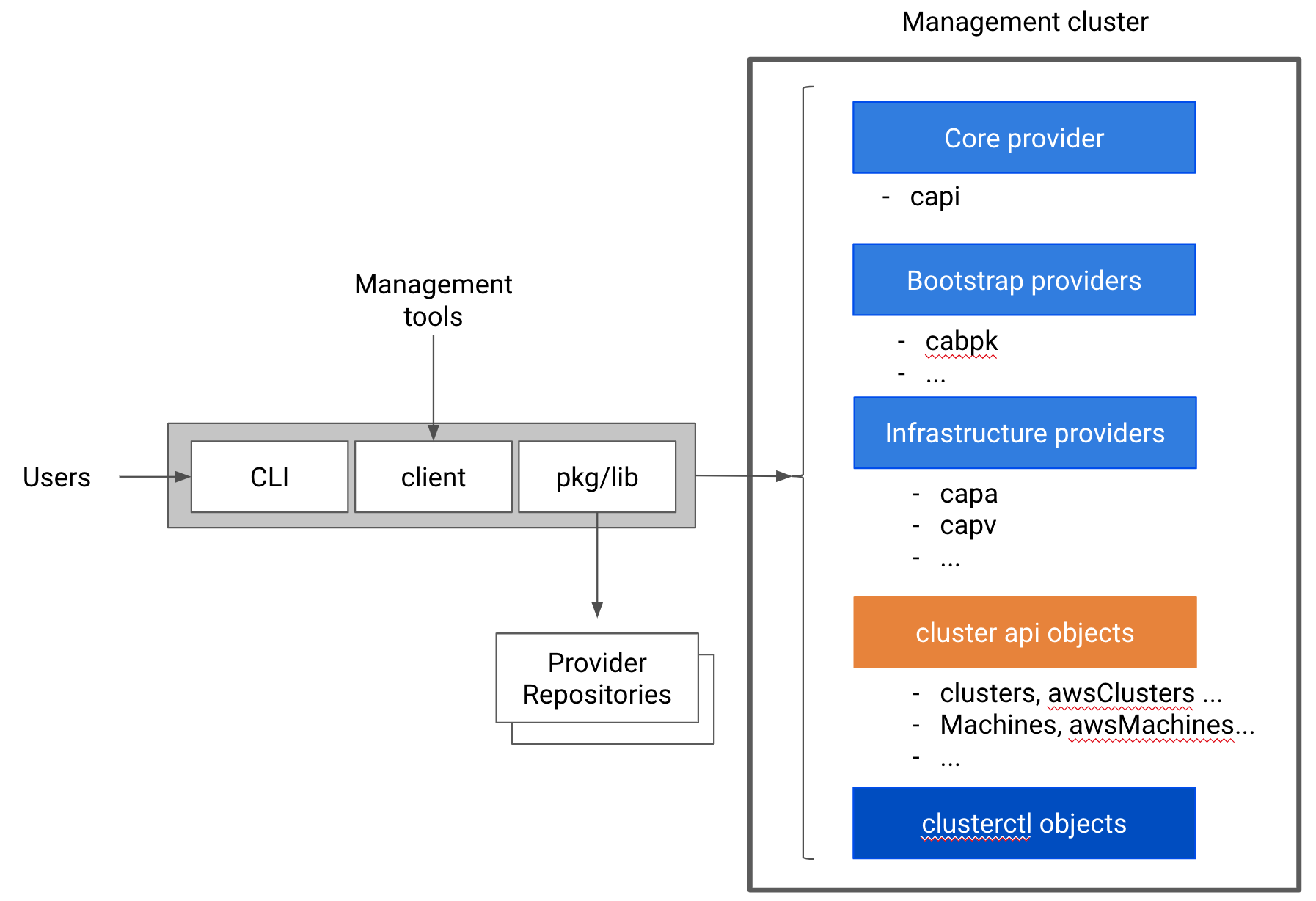

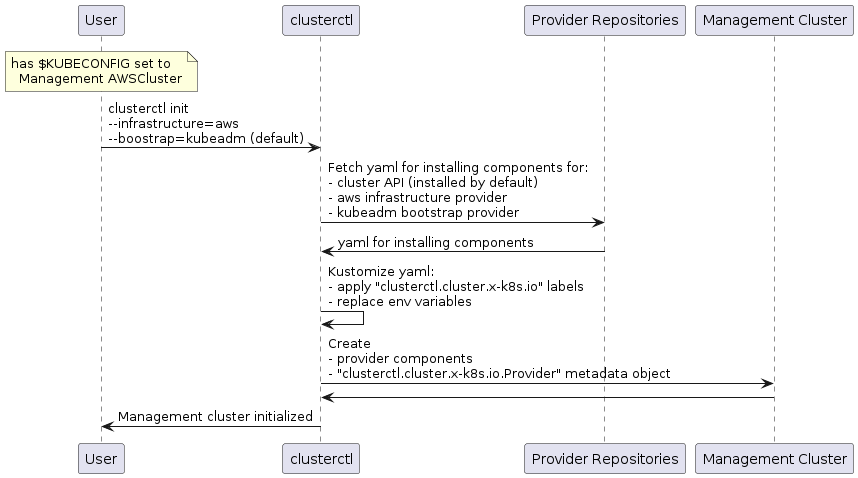

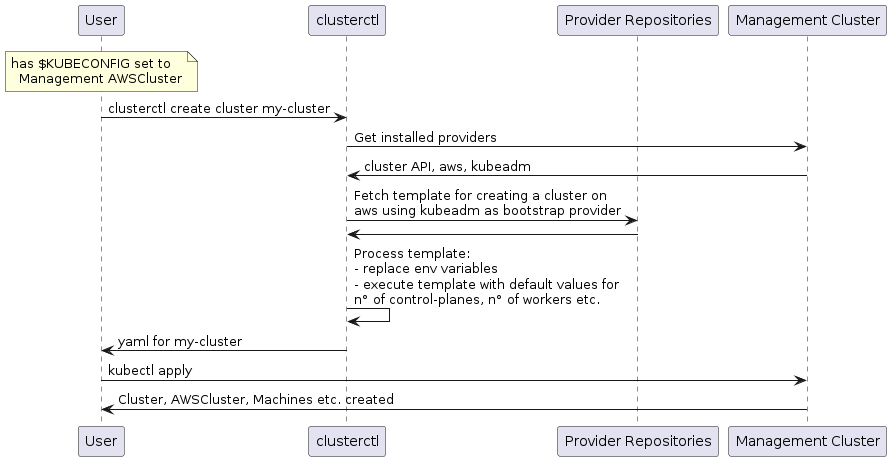

---
title: Clusterctl redesign - Improve user experience and management across Cluster API providers
authors:
  - "@timothysc"
  - "@frapposelli"
  - "@fabriziopandini"
reviewers:
  - "@detiber"
  - "@ncdc"
  - "@vincepri"
  - "@pablochacin"
  - "@voor"
  - "@jzhoucliqr"
  - "@andrewsykim"
creation-date: 2019-10-16
last-updated: 2019-10-16
status: implementable
---

# Clusterctl redesign - Improve user experience and management across Cluster API providers

## Table of Contents

<!-- START doctoc generated TOC please keep comment here to allow auto update -->
<!-- DON'T EDIT THIS SECTION, INSTEAD RE-RUN doctoc TO UPDATE -->

- [Glossary](#glossary)
- [Summary](#summary)
- [Motivation](#motivation)
  - [Goals](#goals)
  - [Non-Goals/Future Work](#non-goalsfuture-work)
- [Proposal](#proposal)
  - [Preconditions](#preconditions)
  - [User Stories](#user-stories)
    - [Initial Deployment](#initial-deployment)
    - [Day Two Operations “Lifecycle Management”](#day-two-operations-lifecycle-management)
    - [Target Cluster Pivot Management](#target-cluster-pivot-management)
    - [Provider Enablement](#provider-enablement)
  - [Implementation Details/Notes/Constraints](#implementation-detailsnotesconstraints)
    - [Init sequence](#init-sequence)
    - [Day 2 operations](#day-2-operations)
  - [Risks and Mitigations](#risks-and-mitigations)
- [Upgrade Strategy](#upgrade-strategy)
- [Additional Details](#additional-details)
  - [Test Plan [optional]](#test-plan-optional)
  - [Graduation Criteria [optional]](#graduation-criteria-optional)
  - [Version Skew Strategy](#version-skew-strategy)
- [Implementation History](#implementation-history)

<!-- END doctoc generated TOC please keep comment here to allow auto update -->

## Glossary

Refer to the [Cluster API Book
Glossary](https://cluster-api.sigs.k8s.io/reference/glossary.html).

## Summary

Cluster API is a Kubernetes project that brings declarative, [Kubernetes-style APIs](https://kubernetes.io/docs/concepts/overview/kubernetes-api/) to cluster creation, configuration, and management. The project is highly successful, and has been adopted by a number of different providers. Despite its popularity, the end user experience is fragmented across providers, and users are often confused by differing version and release semantics and by different tools.

In this proposal we outline the rationale for a redesign of clusterctl, with the primary purpose of unifying the user experience and lifecycle management across Cluster API based providers.

## Motivation

One of the most relevant areas for improvement of Cluster API is the end user experience, which is fragmented across providers. The user experience is currently not optimized for the “day one” user story despite the improvements in the documentation.

Originally, one of the root causes of this problem was the existence of a separate copy of clusterctl for each Cluster API provider, each having a slightly different set of features (different flags, different subcommands).

Another source of confusion is the current scope of clusterctl, which in some cases expanded beyond lifecycle management of Cluster API providers. For example, the support for different mechanisms for creating a bootstrap cluster (minikube, kind) adds unnecessary complexity and support burden.

As a result, we are proposing a redesign of clusterctl with an emphasis on lifecycle management of Cluster API providers, and a unified user experience across all the Cluster API based providers.

### Goals

- Create a CLI that is optimized for the “day one” experience of using Cluster API.
- Simplify lifecycle management (installation, upgrade, removal) of provider specific components.
  This includes CRDs, controllers, etc.
- Enable new providers, or new versions of existing providers, to be added without recompiling.
- Provide a consistent user experience across Cluster API providers.
- Make provider specific deployment templates, or flavors, available to end users. E.g. dev, test, prod, etc.
- Provide support for air-gapped environments.
- Create a well-factored client library that can be leveraged by management tools.

### Non-Goals/Future Work

- To control the lifecycle of the management cluster. Users are expected to bring their own management cluster, either local (minikube/kind) or remote (AWS, vSphere, etc.).
- To own provider specific preconditions (this can be handled in a number of different ways).
- To manage the lifecycle of target, or workload, clusters. This is the domain of Cluster API providers, and not of clusterctl (at this time).
- To install network addons or any other components in the workload clusters.
- To become a general purpose cluster management tool. E.g. collect logs, monitor resources, etc.
- To abstract away provider specific details.

## Proposal

In this section we will outline a high level overview of the proposed tool and its associated user stories.

### Preconditions

Prior to running clusterctl, the operator must have a valid KUBECONFIG and context for a running management cluster, following the same rules as kubectl.

### User Stories

#### Initial Deployment

- As a Kubernetes operator, I’d like to have a simple way for clusterctl to install Cluster API providers into a management cluster, using a very limited set of resources and prerequisites, ideally from a laptop with something I can install with just a couple of terminal commands.
- As a Kubernetes operator, I’d like to have the ability to provision clusters on different providers, using the same management cluster.
- As a Kubernetes operator I would like to have a consistent user experience to configure and deploy clusters across cloud providers.

#### Day Two Operations “Lifecycle Management”

- As a Kubernetes operator I would like to be able to install new Cluster API providers into a management cluster.
- As a Kubernetes operator I would like to be able to upgrade Cluster API components (including CRDs, controllers, etc).
- As a Kubernetes operator I would like to have a simple user experience to cleanly remove the Cluster API objects from a management cluster.

#### Target Cluster Pivot Management

- As a Kubernetes operator I would like to pivot Cluster API components from a management cluster to a target cluster.

#### Provider Enablement

- As a Cluster API provider developer, I would like to use my current implementation, or a new version, with clusterctl without recompiling.

### Implementation Details/Notes/Constraints

> Portions of clusterctl may require changes to the Cluster API data model and/or the definition of new conventions for the providers in order to enable the user stories listed above; therefore, a roadmap and feature deliverables will need to be coordinated over time.

As of this writing, we envision clusterctl’s component model to look similar to the diagram below:

Clusterctl consists of a single binary artifact implementing a CLI; the same features exposed by the CLI will be made available to other management tools via a client library.

During provider installation, the clusterctl internal pkg/lib is expected to access provider repositories and read the yaml file specifying all the provider components to be installed in the management cluster.

If the provider repository contains deployment templates, or flavors, clusterctl could be used to generate yaml for creating new workload clusters.

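As a rough illustration of the template idea above, the sketch below renders a cluster template by substituting a single variable. The `${CLUSTER_NAME}` placeholder syntax and the `render_cluster_template` helper are assumptions made for this example only; the proposal leaves the template naming convention and the template variables as TBD.

```bash
# render_cluster_template: hypothetical helper, not part of clusterctl.
# Reads a cluster template on stdin and writes the rendered yaml to stdout,
# replacing every ${CLUSTER_NAME} placeholder (assumed syntax) with $1.
render_cluster_template() {
  local cluster_name="$1"

  # Reject empty names and names with characters outside [a-z0-9.-], so the
  # value can be used safely in the sed replacement below.
  case "$cluster_name" in
    ''|*[!a-z0-9.-]*)
      echo "error: a valid cluster name is required" >&2
      return 1
      ;;
  esac

  # [$] matches a literal dollar sign; the braces are literal in a basic regex.
  sed "s/[$]{CLUSTER_NAME}/${cluster_name}/g"
}
```

With a template file at hand (the file name here is hypothetical), the output could be piped to kubectl in the same way as the `clusterctl config cluster` example in this document: `render_cluster_template my-first-cluster < cluster-template.yaml | kubectl apply -f -`.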

Behind the scenes clusterctl will apply labels to all providers’ components and use a set of custom objects for keeping track of some metadata about installed providers, such as the current version or the namespace where the components are installed.

#### Init sequence

The clusterctl CLI is optimized for the “day one” experience of using Cluster API, and it will be possible to create a first cluster with two commands after the prerequisites are met:

```bash
# Install the Cluster API components and the AWS infrastructure provider.
clusterctl init --infrastructure aws
# Generate the yaml for a new workload cluster and apply it.
clusterctl config cluster my-first-cluster | kubectl apply -f -
```

Then, as of today, the CNI of choice should be applied to the new cluster:

```bash
# Extract the workload cluster kubeconfig (--decode works with both GNU and macOS base64).
kubectl get secret my-first-cluster-kubeconfig -o=jsonpath='{.data.value}' | base64 --decode > my-first-cluster.kubeconfig
# MY_CNI is a placeholder for the manifest of the CNI of choice.
kubectl apply --kubeconfig=my-first-cluster.kubeconfig -f MY_CNI
```

The internal flow for the above commands is the following:

**clusterctl init (AWS):**

Please note that the “day one” experience:

1. Is based on a list of pre-configured provider repositories; it is possible to add new provider configurations to the list of known providers by using the clusterctl config file (see day 2 operations for more details about this).
2. Assumes init will replace env variables defined in the components yaml read from provider repositories, or error out if such variables are missing.
3. Assumes providers will be installed in the namespace defined in the components yaml; at the time of this writing, all the major providers ship with components yaml creating dedicated namespaces. This could be customized by specifying the --target-namespace flag.
4. Assumes that the namespace each provider watches for objects is not changed; at the time of this writing, all the major providers ship with components yaml watching for objects in all namespaces.
   Once issue [1490](https://github.com/kubernetes-sigs/cluster-api/issues/1490) is addressed, this could be customized by specifying the --watching-namespace flag.

**clusterctl config:**

Please note that the “day one” experience:

1. Assumes only one infrastructure provider and only one bootstrap provider are installed in the cluster; if more than one provider is installed, it will be possible to specify the target provider using the --infrastructure and --bootstrap flags.
2. Assumes a naming convention is established for fetching templates from a provider repository (details TBD).
3. Similarly, assumes template variables are defined (details TBD).

#### Day 2 operations

The clusterctl command will provide support for the following operations:

- Add more provider configurations using the clusterctl config file [1]
- Override default provider configurations using the clusterctl config file [1]
- Add more providers after init, optionally specifying the target namespace and the watching namespace [2]
- Add more instances of an existing provider in another namespace/with a non-overlapping watching namespace [2]
- Get the yaml for installing a provider, thus allowing full customization for advanced users
- Create additional clusters, optionally specifying template parameters
- Get the yaml for creating a new cluster, thus allowing full customization for advanced users
- Pivot the management cluster to another management cluster (see next paragraph)
- Upgrade a provider (see next paragraph)
- Delete a provider (see next paragraph)
- Reset the management cluster (see next paragraph)

*[1] clusterctl will support pluggable provider repository implementations. The current plan is to support:*

- *GitHub release assets*
- *GitHub tree e.g. for deploying a provider from a specific commit*
- *http/https web servers e.g. as a cheap alternative for mirroring GitHub in air-gapped environments*
- *file system e.g. for deploying a provider from the local dev environment*

*Please note that GitHub release assets is the reference implementation of a provider repository; other provider repository types might have specific limitations with respect to the reference implementation (details TBD).*

*[2] The clusterctl command will try to prevent the user from creating invalid configurations, e.g. (details TBD):*

- *Installing different versions of the same provider, because providers have a mix of namespaced objects and global objects, and thus it is not possible to fully isolate provider versions.*
- *Installing more instances of the same provider fighting for objects (watching objects in overlapping namespaces).*

*In any case, the user will be allowed to ignore the above warnings with a --force flag.*

**clusterctl pivot --target-cluster**

With the new version of clusterctl the pivoting sequence is not critical to the init workflow. Nevertheless, clusterctl will preserve the possibility to pivot an existing management cluster to a target cluster.

The implementation of pivoting will take advantage of the labels applied by clusterctl for identifying provider components, and of the auto labeling of cluster resources (see [1489](https://github.com/kubernetes-sigs/cluster-api/issues/1489)) for identifying cluster objects.

**clusterctl upgrade [provider]**

The clusterctl upgrade sequence is responsible for upgrading a provider version [1] [2].

At a high level, the upgrade sequence consists of two operations:

1. Delete all the provider components of the current version [3]
2. Create provider components for the new version

The new provider version will then be responsible for conversion of the related Cluster API objects when necessary.

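The two operations above can be pictured with plain kubectl commands. This is only a sketch under stated assumptions: the `upgrade_provider` function, the `example.com/clusterctl-provider` label key, and the list of resource types are hypothetical, since the proposal does not name the labels clusterctl applies. CRDs are left out of the delete step because their handling during upgrades is still TBD.

```bash
# upgrade_provider: hypothetical sketch of the two-step upgrade sequence.
#   $1 = provider name
#   $2 = path to the components yaml of the new provider version
upgrade_provider() {
  local provider="$1" new_components="$2"
  # Assumed label key; the proposal does not specify the actual labels.
  local selector="example.com/clusterctl-provider=${provider}"

  # Step 1: delete the components of the current version, selected by label.
  # The resource list is illustrative and deliberately excludes CRDs.
  kubectl delete deployments,services,roles,rolebindings,clusterroles,clusterrolebindings \
    --all-namespaces --selector "$selector" || return 1

  # Step 2: create the components of the new version.
  kubectl apply -f "$new_components"
}
```

Stopping after a failed delete keeps the sketch from installing a new version on top of leftovers from the old one; whether clusterctl should behave this way is a design choice the proposal does not settle.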

*[1] Upgrading the management cluster and upgrading workload clusters are considered out of scope of clusterctl upgrades.*

*[2] If more than one instance of the same provider is installed on the management cluster, all the instances of the same provider will be upgraded in a single operation, because providers have a mix of namespaced objects and global objects, and thus it is not possible to fully isolate provider versions.*

*[3] TBD: exact details with regard to provider CRDs in order to make object conversion possible.*

**clusterctl delete [provider]**

The sequence for deleting a provider consists of the following actions:

1. Identify all the provider components for a provider using the labels applied by clusterctl during install
2. Delete all the provider components [1] with the exception of CRD definitions [2]

*[1] If more than one instance of the same provider is installed on the management cluster, only the provider components in the provider namespace are going to be deleted, thus preserving global components required for the proper functioning of other instances.*
*TBD: whether delete should force deletion of workload clusters or preserve them / how to make this behavior configurable.*

*[2] The clusterctl tool always tries to preserve what is actually running and deployed, in this case the Cluster API objects for workload clusters. The --hard flag can be used to force deletion of the Cluster API objects and CRD definitions.*

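The delete sequence can be sketched with kubectl label selectors as follows. The `delete_provider` function, the `example.com/clusterctl-provider` label key, and the resource list are assumptions for illustration; only the --hard flag and the rule of preserving CRD definitions by default come from the text above.

```bash
# delete_provider: hypothetical sketch of the delete sequence.
#   $1 = provider name
#   $2 = namespace where this provider instance is installed
#   $3 = optional "--hard" to also delete the CRD definitions
delete_provider() {
  local provider="$1" namespace="$2" mode="${3:-}"
  # Assumed label key; the proposal does not specify the actual labels.
  local selector="example.com/clusterctl-provider=${provider}"

  # Delete only the labeled components in the provider namespace, so that
  # global components used by other instances are preserved.
  kubectl delete deployments,services,roles,rolebindings \
    --namespace "$namespace" --selector "$selector" || return 1

  # CRD definitions are preserved by default. Deleting a CRD also deletes
  # every object of that kind, which is why it requires --hard here.
  if [ "$mode" = "--hard" ]; then
    kubectl delete customresourcedefinitions --selector "$selector"
  fi
}
```

For example, `delete_provider aws my-provider-namespace` would leave the CRDs and the Cluster API objects in place, while adding `--hard` as a third argument would remove them as well (the namespace name is a placeholder).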

**clusterctl reset**

The goal of the reset sequence is to restore the management cluster to its initial state [1]; this will essentially be implemented as a hard deletion of all the installed providers.

*[1] TBD: decide whether delete should force deletion of workload clusters or preserve them (or give the user an option for this).*

### Risks and Mitigations

- R: Changes in clusterctl behavior can disrupt some current users.
  - M: Work with the community to refine this spec and take their feedback into account.
- R: The precondition of having a working Kubernetes cluster might raise the adoption bar, especially for new users.
  - M: Document requirements and quick ways to get a working Kubernetes cluster either locally (e.g. kind) or in the cloud (e.g. GKE, EKS, etc.).

## Upgrade Strategy

Upgrading clusterctl should be a simple binary replacement.

TBD: how to make the new clusterctl version read metadata from existing clusters with a potentially older CRD version.

## Additional Details

### Test Plan [optional]

Standard unit/integration & e2e behavioral test plans will apply.

### Graduation Criteria [optional]

TBD - At the time of this writing it is too early to determine graduation criteria.

### Version Skew Strategy

TBD - At the time of this writing it is too early to determine version skew limitations/constraints.

## Implementation History

- [timothysc/frapposelli] 2019-01-07: Initial creation of clusteradm precursor proposal
- [fabriziopandini/timothysc/frapposelli] 2019-10-16: Rescoped to clusterctl redesign