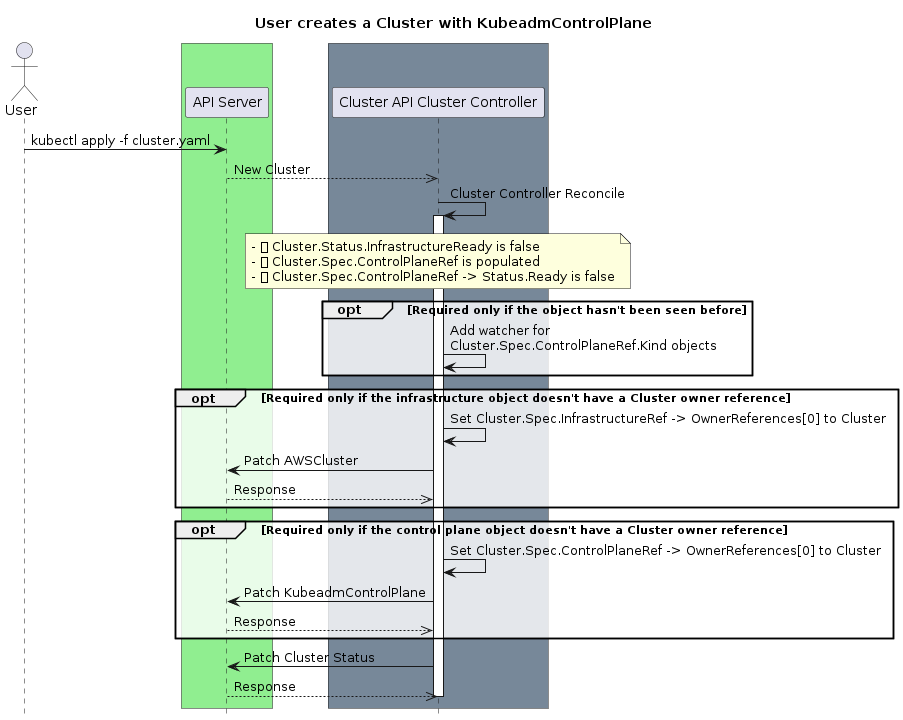

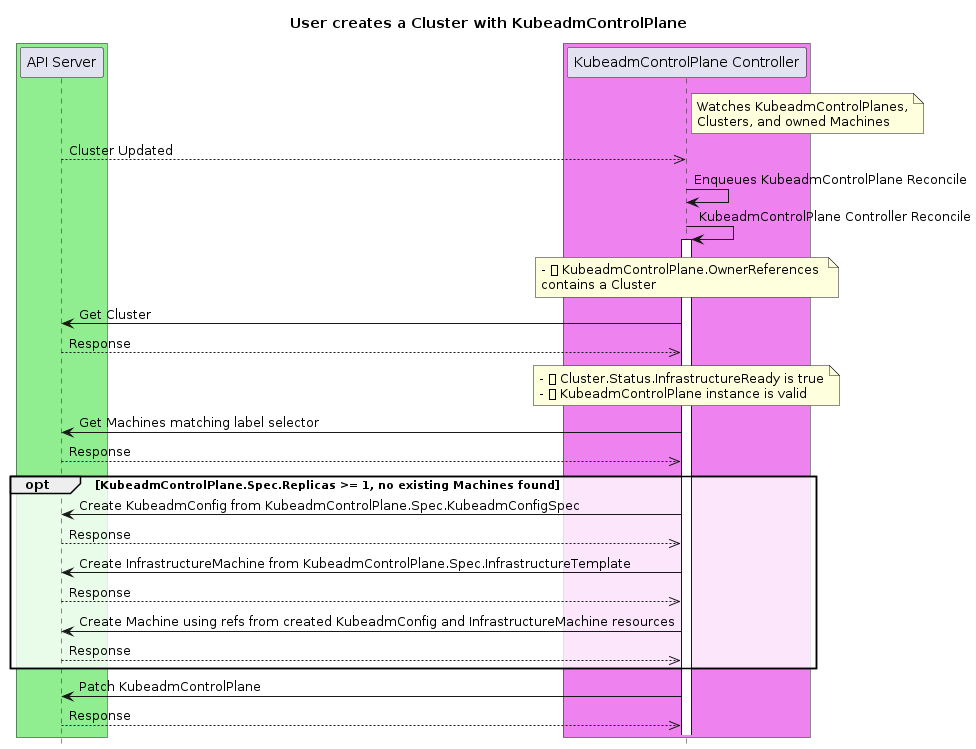

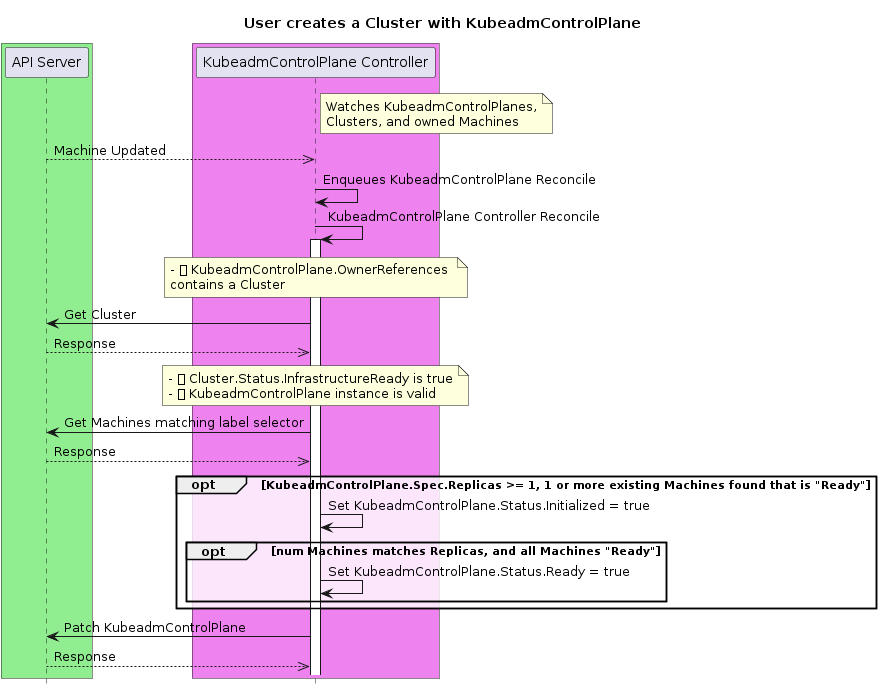

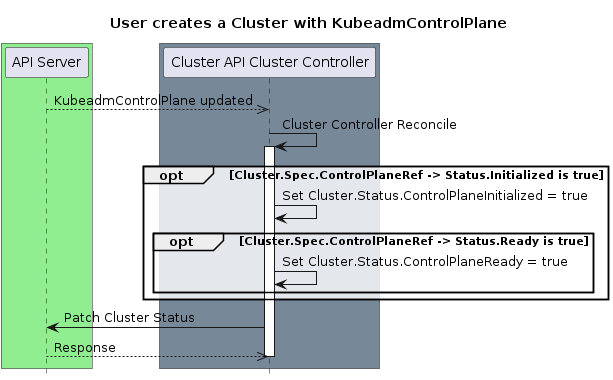

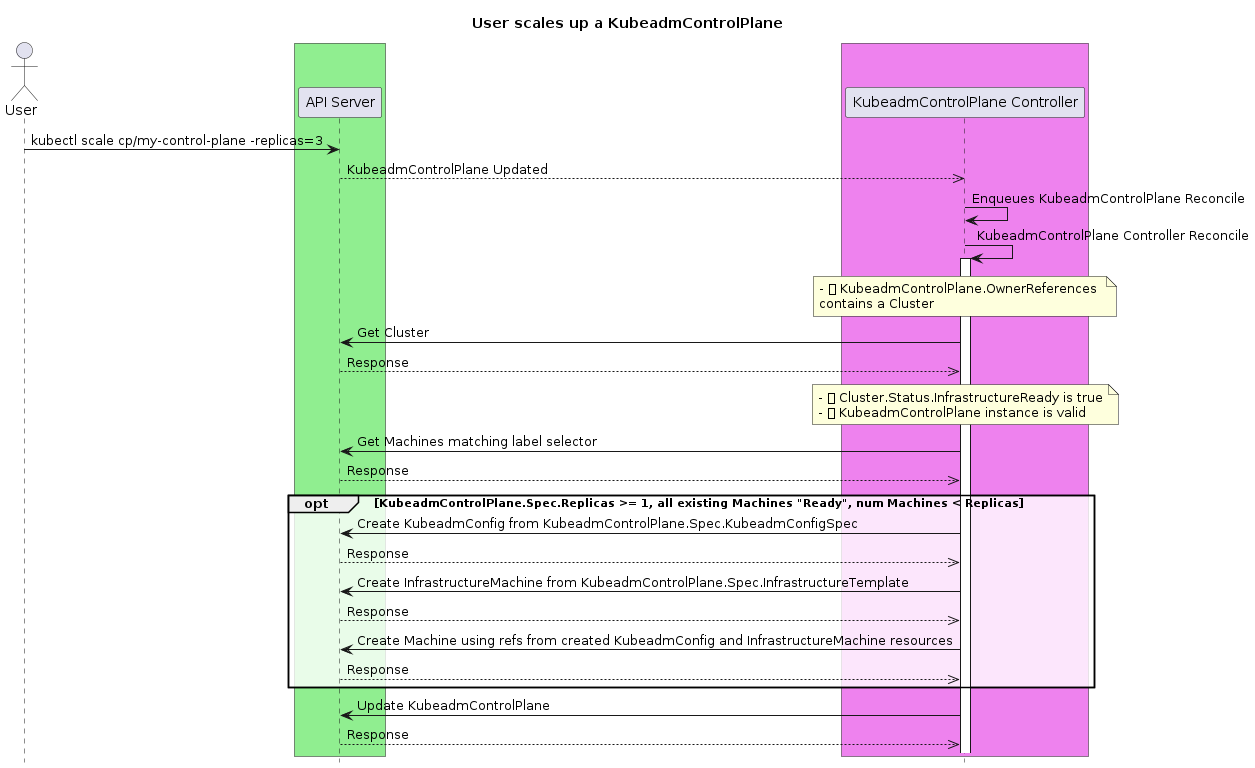

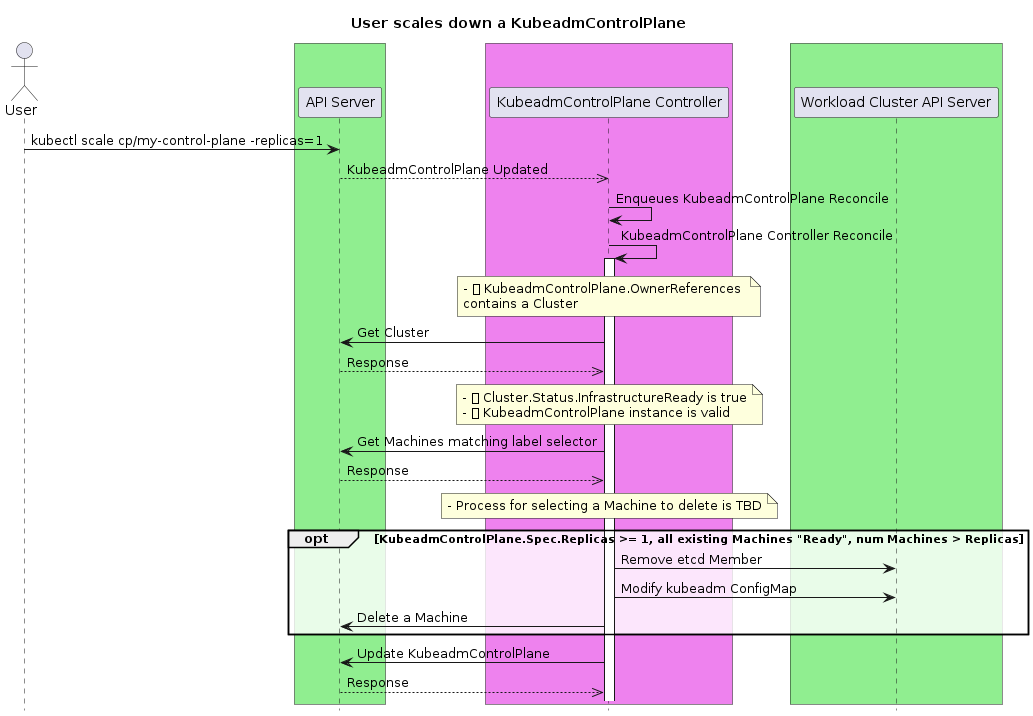

sigs.k8s.io/cluster-api@v1.7.1/docs/proposals/20191017-kubeadm-based-control-plane.md (about) 1 --- 2 title: Kubeadm Based Control Plane Management 3 authors: 4 - "@detiber" 5 - "@chuckha" 6 - "@randomvariable" 7 - "@dlipovetsky" 8 - "@amy" 9 reviewers: 10 - "@ncdc" 11 - "@timothysc" 12 - "@vincepri" 13 - "@akutz" 14 - "@jaypipes" 15 - "@pablochacin" 16 - "@rsmitty" 17 - "@CecileRobertMichon" 18 - "@hardikdr" 19 - "@sbueringer" 20 creation-date: 2019-10-17 21 last-updated: 2021-01-07 22 status: implementable 23 --- 24 25 # Kubeadm Based Control Plane Management 26 27 ## Table of Contents 28 29 <!-- START doctoc generated TOC please keep comment here to allow auto update --> 30 <!-- DON'T EDIT THIS SECTION, INSTEAD RE-RUN doctoc TO UPDATE --> 31 32 - [Glossary](#glossary) 33 - [References](#references) 34 - [Summary](#summary) 35 - [Motivation](#motivation) 36 - [Goals](#goals) 37 - [Non-Goals / Future Work](#non-goals--future-work) 38 - [Proposal](#proposal) 39 - [User Stories](#user-stories) 40 - [Identified features from user stories](#identified-features-from-user-stories) 41 - [Implementation Details/Notes/Constraints](#implementation-detailsnotesconstraints) 42 - [New API Types](#new-api-types) 43 - [Rollout strategy](#rollout-strategy) 44 - [Modifications required to existing API Types](#modifications-required-to-existing-api-types) 45 - [Behavioral Changes from v1alpha2](#behavioral-changes-from-v1alpha2) 46 - [Behaviors](#behaviors) 47 - [Create](#create) 48 - [Scale Up](#scale-up) 49 - [Scale Down](#scale-down) 50 - [Delete of the entire KubeadmControlPlane (kubectl delete controlplane my-controlplane)](#delete-of-the-entire-kubeadmcontrolplane-kubectl-delete-controlplane-my-controlplane) 51 - [KubeadmControlPlane rollout](#kubeadmcontrolplane-rollout) 52 - [Rolling update strategy](#rolling-update-strategy) 53 - [Constraints and Assumptions](#constraints-and-assumptions) 54 - [Remediation (using delete-and-recreate)](#remediation-using-delete-and-recreate) 55 - [Why delete and recreate](#why-delete-and-recreate) 56 - [Scenario 1: Three replicas, one machine marked for remediation](#scenario-1-three-replicas-one-machine-marked-for-remediation) 57 - [Scenario 2: Three replicas, two machines marked for remediation](#scenario-2-three-replicas-two-machines-marked-for-remediation) 58 - [Scenario 3: Three replicas, one unresponsive etcd member, one (different) unhealthy machine](#scenario-3-three-replicas-one-unresponsive-etcd-member-one-different-unhealthy-machine) 59 - [Scenario 4: Unhealthy machines combined with rollout](#scenario-4-unhealthy-machines-combined-with-rollout) 60 - [Preflight checks](#preflight-checks) 61 - [Etcd (external)](#etcd-external) 62 - [Etcd (stacked)](#etcd-stacked) 63 - [Kubernetes Control Plane](#kubernetes-control-plane) 64 - [Adoption of pre-v1alpha3 Control Plane Machines](#adoption-of-pre-v1alpha3-control-plane-machines) 65 - [Code organization](#code-organization) 66 - [Risks and Mitigations](#risks-and-mitigations) 67 - [etcd membership](#etcd-membership) 68 - [Upgrade where changes needed to KubeadmConfig are not currently possible](#upgrade-where-changes-needed-to-kubeadmconfig-are-not-currently-possible) 69 - [Design Details](#design-details) 70 - [Test Plan](#test-plan) 71 - [Graduation Criteria](#graduation-criteria) 72 - [Alpha -> Beta Graduation](#alpha---beta-graduation) 73 - [Upgrade Strategy](#upgrade-strategy) 74 - [Alternatives](#alternatives) 75 - [Implementation History](#implementation-history) 76 77 <!-- END doctoc generated TOC please keep comment here to allow auto update --> 78 79 ## Glossary 80 81 The lexicon used in this document is described in more detail [here](https://github.com/kubernetes-sigs/cluster-api/blob/main/docs/book/src/reference/glossary.md). Any discrepancies should be rectified in the main Cluster API glossary. 82 83 ### References 84 85 [Kubernetes Control Plane Management Overview](https://docs.google.com/document/d/1nlWKEr9OP3IeZO5W2cMd3A55ZXLOXcnc6dFu0K9_bms/edit#) 86 87 ## Summary 88 89 This proposal outlines a new process for Cluster API to manage control plane machines as a single concept. This includes 90 upgrading, scaling up, and modifying the underlying image (e.g. AMI) of the control plane machines. 91 92 The control plane covered by this document is defined as the Kubernetes API server, scheduler, controller manager, DNS 93 and proxy services, and the underlying etcd data store. 94 95 ## Motivation 96 97 During 2019 we saw control plane management implementations in each infrastructure provider. Much like 98 bootstrapping was identified as being reimplemented in every infrastructure provider and then extracted into Cluster API 99 Bootstrap Provider Kubeadm (CABPK), we believe we can reduce the redundancy of control plane management across providers 100 and centralize the logic in Cluster API. We also wanted to ensure that default control plane management and use of any alternative control plane management solutions are separated. 101 102 ### Goals 103 104 - To establish new resource types for control plane management 105 - To support single node and multiple node control plane instances, with the requirement that the infrastructure provider supports some type of a stable endpoint for the API Server (Load Balancer, VIP, etc). 106 - To enable scaling of the number of control plane nodes 107 - To enable declarative orchestrated control plane upgrades 108 - To provide a default machine-based implementation using kubeadm 109 - To provide a kubeadm-based implementation that is infrastructure provider agnostic 110 - To enable declarative orchestrated replacement of control plane machines, such as to roll out an OS-level CVE fix. 111 - To support Rolling Update type of rollout strategy (similar to MachineDeployment) in KubeadmControlPlane. 112 - To manage a kubeadm-based, "stacked etcd" control plane 113 - To manage a kubeadm-based, "external etcd" control plane (using a pre-existing, user-managed, etcd clusters). 114 - To manage control plane deployments across failure domains. 115 - To support user-initiated remediation: 116 E.g. user deletes a Machine. Control Plane Provider reconciles by removing the corresponding etcd member and updating related metadata 117 - To support auto remediation triggered by MachineHealthCheck objects: 118 E.g. a MachineHealthCheck marks a machine for remediation. Control Plane Provider reconciles by removing the machine and replaces it with a new one 119 **if and only if** the operation is not potentially destructive for the cluster (e.g. the operation could cause a permanent quorum loss). 120 121 ### Non-Goals / Future Work 122 123 Non-Goals listed in this document are intended to scope bound the current v1alpha3 implementation and are subject to change based on user feedback over time. 124 125 - To manage non-machine based topologies, e.g. 126 - Pod based control planes. 127 - Non-node control planes (i.e. EKS, GKE, AKS). 128 - To define a mechanism for providing a stable API endpoint for providers that do not currently have one, follow up work for this will be tracked on [this issue](https://github.com/kubernetes-sigs/cluster-api/issues/1687) 129 - To predefine the exact contract/interoperability mechanism for alternative control plane providers, follow up work for this will be tracked on [this issue](https://github.com/kubernetes-sigs/cluster-api/issues/1727) 130 - To manage CA certificates outside of what is provided by Kubeadm bootstrapping 131 - To manage etcd clusters in any topology other than stacked etcd (externally managed etcd clusters can still be leveraged). 132 - To address disaster recovery constraints, e.g. restoring a control plane from 0 replicas using a filesystem or volume snapshot copy of data persisted in etcd. 133 - To support rollbacks, as there is no data store rollback guarantee for Kubernetes. Consumers should perform backups of the cluster prior to performing potentially destructive operations. 134 - To mutate the configuration of live, running clusters (e.g. changing api-server flags), as this is the responsibility of the [component configuration working group](https://github.com/orgs/kubernetes/projects/26). 135 - To provide configuration of external cloud providers (i.e. the [cloud-controller-manager](https://kubernetes.io/docs/tasks/administer-cluster/running-cloud-controller/)). This is deferred to kubeadm. 136 - To provide CNI configuration. This is deferred to external, higher level tooling. 137 - To provide the upgrade logic to handle changes to infrastructure (networks, firewalls etc…) that may need to be done to support a control plane on a newer version of Kubernetes (e.g. a cloud controller manager requires updated permissions against infrastructure APIs). We expect the work on [add-on components](https://git.k8s.io/community/sig-cluster-lifecycle#cluster-addons) to help to resolve some of these issues. 138 - To provide automation around the horizontal or vertical scaling of control plane components, especially as etcd places hard performance limits beyond 3 nodes (due to latency). 139 - To support upgrades where the infrastructure does not rely on a Load Balancer for access to the API Server. 140 - To implement a fully modeled state machine and/or Conditions, a larger effort for Cluster API more broadly is being organized on [this issue](https://github.com/kubernetes-sigs/cluster-api/issues/1658) 141 142 ## Proposal 143 144 ### User Stories 145 146 1. As a cluster operator, I want my Kubernetes clusters to have multiple control plane machines to meet my SLOs with application developers. 147 2. As a developer, I want to be able to deploy the smallest possible cluster, e.g. to meet my organization’s cost requirements. 148 3. As a cluster operator, I want to be able to scale up my control plane to meet the increased demand that workloads are placing on my cluster. 149 4. As a cluster operator, I want to be able to remove a control plane replica that I have determined is faulty and should be replaced. 150 5. As a cluster operator, I want my cluster architecture to be always consistent with best practices, in order to have reliable cluster provisioning without having to understand the details of underlying data stores, replication etc… 151 6. As a cluster operator, I want to know if my cluster’s control plane is healthy in order to understand if I am meeting my SLOs with my end users. 152 7. As a cluster operator, I want to be able to quickly respond to a Kubernetes CVE by upgrading my clusters in an automated fashion. 153 8. As a cluster operator, I want to be able to quickly respond to a non-Kubernetes CVE that affects my base image or Kubernetes dependencies by upgrading my clusters in an automated fashion. 154 9. As a cluster operator, I would like to upgrade to a new minor version of Kubernetes so that my cluster remains supported. 155 10. As a cluster operator, I want to know that my cluster isn’t working properly after creation. I have ended up with an API server I can access, but kube-proxy isn’t functional or new machines are not registering themselves with the control plane. 156 11. As a cluster operator I would like to use MachineDeployment like rollout strategy to upgrade my control planes. For example in resource constrained environments I would like to my machines to be removed one-by-one before creating a new ones. I would also like to be able to rely on the default control plane upgrade mechanism without any extra effort when specific rollout strategy is not needed. 157 158 #### Identified features from user stories 159 160 1. Based on the function of kubeadm, the control plane provider must be able to scale the number of replicas of a control plane from 1 to X, meeting user stories 1 through 4. 161 2. To address user story 5, the control plane provider must provide validation of the number of replicas in a control plane. Where the stacked etcd topology is used (i.e., in the default implementation), the number of replicas must be an odd number, as per [etcd best practice](https://etcd.io/docs/v3.3.12/faq/#why-an-odd-number-of-cluster-members). When external etcd is used, any number is valid. 162 3. In service of user story 5, the kubeadm control plane provider must also manage etcd membership via kubeadm as part of scaling down (`kubeadm` takes care of adding the new etcd member when joining). 163 4. The control plane provider should provide indicators of health to meet user story 6 and 10. This should include at least the state of etcd and information about which replicas are currently healthy or not. For the default implementation, health attributes based on artifacts kubeadm installs on the cluster may also be of interest to cluster operators. 164 5. The control plane provider must be able to upgrade a control plane’s version of Kubernetes as well as updating the underlying machine image on where applicable (e.g. virtual machine based infrastructure). 165 6. To address user story 11, the control plane provider must provide Rolling Update strategy similar to MachineDeployment. With `MaxSurge` field user is able to delete old machine first during upgrade. Control plane provider should default the `RolloutStrategy` and `MaxSurge` fields such a way that scaling up is the default behavior during upgrade. 166 167 ### Implementation Details/Notes/Constraints 168 169 #### New API Types 170 171 See [kubeadm_control_plane_types.go](https://github.com/kubernetes-sigs/cluster-api/blob/release-0.3/controlplane/kubeadm/api/v1alpha3/kubeadm_control_plane_types.go) 172 173 With the following validations: 174 175 - If `KubeadmControlPlane.Spec.KubeadmConfigSpec` does not define external etcd (webhook): 176 - `KubeadmControlPlane.Spec.Replicas` is an odd number. 177 - Configuration of external etcd is determined by introspecting the provided `KubeadmConfigSpec`. 178 - `KubeadmControlPlane.Spec.Replicas` is >= 0 or is nil 179 - `KubeadmControlPlane.Spec.Version` should be a valid semantic version 180 - `KubeadmControlPlane.Spec.KubeadmConfigSpec` allows mutations required for supporting following use cases: 181 - Change of imagesRepository/imageTags (with validation of CoreDNS supported upgrade) 182 - Change of node registration options 183 - Change of pre/post kubeadm commands 184 - Change of cloud init files 185 186 And the following defaulting: 187 188 - `KubeadmControlPlane.Spec.Replicas: 1` 189 190 ##### Rollout strategy 191 192 ```go 193 type RolloutStrategyType string 194 195 const ( 196 // Replace the old control planes by new one using rolling update 197 // i.e. gradually scale up or down the old control planes and scale up or down the new one. 198 RollingUpdateStrategyType RolloutStrategyType = "RollingUpdate" 199 ) 200 ``` 201 202 - Add `KubeadmControlPlane.Spec.RolloutStrategy` defined as: 203 204 ```go 205 // The RolloutStrategy to use to replace control plane machines with 206 // new ones. 207 // +optional 208 RolloutStrategy *RolloutStrategy `json:"strategy,omitempty"` 209 ``` 210 211 - Add `KubeadmControlPlane.RolloutStrategy` struct defined as: 212 213 ```go 214 // RolloutStrategy describes how to replace existing machines 215 // with new ones. 216 type RolloutStrategy struct { 217 // Type of rollout. Currently the only supported strategy is 218 // "RollingUpdate". 219 // Default is RollingUpdate. 220 // +optional 221 Type RolloutStrategyType `json:"type,omitempty"` 222 223 // Rolling update config params. Present only if 224 // RolloutStrategyType = RollingUpdate. 225 // +optional 226 RollingUpdate *RollingUpdate `json:"rollingUpdate,omitempty"` 227 } 228 ``` 229 230 - Add `KubeadmControlPlane.RollingUpdate` struct defined as: 231 232 ```go 233 // RollingUpdate is used to control the desired behavior of rolling update. 234 type RollingUpdate struct { 235 // The maximum number of control planes that can be scheduled above or under the 236 // desired number of control planes. 237 // Value can be an absolute number 1 or 0. 238 // Defaults to 1. 239 // Example: when this is set to 1, the control plane can be scaled 240 // up immediately when the rolling update starts. 241 // +optional 242 MaxSurge *intstr.IntOrString `json:"maxSurge,omitempty"` 243 } 244 ``` 245 246 #### Modifications required to existing API Types 247 248 - Add `Cluster.Spec.ControlPlaneRef` defined as: 249 250 ```go 251 // ControlPlaneRef is an optional reference to a provider-specific resource that holds 252 // the details for provisioning the Control Plane for a Cluster. 253 // +optional 254 ControlPlaneRef *corev1.ObjectReference `json:"controlPlaneRef,omitempty"` 255 ``` 256 257 - Add `Cluster.Status.ControlPlaneReady` defined as: 258 259 ```go 260 // ControlPlaneReady defines if the control plane is ready. 261 // +optional 262 ControlPlaneReady bool `json:"controlPlaneReady,omitempty"` 263 ``` 264 265 #### Behavioral Changes from v1alpha2 266 267 - If Cluster.Spec.ControlPlaneRef is set: 268 - [Status.ControlPlaneInitialized](https://github.com/kubernetes-sigs/cluster-api/issues/1243) is set based on the value of Status.Initialized for the referenced resource. 269 - Status.ControlPlaneReady is set based on the value of Status.Ready for the referenced resource, this field is intended to eventually replace Status.ControlPlaneInitialized as a field that will be kept up to date instead of set only once. 270 - Current behavior will be preserved if `Cluster.Spec.ControlPlaneRef` is not set. 271 - CA certificate secrets that were previously generated by the Kubeadm bootstrapper will now be generated by the KubeadmControlPlane Controller, maintaining backwards compatibility with the previous behavior if the KubeadmControlPlane is not used. 272 - The kubeconfig secret that was previously created by the Cluster Controller will now be generated by the KubeadmControlPlane Controller, maintaining backwards compatibility with the previous behavior if the KubeadmControlPlane is not used. 273 274 #### Behaviors 275 276 ##### Create 277 278 - After a KubeadmControlPlane object is created, it must bootstrap a control plane with a given number of replicas. 279 - `KubeadmControlPlane.Spec.Replicas` must be an odd number. 280 - Can create an arbitrary number of control planes if etcd is external to the control plane, which will be determined by introspecting `KubeadmControlPlane.Spec.KubeadmConfigSpec`. 281 - Creating a KubeadmControlPlane with > 1 replicas is equivalent to creating a KubeadmControlPlane with 1 replica followed by scaling the KubeadmControlPlane to the desired number of replicas 282 - The kubeadm bootstrapping configuration provided via `KubeadmControlPlane.Spec.KubeadmConfigSpec` should specify the `InitConfiguration`, `ClusterConfiguration`, and `JoinConfiguration` stanzas, and the KubeadmControlPlane controller will be responsible for splitting the config and passing it to the underlying Machines created as appropriate. 283 - This is different than current usage of `KubeadmConfig` and `KubeadmConfigTemplate` where it is recommended to specify `InitConfiguration`/`ClusterConfiguration` OR `JoinConfiguration` but not both. 284 - The underlying query used to find existing Control Plane Machines is based on the following hardcoded label selector: 285 286 ```yaml 287 selector: 288 matchLabels: 289 cluster.x-k8s.io/cluster-name: my-cluster 290 cluster.x-k8s.io/control-plane: "" 291 ``` 292 293 - Generate CA certificates if they do not exist 294 - Generate the kubeconfig secret if it does not exist 295 296 Given the following `cluster.yaml` file: 297 298 ```yaml 299 kind: Cluster 300 apiVersion: cluster.x-k8s.io/v1alpha3 301 metadata: 302 name: my-cluster 303 namespace: default 304 spec: 305 clusterNetwork: 306 pods: 307 cidrBlocks: ["192.168.0.0/16"] 308 controlPlaneRef: 309 kind: KubeadmControlPlane 310 apiVersion: cluster.x-k8s.io/v1alpha3 311 name: my-controlplane 312 namespace: default 313 infrastructureRef: 314 kind: AcmeCluster 315 apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2 316 name: my-acmecluster 317 namespace: default 318 --- 319 kind: KubeadmControlPlane 320 apiVersion: cluster.x-k8s.io/v1alpha3 321 metadata: 322 name: my-control-plane 323 namespace: default 324 spec: 325 replicas: 1 326 version: v1.16.0 327 infrastructureTemplate: 328 kind: AcmeProviderMachineTemplate 329 apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2 330 namespace: default 331 name: my-acmemachinetemplate 332 kubeadmConfigSpec: 333 initConfiguration: 334 nodeRegistration: 335 name: '{{ ds.meta_data.local_hostname }}' 336 kubeletExtraArgs: 337 cloud-provider: acme 338 clusterConfiguration: 339 apiServer: 340 extraArgs: 341 cloud-provider: acme 342 controllerManager: 343 extraArgs: 344 cloud-provider: acme 345 joinConfiguration: 346 controlPlane: {} 347 nodeRegistration: 348 name: '{{ ds.meta_data.local_hostname }}' 349 kubeletExtraArgs: 350 cloud-provider: acme 351 --- 352 apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2 353 kind: AcmeProviderMachineTemplate 354 metadata: 355 name: my-acmemachinetemplate 356 namespace: default 357 spec: 358 osImage: 359 id: objectstore-123456abcdef 360 instanceType: θ9.medium 361 iamInstanceProfile: "control-plane.cluster-api-provider-acme.x-k8s.io" 362 sshKeyName: my-ssh-key 363 --- 364 apiVersion: infrastructure.cluster.x-k8s.io/v1alpha2 365 kind: AcmeCluster 366 metadata: 367 name: my-acmecluster 368 namespace: default 369 spec: 370 region: antarctica-1 371 ``` 372 373  374  375  376  377 378 ##### Scale Up 379 380 - Allow scale up a control plane with stacked etcd to only odd numbers, as per 381 [etcd best practice](https://etcd.io/docs/v3.3.12/faq/#why-an-odd-number-of-cluster-members). 382 - However, allow a control plane using an external etcd cluster to scale up to other numbers such as 2 or 4. 383 - Scale up operations must not be done in conjunction with: 384 - Adopting machines 385 - Upgrading machines 386 - Scale up operations are blocked based on Etcd and control plane preflight checks. 387 - See [Preflight checks](#preflight-checks) below. 388 - Scale up operations creates the next machine in the failure domain with the fewest number of machines. 389 390  391 392 ##### Scale Down 393 394 - Allow scale down a control plane with stacked etcd to only odd numbers, as per 395 [etcd best practice](https://etcd.io/docs/v3.3.12/faq/#why-an-odd-number-of-cluster-members). 396 - However, allow a control plane using an external etcd cluster to scale down to other numbers such as 2 or 4. 397 - Scale down operations must not be done in conjunction with: 398 - Adopting machines 399 - Upgrading machines 400 - Scale down operations are blocked based on Etcd and control plane preflight checks. 401 - See [Preflight checks](#preflight-checks) below. 402 - Scale down operations removes the oldest machine in the failure domain that has the most control-plane machines on it. 403 - Allow scaling down of KCP with the possibility of marking specific control plane machine(s) to be deleted with delete annotation key. The presence of the annotation will affect the rollout strategy in a way that, it implements the following prioritization logic in descending order, while selecting machines for scale down: 404 - outdated machines with the delete annotation 405 - machines with the delete annotation 406 - outdated machines with unhealthy control plane component pods 407 - outdated machines 408 - all machines 409 410  411 412 ##### Delete of the entire KubeadmControlPlane (kubectl delete controlplane my-controlplane) 413 414 - KubeadmControlPlane deletion should be blocked until all the worker nodes are deleted. 415 - Completely removing the control plane and issuing a delete on the underlying machines. 416 - User documentation should focus on deletion of the Cluster resource rather than the KubeadmControlPlane resource. 417 418 ##### KubeadmControlPlane rollout 419 420 KubeadmControlPlane rollout operations rely on [scale up](#scale up) and [scale down](#scale_down) which are be blocked based on Etcd and control plane preflight checks. 421 - See [Preflight checks](#preflight-checks) below. 422 423 KubeadmControlPlane rollout is triggered by: 424 425 - Changes to Version 426 - Changes to the kubeadmConfigSpec 427 - Changes to the infrastructureRef 428 - The `rolloutAfter` field, which can be set to a specific time in the future 429 - Set to `nil` or the zero value of `time.Time` if no upgrades are desired 430 - An upgrade will run after that timestamp is passed 431 - Good for scheduling upgrades/SLOs 432 - Set `rolloutAfter` to now (in RFC3339 form) if an upgrade is required immediately 433 434 - The controller should tolerate the manual or automatic removal of a replica during the upgrade process. A replica that fails during the upgrade may block the completion of the upgrade. Removal or other remedial action may be necessary to allow the upgrade to complete. 435 436 - In order to determine if a Machine to be rolled out, KCP implements the following: 437 - The infrastructureRef link used by each machine at creation time is stored in annotations at machine level. 438 - The kubeadmConfigSpec used by each machine at creation time is stored in annotations at machine level. 439 - If the annotation is not present (machine is either old or adopted), we won't roll out on any possible changes made in KCP's ClusterConfiguration given that we don't have enough information to make a decision. Users should use KCP.Spec.RolloutAfter field to force a rollout in this case. 440 441 ##### Rolling update strategy 442 443 Currently KubeadmControlPlane supports only one rollout strategy type the `RollingUpdateStrategyType`. Rolling upgrade strategy's behavior can be modified by using `MaxSurge` field. The field values can be an absolute number 0 or 1. 444 445 When `MaxSurge` is set to 1 the rollout algorithm is as follows: 446 447 - Find Machines that have an outdated spec 448 - If there is a machine requiring rollout 449 - Scale up control plane creating a machine with the new spec 450 - Scale down control plane by removing one of the machine that needs rollout (the oldest out-of date machine in the failure domain that has the most control-plane machines on it) 451 452 When `MaxSurge` is set to 0 the rollout algorithm is as follows: 453 454 - KubeadmControlPlane verifies that control plane replica count is >= 3 455 - Find Machines that have an outdated spec and scale down the control plane by removing the oldest out-of-date machine. 456 - Scale up control plane by creating a new machine with the updated spec 457 458 > NOTE: Setting `MaxSurge` to 0 could be use in resource constrained environment like bare-metal, OpenStack or vSphere resource pools, etc when there is no capacity to Scale up the control plane. 459 460 ###### Constraints and Assumptions 461 462 * A stable endpoint (provided by DNS or IP) for the API server will be required in order 463 to allow for machines to maintain a connection to control plane machines as they are swapped out 464 during upgrades. This proposal is agnostic to how this is achieved, and is being tracked 465 in https://github.com/kubernetes-sigs/cluster-api/issues/1687. The control plane controller will use 466 the presence of the apiEndpoints status field of the cluster object to determine whether or not to proceed. 467 This behaviour is currently implicit in the implementations for cloud providers that provider a load balancer construct. 468 469 * Infrastructure templates are expected to be immutable, so infrastructure template contents do not have to be hashed in order to detect 470 changes. 471 472 ##### Remediation (using delete-and-recreate) 473 474 - KCP remediation is triggered by the MachineHealthCheck controller marking a machine for remediation. See 475 [machine-health-checking proposal](https://github.com/kubernetes-sigs/cluster-api/blob/11485f4f817766c444840d8ea7e4e7d1a6b94cc9/docs/proposals/20191030-machine-health-checking.md) 476 for additional details. When there are multiple machines that are marked for remediation, the oldest machine marked as unhealthy not yet provisioned (if any) 477 or the oldest machine marked as unhealthy will be remediated first. 478 479 - Following rules should be satisfied in order to start remediation 480 - One of the following apply: 481 - The cluster MUST not be initialized yet (the failure happens before KCP reaches the initialized state) 482 - The cluster MUST have at least two control plane machines, because this is the smallest cluster size that can be remediated. 483 - Previous remediation (delete and re-create) MUST have been completed. This rule prevents KCP to remediate more machines while the 484 replacement for the previous machine is not yet created. 485 - The cluster MUST NOT have healthy machines still provisioning (without a node). This rule prevents KCP taking actions while the cluster is in a transitional state. 486 - The cluster MUST have no machines with a deletion timestamp. This rule prevents KCP taking actions while the cluster is in a transitional state. 487 - Remediation MUST preserve etcd quorum. This rule ensures that we will not remove a member that would result in etcd 488 losing a majority of members and thus become unable to field new requests (note: this rule applies only to CP already 489 initialized and with managed etcd) 490 491 - Additionally following opt-in safeguards will be put in place: 492 - If we are remediating the same machine (delete, re-create, replacement machine gets unhealthy), it will be possible 493 to define a maximum number of retries, thus preventing unnecessary load on infrastructure provider e.g. in case of quota problems. 494 - If we are remediating the same machine (delete, re-create, replacement machine gets unhealthy), it will be possible 495 to define a delay between each retry, thus allowing the infrastructure provider to stabilize in case of temporary problems. 496 497 - When all the conditions for starting remediation are satisfied, KCP temporarily suspend any operation in progress 498 in order to perform remediation. 499 - Remediation will be performed by issuing a delete on the unhealthy machine; after deleting the machine, KCP 500 will restore the target number of machines by triggering a scale up (current replicas<desired replicas) and then 501 eventually resume the rollout action. 502 See [why delete and recreate](#why-delete-and-recreate) for an explanation about why KCP should remove the member first and then add its replacement. 503 504 ###### Why delete and recreate 505 506 When replacing a KCP machine the most critical component to be taken into account is etcd, and 507 according to the [etcd documentation](https://etcd.io/docs/v3.5/faq/#should-i-add-a-member-before-removing-an-unhealthy-member), 508 it's important to remove an unhealthy etcd member first and then add its replacement: 509 510 - etcd employs distributed consensus based on a quorum model; (n/2)+1 members, a majority, must agree on a proposal before 511 it can be committed to the cluster. These proposals include key-value updates and membership changes. This model totally 512 avoids potential split brain inconsistencies. The downside is permanent quorum loss is catastrophic. 513 514 - How this applies to membership: If a 3-member cluster has 1 downed member, it can still make forward progress because 515 the quorum is 2 and 2 members are still live. However, adding a new member to a 3-member cluster will increase the quorum 516 to 3 because 3 votes are required for a majority of 4 members. Since the quorum increased, this extra member buys nothing 517 in terms of fault tolerance; the cluster is still one node failure away from being unrecoverable. 518 519 - Additionally, adding new members to an unhealthy control plane might be risky because it may turn out to be misconfigured 520 or incapable of joining the cluster. In that case, there's no way to recover quorum because the cluster has two members 521 down and two members up, but needs three votes to change membership to undo the botched membership addition. etcd will 522 by default reject member add attempts that could take down the cluster in this manner. 523 524 - On the other hand, if the downed member is removed from cluster membership first, the number of members becomes 2 and 525 the quorum remains at 2. Following that removal by adding a new member will also keep the quorum steady at 2. So, even 526 if the new node can't be brought up, it's still possible to remove the new member through quorum on the remaining live members. 527 528 As a consequence KCP remediation should remove unhealthy machine first and then add its replacement. 529 530 Additionally, in order to make this approach more robust, KCP will test each etcd member for responsiveness before 531 using the Status endpoint and determine as best as possible that we would not exceed failure tolerance by removing a machine. 532 This should ensure we are not taking actions in case there are other etcd members not properly working on machines 533 not (yet) marked for remediation by the MachineHealthCheck. 534 535 ###### Scenario 1: Three replicas, one machine marked for remediation 536 537 If MachineHealthCheck marks one machine for remediation in a control-plane with three replicas, we will look at the etcd 538 status of each machine to determine if we have at most one failed member. Assuming the etcd cluster is still all healthy, 539 or the only unresponsive member is the one to be remediated, we will scale down the machine that failed the MHC and 540 then scale up a new machine to replace it. 541 542 ###### Scenario 2: Three replicas, two machines marked for remediation 543 544 If MachineHealthCheck marks two machines for remediation in a control-plane with three replicas, remediation might happen 545 depending on the status of the etcd members on the three replicas. 546 547 As long as we continue to only have at most one unhealthy etcd member, we will scale down an unhealthy machine, 548 wait for it to provision and join the cluster, and then scale down the other machine. 549 550 However, if more than one etcd member is unhealthy, remediation would not happen and manual intervention would be required 551 to fix the unhealthy machine. 552 553 ###### Scenario 3: Three replicas, one unresponsive etcd member, one (different) unhealthy machine 554 555 It is possible to have a scenario where a different machine than the one that failed the MHC has an unresponsive etcd. 556 In this scenario, remediation would not happen and manual intervention would be required to fix the unhealthy machine. 557 558 ###### Scenario 4: Unhealthy machines combined with rollout 559 560 When there exist unhealthy machines and there also have been configuration changes that trigger a rollout of new machines to occur, 561 remediation and rollout will occur in tandem. 562 563 This is to say that unhealthy machines will first be scaled down, and replaced with new machines that match the desired new spec. 564 Once the unhealthy machines have been replaced, the remaining healthy machines will also be replaced one-by-one as well to complete the rollout operation. 565 566 ##### Preflight checks 567 568 This paragraph describes KCP preflight checks specifically designed to ensure a kubeadm 569 generated control-plane is stable before proceeding with KCP actions like scale up, scale down and rollout. 570 571 Preflight checks status is accessible via conditions on the KCP object and/or on the controlled machines. 572 573 ###### Etcd (external) 574 575 Etcd connectivity is the only metric used to assert etcd cluster health. 576 577 ###### Etcd (stacked) 578 579 Etcd is considered healthy if: 580 581 - There are an equal number of control plane Machines and members in the etcd cluster. 582 - This ensures there are no members that are unaccounted for. 583 - Each member reports the same list of members. 584 - This ensures that there is only one etcd cluster. 585 - Each member does not have any active alarms. 586 - This ensures there is nothing wrong with the individual member. 587 588 The KubeadmControlPlane controller uses port-forwarding to get to a specific etcd member. 589 590 ###### Kubernetes Control Plane 591 592 - There are an equal number of control plane Machines and api server pods checked. 593 - This ensures that Cluster API is tracking all control plane machines. 594 - Each control plane node has an api server pod that has the Ready condition. 595 - This ensures that the API server can contact etcd and is ready to accept requests. 596 - Each control plane node has a controller manager and a scheduler pod that has the Ready condition. 597 - This ensures the control plane can manage default Kubernetes resources. 598 599 ##### Adoption of pre-v1alpha3 Control Plane Machines 600 601 - Existing control plane Machines will need to be updated with labels matching the expected label selector. 602 - The KubeadmConfigSpec can be re-created from the referenced KubeadmConfigs for the Machines matching the label selector. 603 - If there is not an existing initConfiguration/clusterConfiguration only the joinConfiguration will be populated. 604 - In v1alpha2, the Cluster API Bootstrap Provider is responsible for generating certificates based upon the first machine to join a cluster. The OwnerRef for these certificates are set to that of the initial machine, which causes an issue if that machine is later deleted. For v1alpha3, control plane certificate generation will be replicated in the KubeadmControlPlane provider. Given that for v1alpha2 these certificates are generated with deterministic names, i.e. prefixed with the cluster name, the migration mechanism should replace the owner reference of these certificates during migration. The bootstrap provider will need to be updated to only fallback to the v1alpha2 secret generation behavior if Cluster.Spec.ControlPlaneRef is nil. 605 - In v1alpha2, the Cluster API Bootstrap Provider is responsible for generating the kubeconfig secret; during adoption the adoption of this secret is set to the KubeadmConfig object. 606 - To ease the adoption of v1alpha3, the migration mechanism should be built into Cluster API controllers. 607 608 #### Code organization 609 610 The types introduced in this proposal will live in the `cluster.x-k8s.io` API group. The controller(s) will also live inside `sigs.k8s.io/cluster-api`. 611 612 ### Risks and Mitigations 613 614 #### etcd membership 615 616 - If the leader is selected for deletion during a replacement for upgrade or scale down, the etcd cluster will be unavailable during that period as leader election takes place. Small time periods of unavailability should not significantly impact the running of the managed cluster’s API server. 617 - Replication of the etcd log, if done for a sufficiently large data store and saturates the network, machines may fail leader election, bringing down the cluster. To mitigate this, the control plane provider will only create machines serially, ensuring cluster health before moving onto operations for the next machine. 618 - When performing a scaling operation, or an upgrade using create-swap-delete, there are periods when there are an even number of nodes. Any network partitions or host failures that occur at this point will cause the etcd cluster to split brain. Etcd 3.4 is under consideration for Kubernetes 1.17, which brings non-voting cluster members, which can be used to safely add new machines without affecting quorum. [Changes to kubeadm](https://github.com/kubernetes/kubeadm/issues/1793) will be required to support this and is out of scope for the time frame of v1alpha3. 619 620 #### Upgrade where changes needed to KubeadmConfig are not currently possible 621 622 - We don't anticipate that this will immediately cause issues, but could potentially cause problems when adopt new versions of the Kubeadm configuration that include features such as kustomize templates. These potentially would need to be modified as part of an upgrade. 623 624 ## Design Details 625 626 ### Test Plan 627 628 Standard unit/integration & e2e behavioral test plans will apply. 629 630 ### Graduation Criteria 631 632 #### Alpha -> Beta Graduation 633 634 This work is too early to detail requirements for graduation to beta. At a minimum, etcd membership and quorum risks will need to be addressed prior to beta. 635 636 ### Upgrade Strategy 637 638 - v1alpha2 managed clusters that match certain known criteria should be able to be adopted as part of the upgrade to v1alpha3, other clusters should continue to function as they did previously. 639 640 ## Alternatives 641 642 For the purposes of designing upgrades, two existing lifecycle managers were examined in detail: kOps and Cloud Foundry Container Runtime. Their approaches are detailed in the accompanying "[Cluster API Upgrade Experience Reports](https://docs.google.com/document/d/1RnUG9mHrS_qmhmm052bO6Wu0dldCamwPmTA1D0jCWW8/edit#)" document. 643 644 ## Implementation History 645 646 - [x] 10/17/2019: Initial Creation 647 - [x] 11/19/2019: Initial KubeadmControlPlane types added [#1765](https://github.com/kubernetes-sigs/cluster-api/pull/1765) 648 - [x] 12/04/2019: Updated References to ErrorMessage/ErrorReason to FailureMessage/FailureReason 649 - [x] 12/04/2019: Initial stubbed KubeadmControlPlane controller added [#1826](https://github.com/kubernetes-sigs/cluster-api/pull/1826) 650 - [x] 07/09/2020: Document updated to reflect changes up to v0.3.9 release 651 - [x] 22/09/2020: KCP remediation added 652 - [x] 10/05/2021: Support for remediation of failures while upgrading 1 node CP 653 - [x] 05/01/2022: Support for remediation while provisioning the CP (both first CP and CP machines while current replica < desired replica); Allow control of remediation retry behavior.