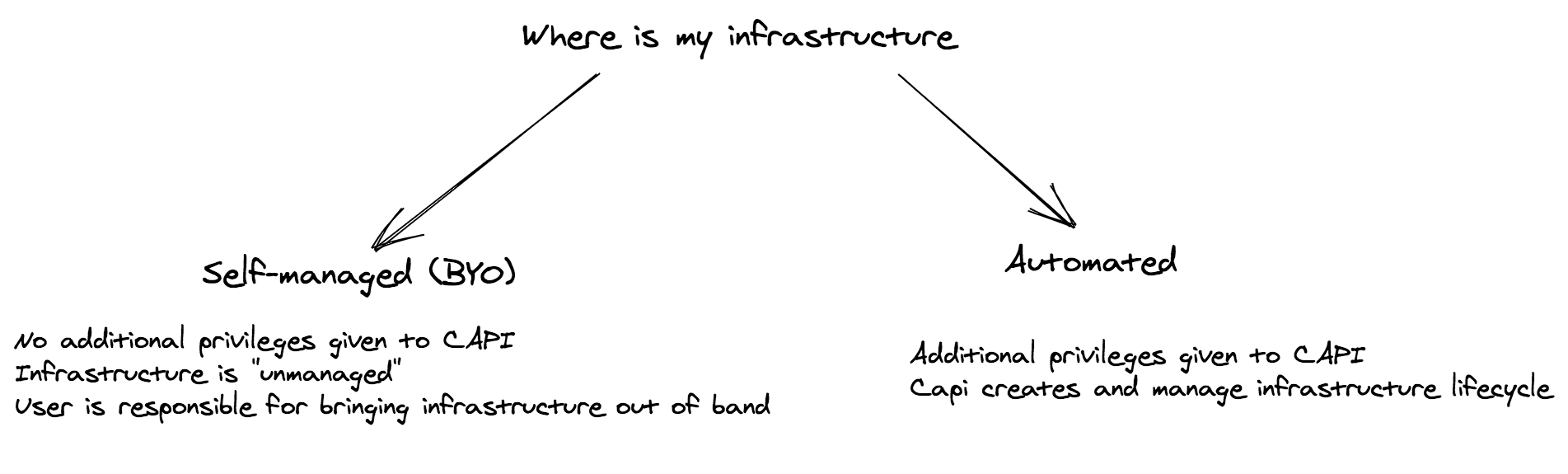

---
title: Externally Managed cluster infrastructure
authors:
  - "@enxebre"
  - "@joelspeed"
  - "@alexander-demichev"
reviewers:
  - "@vincepri"
  - "@randomvariable"
  - "@CecileRobertMichon"
  - "@yastij"
  - "@fabriziopandini"
creation-date: 2021-02-03
last-updated: 2021-02-12
status: implementable
see-also:
replaces:
superseded-by:
---

# Externally Managed cluster infrastructure

## Table of Contents

<!-- START doctoc generated TOC please keep comment here to allow auto update -->
<!-- DON'T EDIT THIS SECTION, INSTEAD RE-RUN doctoc TO UPDATE -->

- [Glossary](#glossary)
  - [Managed cluster infrastructure](#managed-cluster-infrastructure)
  - [Externally managed cluster infrastructure](#externally-managed-cluster-infrastructure)
- [Summary](#summary)
- [Motivation](#motivation)
  - [Goals](#goals)
  - [Non-Goals/Future Work](#non-goalsfuture-work)
- [Proposal](#proposal)
  - [User Stories](#user-stories)
    - [Story 1 - Alternate control plane provisioning with user managed infrastructure](#story-1---alternate-control-plane-provisioning-with-user-managed-infrastructure)
    - [Story 2 - Restricted access to cloud provider APIs](#story-2---restricted-access-to-cloud-provider-apis)
    - [Story 3 - Consuming existing cloud infrastructure](#story-3---consuming-existing-cloud-infrastructure)
  - [Implementation Details/Notes/Constraints](#implementation-detailsnotesconstraints)
    - [Provider implementation changes](#provider-implementation-changes)
  - [Security Model](#security-model)
  - [Risks and Mitigations](#risks-and-mitigations)
    - [What happens when a user converts an externally managed InfraCluster to a managed InfraCluster?](#what-happens-when-a-user-converts-an-externally-managed-infracluster-to-a-managed-infracluster)
  - [Future Work](#future-work)
    - [Marking InfraCluster ready manually](#marking-infracluster-ready-manually)
- [Alternatives](#alternatives)
  - [ExternalInfra CRD](#externalinfra-crd)
  - [ManagementPolicy field](#managementpolicy-field)
- [Upgrade Strategy](#upgrade-strategy)
- [Additional Details](#additional-details)
- [Implementation History](#implementation-history)

<!-- END doctoc generated TOC please keep comment here to allow auto update -->

## Glossary

Refer to the [Cluster API Book Glossary](https://cluster-api.sigs.k8s.io/reference/glossary.html).

### Managed cluster infrastructure

Cluster infrastructure whose lifecycle is managed by a provider InfraCluster controller.
For example, in AWS:
- Network
  - VPC
  - Subnets
  - Internet gateways
  - NAT gateways
  - Route tables
- Security groups
- Load balancers

### Externally managed cluster infrastructure

An InfraCluster resource (usually part of an infrastructure provider) whose lifecycle is managed by an external controller.

## Summary

This proposal introduces support to allow infrastructure cluster resources (e.g. AzureCluster, AWSCluster, vSphereCluster, etc.) to be managed by an external controller or tool.

## Motivation

Currently, Cluster API infrastructure providers support an opinionated happy path to create and manage the cluster infrastructure lifecycle.
The fundamental use case we want to support is out-of-tree controllers or tools that can manage these resources.

For example, users could provision cluster infrastructure using tools such as Terraform, Crossplane, or kOps and run CAPI on top of it.

The proposal might also ease adoption of Cluster API in heavily restricted environments where the provider infrastructure for the cluster needs to be managed out of band.

### Goals

- Introduce support for "externally managed" cluster infrastructure consistently across Cluster API providers.
- Machine controllers and machine infrastructure controllers must keep operating as they do today.
- Reuse existing InfraCluster CRDs in "externally managed" clusters to minimise differences between the two topologies.

### Non-Goals/Future Work

- Modify existing managed behaviour.
- Automatically mark InfraCluster resources as ready (this will be up to the external management component initially).
- Support anything other than cluster infrastructure (e.g. machines).

## Proposal

A new annotation, `cluster.x-k8s.io/managed-by: "<name-of-system>"`, will be defined in the Cluster API core repository to identify resources that are managed by external controllers. Cluster API will not check the value of the annotation; it is free-form text.

Infrastructure providers SHOULD respect the annotation and its contract.

When this annotation is present on an InfraCluster resource, the InfraCluster controller is expected to ignore the resource and not perform any reconciliation.
Importantly, it will not modify the resource or its status in any way.
A predicate will be provided in the Cluster API repository to help provider implementations filter out resources that are externally managed.

Additionally, the external management system must populate all required fields in the InfraCluster spec, adhere to the CAPI provider contract, and set the InfraCluster status to ready when it is appropriate to do so.

While the provider will not reconcile an externally managed InfraCluster or manage the lifecycle of its cluster infrastructure, CAPI will still be able to create compute nodes within it.

The machine controller must be able to operate without hard dependencies, regardless of whether the cluster infrastructure is managed or externally managed.
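
As a minimal sketch of the provider side of this contract, the Go snippet below checks for the `cluster.x-k8s.io/managed-by` annotation and returns before touching spec or status. The `infraCluster` type and the `isExternallyManaged` and `reconcile` functions are hypothetical stand-ins, not Cluster API code; a real provider would operate on its own InfraCluster type (for example AWSCluster) and would typically also filter watch events with the predicate mentioned above, so that the reconciler is not invoked at all for externally managed objects.

```go
package main

import "fmt"

// managedByAnnotation is the annotation key defined by this proposal.
// Its value is free-form text and is never inspected.
const managedByAnnotation = "cluster.x-k8s.io/managed-by"

// infraCluster is a hypothetical, trimmed-down stand-in for a provider
// InfraCluster object such as AWSCluster.
type infraCluster struct {
	Name        string
	Annotations map[string]string
	Ready       bool
}

// isExternallyManaged reports whether the managed-by annotation is present.
// Only the presence of the key matters; the value is ignored.
// Looking up a key in a nil map is safe and simply reports "absent".
func isExternallyManaged(annotations map[string]string) bool {
	_, ok := annotations[managedByAnnotation]
	return ok
}

// reconcile sketches the provider controller: externally managed objects are
// skipped before any cloud API call or status update. It returns true only
// when the object was actually reconciled.
func reconcile(c *infraCluster) bool {
	if isExternallyManaged(c.Annotations) {
		// Leave spec and status untouched; the external system owns both.
		return false
	}
	// Provisioning of VPCs, subnets, load balancers, etc. is elided here.
	c.Ready = true
	return true
}

func main() {
	clusters := []*infraCluster{
		{Name: "managed"},
		{Name: "external", Annotations: map[string]string{managedByAnnotation: "terraform"}},
	}
	for _, c := range clusters {
		reconciled := reconcile(c)
		fmt.Printf("%s: reconciled=%v ready=%v\n", c.Name, reconciled, c.Ready)
	}
}
```

Testing only for the presence of the key mirrors the rule that Cluster API does not check the annotation value, so `terraform`, `crossplane`, or an empty string all mark the object as externally managed.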

### User Stories

#### Story 1 - Alternate control plane provisioning with user managed infrastructure
As a cluster provider I want to use CAPI in my service offering to orchestrate Kubernetes bootstrapping while letting workload cluster operators own their infrastructure lifecycle.

For example, Cluster API Provider AWS only supports a single architecture for delivering network resources for cluster infrastructure. Given the possible variations in network architecture in AWS, most organisations will want to provision VPCs, security groups and load balancers themselves, and then have Cluster API Provider AWS provision machines as normal. Currently CAPA supports "bring your own infrastructure" when users fill in the `AWSCluster` spec, and CAPA then reconciles any missing resources. This has been done in an ad hoc fashion and has proven to be a brittle mechanism with many bugs. The AWSMachine controller only requires a subset of the AWSCluster resource in order to reconcile machines, in particular: subnets, the load balancer (for control plane instances), and security groups. A formal contract for externally managed infrastructure would improve the user experience for those who are getting started with Cluster API and have non-trivial networking requirements.

#### Story 2 - Restricted access to cloud provider APIs
As a cluster operator I want to use CAPI to orchestrate Kubernetes bootstrapping while restricting the cloud provider privileges I need to grant, because of organisational cloud security constraints.

#### Story 3 - Consuming existing cloud infrastructure
As a cluster operator I want to use CAPI to orchestrate Kubernetes bootstrapping while reusing infrastructure that has already been created in the organisation, either by me or by another team.

Following from the example in Story 1, many AWS environments are tightly governed by an organisation's cloud security operations unit, and provisioning of security groups in particular is often prohibited.

### Implementation Details/Notes/Constraints

**Managed**

- An InfraCluster CR without the `cluster.x-k8s.io/managed-by: "<name-of-system>"` annotation. This is the default and preserves existing behaviour.


**Externally Managed**

An InfraCluster CR with the `cluster.x-k8s.io/managed-by: "<name-of-system>"` annotation.

The provider InfraCluster controller must:
- Skip any reconciliation of the resource.

- Not update the resource or its status in any way.

The external management system must:

- Populate all required fields within the InfraCluster spec to allow other CAPI components to continue as normal.

- Adhere to all Cluster API contracts for infrastructure providers.

- When the infrastructure is ready, set the appropriate status, as the provider controller does today.

#### Provider implementation changes

To enable providers to implement the changes required by this contract, Cluster API is going to provide a new `predicates.ResourceExternallyManaged` predicate as part of its utils.

This predicate filters out any resource that has been marked as "externally managed" and prevents the controller from reconciling the resource.

### Security Model

When the cluster infrastructure is externally managed, the cloud provider privileges required by CAPI might be significantly reduced compared with a traditionally managed cluster.
The only privileges CAPI requires are those needed to manage machines.

For example, when an AWS cluster is managed by CAPI, permissions are required to create VPCs and other networking components that are managed by the AWSCluster controller. When externally managed, these permissions are not required, as the external entity is responsible for creating such components.

Support for minimising permissions in Cluster API Provider AWS will be added to its IAM provisioning tool, `clusterawsadm`.

### Risks and Mitigations

#### What happens when a user converts an externally managed InfraCluster to a managed InfraCluster?

There is currently no immutability support for CRD annotations in the Kubernetes API.

This means that, once a user has created an externally managed InfraCluster, they could at some point remove the annotation to make the InfraCluster appear to be managed.

There is no way to predict what would happen in this scenario.
The InfraCluster controller would start attempting to reconcile infrastructure that it did not create, and the assumptions it makes about that infrastructure may prevent it from managing it.

To prevent this, the InfraCluster webhook will need to reject updates that convert externally managed InfraClusters into managed InfraClusters.

Note, however, that converting from managed to externally managed should cause no issues and should be allowed.
The externally managed contract will document that this is a one-way operation.

### Future Work

#### Marking InfraCluster ready manually

The content of this proposal assumes that the external infrastructure is managed by some controller that can set the spec and status of the InfraCluster resource.

In reality, this may not be the case, for example if the infrastructure was created by an admin using Terraform.

In that case, a user can copy the infrastructure details into an InfraCluster resource and create it manually.
However, they will not be able to set the InfraCluster to ready, as this requires updating the resource status, which is difficult without a controller.

To allow users to adopt this external management pattern without writing their own controllers or tooling, we will provide a longer-term solution that allows a user to indicate that the infrastructure is ready and have the status set appropriately.

The exact mechanism is undecided, though the following ideas have been suggested:

- Reuse future kubectl subresource flag capabilities (https://github.com/kubernetes/kubernetes/pull/99556).

- Add a secondary annotation to this contract that causes the provider InfraCluster controller to mark resources as ready.

## Alternatives

### ExternalInfra CRD

We could introduce an ad hoc CRD (https://github.com/kubernetes-sigs/cluster-api/issues/4095).

This would add complexity to the CAPI ecosystem with yet another CRD, and it wouldn't scale well across providers because it would need to contain provider-specific information.

### ManagementPolicy field

As an alternative to the proposed annotation, a `ManagementPolicy` field on the InfraCluster spec could be required as part of this contract.
The field would be an enum that initially has 2 possible values: managed and unmanaged.
That would require a new provider contract and modification of existing infrastructure CRDs, so this option is not preferred.

## Upgrade Strategy

Support is introduced by adding a new annotation to the provider InfraCluster.

This makes any transition towards an externally managed cluster backward compatible and leaves the current managed behaviour untouched.

## Additional Details

## Implementation History

- [x] 11/25/2020: Proposed idea in an issue or [community meeting] https://github.com/kubernetes-sigs/cluster-api-provider-aws/pull/2124
- [x] 02/03/2021: Compile a Google Doc following the CAEP template https://hackmd.io/FqsAdOP6S7SFn5s-akEPkg?both
- [x] 02/03/2021: First round of feedback from community
- [x] 03/10/2021: Present proposal at a [community meeting]
- [x] 02/03/2021: Open proposal PR

<!-- Links -->
[community meeting]: https://docs.google.com/document/d/1Ys-DOR5UsgbMEeciuG0HOgDQc8kZsaWIWJeKJ1-UfbY