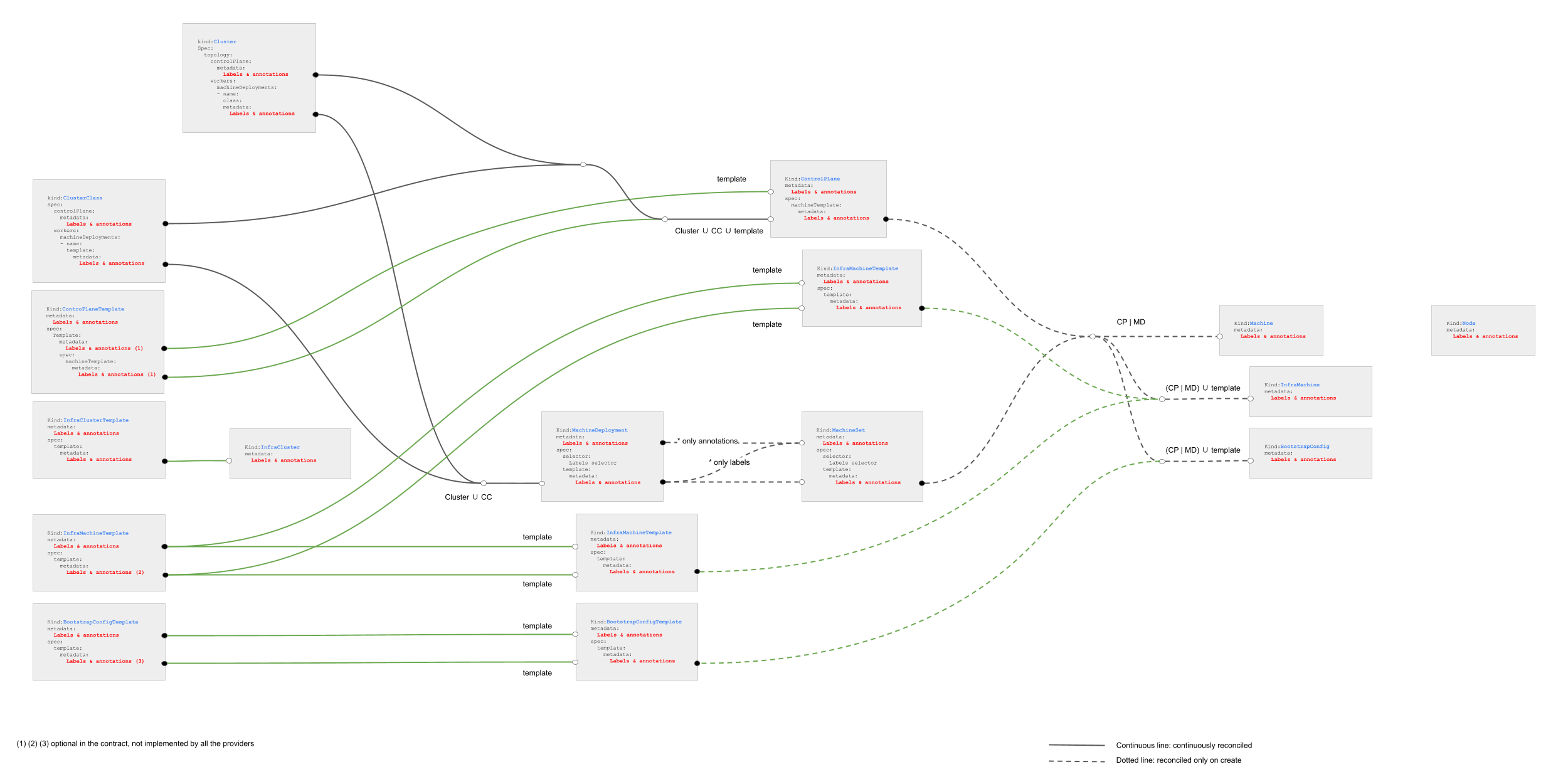

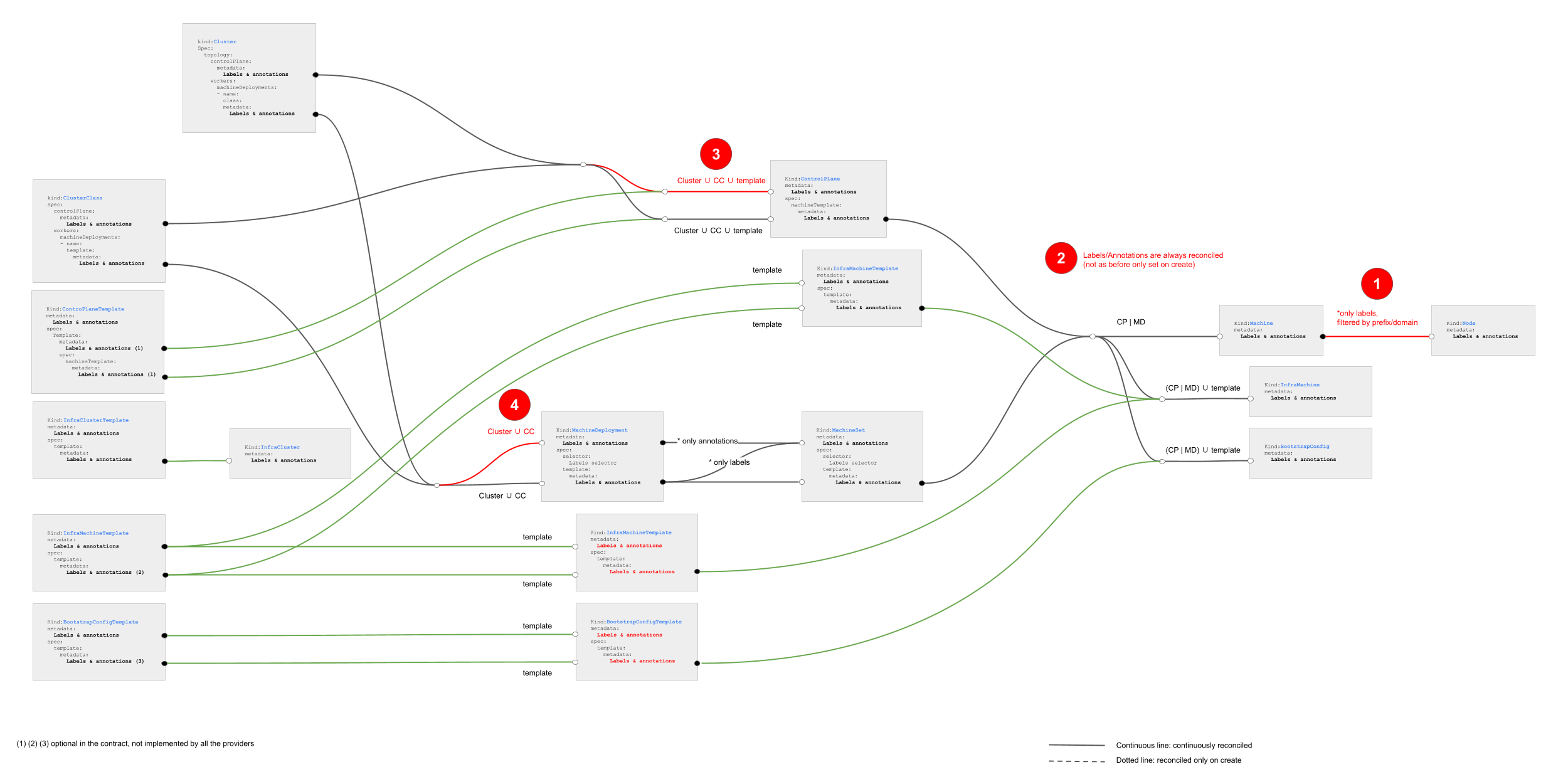

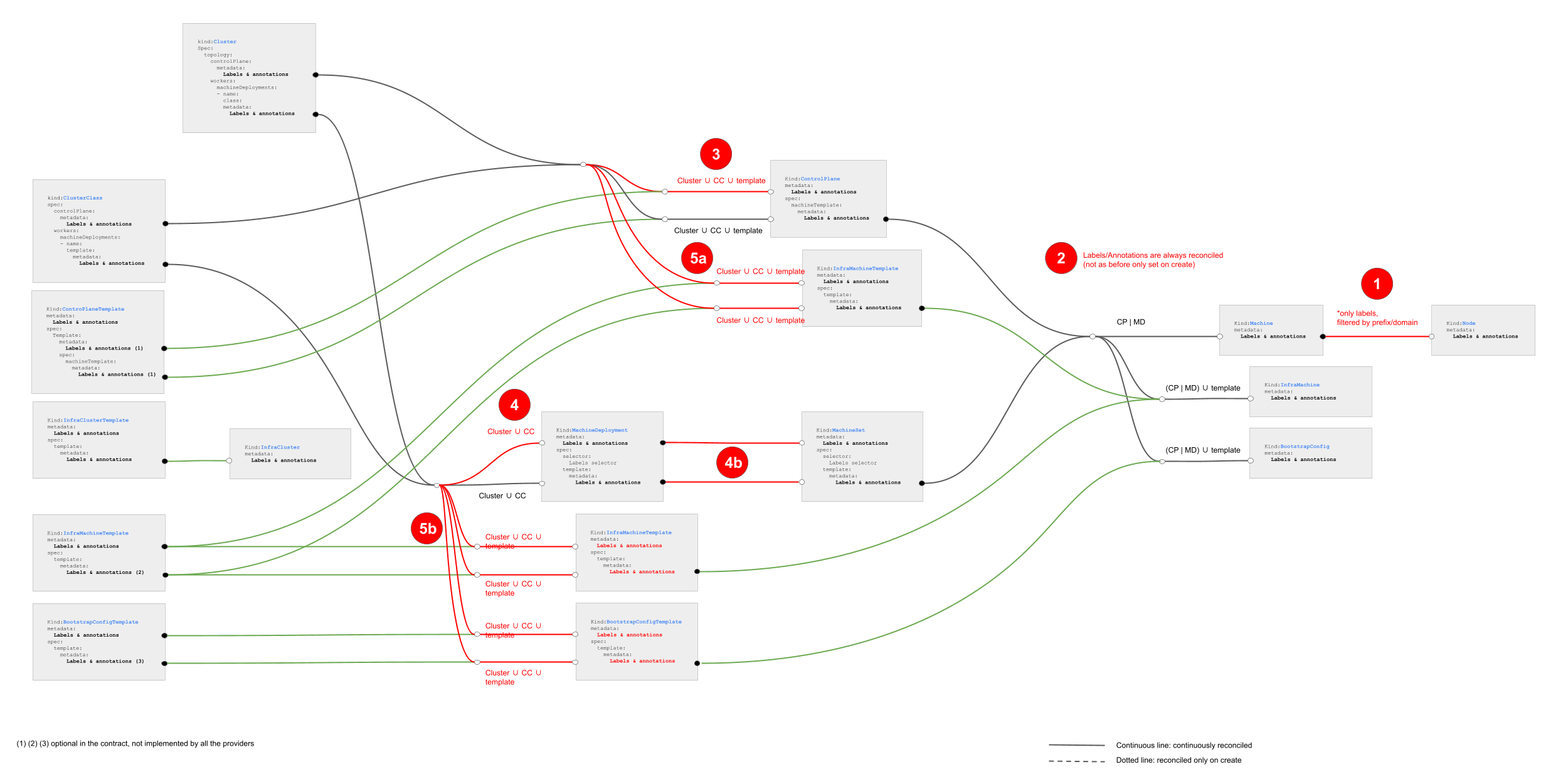

---
title: In place propagation of changes affecting Kubernetes objects only
authors:
  - "@fabriziopandini"
  - "@sbueringer"
reviewers:
  - "@oscar"
  - "@vincepri"
creation-date: 2022-02-10
last-updated: 2022-02-26
status: implementable
replaces:
superseded-by:
---

# In place propagation of changes affecting Kubernetes objects only

## Table of Contents

<!-- START doctoc generated TOC please keep comment here to allow auto update -->
<!-- DON'T EDIT THIS SECTION, INSTEAD RE-RUN doctoc TO UPDATE -->

- [Glossary](#glossary)
- [Summary](#summary)
- [Motivation](#motivation)
  - [Goals](#goals)
  - [Non-Goals](#non-goals)
  - [Future-Goals](#future-goals)
- [Proposal](#proposal)
  - [User Stories](#user-stories)
    - [Story 1](#story-1)
    - [Story 2](#story-2)
    - [Story 3](#story-3)
    - [Story 4](#story-4)
  - [Implementation Details/Notes/Constraints](#implementation-detailsnotesconstraints)
  - [Metadata propagation](#metadata-propagation)
    - [1. Label Sync Between Machine and underlying Kubernetes Nodes](#1-label-sync-between-machine-and-underlying-kubernetes-nodes)
    - [2. Labels/Annotations always reconciled](#2-labelsannotations-always-reconciled)
    - [3. and 4. Set top level labels/annotations for ControlPlane and MachineDeployment created from a ClusterClass](#3-and-4-set-top-level-labelsannotations-for-controlplane-and-machinedeployment-created-from-a-clusterclass)
  - [Propagation of fields impacting only Kubernetes objects or controller behaviour](#propagation-of-fields-impacting-only-kubernetes-objects-or-controller-behaviour)
  - [In-place propagation](#in-place-propagation)
    - [MachineDeployment rollouts](#machinedeployment-rollouts)
      - [What about the hash label](#what-about-the-hash-label)
    - [KCP rollouts](#kcp-rollouts)
    - [Avoiding conflicts with other components](#avoiding-conflicts-with-other-components)
- [Alternatives](#alternatives)
  - [To not use SSA for in-place propagation and be authoritative on labels and annotations](#to-not-use-ssa-for-in-place-propagation-and-be-authoritative-on-labels-and-annotations)
  - [To not use SSA for in-place propagation and do not delete labels/annotations](#to-not-use-ssa-for-in-place-propagation-and-do-not-delete-labelsannotations)
  - [To not use SSA for in-place propagation and use status fields to track labels previously applied by CAPI](#to-not-use-ssa-for-in-place-propagation-and-use-status-fields-to-track-labels-previously-applied-by-capi)
  - [Change more propagation rules](#change-more-propagation-rules)
  - [Change more propagation rules](#change-more-propagation-rules-1)
- [Implementation History](#implementation-history)

<!-- END doctoc generated TOC please keep comment here to allow auto update -->

## Glossary

Refer to the [Cluster API Book Glossary](https://cluster-api.sigs.k8s.io/reference/glossary.html).

**In-place mutable fields**: fields whose changes only impact Kubernetes objects and/or controller behaviour,
without mutating in any way the provider infrastructure or the software running on it.
In-place mutable fields
are propagated in place by CAPI controllers to avoid the more elaborate mechanics of a replace rollout.
They include metadata, MinReadySeconds, NodeDrainTimeout, NodeVolumeDetachTimeout and NodeDeletionTimeout; this list
may be expanded in the future.

## Summary

This document discusses how labels, annotations and other fields impacting only Kubernetes objects or controller behaviour (e.g. NodeDrainTimeout)
propagate from ClusterClass to KubeadmControlPlane/MachineDeployments and ultimately to Machines.

## Motivation

Managing labels on Kubernetes nodes has been a long-standing [issue](https://github.com/kubernetes-sigs/cluster-api/issues/493) in Cluster API.

The following challenges have been identified through various iterations:

- Define how labels propagate from Machine to Node.
- Define how labels and annotations propagate from ClusterClass to KubeadmControlPlane/MachineDeployments and ultimately to Machines.
- Define how to prevent label and annotation propagation from triggering unnecessary rollouts.

The first point is being addressed by [Label Sync Between Machine and underlying Kubernetes Nodes](./20220927-label-sync-between-machine-and-nodes.md),
while this document tackles the remaining two points.

During a preliminary exploration we identified that the two challenges above also apply to other fields impacting only Kubernetes objects or
controller behaviour (see e.g. [Support to propagate properties in-place from MachineDeployments to Machines](https://github.com/kubernetes-sigs/cluster-api/issues/5880)).

As a consequence we have decided to expand this work to consider how to propagate labels, annotations and fields impacting only Kubernetes objects or
controller behaviour, as well as this related issue: [Labels and annotations for MachineDeployments and KubeadmControlPlane created by topology controller](https://github.com/kubernetes-sigs/cluster-api/issues/7006).

### Goals

- Define how labels and annotations propagate from ClusterClass to KubeadmControlPlane/MachineDeployments and ultimately to Machines.
- Define how fields impacting only Kubernetes objects or controller behaviour propagate from ClusterClass to KubeadmControlPlane/
  MachineDeployments, and ultimately to Machines.
- Define how to prevent propagation of labels, annotations and other fields impacting only Kubernetes objects or controller behaviour
  from triggering unnecessary rollouts.

### Non-Goals

- Discuss the immutability core design principle in Cluster API (on the contrary, this proposal makes immutability even better by improving
  the criteria on when we trigger Machine rollouts).
- To support in-place mutation for components or settings that exist on Machines (this proposal focuses only on labels, annotations and other
  fields impacting only Kubernetes objects or controller behaviour).

### Future-Goals

- Expand propagation rules including MachinePools after the [MachinePools Machine proposal](./20220209-machinepool-machines.md) is implemented.

## Proposal

### User Stories

#### Story 1

As a cluster admin/user, I would like a declarative and secure means by which to assign roles to my nodes via Cluster topology metadata
(for Clusters with ClusterClass).

As a cluster admin/user, I would like a declarative and secure means by which to assign roles to my nodes via KubeadmControlPlane and
MachineDeployments (for Clusters without ClusterClass).

#### Story 2

As a cluster admin/user, I would like to change labels or annotations on Machines without triggering Machine rollouts.

#### Story 3

As a cluster admin/user, I would like to change nodeDrainTimeout on Machines without triggering Machine rollouts.

#### Story 4

As a cluster admin/user, I would like to set autoscaler labels for MachineDeployments by changing Cluster topology metadata
(for Clusters with ClusterClass).

### Implementation Details/Notes/Constraints

### Metadata propagation

The following schema represents how metadata propagation works today (also documented in the [book](https://cluster-api.sigs.k8s.io/developer/architecture/controllers/metadata-propagation.html)).

With this proposal we are suggesting to improve metadata propagation as described in the following schema:

The following paragraphs provide more details about the proposed changes.

#### 1. Label Sync Between Machine and underlying Kubernetes Nodes

As discussed in [Label Sync Between Machine and underlying Kubernetes Nodes](./20220927-label-sync-between-machine-and-nodes.md) we are propagating only
labels with a well-known prefix or a well-known domain from the Machine to the corresponding Kubernetes Node.

#### 2. Labels/Annotations always reconciled

All the labels/annotations previously set only on creation are now going to be always reconciled;
in order to prevent unnecessary rollouts, metadata propagation should happen in-place;
see [in-place propagation](#in-place-propagation) later in this document for more details.

Note: As of today the topology controller already propagates ClusterClass and Cluster topology metadata changes in-place when possible
in order to avoid unnecessary template rotation with the consequent Machine rollout; we do not foresee changes to this logic.

#### 3. and 4. Set top level labels/annotations for ControlPlane and MachineDeployment created from a ClusterClass

Labels and annotations from ClusterClass and Cluster.topology are going to be propagated to top-level labels and annotations in
ControlPlane and MachineDeployment.

This addresses [Labels and annotations for MachineDeployments and KubeadmControlPlane created by topology controller](https://github.com/kubernetes-sigs/cluster-api/issues/7006).

Note: The proposed solution avoids adding additional metadata fields in ClusterClass and Cluster.topology, but
this has the disadvantage that it is not possible to differentiate top-level labels/annotations from those of Machines;
given the discussion on the above issue, however, this isn't a requirement.

### Propagation of fields impacting only Kubernetes objects or controller behaviour

In addition to labels and annotations, there are also other fields that flow down from ClusterClass to KubeadmControlPlane/MachineDeployments and
ultimately to Machines.

Some of them can be treated like labels and annotations, because they have impacts only on Kubernetes objects or controller behaviour, but
not on the actual Machine itself, including infrastructure and the software running on it (in-place mutable fields).
Examples are `MinReadySeconds`, `NodeDrainTimeout`, `NodeVolumeDetachTimeout`, `NodeDeletionTimeout`.

Propagation of changes to those fields will be implemented using the same [in-place propagation](#in-place-propagation) mechanism implemented
for metadata.

### In-place propagation

With in-place propagation we are referring to a mechanism that updates existing Kubernetes objects, like MachineSets or Machines, instead of
creating a new object with the updated fields and then deleting the current Kubernetes object.

The main benefit of this approach is that it prevents unnecessary rollouts of the corresponding infrastructure, with the consequent
creation/deletion of a Kubernetes node and drain/scheduling of workloads hosted on the Machine being deleted.

**Important!** In-place propagation of changes as defined above applies only to metadata changes or to fields impacting only Kubernetes objects
or controller behaviour. This approach cannot be used to apply changes to the infrastructure hosting a Machine, to the OS or any software
installed on it, Kubernetes components included (Kubelet, static pods, CRI etc.).

Implementing in-place propagation has two distinct challenges:

- Current rules defining when MachineDeployments or KubeadmControlPlane trigger a rollout should be modified in order to ignore metadata and
  other fields that are going to be propagated in-place.

- When implementing the reconcile loop that performs in-place propagation, it is required to avoid impact on other components applying
  labels or annotations to the same object. For example, when reconciling labels to a Machine, Cluster API should take care of reconciling
  only the labels it manages, without changing any label applied by the users/by another controller on the same Machine.

#### MachineDeployment rollouts

The MachineDeployment controller determines when a rollout is required using a "semantic equality" comparison between current MachineDeployment
spec and the corresponding MachineSet spec.

While implementing this proposal we should change the definition of "semantic equality" in order to exclude metadata and fields that
should be updated in-place.

On top of that we should also account for the use case where, after deploying the new "semantic equality" rule, there are already one or more
MachineSet(s) matching the MachineDeployment.
Today, in this case, Cluster API deterministically picks the oldest of them.

When exploring the solution for this proposal we discovered that the above approach can cause turbulence in the Cluster because it does not
take into account to which MachineSets existing Machines belong. As a consequence a Cluster API upgrade could lead to a rollout with Machines moving from
a "semantically equal" MachineSet to another, which is an unnecessary operation.

In order to prevent this we are modifying the MachineDeployment controller in order to pick the "semantically equal" MachineSet with the most
Machines, thus avoiding or minimizing turbulence in the Cluster.

##### What about the hash label

The MachineDeployment controller relies on a label with a hash value to identify Machines belonging to a MachineSet; also, the hash value
is used as suffix for the MachineSet name.

Currently the hash is computed using an algorithm that considers the same set of fields used to determine "semantic equality" between current
MachineDeployment spec and the corresponding MachineSet spec.

When exploring the solution for this proposal, we decided that the above algorithm can be simplified by using a simple random string
plus a check that ensures that the random string is not already taken by an existing MachineSet (for this MachineDeployment).

The main benefit of this change is that we are going to decouple "semantic equality" from computing a UID to be used for identifying Machines
belonging to a MachineSet, thus making the code easier to understand and simplifying future changes on rollout rules.

#### KCP rollouts

The KCP controller determines when a rollout is required using a "semantic equality" comparison between current KCP
object and the corresponding Machine object.
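
As a rough illustration of the rollout rules discussed in this section, the sketch below models a "semantic equality" check that ignores in-place mutable fields, plus the selection of the matching MachineSet with the most Machines. It is written in Python for brevity (the actual controllers are written in Go) and is not the Cluster API implementation: the dictionary layout, the `IN_PLACE_MUTABLE_FIELDS` list, the `version` field used as an example of a rollout-relevant field, and all function names are assumptions made for this example.

```python
import copy

# Hypothetical (section, field) pairs treated as in-place mutable in this sketch.
IN_PLACE_MUTABLE_FIELDS = [
    ("metadata", "labels"),
    ("metadata", "annotations"),
    ("spec", "nodeDrainTimeout"),
    ("spec", "nodeVolumeDetachTimeout"),
    ("spec", "nodeDeletionTimeout"),
]


def strip_in_place_mutable(template):
    """Return a copy of the template without in-place mutable fields."""
    stripped = copy.deepcopy(template)
    for section, field in IN_PLACE_MUTABLE_FIELDS:
        stripped.get(section, {}).pop(field, None)
    # Drop sections left empty so {"metadata": {}} equals a missing "metadata".
    return {key: value for key, value in stripped.items() if value != {}}


def needs_rollout(desired, current):
    """True only if the templates differ outside the in-place mutable fields."""
    return strip_in_place_mutable(desired) != strip_in_place_mutable(current)


def pick_machine_set(desired, machine_sets):
    """Pick the semantically equal MachineSet that owns the most Machines.

    Each entry is a dict with "name", "template" and "machines" (a count).
    Returns None when no MachineSet matches; tie-breaking is left unspecified.
    """
    candidates = [
        ms for ms in machine_sets if not needs_rollout(desired, ms["template"])
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda ms: ms["machines"])
```

With this rule, a change that only touches labels or `nodeDrainTimeout` compares as equal and is left to in-place propagation, while a change to any other field still triggers a rollout.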

The "semantic equality" implementation is pretty complex, but for the sake of this proposal only a few details are relevant:

- Rollout is triggered if a Machine doesn't have all the labels and the annotations in spec.machineTemplate.Metadata.
- Rollout is triggered if the KubeadmConfig linked to a Machine doesn't have all the labels and the annotations in spec.machineTemplate.Metadata.

While implementing this proposal, the above rules should be dropped and replaced by in-place update of labels & annotations.
Please also note that the current rules do not detect when a label/annotation is removed from spec.machineTemplate.Metadata
and thus users are required to remove labels/annotations manually; this is considered a bug and the new implementation
should account for this use case.

Also, according to the current "semantic equality" rules, changes to nodeDrainTimeout, nodeVolumeDetachTimeout, nodeDeletionTimeout are
applied only to new machines (they don't trigger rollout). While implementing this proposal, we should make sure that
those changes are propagated to existing machines, without triggering rollout.

#### Avoiding conflicts with other components

While doing [in-place propagation](#in-place-propagation), and thus continuously reconciling info from a Kubernetes
object to another, we are also reconciling values in a map, e.g. Labels or Annotations.

This creates some challenges. Assume that:

We want to reconcile the following labels from MachineDeployment to Machine:

```yaml
labels:
  a: a
  b: b
```

After the first reconciliation, the Machine gets the above labels.
Now assume that we remove label `b` from the MachineDeployment; the expected set of labels is:

```yaml
labels:
  a: a
```

But the machine still has the label `b`, and the controller cannot remove it, because at this stage there is no
clear signal allowing it to detect whether this label has been applied by Cluster API, by the user or by another controller.

In order to properly manage this use case (co-authored maps), the solution available in the API server is
to use [Server Side Apply patches](https://kubernetes.io/docs/reference/using-api/server-side-apply/).

Based on previous experience in introducing SSA in the topology controller, this change requires a lot of testing
and validation. Some cases that should be specifically verified include:

- introducing SSA patches on an already existing object (and ensure that SSA takes over ownership of managed labels/annotations properly)
- using SSA patches on objects after move or velero backup/restore (and ensure that SSA takes over ownership of managed labels/annotations properly)

However, despite those use cases needing to be verified during implementation, it is assumed that using API server
built-in capabilities is a stronger, long term solution than any other alternative.

## Alternatives

### To not use SSA for [in-place propagation](#in-place-propagation) and be authoritative on labels and annotations

If Cluster API uses regular patches instead of SSA patches (a well-tested path in Cluster API), Cluster API can
be implemented in order to be authoritative on labels and annotations; this means that all the labels and annotations should
be propagated from higher level objects (e.g. all the Machine's labels should be set on the MachineSet, and so
on up the propagation chain).

This is not considered acceptable, because users and other controllers must be able to apply their own
labels to any Kubernetes object, including the ones managed by Cluster API.

### To not use SSA for [in-place propagation](#in-place-propagation) and do not delete labels/annotations

If Cluster API uses regular patches instead of SSA patches, but without being authoritative, Cluster API can
be implemented in order to add new labels from higher level objects (e.g. a new label added to MachineSet is added to
the corresponding Machine) and to enforce label values from higher level objects.

But, as explained in [avoiding conflicts with other components](#avoiding-conflicts-with-other-components), using
this approach there is no way to determine whether a label/annotation has been applied by Cluster API, by the user or by another controller,
and thus automatic label/annotation deletion cannot be implemented.

This approach is not considered ideal, because it transfers the ownership of label and annotation deletion
to users or other controllers, and this is not considered a nice user experience.

### To not use SSA for [in-place propagation](#in-place-propagation) and use status fields to track labels previously applied by CAPI

If Cluster API uses regular patches instead of SSA patches, without being authoritative, it is possible to implement
a DIY solution for tracking label ownership based on status fields or annotations.

This approach is not considered ideal, because e.g. status fields do not survive move/backup and restore, and, taking
a step back, this is sort of re-implementing SSA or a subset of it.
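
To make the trade-off between these alternatives more concrete, the following toy model (Python, purely illustrative) replays the `a`/`b` label example from [avoiding conflicts with other components](#avoiding-conflicts-with-other-components). `merge_without_delete` mimics a non-authoritative regular patch, while `apply_owned_labels` tracks which keys the controller set previously, which is conceptually what Server Side Apply records per field manager in `metadata.managedFields`. Both function names and the in-memory `owned` set are assumptions of this sketch, not Cluster API or API server code.

```python
def merge_without_delete(live, desired):
    """Non-authoritative regular patch: add/overwrite labels, never delete."""
    result = dict(live)
    result.update(desired)
    return result


def apply_owned_labels(live, owned_before, desired):
    """Apply desired labels, deleting only keys this manager owned before.

    Returns the new live labels and the new set of owned keys.
    """
    result = dict(live)
    # Keys we set in the past but no longer want: safe to remove.
    for key in owned_before - set(desired):
        result.pop(key, None)
    result.update(desired)
    return result, set(desired)


# The Machine starts with a label applied by a user or another controller.
machine_labels = {"user": "x"}

# Without ownership tracking, label "b" can never be removed automatically.
merged = merge_without_delete(machine_labels, {"a": "a", "b": "b"})
merged = merge_without_delete(merged, {"a": "a"})

# With ownership tracking, "b" is removed and the user's label is preserved.
tracked, owned = apply_owned_labels(machine_labels, set(), {"a": "a", "b": "b"})
tracked, owned = apply_owned_labels(tracked, owned, {"a": "a"})
```

In this model the `owned` set must be stored somewhere durable; keeping it in status fields or annotations is exactly the DIY tracking discussed above, whereas SSA keeps the equivalent information on the object itself.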

### Change more propagation rules

While working on the set of changes proposed above, a set of optional changes to the existing propagation rules has been
identified; however, considering that the most complex part of this proposal is implementing [in-place propagation](#in-place-propagation),
it was decided to implement only the few, most critical changes to propagation rules.

Nevertheless we are documenting optional changes dropped from the scope of this iteration for future reference.

Optional changes:

- 4b: Simplify MachineDeployment to MachineSet label propagation
  Leveraging the change introduced in 4, it is possible to simplify MachineDeployment to MachineSet label propagation,
  which currently mimics Deployment to ReplicaSet label propagation. The downside of this change is that it wouldn't be
  possible anymore to have different labels/annotations on MachineDeployment & MachineSet.

- 5a and 5b: Propagate ClusterClass and Cluster.topology to templates
  These changes make ClusterClass and Cluster.topology labels/annotations propagate to templates as well.
  Please note that this change requires further discussions, because
  - Contract with providers should be extended to add optional metadata fields where necessary
  - It should be defined how to detect if a template for a specific provider has the optional metadata fields,
    and this is tricky because Cluster API doesn't have detailed knowledge of provider's types.
  - InfrastructureMachineTemplates are immutable in a lot of providers, so we have to discuss how/if we should
    be able to mutate the InfrastructureMachineTemplates.spec.template.metadata.

### Change more propagation rules


## Implementation History

- [ ] 10/03/2022: First Draft of this document